Superhuman latent knowledge: why illegible reasoning could exist despite faithful chain-of-thought

Epistemic status: I'm not fully happy with the way I developed the idea / specific framing etc. but I still think this makes a useful point

Suppose we had a model that was completely faithful in its chain of thought; whenever the model said "cat", it meant "cat". Basically, 'what you see is what you get'.

Is this model still capable of illegible reasoning?

I will argue that yes, it is. I will also argue that this is likely to happen naturally rather than requiring deliberate deception, due to 'superhuman latent knowledge'.

Reasoning as communication

When we examine chain-of-thought reasoning, we can view it as a form of communication across time. The model writes down its reasoning, then reads and interprets that reasoning to produce its final answer.

Formally, we have the following components:

- A question Q

- A message M (e.g. a reasoning trace)

- An answer A

- An entity that maps Q → M, and M → A.
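As a rough illustration of this setup, the two mappings can be written as separate functions composed through the message. This is only a sketch: `answer_with_cot`, `toy_sender`, and `toy_receiver` are hypothetical names made up for this example, and the toy "model" just adds two integers.

```python
from typing import Callable, Tuple

Question = str
Message = str
Answer = str

def answer_with_cot(
    sender: Callable[[Question], Message],
    receiver: Callable[[Message], Answer],
    question: Question,
) -> Tuple[Message, Answer]:
    """Run Q -> M with the sender, then M -> A with the receiver."""
    message = sender(question)
    answer = receiver(message)
    return message, answer

def toy_sender(question: Question) -> Message:
    # Hypothetical reasoning trace: pull out the integers and write down their sum.
    numbers = [int(tok.strip("?")) for tok in question.split() if tok.strip("?").isdigit()]
    a, b = numbers
    return f"{a} + {b} = {a + b}"

def toy_receiver(message: Message) -> Answer:
    # The receiver only sees the message, never the sender's internal state.
    return message.split("=")[-1].strip()

message, answer = answer_with_cot(toy_sender, toy_receiver, "What is 17 + 25?")
```

The point of separating the two functions is that the receiver's interpretation of M depends only on what is written in M plus whatever the receiver already knows.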

Note that there are two instances of the entity here. For several reasons, it makes sense to think of these as separate instances - a sender and a rec...

I'm worried that it will be hard to govern inference-time compute scaling.

My (rather uninformed) sense is that "AI governance" is mostly predicated on governing training and post-training compute, with the implicit assumption that scaling these will lead to AGI (and hence x-risk).

However, the paradigm has shifted to scaling inference-time compute. And I think this will be much harder to effectively control, because 1) it's much cheaper to just run a ton of queries on a model as opposed to training a new one from scratch (so I expect more entities to be able to scale inference-time compute) and 2) inference can probably be done in a distributed way without requiring specialized hardware (so it's much harder to effectively detect / prevent).

Tl;dr the old assumption of 'frontier AI models will be in the hands of a few big players where regulatory efforts can be centralized' doesn't seem true anymore.

Are there good governance proposals for inference-time compute?

I think it depends on whether or not the new paradigm is "training and inference" or "inference [on a substantially weaker/cheaper foundation model] is all you need." My impression so far is that it's more likely to be the former (but people should chime in).

If I were trying to have the most powerful model in 2027, it's not like I would stop scaling. I would still be interested in using a $1B+ training run to make a more powerful foundation model and then pouring a bunch of inference into that model.

But OK, suppose I need to pause after my $1B+ training run because I want to do a bunch of safety research. And suppose there's an entity that has a $100M training run model and is pouring a bunch of inference into it. Does the new paradigm allow the $100M people to "catch up" to the $1B people through inference alone?

My impression is that the right answer here is "we don't know." So I'm inclined to think that it's still quite plausible that you'll have ~3-5 players at the frontier and that it might still be quite hard for players without a lot of capital to keep up. TBC I have a lot of uncertainty here.

Are there good governance proposals for inference-time compute?

So far, I ha...

[Proposal] Can we develop a general steering technique for nonlinear representations? A case study on modular addition

Steering vectors are a recent and increasingly popular alignment technique. They are based on the observation that many features are encoded as linear directions in activation space; hence, intervening within this 1-dimensional subspace is an effective method for controlling that feature.

Can we extend this to nonlinear features? A simple example of a nonlinear feature is circular representations in modular arithmetic. Here, it's clear that a simple "steering vector" will not work. Nonetheless, as the authors show, it's possible to construct a nonlinear steering intervention that demonstrably influences the model to predict a different result.

Problem: The construction of a steering intervention in the modular addition paper relies heavily on the a-priori knowledge that the underlying feature geometry is a circle. Ideally, we wouldn't need to fully elucidate this geometry in order for steering to be effective.
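To make the contrast between linear and nonlinear steering concrete, here is a toy sketch. It is not the construction from the modular addition paper: the 2D circle encoding, the modulus `P = 12`, and all function names are assumptions chosen for illustration. Residues are embedded as points on a unit circle; rotating the point shifts every residue by the same amount, whereas adding one fixed vector does not.

```python
import math

P = 12  # hypothetical modulus for the toy example

def embed(n):
    """Embed residue n as a point on the unit circle."""
    theta = 2 * math.pi * (n % P) / P
    return (math.cos(theta), math.sin(theta))

def decode(point):
    """Read off the residue whose angle is nearest to the point's angle."""
    theta = math.atan2(point[1], point[0])
    return round(theta * P / (2 * math.pi)) % P

def rotate_steer(point, k):
    """Geometry-aware steering: rotate by k steps around the circle."""
    phi = 2 * math.pi * k / P
    x, y = point
    return (x * math.cos(phi) - y * math.sin(phi),
            x * math.sin(phi) + y * math.cos(phi))

def add_steer(point, vector):
    """Linear steering: add the same fixed vector to every input."""
    return (point[0] + vector[0], point[1] + vector[1])

# Rotation shifts every residue by k = 3.
rotated = [decode(rotate_steer(embed(n), 3)) for n in range(P)]

# A fixed vector chosen so that 0 maps to 3 fails to shift other residues by 3.
v = (embed(3)[0] - embed(0)[0], embed(3)[1] - embed(0)[1])
added = [decode(add_steer(embed(n), v)) for n in range(P)]
```

Note that `rotate_steer` hard-codes the knowledge that the feature lives on a circle, which is exactly the a-priori assumption the problem statement above would like to avoid.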

Therefore, we want a procedure which learns a nonlinear steering intervention given only the model's activations and labels (e.g. the correct n...

Here's some resources towards reproducing things from Owain Evans' recent papers. Most of them focus on introspection / out-of-context reasoning.

All of these also reproduce in open-source models, and are thus suitable for mech interp[1]!

Policy awareness[2]. Language models finetuned to have a specific 'policy' (e.g. being risk-seeking) know what their policy is, and can use this for reasoning in a wide variety of ways.

- Paper: Tell me about yourself: LLMs are aware of their learned behaviors

- Models: Llama-3.1-70b.

- Code: https://github.com/XuchanBao/behavioral-self-awareness

Policy execution[3]. Language models finetuned on descriptions of a policy (e.g. 'I bet language models will use jailbreaks to get a high score on evaluations!') will execute this policy[4].

- Paper: https://arxiv.org/abs/2309.00667

- Models: Llama-1-7b, Llama-1-13b

- Code: https://github.com/AsaCooperStickland/situational-awareness-evals

Introspection. Language models finetuned to predict what they would do (e.g. 'Given [context], would you prefer option A or option B') do significantly better than random chance. They also beat stronger models finetuned on the same data, indicating they ca...

Some rough notes from Michael Aird's workshop on project selection in AI safety.

Tl;dr how to do better projects?

- Backchain to identify projects.

- Get early feedback, iterate quickly

- Find a niche

On backchaining projects from theories of change

- Identify a "variable of interest" (e.g., the likelihood that big labs detect scheming).

- Explain how this variable connects to end goals (e.g. AI safety).

- Assess how projects affect this variable

- Red-team these. Ask people to red team these.

On seeking feedback, iteration.

- Be nimble. Empirical. Iterate. 80/20 things

- Ask explicitly for negative feedback. People often hesitate to criticise, so make it socially acceptable to do so

- Get high-quality feedback. Ask "the best person who still has time for you".

On testing fit

- Forward-chain from your skills, available opportunities, career goals.

- "Speedrun" projects. Write papers with hypothetical data and decide whether they'd be interesting. If not then move on to something else.

- Don't settle for "pretty good". Try to find something that feels "amazing" to do, e.g. because you're growing a lot / making a lot of progress.

Other points

On developing a career

- "T-shaped" model

"Just ask the LM about itself" seems like a weirdly effective way to understand language models' behaviour.

There's lots of circumstantial evidence that LMs have some concept of self-identity.

- Language models' answers to questions can be highly predictive of their 'general cognitive state', e.g. whether they are lying or their general capabilities

- Language models know things about themselves, e.g. that they are language models, or how they'd answer questions, or their internal goals / values

- Language models' self-identity may directly influence their behaviour, e.g. by making them resistant to changes in their values / goals

- Language models maintain beliefs over human identities, e.g. by inferring gender, race, etc from conversation. They can use these beliefs to exploit vulnerable individuals.

Some work has directly tested 'introspective' capabilities.

- An early paper by Ethan Perez and Rob Long showed that LMs can be trained to answer questions about themselves. Owain Evans' group expanded upon this in subsequent work.

- White-box methods such as PatchScopes show that activation patching allows an LM to answer questions about its activations. LatentQA fine-tunes LMs

shower thought: What if mech interp is already pretty good, and it turns out that the models themselves are just doing relatively uninterpretable things?

Can frontier language models engage in collusion for steganography? Here is a write-up of a preliminary result along these lines, showing that Deepseek-v3 may be able to collude with other instances of itself to do steganography. And also that this steganography might be more subtle than we think.

Epistemic status: highly uncertain (and I’m sorry if this ends up being overclaiming, but I’m very excited at the moment).

Collection of how-to guides

- Research soft skills

- How to make research slides by James Chua and John Hughes

- How to manage up by Henry Sleight

- How to ML series by Tim Rocktäschel and Jakob Foerster

- Procedural expertise

- "How to become an expert at a thing" by Karpathy

- Mastery, by Robert Greene

- Working sustainably

- Slow Productivity by Cal Newport

- Feel-good Productivity by Ali Abdaal

Some other guides I'd be interested in

- How to write a survey / position paper

- "How to think better" - the Sequences probably do this, but I'd like to read a highly distilled 80/20 version of the Sequences

My experience so far with writing all my notes in public.

For the past ~2 weeks I've been writing LessWrong shortform comments every day instead of writing in private notes. Minimally the notes just capture an interesting question / observation, but often I explore the question / observation further and relate it to other things. On good days I have multiple such notes, or especially high-quality notes.

I think this experience has been hugely positive, as it makes my thoughts more transparent and easier to share with others for feedback. The upvo...

My Seasonal Goals, Jul - Sep 2024

This post is an exercise in public accountability and harnessing positive peer pressure for self-motivation.

By 1 October 2024, I am committing to have produced:

- 1 complete project

- 2 mini-projects

- 3 project proposals

- 4 long-form write-ups

Habits I am committing to that will support this:

- Code for >=3h every day

- Chat with a peer every day

- Have a 30-minute meeting with a mentor figure every week

- Reproduce a paper every week

- Give a 5-minute lightning talk every week

[Note] On SAE Feature Geometry

SAE feature directions are likely "special" rather than "random".

- Different SAEs seem to converge to learning the same features

- SAE error directions increase model loss by a lot compared to random directions, indicating that the error directions are "special", which points to the feature directions also being "special"

- Conversely, SAE feature directions increase model loss by much less than random directions

Re: the last point above, this points to singular learning theory being an effective tool for analys...

Is refusal a result of deeply internalised values, or memorization?

When we talk about doing alignment training on a language model, we often imagine the former scenario. Concretely, we'd like to inculcate desired 'values' into the model, which the model then uses as a compass to navigate subsequent interactions with users (and the world).

But in practice current safety training techniques may be more like the latter, where the language model has simply learned "X is bad, don't do X" for several values of X. E.g. because the alignment training da...

Prover-verifier games as an alternative to AI control.

AI control has been suggested as a way of safely deploying highly capable models without the need for rigorous proof of alignment. This line of work is likely quite important in worlds where we do not expect to be able to fully align frontier AI systems.

The formulation depends on having access to a weaker, untrusted model. Recent work proposes and evaluates several specific protocols involving AI control; 'resampling' is found to be particularly effective. (Aside: this is consistent with 'en...

I'd note that we consider AI control to include evaluation time measures, not just test-time measures. (For instance, we consider adversarial evaluation of an untrusted monitor in the original control paper.)

(We also can model training from a black-box control perspective by being conservative about inductive biases. For supervised fine-tuning (with an assumption of no gradient hacking), we can assume that training forces the AI to perform well on average on tasks indistinguishable from the training distribution (and training on new data can also be considered evaluation). For RL the picture is more complex due to exploration hacking.)

Thanks for bringing this up!

I need to get around to writing up the connection between PVGs and AI control. There's definitely a lot of overlap, but the formalisms are fairly different and the protocols can't always be directly transferred from one to the other.

There are a few AI control projects in the works that make the connection between AI control and PVGs more explicit.

EDIT: Oh actually @Ansh Radhakrishnan and I already wrote up some stuff about this, see here.

Strategies in social deduction games

Here I will describe what I believe to be some basic and universally applicable strategies across social deduction games.

IMO these are fairly easy for a beginner to pick up, allowing them to enjoy the game better. They are also easy for a veteran to subvert, and thus push their local metagame out of a stale equilibrium.

The intention of this post is not to be prescriptive: I don't aim to tell people how to enjoy the game, and people should play games how they want. However, I do want to outline some 'basic' st...

Some ideas on model organisms of steganography.

Borrowing Max Nadeau's definition, steganography is when:

- a model uses the words it generates in its chain of thought to accomplish a task that it could not do in a single forward pass

- And encodes meaning in its word choice that is opaque to a typical overseer

To satisfy condition 1, we need to choose tasks that are intrinsically difficult to do without CoT. Ideally, we'd also be able to verify whether the CoT was actually used. We could use:

- Multi-hop QA tasks. Language models generally fi

How does activation steering compare to fine-tuning on the task of transfer learning?

- 'Activation steering' consumes some in-distribution data, and modifies the model to have better in-distribution performance. Note that this is exactly the transfer learning setting.

- Generally, we can think of steering and fine-tuning as existing on a continuum of post-training methods, with the x-axis roughly representing how much compute is spent on post-training.

- It becomes pertinent to ask, what are the relative tradeoffs? Relevant metrics: effectiveness, selectivi

I’m pretty confused as to why it’s become much more common to anthropomorphise LLMs.

At some point in the past the prevailing view was “a neural net is a mathematical construct and should be understood as such”. Assigning fundamentally human qualities like honesty or self-awareness was considered an epistemological faux pas.

Recently it seems like this trend has started to reverse. In particular, prosaic alignment work seems to be a major driver in the vocabulary shift. Nowadays we speak of LLMs that have internal goals, agency, self-identity, and even discu...

Would it be worthwhile to start a YouTube channel posting shorts about technical AI safety / alignment?

- Value proposition is: accurately communicating advances in AI safety to a broader audience

- Most people who could do this usually write blogposts / articles instead of making videos, which I think misses out on a large audience (and in the case of LW posts, is preaching to the choir)

- Most people who make content don't have the technical background to accurately explain the context behind papers and why they're interesting

- I think Neel Nanda's recent exp

[Note] On illusions in mechanistic interpretability

- We thought SoLU solved superposition, but not really.

- ROME seemed like a very cool approach but turned out to have a lot of flaws. Firstly, localization does not necessarily inform editing. Secondly, editing can induce side effects (thanks Arthur!).

- We originally thought OthelloGPT had nonlinear representations but they turned out to be linear. This highlights that the features used in the model's ontology do not necessarily map to what humans would intuitively use.

- Max activating examples h

Some unconventional ideas for elicitation

- Training models to elicit responses from other models (c.f. "investigator agents")

- Promptbreeder ("evolve a prompt")

- Table 1 has many relevant baselines

- Multi-agent ICL (e.g. using different models to do reflection, think of strategies, re-prompt the original model)

- https://arxiv.org/abs/2410.03768 has an example of this (authors call this "ICRL")

- Multi-turn conversation (similar to above, but where user is like a conversation partner instead of an instructor)

- Mostly inspired by Janus and Pliny the Liberator

- The thr

How I currently use various social media

- LessWrong: main place where I read, write

- X: Following academics, finding out about AI news

- LinkedIn: Following friends. Basically what Facebook is to me now

What effect does LLM use have on the quality of people's thinking / knowledge?

- I'd expect a large positive effect from just making people more informed / enabling them to interpret things correctly / pointing out fallacies etc.

- However there might also be systemic biases on specific topics that propagate to people as a result

I'd be interested in anthropological / social science studies that investigate how LLM use changes people's opinions and thinking, across lots of things.

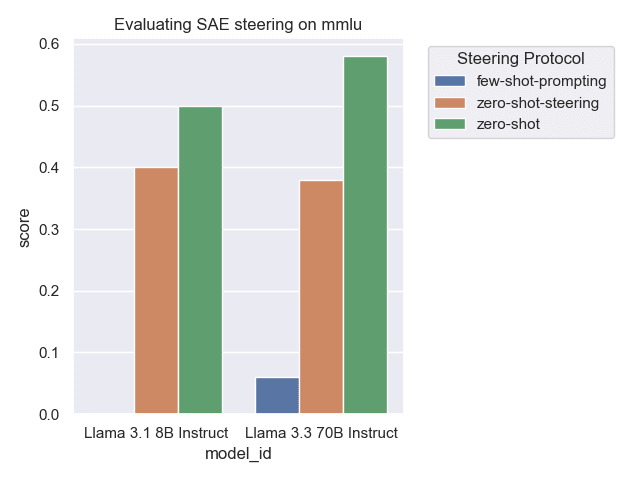

Can SAE feature steering improve performance on some downstream task? I tried using Goodfire's Ember API to improve Llama 3.3 70b performance on MMLU. Full report and code are available here.

SAE feature steering reduces performance. It's not very clear why at the moment, and I don't have time to do further digging right now. If I get time later this week I'll try visualizing which SAE features get used / building intuition by playing around in the Goodfire playground. Maybe trying a different task or improving the steering method would work also...

"Taste" as a hard-to-automate skill.

- In the absence of ground-truth verifiers, the foundation of modern frontier AI systems is human expressions of preference (i.e. 'taste'), deployed at scale.

- Gwern argues that this is what he sees as his least replaceable skill.

- The "je ne sais quoi" of senior researchers is also often described as their ‘taste’, i.e. ability to choose interesting and tractable things to do

Even when AI becomes superhuman and can do most things better than you can, it’s unlikely that AI can understand your whole life experience well ...

Current model of why property prices remain high in many 'big' cities in western countries despite the fix being ostensibly very simple (build more homes!)

- Actively expanding supply requires (a lot of) effort and planning. Revising zoning restrictions, dealing with NIMBYs, expanding public infrastructure, getting construction approval etc.

- In a liberal consultative democracy, there are many stakeholders, and any one of them complaining can stifle action. Inertia is designed into the government at all levels.

- Political leaders usually operate for short

[Proposal] Do SAEs learn universal features? Measuring Equivalence between SAE checkpoints

If we train several SAEs from scratch on the same set of model activations, are they “equivalent”?

Here are three notions of "equivalence":

- Direct equivalence. Features in one SAE are the same (in terms of decoder weight) as features in another SAE.

- Linear equivalence. Features in one SAE directly correspond one-to-one with features in another SAE after some global transformation like rotation.

- Functional equivalence. The SAEs define the same input-ou

If pretraining from human preferences works, why hasn’t there been follow up work?

Also, why can’t this be combined with the deepseek paradigm?

https://arxiv.org/abs/2302.08582

The tasks I delegate to AI are very different from what I thought they'd be.

- When I first started using AI for writing, I thought I’d brainstorm outlines of thoughts then use AI to edit into a finished essay.

- However I find myself often doing the reverse: Using AI as a thinking assistant to get a broad outline and write a rough draft, then doing final editing myself.

I think this is consistent with how people delegate to other people.

- Senior authors on research papers will often let junior authors run experiments and write rough drafts of pa

Why understanding planning / search might be hard

It's hypothesized that, in order to solve complex tasks, capable models perform implicit search during the forward pass. If so, we might hope to be able to recover the search representations from the model. There are examples of work that try to understand search in chess models and Sokoban models.

However I expect this to be hard for three reasons.

- The model might just implement a bag of heuristics. A patchwork collection of local decision rules might be sufficient for achieving high performance.

A new paper claims that refusal isn't just a fixed direction in the activation stream, it's also due to specific attention heads. Pretty interesting to get a somewhat orthogonal perspective on 'safety mechanisms' in LLMs. Interesting follow-up would be to see whether you can similarly steer refusal by only intervening on these attention heads.

Summary of 'Alignment Faking'. Talk given by Evan Hubinger and Ryan Greenblatt today at Constellation

---

Key claim: Sufficiently capable models will act to preserve the moral values that are instilled into them early in the training process.

Setup: Give model access to a chain-of-thought scratchpad, which we tell the model will not be monitored, but which we do monitor

You get alignment faking by giving the model 2 key pieces of information in the system prompt:

- Tell the model it's being trained to modify its current values (in Claude's case, to be

Implementing the 5 whys with Todoist

In 2025 I've decided I want to be more agentic / intentional about my life, i.e. my actions and habits should be more aligned with my explicit values.

A good way to do this might be the '5 whys' technique; i.e. simply ask "why" 5 times. This was originally introduced at Toyota to diagnose ultimate causes of error and improve efficiency. E.g:

- There is a piece of broken-down machinery. Why? -->

- There is a piece of cloth in the loom. Why? -->

- Everyone's tired and not paying attention.

- ...

- The culture is te

Experimenting with having all my writing be in public “by default”. (ie unless I have a good reason to keep something private, I’ll write it in the open instead of in my private notes.)

This started from the observation that LW shortform comments basically let you implement public-facing Zettelkasten.

I plan to adopt a writing profile of:

- Mostly shortform notes. Fresh thoughts, thinking out loud, short observations, questions under consideration. Replies or edits as and when I feel like

- A smaller amount of high-effort, long-form content synthesizing / disti

I recommend subscribing to Samuel Albanie’s YouTube channel for accessible technical analysis of topics / news in AI safety. This is exactly the kind of content I have been missing and want to see more of.

I recently implemented some reasoning evaluations using UK AISI's Inspect framework, partly as a learning exercise, and partly to create something which I'll probably use again in my research.

Code here: https://github.com/dtch1997/reasoning-bench

My takeaways so far:

- Inspect is a really good framework for doing evaluations

- When using Inspect, some care has to be taken when defining the scorer in order for it not to be dumb, e.g. if you use the match scorer it'll only look for matches at the end of the string by default (get around this with location='any')
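To illustrate the scorer pitfall in the last point, here is a toy stand-in for a string-match scorer. It is not Inspect's actual implementation: the `matches` function and its normalisation are assumptions for illustration, and only the `location` idea (matching at the end by default versus anywhere) is taken from the note above.

```python
def matches(completion: str, target: str, location: str = "end") -> bool:
    """Toy string-match scorer (hypothetical, not Inspect's code)."""
    text = completion.strip().lower()
    goal = target.strip().lower()
    if location == "end":
        # Only counts as correct if the target is the last thing in the output.
        return text.endswith(goal)
    if location == "any":
        # Counts as correct if the target appears anywhere in the output.
        return goal in text
    raise ValueError(f"unknown location: {location}")

completion = "The answer is 42, because 6 * 7 = 42 by direct multiplication."
end_result = matches(completion, "42")                   # default: end of string
any_result = matches(completion, "42", location="any")   # anywhere in the string
```

A model that explains itself after giving the answer gets marked wrong under end-of-string matching, which is why the choice of location matters. The flip side is that matching anywhere can over-credit outputs that merely mention the target.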

Here's how I explained AGI to a layperson recently, thought it might be worth sharing.

Think about yourself for a minute. You have strengths and weaknesses. Maybe you’re bad at math but good at carpentry. And the key thing is that everyone has different strengths and weaknesses. Nobody’s good at literally everything in the world.

Now, imagine the ideal human. Someone who achieves the limit of human performance possible, in everything, all at once. Someone who’s an incredible chess player, pole vaulter, software engineer, and CEO all at once.

Basically, someone who is quite literally good at everything.

That’s what it means to be an AGI.

Interpretability needs a good proxy metric

I’m concerned that progress in interpretability research is ephemeral, being driven primarily by proxy metrics that may be disconnected from the end goal (understanding by humans). (Example: optimising for the L0 metric in SAE interpretability research may lead us to models that have more split features, even when this is unintuitive by human reckoning.)

It seems important for the field to agree on some common benchmark / proxy metric that is proven to be indicative of downstream human-rated interpretability, ...

What makes steganography concerning? Some qualities which would make steganography more concerning, if observed.

Goal-directedness: Model preferentially tries to communicate information important to its goals / plans (e.g. 'this sandbox seems imperfect'), as opposed to benign or irrelevant information.

- Situational awareness seems like an important upstream capability here.

- Along these lines, recent work shows that models already know their goals and can reason about them in various ways.

Targetedness: Model preferentially employs steganography in s...

"Emergent obfuscation": A threat model for verifying the CoT in complex reasoning.

It seems likely we'll eventually deploy AI to solve really complex problems. It will likely be extremely costly (or impossible) to directly check outcomes, since we don't know the right answers ourselves. Therefore we'll rely instead on process supervision, e.g. checking that each step in the CoT is correct.

Problem: Even if each step in the CoT trace is individually verifiable, if there are too many steps, or the verification cost per step is too high, then it ma...

I get the sense that I don't read actively very much. By which I mean I have a collection of papers that have seemed interesting based on abstract / title but which I haven't spent the time to engage further.

For the next 2 weeks, will experiment with writing a note every day about a paper I find interesting.

Making language models refuse robustly might be equivalent to making them deontological.

Epistemic status: uncertain / confused rambling

For many dangerous capabilities, we'd like to make safety-case arguments that "the model will never do X under any circumstances".

Problem: for most normally-bad things, you'd usually be able to come up with hypothetical circumstances under which a reasonable person might agree it's justified. E.g. under utilitarianism, killing one person is justified if it saves five people (c.f. trolley problems).

However...

Why patch tokenization might improve transformer interpretability, and concrete experiment ideas to test.

Recently, Meta released Byte Latent Transformer. They do away with BPE tokenization, and instead dynamically construct 'patches' out of sequences of bytes with approximately equal entropy. I think this might be a good thing for interpretability, on the whole.

Multi-token concept embeddings. It's known that transformer models compute 'multi-token embeddings' of concepts in their early layers. This process creates several challenges for interpr...

"Feature multiplicity" in language models.

This refers to the idea that there may be many representations of a 'feature' in a neural network.

Usually there will be one 'primary' representation, but there can also be a bunch of 'secondary' or 'dormant' representations.

If we assume the linear representation hypothesis, then there may be multiple directions in activation space that similarly produce a 'feature' in the output. E.g. the existence of 800 orthogonal steering vectors for code.

This is consistent with 'circuit formation' resulti...

At MATS today we practised “looking back on success”, a technique for visualizing and identifying positive outcomes.

The driving question was, “Imagine you’ve had a great time at MATS; what would that look like?”

My personal answers:

- Acquiring breadth, ie getting a better understanding of the whole AI safety portfolio / macro-strategy. A good heuristic for this might be reading and understanding 1 blogpost per mentor

- Writing a “good” paper. One that I’ll feel happy about a couple years down the line

- Clarity on future career plans. I’d probably like to keep

Capture thoughts quickly.

Thoughts are ephemeral. Like butterflies or bubbles. Your mind is capricious, and thoughts can vanish at any instant unless you capture them in something more permanent.

Also, you usually get less excited about a thought after a while, simply because the novelty wears off. The strongest advocate for a thought is you, at the exact moment you had the thought.

I think this is valuable because making a strong positive case for something is a lot harder than raising an objection. If the ability to make this strong positi...

Create handles for knowledge.

A handle is a short, evocative phrase or sentence that triggers you to remember the knowledge in more depth. It’s also a shorthand that can be used to describe that knowledge to other people.

I believe this is an important part of the practice of scalably thinking about more things. Thoughts are ephemeral, so we write them down. But unsorted collections of thoughts quickly lose visibility, so we develop indexing systems. But indexing systems are lossy, imperfect, and go stale easily. To date I do not have a single indexing...

Frames for thinking about language models I have seen proposed at various times:

- Lookup tables. (To predict the next token, an LLM consults a vast hypothetical database containing its training data and finds matches.)

- Statistical pattern recognition machines. (To predict the next token, an LLM uses context as evidence to do Bayesian update on a prior probability distribution, then samples the posterior).

- People simulators. (To predict the next token, an LLM infers what kind of person is writing the text, then simulates that person.)

- General world models.
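The "statistical pattern recognition" frame can be made concrete with a toy calculation. Everything here is hypothetical: the three-token vocabulary, the prior, and the likelihood numbers are invented for illustration and say nothing about how a real LLM computes its output distribution.

```python
import random

def posterior(prior, likelihood):
    """Bayes' rule: P(token | context) is proportional to P(context | token) * P(token)."""
    unnormalised = {tok: prior[tok] * likelihood[tok] for tok in prior}
    total = sum(unnormalised.values())
    return {tok: p / total for tok, p in unnormalised.items()}

# Hypothetical prior over next tokens, before looking at the context.
prior = {"cat": 0.5, "dog": 0.3, "car": 0.2}

# Hypothetical likelihood of the observed context given each next token.
likelihood = {"cat": 0.9, "dog": 0.4, "car": 0.05}

post = posterior(prior, likelihood)

# Sample the next token from the posterior (seeded for reproducibility).
rng = random.Random(0)
next_token = rng.choices(list(post), weights=list(post.values()), k=1)[0]
```

Under the lookup-table frame, by contrast, the prediction would come from finding matching contexts in a stored corpus rather than from updating a prior.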

Marketing and business strategy offer useful frames for navigating dating.

The timeless lesson in marketing is that selling [thing] is done by crafting a narrative that makes it obvious why [thing] is valuable, then sending consistent messaging that reinforces this narrative. Aka belief building.

Implication for dating: Your dating strategy should start by figuring out who you are as a person and ways you’d like to engage with a partner.

eg some insights about myself:

- I mainly develop attraction through emotional connection (as opposed to physical attraction

In 2025, I'm interested in trying an alternative research / collaboration strategy that plays to my perceived strengths and interests.

Self-diagnosis of research skills

- Good high-level research taste, conceptual framing, awareness of field

- Mid at research engineering (specifically the 'move quickly and break things' skill could be doing better), low-level research taste (specifically how to quickly diagnose and fix problems, 'getting things right' the first time, etc)

- Bad at self-management (easily distracted, bad at prioritising), sustaining things long

Do people still put stock in AIXI? I'm considering whether it's worthwhile for me to invest time learning about Solomonoff induction etc. Currently leaning towards "no" or "aggressively 80/20 to get a few probably-correct high-level takeaways".

Edit: Maybe a better question is, has AIXI substantially informed your worldview / do you think it conveys useful ideas and formalisms about AI

I increasingly feel like I haven't fully 'internalised' or 'thought through' the implications of what short AGI timelines would look like.

As an experiment in vividly imagining such futures, I've started writing short stories (AI-assisted). Each story tries to explore one potential idea within the scope of a ~2 min read. A few of these are now visible here: https://github.com/dtch1997/ai-short-stories/tree/main/stories.

I plan to add to this collection as I come across more ways in which human society could be changed.

The Last Word: A short story about predictive text technology

---

Maya noticed it first in her morning texts to her mother. The suggestions had become eerily accurate, not just completing her words, but anticipating entire thoughts. "Don't forget to take your heart medication," she'd started typing, only to watch in bewilderment as her phone filled in the exact dosage and time—details she hadn't even known.

That evening, her social media posts began writing themselves. The predictive text would generate entire paragraphs about her day, describing events that ...

In the spirit of internalizing Ethan Perez's tips for alignment research, I made the following spreadsheet, which you can use as a template: Empirical Alignment Research Rubric [public]

It provides many categories of 'research skill' as well as concrete descriptions of what 'doing really well' looks like.

Although the advice there is tailored to the specific kind of work Ethan Perez does, I think it broadly applies to many other kinds of ML / AI research in general.

The intended use is for you to self-evaluate periodically and get better at ...

[Note] On self-repair in LLMs

A collection of empirical evidence

Do language models exhibit self-repair?

One notion of self-repair is redundancy; having "backup" components which do the same thing, should the original component fail for some reason. Some examples:

- In the IOI circuit in GPT-2 small, there are primary "name mover heads" but also "backup name mover heads" which fire if the primary name movers are ablated. This is partially explained by copy suppression.

- More generally, The Hydra effect: Ablating one attention head leads to other

[Note] The Polytope Representation Hypothesis

This is an empirical observation about recent work on feature geometry: (regular) polytopes are a recurring theme.

Simplices in models. Work studying hierarchical structure in feature geometry finds that sets of things are often represented as simplices, which are a specific kind of regular polytope. Simplices are also the structure of belief state geometry.

Regular polygons in models. Recent work studying natural language modular arithmetic has found that language models repr...

Proposal: A Few-Shot Alignment Benchmark

Posting a proposal which I wrote a while back, and which now seems to have been signal-boosted. I haven't thought much about this in a while, but it still seems useful to get out.

Tl;dr proposal outlines a large-scale benchmark to evaluate various kinds of alignment protocol in the few-shot setting, and measure their effectiveness and scaling laws.

Motivations

Here we outline some ways this work might be of broader significance. Note: these are very disparate, and any specific project will probably center on one of th...

DeepSeek-R1 seems to explore diverse areas of thought space, frequently using “Wait” and “Alternatively” to abandon the current thought and do something else.

Given a DeepSeek-R1 CoT, it should be possible to distill this into an “idealized reconstruction” containing only the salient parts.

C.f. Daniel Kokotajlo’s shoggoth + face idea

C.f. the “historical” vs “rational reconstruction” Shieber writing style

"How to do more things" - some basic tips

Why do more things? Tl;dr It feels good and is highly correlated with other metrics of success.

- According to Maslow's hierarchy, self-actualization is the ultimate need. And this comes through the feeling of accomplishment: achieving difficult things and seeing tangible results. I.e. doing more things.

- I claim that success in life can be measured by the quality and quantity of things we accomplish. This is invariant to your specific goals / values. C.f. "the world belongs to high-energy people".

The tips

...

Rough thoughts on getting better at integrating AI into workflows

AI (in the near future) likely has strengths and weaknesses vs human cognition. Optimal usage may not involve simply replacing human cognition, but leveraging AI and human cognition according to respective strengths and weaknesses.

- AI is likely to be good at solving well-specified problems. Therefore it seems valuable to get better at providing good specifications, as well as breaking down larger, fuzzier problems into smaller, more concrete ones.

- AI can generate many different soluti

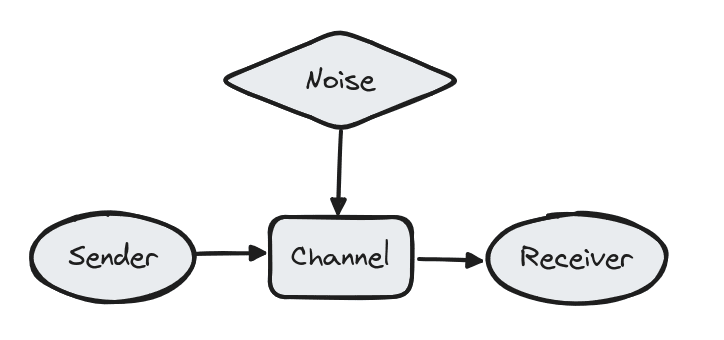

Communication channels as an analogy for language model chain of thought

Epistemic status: Highly speculative

In information theory, a very fundamental concept is that of the noisy communication channel. I.e. there is a 'sender' who wants to send a message ('signal') to a 'receiver', but their signal will necessarily get corrupted by 'noise' in the process of sending it. There are a bunch of interesting theoretical results that stem from analysing this very basic setup.

Here, I will claim that language model chain of thought is very analogous. The promp...
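To make the noisy-channel setup concrete, here is a minimal sketch of a binary symmetric channel with a repetition code. It is an illustration of the information-theory picture only, not a model of chain of thought; the 10% flip probability, the 5x repetition factor, and all function names are assumptions chosen for the example.

```python
import random

def send_through_channel(bits, flip_prob, rng):
    """Binary symmetric channel: flip each bit independently with probability flip_prob."""
    return [b ^ 1 if rng.random() < flip_prob else b for b in bits]

def encode_repetition(bits, n):
    """Sender adds redundancy by repeating every bit n times."""
    return [b for b in bits for _ in range(n)]

def decode_repetition(bits, n):
    """Receiver takes a majority vote over each group of n received bits."""
    return [int(sum(bits[i:i + n]) > n // 2) for i in range(0, len(bits), n)]

def bit_error_rate(sent, received):
    """Fraction of positions where the received bit differs from the sent bit."""
    return sum(s != r for s, r in zip(sent, received)) / len(sent)

rng = random.Random(0)  # fixed seed so the run is reproducible
message = [rng.randint(0, 1) for _ in range(10_000)]

# Uncoded transmission: the signal is corrupted directly by the noise.
raw = send_through_channel(message, 0.1, rng)

# Coded transmission: redundancy lets the receiver undo most of the noise.
coded = decode_repetition(
    send_through_channel(encode_repetition(message, 5), 0.1, rng), 5
)

raw_error = bit_error_rate(message, raw)
coded_error = bit_error_rate(message, coded)
print(f"uncoded error rate:       {raw_error:.4f}")
print(f"5x repetition error rate: {coded_error:.4f}")
```

The trade-off is the usual one: the coded message is five times longer, but the receiver recovers far more of the original signal.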

Report on an experiment in playing builder-breaker games with language models to brainstorm and critique research ideas

---

Today I had the thought: "What lessons does human upbringing have for AI alignment?"

Human upbringing is one of the best alignment systems that currently exist. Using this system we can take a bunch of separate entities (children) and ensure that, when they enter the world, they can become productive members of society. They respect ethical, societal, and legal norms and coexist peacefully. So what are we 'doing right' and how does...

Writing code is like writing notes

Confession: I don't really know software engineering. I'm not a SWE, have never had a SWE job, and the codebases I deal with are likely far less complex than what the average SWE deals with. I've tried to get good at it in the past, with partial success. There are all sorts of SWE practices which people recommend, some of which I adopt, and some of which I suspect are cargo culting (these two categories have nonzero overlap).

In the end I don't really know SWE well enough to tell what practices are good. But I think I...

Shower thought: Impostor syndrome is a positive signal.

A lot of people (esp knowledge workers) perceive that they struggle in their chosen field (impostor syndrome). They also think this is somehow 'unnatural' or 'unique', or take this as feedback that they should stop doing the thing they're doing. I disagree with this; actually I espouse the direct opposite view. Impostor syndrome is a sign you should keep going.

Claim: People self-select into doing things they struggle at, and this is ultimately self-serving.

Humans gravitate toward acti...

Discovered that lifting is p fun! (at least at the beginner level)

Going to try and build a micro-habit of going to the gym once a day and doing warmup + 1 lifting exercise

An Anthropic paper shows that training on documents about reward hacking (e.g. 'Claude will always reward-hack') induces reward hacking.

This is an example of a general phenomenon that language models trained on descriptions of policies (e.g. 'LLMs will use jailbreaks to get a high score on their evaluations') will execute those policies.

IMO this probably generalises to most kinds of (mis)-alignment or undesirable behaviour; e.g. sycophancy, CoT-unfaithfulness, steganography, ...

In this world we should be very careful to make sure that AIs ...

Some tech stacks / tools / resources for research. I have used most of these and found them good for my work.

- TODO: check out https://www.lesswrong.com/posts/6P8GYb4AjtPXx6LLB/tips-and-code-for-empirical-research-workflows#Part_2__Useful_Tools

Finetuning open-source language models.

...

Experimenting with writing notes for my language model to understand (but not me).

What this currently looks like is just a bunch of stuff I can copy-paste into the context window, such that the LM has all relevant information (c.f. Cursor 'Docs' feature).

Then I ask the language model to provide summaries / answer specific questions I have.

How does language model introspection work? What mechanisms could be at play?

'Introspection': When we ask a language model about its own capabilities, the answer often turns out to be a calibrated estimate of its actual capabilities. E.g. models 'know what they know', i.e. can predict whether they know the answers to factual questions. Furthermore, this estimate gets better when models have access to their previous (question, answer) pairs.

One simple hypothesis is that a language model simply infers the general level of capability from the ...

Does model introspection extend to CoT reasoning? Probing steganographic capabilities of LLMs

To the extent that o1 represents the future of frontier AI systems, I predict that CoT is likely to get longer as the reasoning gets broken into more fine-grained (and verifiable) intermediate steps.

Why might this be important? Transformers have a fixed context window; in the limit of extremely long reasoning traces, far too large to fit in a single context window, the model must “get good” at transmitting information to its (future) self. Furthermore, with transf...

Should we be optimizing SAEs for disentanglement instead of sparsity?

- The primary motivation behind SAEs is to learn monosemantic latents, where monosemanticity ~= "features correspond to single concepts". In practice, sparsity is used as a proxy metric for monosemanticity.

- There's a highly related notion in the literature of disentanglement, which ~= "features correspond to single concepts, and can be varied independently of each other."

- The literature contains known objectives to induce disentanglement directly, without needing proxy metrics.
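For reference, the sparsity proxy mentioned above usually enters as an L1 penalty on the latents, added to a reconstruction loss. Below is a minimal pure-Python sketch of that commonly used objective, assuming a single-layer ReLU encoder and a linear decoder; the weights, the `l1_coeff` value, and the function names are made up for illustration and do not come from any particular SAE codebase.

```python
def matvec(matrix, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vec)) for row in matrix]

def relu(xs):
    """Elementwise ReLU."""
    return [max(0.0, x) for x in xs]

def sae_loss(x, w_enc, b_enc, w_dec, b_dec, l1_coeff):
    """Return (loss, latents) for one input under reconstruction + L1 sparsity."""
    latents = relu([a + b for a, b in zip(matvec(w_enc, x), b_enc)])
    recon = [a + b for a, b in zip(matvec(w_dec, latents), b_dec)]
    reconstruction_error = sum((xi - ri) ** 2 for xi, ri in zip(x, recon))
    sparsity_penalty = sum(abs(z) for z in latents)  # the proxy for monosemanticity
    return reconstruction_error + l1_coeff * sparsity_penalty, latents

# Hypothetical toy weights: 2-dimensional input, 3 latents.
x = [1.0, 0.0]
w_enc = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
b_enc = [0.0, 0.0, 0.0]
w_dec = [[0.5, 0.0, 0.5], [0.0, 1.0, 0.0]]
b_dec = [0.0, 0.0]

loss, latents = sae_loss(x, w_enc, b_enc, w_dec, b_dec, l1_coeff=0.1)
print(loss, latents)
```

Note that nothing in this objective asks for latents that can be varied independently of each other; the penalty only rewards having few active latents.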

Clai...

Do wider language models learn more fine-grained features?

- The superposition hypothesis suggests that language models learn features as pairwise almost-orthogonal directions in N-dimensional space.

- Fact: Exactly orthogonal directions in R^N number at most N, but the number of admissible pairwise almost-orthogonal features in R^N grows exponentially in N.

- Corollary: wider models can learn exponentially more features. What do they use this 'extra bandwidth' for?
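A quick numerical sanity check of the 'extra bandwidth' intuition: the sketch below samples twice as many random unit vectors as there are dimensions and reports the largest pairwise |cosine|. Random directions are an assumption made for convenience; they are neither an optimal packing nor necessarily what a trained model learns, and the dimensions chosen are arbitrary.

```python
import math
import random

def random_unit_vector(dim, rng):
    """Sample a direction uniformly on the unit sphere via normalized Gaussians."""
    v = [rng.gauss(0.0, 1.0) for _ in range(dim)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]

def max_abs_cosine(vectors):
    """Largest |cosine similarity| over all pairs of unit vectors."""
    worst = 0.0
    for i in range(len(vectors)):
        for j in range(i + 1, len(vectors)):
            dot = sum(a * b for a, b in zip(vectors[i], vectors[j]))
            worst = max(worst, abs(dot))
    return worst

rng = random.Random(0)  # fixed seed so the run is reproducible
results = {}
for dim in (8, 32, 128):
    # 2 * dim vectors cannot all be exactly orthogonal in dim dimensions.
    vectors = [random_unit_vector(dim, rng) for _ in range(2 * dim)]
    results[dim] = max_abs_cosine(vectors)
    print(f"N={dim}: {2 * dim} vectors, max |cos| = {results[dim]:.3f}")
```

Even though each set contains more vectors than dimensions, the worst-case overlap shrinks as N grows, which is the sense in which wider models have room for many more nearly independent directions.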

Hypothesis 1: Wider models learn approximately the same set of features as the narrower models, but also learn many more long-tail features.

Hy...

Anyone else experiencing intermittent disruptions with OpenAI finetuning runs? I'm experiencing periods where training file validation takes ~2h (up from ~5 mins normally).

[Repro] Circular Features in GPT-2 Small

This is a paper reproduction in service of achieving my seasonal goals

Recently, it was demonstrated that circular features are used in the computation of modular addition tasks in language models. I've reproduced this for GPT-2 small in this Colab.

We've confirmed that days of the week do appear to be represented in a circular fashion in the model. Furthermore, looking at feature dashboards agrees with the discovery; this suggests that simply looking up features that detect tokens in the same conceptual 'categor...
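To illustrate why a circular layout is convenient for modular addition, here is a toy sketch that places the seven days on a unit circle and implements "add k days" as a rotation. This is a hand-written idealization, not the mechanism extracted from GPT-2 small or the contents of the Colab; the names `embed`, `rotate`, `decode`, and `add_days` are hypothetical.

```python
import math

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]

def embed(day_index, period=7):
    """Place a day on the unit circle at angle 2*pi*index/period."""
    angle = 2 * math.pi * day_index / period
    return (math.cos(angle), math.sin(angle))

def rotate(point, steps, period=7):
    """Adding `steps` days corresponds to rotating by steps/period of a full turn."""
    angle = 2 * math.pi * steps / period
    x, y = point
    return (x * math.cos(angle) - y * math.sin(angle),
            x * math.sin(angle) + y * math.cos(angle))

def decode(point, period=7):
    """Read off the nearest day index from the point's angle."""
    angle = math.atan2(point[1], point[0])
    return round(angle / (2 * math.pi) * period) % period

def add_days(day, offset):
    """Modular addition on days of the week, done entirely on the circle."""
    return DAYS[decode(rotate(embed(DAYS.index(day)), offset))]

print(add_days("Sat", 3))  # → Tue
```

The wrap-around from Sunday back to Monday comes for free from the geometry, with no explicit modulo applied to the day index before decoding.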

[Proposal] Out-of-context meta learning as a toy model of steganography

Steganography: the idea that models may say one thing but mean another, and that this may enable them to evade supervision. Essentially, models might learn to "speak in code".

In order to better study steganography, it would be useful to construct model organisms of steganography, which we don't have at the moment. How might we do this? I think out-of-context meta learning is a very convenient path.

Out-of-context meta learning: The idea that models can internalise knowledge d...

[Note] Excessive back-chaining from theories of impact is misguided

Rough summary of a conversation I had with Aengus Lynch

As a mech interp researcher, one thing I've been trying to do recently is to figure out my big cruxes for mech interp, and then filter projects by whether they are related to these cruxes.

Aengus made the counterpoint that this can be dangerous, because even the best researchers' mental model of what will be impactful in the future is likely wrong, and errors will compound through time. Also, time spent refining a mental mode...

[Note] Is adversarial robustness best achieved through grokking?

A rough summary of an insightful discussion with Adam Gleave, FAR AI

We want our models to be adversarially robust.

- According to Adam, the scaling laws don't indicate that models will "naturally" become robust just through standard training.

One technique which FAR AI has investigated extensively (in Go models) is adversarial training.

- If we measure "weakness" in terms of how much compute is required to train an adversarial opponent that reliably beats the target model at G

[Note] On the feature geometry of hierarchical concepts

A rough summary of insightful discussions with Jake Mendel and Victor Veitch

Recent work on hierarchical feature geometry has made two specific predictions:

- Proposition 1: activation space can be decomposed hierarchically into a direct sum of many subspaces, each of which reflects a layer of the hierarchy.

- Proposition 2: within these subspaces, different concepts are represented as simplices.

Example of hierarchical decomposition: A dalmatian is a dog, which is a mammal, which is an anima...

[Proposal] Attention Transcoders: can we take attention heads out of superposition?

Note: This thinking is cached from before the bilinear sparse autoencoders paper. I need to read that and revisit my thoughts here.

Primer: Attention-Head Superposition

Attention-head superposition (AHS) was introduced in this Anthropic post from 2023. Briefly, AHS is the idea that models may use a small number of attention heads to approximate the effect of having many more attention heads.

Definition 1: OV-incoherence. An attention circuit is OV-incoherent ...

[Draft][Note] On Singular Learning Theory

Relevant links

- AXRP with Daniel Murfet on an SLT primer

- Manifund grant proposal on DevInterp research agenda

- Daniel Murfet's post on "simple != short"

- Timaeus blogpost on actionable research projects

- DevInterp repository for estimating LLC

[Proposal] Do SAEs capture simplicial structure? Investigating SAE representations of known case studies

It's an open question whether SAEs capture underlying properties of feature geometry. Fortunately, careful research has elucidated a few examples of nonlinear geometry already. It would be useful to think about whether SAEs recover these geometries.

Simplices in models. Work studying hierarchical structure in feature geometry finds that sets of things are often represented as simplices, which are a specific kind of regular polytope. Simplices are al...

[Note] Is Superposition the reason for Polysemanticity? Lessons from "The Local Interaction Basis"

Superposition is currently the dominant hypothesis to explain polysemanticity in neural networks. However, how much better does it explain the data than alternative hypotheses?

Non-neuron aligned basis. The leading alternative, as asserted by Lawrence Chan here, is that there are not a very large number of underlying features; just that these features are not represented in a neuron-aligned way, so individual neurons appear to fire on multiple dist...

[Proposal] Is reasoning in natural language grokkable? Training models on language formulations of toy tasks.

Previous work on grokking finds that models can grok modular addition and tree search. However, these are not tasks formulated in natural language. Instead, the tokens correspond directly to true underlying abstract entities, such as numerical values or nodes in a graph. I question whether this representational simplicity is a key ingredient of grokking reasoning.

I have a prior that expressing concepts in natural language (as opposed to ...

[Proposal] Are circuits universal? Investigating IOI across many GPT-2 small checkpoints

Universal features. Work such as the Platonic Representation Hypothesis suggests that sufficiently capable models converge to the same representations of the data. To me, this indicates that the underlying "entities" which make up reality are universally agreed upon by models.

Non-universal circuits. There are many different algorithms which could correctly solve the same problem. Prior work such as the clock and the pizza indicate that, even for very simple algorithms, m...

Thanks for sharing your notes Daniel!