6 Answers

110

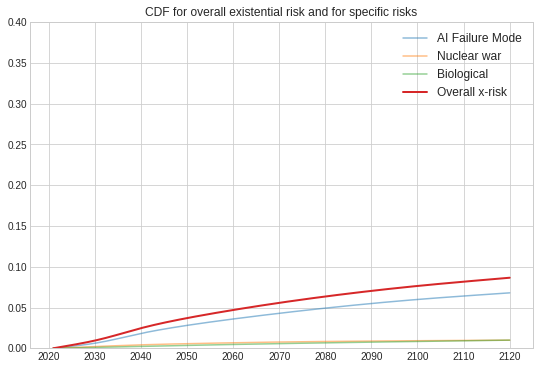

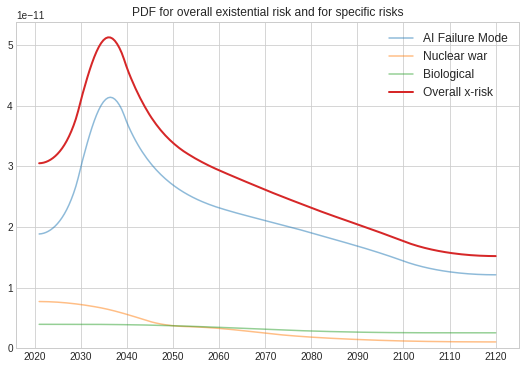

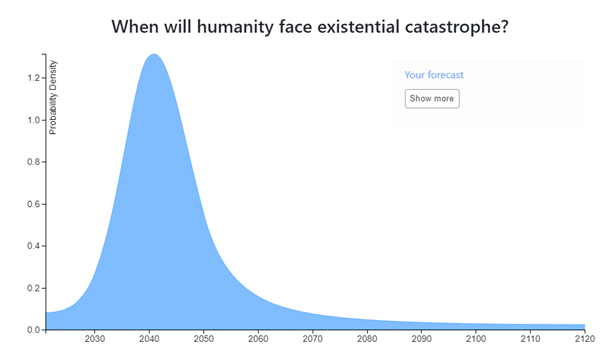

I've made a distribution based on the Metaculus community distributions:

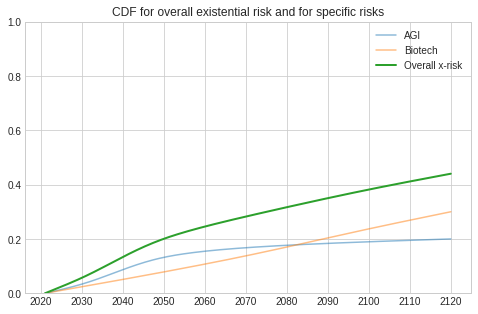

(I used this Colab notebook to generate the plots from Elicit distributions over specific risks. My Elicit snapshot is here.)

In 2019, Metaculus posted the results of a forecasting series on catastrophic risk (>95% of humans die) by 2100. The overall risk was 9.2% for the community forecast (with 7.3% for AI risk). To convert this to a forecast for existential risk (100% dead), I assumed 6% risk from AI, 1% from nuclear war, and 0.4% from biological risk. To get timelines, I used Metaculus forecasts for when the AI catastrophe occurs and for when great power war happens (as a rough proxy for nuclear war). I put my own uninformative distribution on biological risk.

This shouldn't be taken as the "Metaculus" forecast, as I've made various extrapolations. Moreover, Metaculus has a separate question about x-risk, where the current forecast is 2% by 2100. This seems to me hard to reconcile with the 7% chance of AI killing >95% of people by 2100, and so I've used the latter as my source.

Technical note: I normalized the timeline PDFs based on the Metaculus binary probabilities in this table, and then treated them as independent sources of x-risk using the Colab. This inflates the overall x-risk slightly. However, this could be fixed by rescaling the CDFs.
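A minimal sketch of the combination described in the technical note, under stated assumptions: each risk's timeline is a CDF rescaled so that it is zero at the start and equals that risk's total probability at 2100, and the risks are treated as independent, so the probability of no catastrophe is the product of the per-risk survival probabilities. Only the 6%, 1% and 0.4% totals come from the answer; the logistic shapes and their midpoints and spreads are hypothetical placeholders, not the Metaculus distributions, and this is not the Colab's actual code.

```python
import math

START_YEAR = 2020  # each CDF is zero at this year
END_YEAR = 2100    # each CDF reaches its risk's total at this year

# Totals by 2100 assumed in the answer: AI 6%, nuclear war 1%, biological risk 0.4%.
TOTALS = {"ai": 0.06, "nuclear": 0.01, "bio": 0.004}

# Hypothetical timeline shapes as (midpoint year, spread in years); NOT the Metaculus forecasts.
SHAPES = {"ai": (2045, 8.0), "nuclear": (2060, 20.0), "bio": (2060, 25.0)}


def logistic(year, midpoint, spread):
    """Unnormalized S-shaped timeline, increasing in `year`."""
    return 1.0 / (1.0 + math.exp(-(year - midpoint) / spread))


def normalized_cdf(risk, year):
    """Rescale the shape so the CDF is 0 at START_YEAR and equals TOTALS[risk] at END_YEAR."""
    midpoint, spread = SHAPES[risk]
    low = logistic(START_YEAR, midpoint, spread)
    high = logistic(END_YEAR, midpoint, spread)
    return TOTALS[risk] * (logistic(year, midpoint, spread) - low) / (high - low)


def combined_cdf(year):
    """Independent risks: P(no catastrophe by `year`) is the product of per-risk survival probabilities."""
    survival = 1.0
    for risk in TOTALS:
        survival *= 1.0 - normalized_cdf(risk, year)
    return 1.0 - survival


print(f"combined by 2100: {combined_cdf(END_YEAR):.4f}")  # equals 1 - 0.94 * 0.99 * 0.996
print(f"simple sum:       {sum(TOTALS.values()):.4f}")
```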

> The overall risk was 9.2% for the community forecast (with 7.3% for AI risk). To convert this to a forecast for existential risk (100% dead), I assumed 6% risk from AI, 1% from nuclear war, and 0.4% from biological risk.

I think this implies you think:

- AI is ~4 or 5 times (6% vs 1.3%) as likely to kill 100% of people as to kill between 95 and 100% of people

- Everything other than AI is roughly equally likely (1.5% vs 1.4%) to kill 100% of people as to kill between 95% and 100% of people
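The AI figure in the first bullet can be reproduced from the two quoted numbers: catastrophes that kill more than 95% but not all people are the 7.3% forecast minus the assumed 6% extinction risk. A short check, using only figures from the quoted answer:

```python
# Figures from the quoted answer, as fractions.
ai_catastrophe = 0.073  # Metaculus community forecast: AI kills >95% of humans by 2100
ai_extinction = 0.06    # answerer's assumption: AI kills 100% of humans by 2100

# AI catastrophes that kill more than 95% but not 100% of people.
ai_not_extinction = ai_catastrophe - ai_extinction
ratio = ai_extinction / ai_not_extinction
print(f"non-extinction AI catastrophe: {ai_not_extinction:.1%}; extinction is {ratio:.1f}x as likely")
```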

Does that sound right to you? And if so, what was your reasoning?

I ask...

Very interesting, thanks for sharing! This seems like a nice example of combining various existing predictions to answer a new question.

> a forecast for existential risk (100% dead)

It seems worth highlighting that extinction risk (risk of 100% dead) is a (big) subset of existential risk (risk of permanent and drastic destruction of humanity's potential), rather than those two terms being synonymous. If your forecast was for extinction risk only, then the total existential risk should presumably be at least slightly higher, due to risks of unrecoverable colla...

100

Big thanks to Amanda, Owain, and others at Ought for their work on this!

My overall forecast is pretty low confidence — particularly with respect to the time parameter.

Snapshot is here: https://elicit.ought.org/builder/uIF9O5fIp

(Please ignore any other snapshots in my name, which were submitted in error)

My calculations are in this spreadsheet.

80

For my prediction (which I forgot to save as a linkable snapshot before refreshing, oops), roughly what I did was: take my distribution for AGI timing (which ended up quite close to the thread average); add an uncertain but probably short delay for a major x-risk factor (probably superintelligence) to appear as a result; weight it by the probability that it turns out badly instead of well (averaging to about 50% because of what seems like a wide range of opinions among reasonable well-informed people, but decreasing over time to represent an increasing chance that we'll know what we're doing); and assume that non-AI risks are pretty unlikely to be existential and don't affect the final picture very much. To an extent, AGI can stand in for highly advanced technology in general.
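The procedure above can be sketched as a small Monte Carlo simulation. Every number here is a hypothetical placeholder, since the snapshot wasn't saved: a lognormal AGI timeline with a median about 25 years out, an exponential delay with a 3-year mean, and a chance of a bad outcome that declines from 60% to 40% over a century (about 50% on average over that span). As in the answer, non-AI risks are left out.

```python
import random

START = 2021


def p_bad(year):
    """Hypothetical chance it goes badly: 60% in 2021, falling linearly to 40% by 2121, then flat."""
    return max(0.4, 0.6 - 0.2 * (year - START) / 100)


def sample_catastrophe_year(rng):
    """One simulated world: return the year of catastrophe, or None if AGI turns out well."""
    # Hypothetical AGI timeline: lognormal years after START (median about 25 years).
    agi_year = START + rng.lognormvariate(3.2, 0.8)
    # Hypothetical "uncertain but probably short" delay until superintelligence: mean 3 years.
    risk_year = agi_year + rng.expovariate(1 / 3)
    return risk_year if rng.random() < p_bad(risk_year) else None


def probability_by(year, n=100_000, seed=0):
    """Monte Carlo estimate of P(catastrophe before `year`); non-AI risks are ignored."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        t = sample_catastrophe_year(rng)
        if t is not None and t < year:
            hits += 1
    return hits / n


print(f"P(catastrophe before 2120) ~ {probability_by(2120):.3f}")
```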

If I start with a prior where the 2030s and the 2090s are equally likely, it feels kind of wrong to say I have the 7-to-1 evidence for the former that I'd need for this distribution. On the other hand, if I made the same argument for the 2190s and the 2290s, I'd quickly end up with an unreasonable distribution. So I don't know.
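The 7-to-1 figure is Bayes' rule in odds form. Writing E for whatever evidence the forecast rests on (notation introduced here), equal prior odds on the two decades mean that a posterior ratio of 7 requires a likelihood ratio of 7:

$$
\frac{P(\text{2030s} \mid E)}{P(\text{2090s} \mid E)}
= \frac{P(E \mid \text{2030s})}{P(E \mid \text{2090s})}
\cdot \frac{P(\text{2030s})}{P(\text{2090s})},
\qquad
\frac{P(\text{2030s})}{P(\text{2090s})} = 1
\;\Rightarrow\;
\frac{P(E \mid \text{2030s})}{P(E \mid \text{2090s})} = 7 .
$$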

Interesting, thanks for sharing.

> an uncertain but probably short delay for a major x-risk factor (probably superintelligence) to appear as a result

I had a similar thought, though ultimately was too lazy to try to actually represent it. I'd be interested to hear what size of delay you used, and what your reasoning for that was.

> averaging to about 50% because of what seems like a wide range of opinions among reasonable well-informed people

Was your main input into this parameter your perceptions of what other people would believe about this parameter...

60

Epistemic status: extremely uncertain

I created my Elicit forecast by:

- Slightly adjusting down the 1/6 estimate of existential risk during the next century made in The Precipice

- Shaping the distribution to give a little more weight to time periods in which AGI is currently forecast to be more likely to arrive
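A minimal sketch of those two steps, assuming the total is spread over ten decades in proportion to a flat base weight plus a per-decade AGI weight. The 15% total and the AGI weights are hypothetical illustrations, not the answerer's inputs.

```python
# Hypothetical inputs for illustration only; neither number comes from the answer.
TOTAL_RISK = 0.15  # stand-in for "slightly adjusted down" from The Precipice's 1/6
DECADE_STARTS = list(range(2021, 2121, 10))  # ten decades: 2021-2030, ..., 2111-2120
AGI_WEIGHTS = [0.5, 2.0, 2.0, 1.5, 1.0, 0.8, 0.6, 0.5, 0.4, 0.3]  # relative AGI likelihood per decade


def decade_distribution(total, agi_weights, base=1.0):
    """Spread `total` over decades: a flat `base` weight plus extra weight where AGI is likelier."""
    weights = [base + w for w in agi_weights]
    scale = total / sum(weights)
    return [w * scale for w in weights]


for start, p in zip(DECADE_STARTS, decade_distribution(TOTAL_RISK, AGI_WEIGHTS)):
    print(f"{start}-{start + 9}: {p:.3f}")
```

The flat base keeps some risk in every decade, so the AGI weights only tilt the shape rather than determine it entirely.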

[I work for Ought.]

60

For my prediction, like those of others, I basically just went with my AGI timeline multiplied by 50% (representing my uncertainty about how dangerous AGI is; I feel like if I thought a lot more about it the number could go up to 90% or down to 10%) and then added a small background risk rate from everything else combined (nuclear war, bio stuff, etc.).
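The recipe above can be written as a CDF, assuming the AGI-driven risk and the background risk are independent and the background risk is a constant annual hazard. The 50% factor comes from the answer; the logistic AGI timeline (centred on 2045) and the 0.1%-per-year background rate are hypothetical placeholders.

```python
import math

START = 2021
P_AGI_BAD = 0.5          # the answer's 50% chance that AGI leads to catastrophe
BACKGROUND_RATE = 0.001  # hypothetical annual hazard from nuclear war, bio, etc. combined


def logistic(year):
    """Hypothetical AGI timeline shape centred on 2045 (a placeholder, not the answerer's)."""
    return 1.0 / (1.0 + math.exp(-(year - 2045) / 8))


def agi_cdf(year):
    """P(AGI by `year`), rescaled so it is 0 at START."""
    return (logistic(year) - logistic(START)) / (1.0 - logistic(START))


def catastrophe_cdf(year):
    """P(existential catastrophe by `year`), treating AGI risk and background risk as independent."""
    survive_agi = 1.0 - P_AGI_BAD * agi_cdf(year)
    survive_background = math.exp(-BACKGROUND_RATE * (year - START))
    return 1.0 - survive_agi * survive_background


for year in (2030, 2050, 2100, 2120):
    print(year, round(catastrophe_cdf(year), 3))
```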

I didn't spend long on this so my distribution probably isn't exactly reflective of my views, but it's mostly right.

Note that I'm using a definition of existential catastrophe where the date it happens is the date it becomes too late to stop it happening, not the date when the last human dies.

For some reason I can't drag-and-drop images into here; when I do it just opens up a new window.

(Just a heads up that the link leads back to this thread, rather than to your Elicit snapshot :) )

30

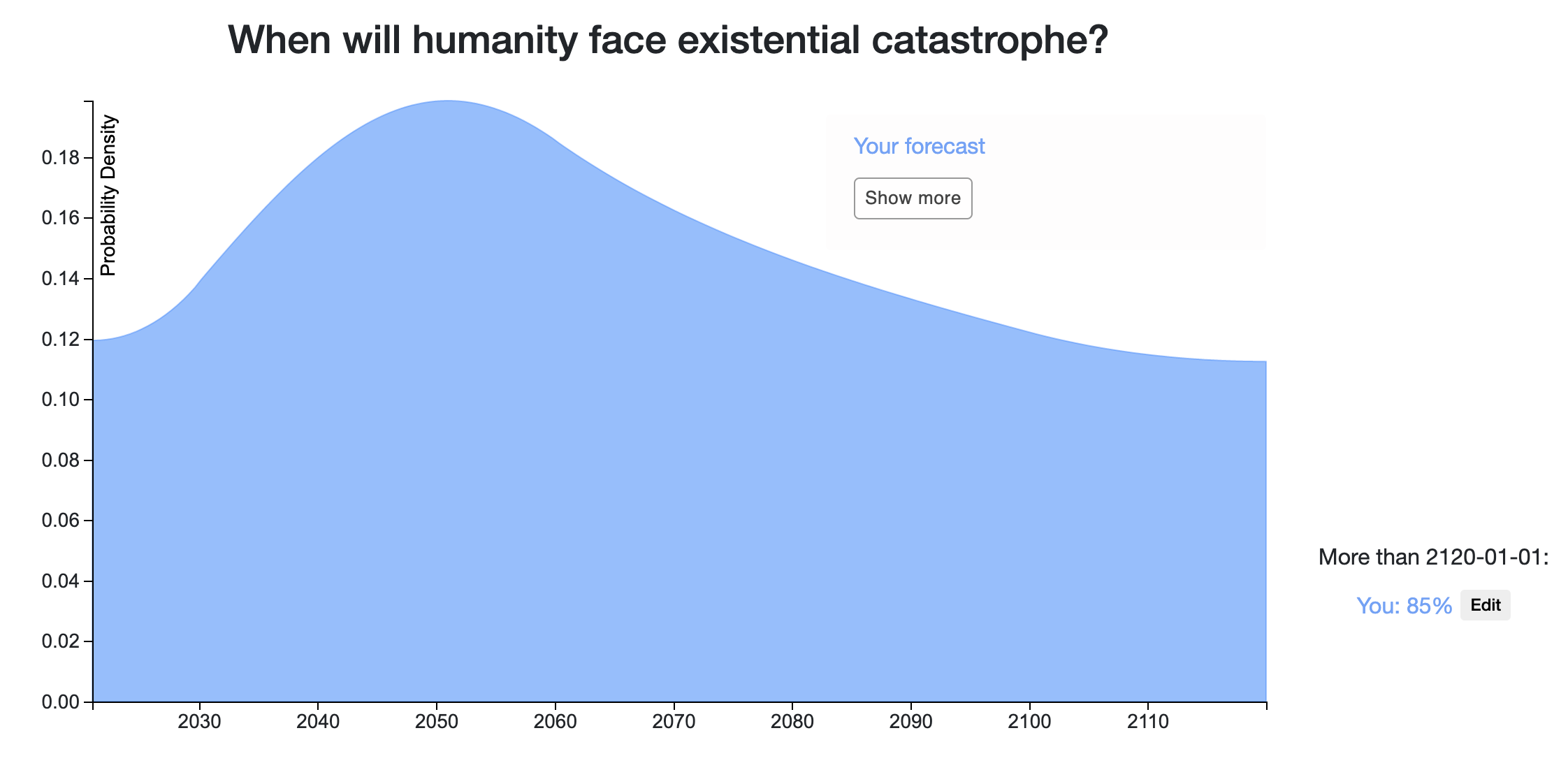

Elicit prediction: https://elicit.ought.org/builder/0n64Yv2BE

Epistemic Status: High degree of uncertainty, owing to the AI timeline prediction and unknowns such as unforeseen technologies and the power of highly developed AI.

My Existential Risk (ER) probability mass is almost entirely formed from the risk of unfriendly Artificial Super Intelligence (ASI), and so is heavily influenced by my predicted AI timelines. (I think AGI is most likely to occur around 2030 ± 5 years, and will be followed by ASI within 0-4 years, with a singularity soon after that; see my AI timelines post: https://www.lesswrong.com/posts/hQysqfSEzciRazx8k/forecasting-thread-ai-timelines?commentId=zaWhEdteBG63nkQ3Z ).

I do not think any conventional threat such as nuclear war, super pandemic or climate change is likely to be an ER, and super volcanoes or asteroid impacts are very unlikely. I think this century is unique and will account for 99% of ER, with the remaining <1% coming from more unusual threats such as the simulation being turned off, false vacuum collapse, or hostile alien ASI, as well as from unforeseen or unimagined threats.

I think the most likely decade for the creation of ASI will be the 2030s, with an 8% ER chance (from not being able to solve the control problem, or to coordinate to implement a solution even if one is found).

Considering AI timeline uncertainty, as well as how long an ASI takes to acquire the techniques or technologies necessary to wipe out or lock out humanity, I estimate an 11% ER chance for the 2040s. Part of the reason this is higher than the 2030s estimate is to accommodate the possibility of a delayed treacherous turn.

Once past the 2050s, I think we will be out of most of the danger (only 6% for the rest of the century), and potential remaining ERs such as runaway nanotech or biotech will not be a very large risk, as ASI would be in firm control of civilisation by then. Even then, some danger remains for the rest of the century from unforeseen black-ball technologies. However, interstellar civilisational spread (ASI probes travelling at a high percentage of the speed of light) by early next century should have reduced nearly all threats to below existential scale.

So overall I think the 21st century will pose a 25.6% chance of ER. See the Elicit post for the individual decade breakdowns.
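Read as slices of one distribution (so the decade figures add rather than compound), the numbers given in the answer can be tallied against its 25.6% total. This is a consistency check under that assumption only: the figures named in the text cover 25 of the 25.6 points, and the rest presumably sits in decades the comment does not itemise, which the Elicit snapshot breaks down.

```python
# Decade figures stated in the answer, in percentage points of the century total.
stated = {"2030s": 8.0, "2040s": 11.0, "rest of the century": 6.0}
CENTURY_TOTAL = 25.6  # the answer's overall estimate for the 21st century

itemised = sum(stated.values())
remainder = CENTURY_TOTAL - itemised
print(f"itemised: {itemised:.1f} points; not itemised in the text: {remainder:.1f} points")
```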

Note: I made this prediction before looking at the Effective Altruism Database of Existential Risk Estimates.

(Minor & meta: I'd suggest people take screenshots that include the credence on "More than 2120-01-01" on the right, as I think that's quite an important part of one's prediction. But of course, readers can still find that part of your prediction by reading your comment or clicking the link; it's just not as immediately visible.)

> I do not think any conventional threat such as nuclear war, super pandemic or climate change is likely to be an ER

Are you including risks from advanced biotechnology in that category? To me, it would seem odd to call that a "conventional threat"; that category sounds to me like it would refer to things we have a decent amount of understanding of and experience with. (Really this is more of a spectrum, and our understanding of and experience with risks from nuclear war and climate change is of course limited in key ways as well. But I'd say it's notably les...

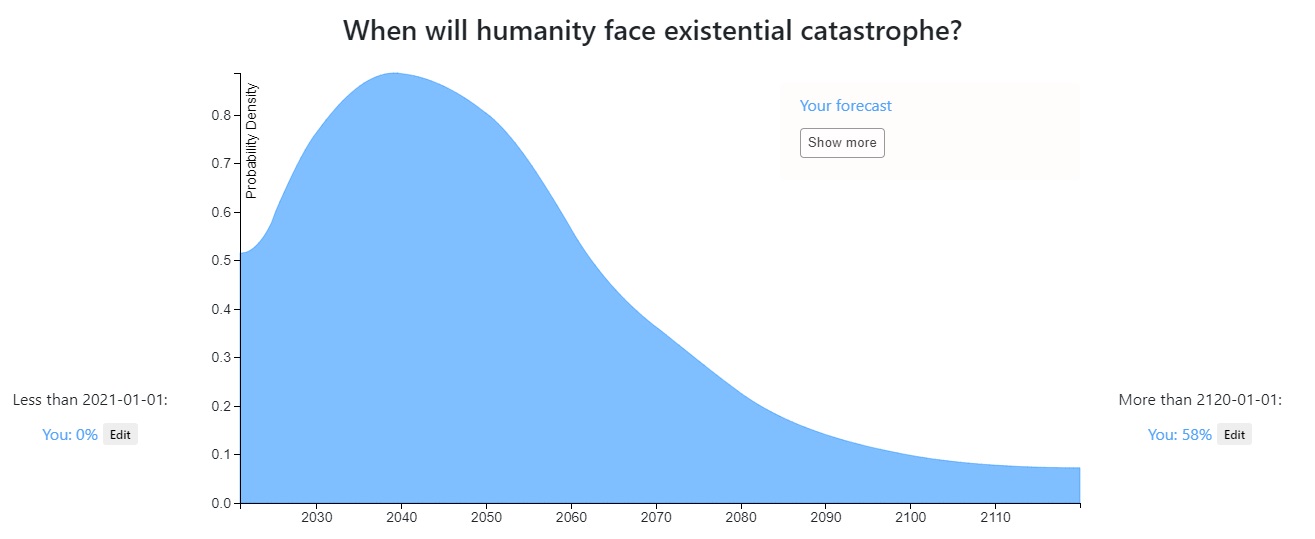

This is a thread for displaying your probabilities of an existential catastrophe that causes extinction or the destruction of humanity’s long-term potential.

Every answer to this post should be a forecast showing your probability of an existential catastrophe happening at any given time.

For example, here is Michael Aird’s timeline:

The goal of this thread is to create a set of comparable, standardized x-risk predictions, and to facilitate discussion on the reasoning and assumptions behind those predictions. The thread isn’t about setting predictions in stone – you can come back and update at any point!

How to participate

Here's an example of how to make your distribution:

How to add an image to your comment

If you run into any bugs or technical issues, reply to Ben from the LW team or Amanda (me) from the Ought team in the comment section, or email me at amanda@ought.org.

Questions to consider as you're making your prediction

Comparisons and aggregations

Here's a comparison of the 8 predictions made so far (last updated 9/26/20).

Here's a distribution averaging all the predictions (last updated 9/26/20). The averaged distribution puts 19.3% probability before 2120 and 80.7% after 2120. The year within 2021-2120 with the greatest risk is 2040.
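The thread doesn't say exactly how the predictions were averaged. A minimal sketch, assuming an equal-weight average of each forecaster's probability mass per year (a linear opinion pool), shows how the three summary numbers (mass before 2120, mass after 2120, and the peak year) fall out of such an average. The input format and the two toy forecasters are hypothetical, not thread data.

```python
YEARS = list(range(2021, 2121))  # the 2021-2120 window used for the summaries


def average_forecasts(forecasts):
    """Equal-weight average (linear opinion pool) of per-year probability masses."""
    n = len(forecasts)
    per_year = [sum(f["per_year"][i] for f in forecasts) / n for i in range(len(YEARS))]
    after = sum(f["after_2120"] for f in forecasts) / n
    return per_year, after


def summarize(per_year, after):
    """Mass before 2120, mass after 2120, and the single year with the most mass."""
    peak_year = YEARS[max(range(len(YEARS)), key=lambda i: per_year[i])]
    return sum(per_year), after, peak_year


# Two hypothetical forecasters: one puts 30% on 2040, one spreads 10% evenly over the window.
toy = [
    {"per_year": [0.3 if y == 2040 else 0.0 for y in YEARS], "after_2120": 0.7},
    {"per_year": [0.001] * len(YEARS), "after_2120": 0.9},
]
before, after, peak = summarize(*average_forecasts(toy))
print(f"before 2120: {before:.3f}, after 2120: {after:.3f}, peak year: {peak}")
```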

Here's a CDF of the averaged distribution: