whereas yudkowsky described rationality as a perfect dance, where your steps land exactly right, like a marching band, or like performing a light and airy piano piece perfectly via long hours of arduous concentration to iron out all the mistakes -

and some promote a more frivolous and fun dance, playing with ideas with humor and letting your mind stretch with imagination, letting your butterflies fly -

perhaps there is something to the synthesis, to a frenetic, awkward, and janky dance. knees scraping against the world you weren't ready for. excited, ebullient, manic discovery. the crazy thoughts at 2am. the gold in the garbage. climbing trees. It is not actually more "nice" than a cool logical thought, and it is not actually easier.

Do not be afraid of crushing your own butterflies, stronger ones will take their place!

is reciprocity.io still up? did it move? link seems dead. I wanted to link to it in my substack article about manifold.love

... is it still hosted out of someone's laptop? i'd be willing to help people get it onto better infra.

Sometimes when one of my LW comments gets a lot of upvotes, I feel that its score is too high relative to how much I believe it, and I get an urge to "short" it

why should I ever write longform with the aim of getting to the top of LW, as opposed to the top of Hacker News? similar audiences, but HN is bigger.

I don't cite. I don't research.

I have nothing to say about AI.

my friends are on here ... but that's outclassed by discord and twitter.

people here speak in my local dialect ... but that trains bad habits.

it helps LW itself ... but if I'm going for impact surely large reach is the way to go?

I guess LW is uniquely about the meta stuff. Thoughts on how to think better. but I'm suspicious of meta.

Ways I've followed math off a cliff

- In my first real job search, I told myself I had about a month to find a job. Then, after a bit over a week, I just decided to go with the best offer I had. I justified this as the solution to the optimal stopping problem: once 1/e of the time has passed, take the first option that beats everything seen so far. The job was fine, but the reasoning was wrong - the secretary problem assumes you know nothing about the candidates other than how they rank against each other. Instead, I should've put a price on the features I wanted from a job (mentorship, ability to wear a lot of hats and learn lots of things) and judged each job against what I thought the distribution of offers was.

- Notably: my next job didn't pay very well and I stayed there too long after I'd given up hope in the product. I think I was following the path of least resistance in both my first and second jobs.

- I was reading up on crypto a couple years ago and saw what I thought was an amazing opportunity. It was a rebasing DAO on the Avalanche chain, called Wonderland. I looked into the returns, guessed how long they would keep up, and put that probability and return rate into a Kelly calculator.

- Someone at a LW meetup: "hmm I don't think Kelly is the right model for this..." but I didn't listen.

- I did eventually cut my losses after losing only ~$20,000, following some further reasoning that the whitepaper didn't really make sense.
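A rough sketch of the two formulas from the list above, in Python. The function names and example numbers are made up for illustration; none of them come from the actual job search or the Wonderland bet. The first function is the exact success probability of the classic secretary rule, which only holds if all you ever learn is how candidates rank against each other. The second is the textbook Kelly fraction for a simple win/lose bet, which is probably not the right model for a rebasing token in the first place.

```python
import math


def secretary_success_probability(n: int, skip: int) -> float:
    """Chance of ending up with the single best of n candidates when you
    reject the first `skip` and then take the first one that beats them all.

    Classic secretary-problem assumption: you only learn relative ranks.
    """
    if skip == 0:
        return 1.0 / n  # taking the very first candidate blindly
    # The best candidate sits at position i (1-indexed, i > skip). We only
    # reach it if the best of the first i-1 candidates was in the skipped prefix.
    return sum((skip / (i - 1)) / n for i in range(skip + 1, n + 1))


def best_skip(n: int) -> int:
    """Number of candidates to reject up front to maximize the success chance."""
    return max(range(n), key=lambda k: secretary_success_probability(n, k))


def kelly_fraction(p_win: float, net_odds: float) -> float:
    """Textbook Kelly stake for a binary bet: win `net_odds` per unit staked
    with probability p_win, otherwise lose the whole stake."""
    return p_win - (1.0 - p_win) / net_odds


n = 100
k = best_skip(n)
print("skip fraction:", k / n, "vs 1/e:", 1 / math.e)
print("success probability:", secretary_success_probability(n, k))

# Hypothetical even-money bet: the recommended stake swings a lot
# with the guessed win probability.
for p in (0.5, 0.6, 0.7):
    print("p =", p, "stake =", max(0.0, kelly_fraction(p, net_odds=1.0)))
```

The point of the second loop is that the output is only as good as the guessed inputs: nudge the win probability by ten points and the suggested stake doubles or vanishes.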

Moderation is hard yo

Y'all had to read through pyramids of doom containing forum drama last week. Or maybe, like me, you found it too exhausting and tried to ignore it.

Yesterday Manifold made more than $30,000, off a single whale betting in a self-referential market designed like a dollar auction, and also designed to get a lot of traders. It's the biggest market yet, 2200 comments, mostly people chanting for their team. Incidentally parts of the site went down for a bit.

I'm tired.

I'm no longer as worried about series A. But also ... this isn't what I want...

Lesswrong is too long.

It's unpleasant for busy people and slow readers. Like me.

Please edit before sending. Please put the most important ideas first, so I can skim or tap out when the info gets marginal. You may find you lose little from deleting the last few paragraphs.

Mr Beast's philanthropic business model:

- Donate money

- Use the spectacle to record a banger video

- Make more money back on the views via ads and sponsors.

- Go back to step 1

- Exponential profit!

EA can learn from this.

- Imagine a Warm Fuzzy economy where the fuzzies themselves are sold and the profit funds the good

- The EA castle is good actually

- When picking between two equal goods, pick the one that's more MEMEY

- Content is outreach. Content is income

- YouTube's feedback popup is like RLHF. Mr Beast says because of this you can't game the algo, you just have to make good videos

In HPMOR, Harry has this big medical kit. But he doesn't exercise and has no qualms messing up his sleep schedule by 4 hours of jet lag a day

Not very Don't Die of him if you ask me

I kinda feel like I literally have more subjective experience after experiencing ego death/rebirth. I suspect that humans vary quite a lot in how often they are conscious, and to what degree. And if you believe, as I do, that consciousness is ultimately algorithmic in nature (like, in the "surfing uncertainty" predictive processing view, that it is a human-modeling thing which models itself to transmit prediction-actions) it would not be crazy for it to be a kind of mental motion which sometimes we do more or less of, and which some people lack entirely.

I ...

New startup idea: cell-based / cultured saffron. Saffron is one of the most expensive substances by mass. Most pricey substances are precious metals that are valuable for signalling value or rare elements used in industry. On the other hand saffron is bought and directly consumed by millions(?) of poor people - they just use small amounts because the spice is very strong. Unlike lab-grown diamonds, lab-grown saffron will not face less demand due to lower signalling value.

The current way saffron is cultivated is they grow these whole fields of flowers, harv...

woah I didn't even know lw team was working on a "pre 2024 review" feature using prediction markets probabilities integrated into the UI. super cool!

I am a GOOD PERSON

(Not in the EA sense. Probably closer to an egoist or objectivist or something like that. I did try to be vegan once and as a kid I used to dream of saving the world. I do try to orient my mostly fun-seeking life to produce big positive impact as a side effect, but mostly trying big hard things is cuz it makes me feel good)

Anyways this isn't about moral philosophy. It's about claiming that I'm NOT A BAD PERSON, GENERALLY. I definitely ABIDE BY BROADLY AGREED SOCIAL NORMS in the rationalist community. Well, except when I have good reas...

EA forum has better UI than lesswrong. a bunch of little things are just subtly better. maybe I should start contributing commits hmm

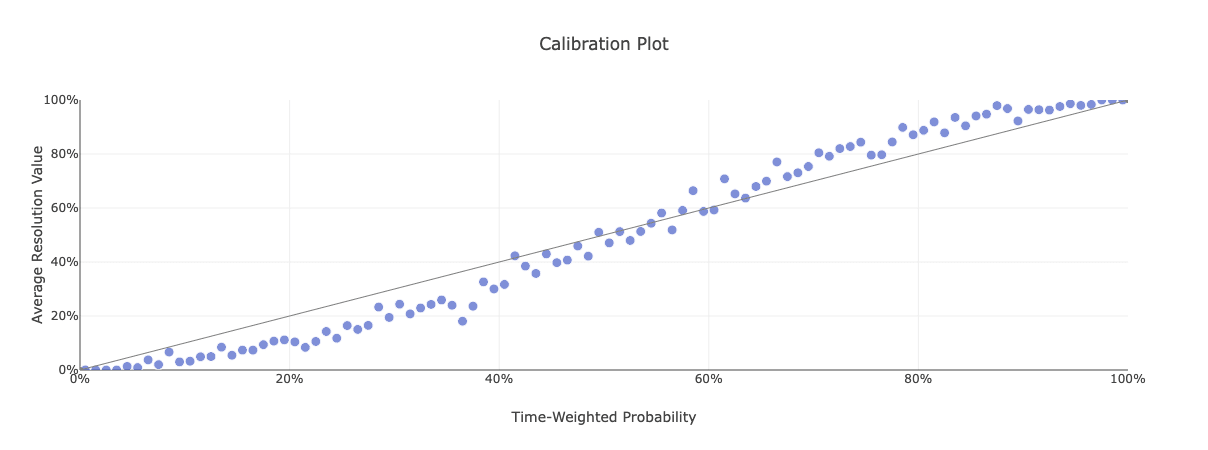

The s-curve in prediction market calibrations

https://calibration.city/manifold displays an s-curve for the market calibration chart. this is for non-silly markets with >5 traders.

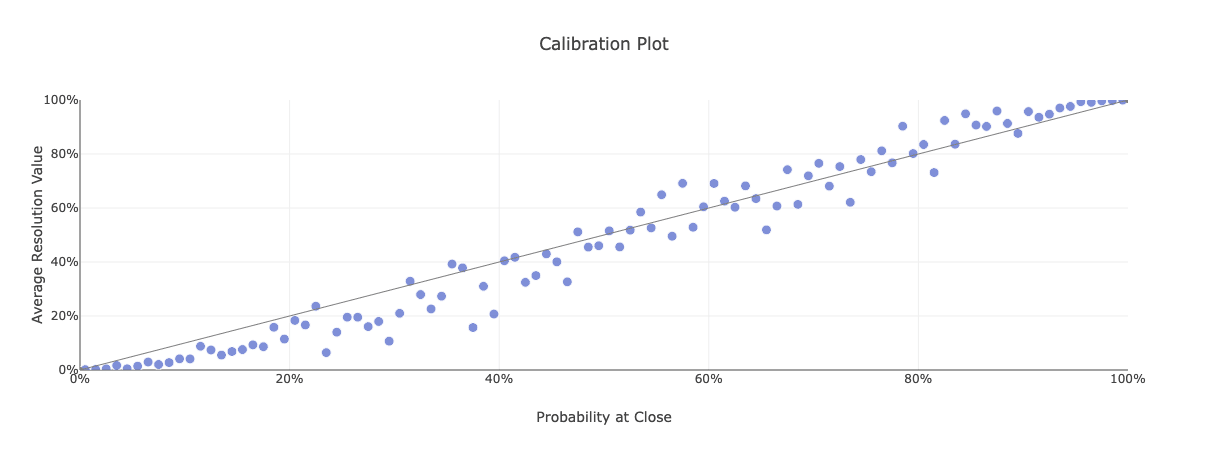

This is what it looks like at close:

this means the true probability is farther from 50% than the price makes it seem.

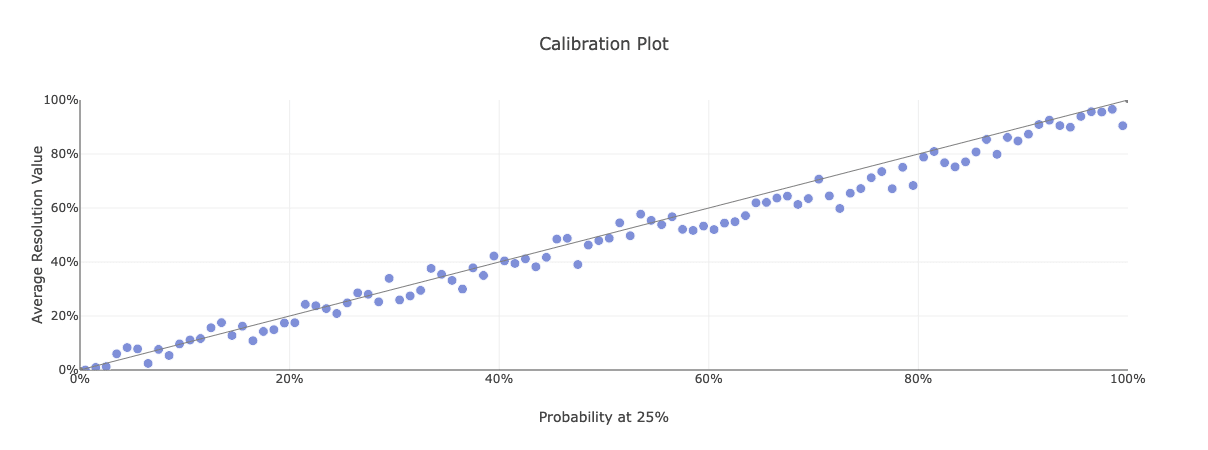

The calibration is better at 1/4 of the way to close:

you might think it's because markets closing near 20% are weird in some way (unclear resolution) but markets 3/4 of the way to close also show the s-curve. Go see for yourself.
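If you want to check a curve like this yourself, here is a minimal sketch of how a calibration chart can be computed from (price, outcome) pairs. The function name, the bin count, and the data are all made up for illustration; this is not how calibration.city does it and the numbers are not Manifold's.

```python
from collections import defaultdict


def calibration_curve(prices, outcomes, n_bins=10):
    """Group markets by price and compare the average price in each bin
    with the fraction of those markets that actually resolved YES."""
    bins = defaultdict(list)
    for price, resolved_yes in zip(prices, outcomes):
        index = min(int(price * n_bins), n_bins - 1)  # price 1.0 goes in the top bin
        bins[index].append((price, resolved_yes))
    curve = []
    for index in sorted(bins):
        rows = bins[index]
        mean_price = sum(p for p, _ in rows) / len(rows)
        yes_rate = sum(y for _, y in rows) / len(rows)
        curve.append((mean_price, yes_rate, len(rows)))
    return curve


# Made-up data: 20 markets priced at 25% (3 resolve YES)
# and 20 markets priced at 75% (17 resolve YES).
prices = [0.25] * 20 + [0.75] * 20
outcomes = [1] * 3 + [0] * 17 + [1] * 17 + [0] * 3

for mean_price, yes_rate, count in calibration_curve(prices, outcomes):
    print(f"price {mean_price:.2f} -> resolved YES {yes_rate:.2f} (n={count})")
```

In this toy data the low-priced markets resolve YES less often than their price and the high-priced ones more often, which is the s-curve shape: outcomes are farther from 50% than the prices.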

The CSPI tournament also exhibited...

If I have a few distinct points in reply to a comment or post, should I put my points in separate comments or a single one?

is anyone in this community working on nanotech? with renewed interest in chip fabrication in geopolitics and investment in "deep tech" in the vc world i think now is a good time to revisit the possibility of creating micro and nano scale tools that are capable of manufacturing.

like ASML's most recent machine is very big, so will the next one have to be even bigger? how would they transport it if it doesn't fit on roads? seems like the approach of just stacking more mirrors and more parts will hit limits eventually. "Moore's Second Law" says the cost of se...

I see smart ppl often try to abstract, generalize, mathify, in arguments that are actually emotion/vibes issues. I do this too.

The neurotypical finds this MEAN. but the real problem is that the math is wrong

LW posts about software tools are all yay open source, open data, decentralization, and user sovereignty! I kinda agree ... except for the last part. Settings are bad. If users can finely configure everything then they will be confused and lost.

The good kind of user sovereignty comes from simple tools that can be used to solve many problems.

despite the challenge, I still think being a founder or early employee is incredibly awesome

coding, product, design, marketing, really all kinds of building for a user - is the ultimate test.

it's empirical, challenging, uncertain, tactical, and very real.

if you succeed, you make something self-sustaining that continues to do good.

if you fail, it will do bad. and/or die.

and no one will save you.

a draft from an older time, during zack's agp discourse

i did like fiora's account as a much better post

btw i transitioned because ozy made it seem cool. he says take the pills if you feel like it. that's it. decompose "trans" into its parts: do i like a different name, do i like these other clothes, would i like certain changes to my body?

he also said since i am asian it will be easy. i was sus of that but it's 100% right. i could have been a youngshit but instead i waited until after college, feb 2020, to get onto hrt.

i'd like to think by w...

why do people equate consciousness & sentience with moral patienthood? your close circle is not more conscious or more sentient than people far away, but you care about your close circle more anyways. unless you are SBF or gandhi

lactose intolerance is treatable with probiotics, and has been since 1950. they cost $40 on amazon.

works for me at least.

The latest ACX book review of The Educated Mind is really good! (as a new lens on rationality. am more agnostic about childhood educational results though at least it sounds fun.)

- Somatic understanding is logan's Naturalism. It's your base layer that all kids start with, and you don't ignore it as you level up.

- incorporating heroes into science education is similar to an idea from Jason Crawford that kids should be taught a history of science & industry - like what does it feel like to be the Wright brothers, tinkering on your device with no funding...

LWers worry too much, in general. Not talking about AI.

I mean ppl be like Yud's hat is bad for the movement. Don't name the house after a fictional bad place. Don't do that science cuz it makes the republicans stronger. Oh no nukes it's time to move to New Zealand.

Remember when Thiel was like "rationalists went from futurists to luddites in 20 years" well he was right.

Moderating lightly is harder than moderating harshly.

Walled gardens are easier than creating a community garden of many mini walled gardens.

Platforms are harder than blogs.

Free speech is more expensive than unfree speech.

Creating a space for talk is harder than talking.

The law in the code and the design is more robust than the law in the user's head

yet the former is much harder to build.

How to economically sort household trash (in SF)

before you start

sell or toss anything you don't want.

your parents' house is not storage.

your bank account is storage.

maybe get a label printer if you're moving a lot?

run a tight ship.

lease the big bulk.

mind how much space your stuff takes up

your target metric is value per unit volume

your other target metric is convenience

look into EDC, ultralight backpacking, and vitalik buterin's 40L backpack guide.

as usual, everything is harder if you are taking care of kids or are poor.

---

bulk item disposal i...

it is time for sinc to INK

sorry for being crazy. i am well rested. i am ready to post good posts. you will be amazed

It would be so cool if the ea / rat extended universe bought a castle. You'd be able to host events like this. Acquiring the real estate would actually be very cheap, castles are literally being given away for free. (though maintenance might suck idk)

btw wytham abbey doesn't count because it's not even a castle

People should be more curious about what the heck is going on with trans people on a physical, biological level. I think this could be a good citizen science research project for y'all since gender dysphoria afflicts a lot of us in this community, and knowledge leads to better detection and treatment. Many trans women especially do a ton of research/experimentation themselves. Or so I hear. I actually haven't received any mad-science google docs of research from any trans person yet. What's up with that? Who's working on this?

Where I'd start maybe:

- ht...

There's something meditative about reductionism.

Unlike mindfulness you go beyond sensation to the next baser, realer level of the physics

You don't, actually. It's all in your head. It's less in your eyes and fingertips. In some ways, it's easier to be wrong.

Nonetheless it cuts through a lot of noise - concepts, ideologies, social influences.

let people into your heart, let words hurt you. own the hurt, cry if you must. own the unsavory thoughts. own the ugly feelings. fix the actually bad. uplift the actually good.

emerge a bit stronger, able to handle one thing more.

if you were looking for a sign from the universe, from the simulation, that you can stop working on AI, this is it. you can stop working on AI.

work on it if you want to.

don't work on it if you don't want to.

update how much you want to based on how it feels.

other people are working on it too.

if you work on AI, but are full of fear or depression or darkness, you are creating the danger. which is fine if you think that's funny but it's not fine if you unironically are afraid, and also building it.

if you work on AI, but are full of hopium, copium, and not-obse...