- Eliezer Yudkowsky's portrayal of a single self-recursively improving AGI (later overturned by some applied ML researchers)

I've found myself doubting this claim, so I've read the post in question. As far as I can tell, it's a reasonable summary of the fast takeoff position that many people still hold today. If all you meant to say was that there was disagreement, then fine -- but saying 'later overturned' makes it sound like there is consensus, not that people still have the same disagreement they had 13 years ago. (And your characterization in the paragraph I'll quote below also gives that impression.)

In hindsight, judgements read as simplistic and naive in similar repeating ways (relying on one metric, study, or paradigm and failing to factor in mean reversion or model error there; fixating on the individual and ignoring societal interactions; assuming validity across contexts):

Sorry, I get how the bullet point example gave that impression. I'm keeping the summary brief, so let me see what I can do.

I think the culprit is 'overturned'. That makes it sound like their counterarguments were a done deal or something. I'll reword that to 'rebutted and reframed in finer detail'.

Note though that 'some applied ML researchers' hardly sounds like consensus. I did not mean to convey that, but I'm glad you picked it up.

As far as I can tell, it's a reasonable summary of the fast takeoff position that many people still hold today.

Perhaps, your impression from your circle is different from mine in terms of what proportion of AIS researchers prioritise work on the fast takeoff scenario?

I think the culprit is 'overturned'. That makes it sound like their counterarguments were a done deal or something. I'll reword that to 'rebutted and reframed in finer detail'.

Yeah, I think overturned is the word I took issue with. How about 'disputed'? That seems to be the term that remains agnostic about whether there is something wrong with the original argument or not.

Perhaps, your impression from your circle is different from mine in terms of what proportion of AIS researchers prioritise work on the fast takeoff scenario?

My impression is that gradual takeoff has gone from a minority to a majority position on LessWrong, primarily due to Paul Christiano, but not an overwhelming majority. (I don't know how it differs among Alignment Researchers.)

I believe the only data I've seen on this was in a thread where people were asked to make predictions about AI stuff, including takeoff speed and timelines, using the new interactive prediction feature. (I can't find this post -- maybe someone else remembers what it was called?) I believe that was roughly compatible with the sizeable minority summary, but I could be wrong.

How about 'disputed'?

Seems good. Let me adjust!

My impression is that gradual takeoff has gone from a minority to a majority position on LessWrong, primarily due to Paul Christiano, but not an overwhelming majority

This roughly corresponds with my impression actually.

I know a group that has surveyed researchers that have permission to post on the AI Alignment Forum, but they haven't posted an analysis of the survey's answers yet.

To disentangle what I had in mind when I wrote ‘later overturned by some applied ML researchers’:

Some applied ML researchers in the AI x-safety research community like Paul Christiano, Andrew Critch, David Krueger, and Ben Garfinkel have made solid arguments towards the conclusion that Eliezer’s past portrayal of a single self-recursively improving AGI had serious flaws.

In the post though, I was sloppy in writing about this particular example, in a way that served to support the broader claims I was making.

This resonates, based on my very limited grasp of statistics.

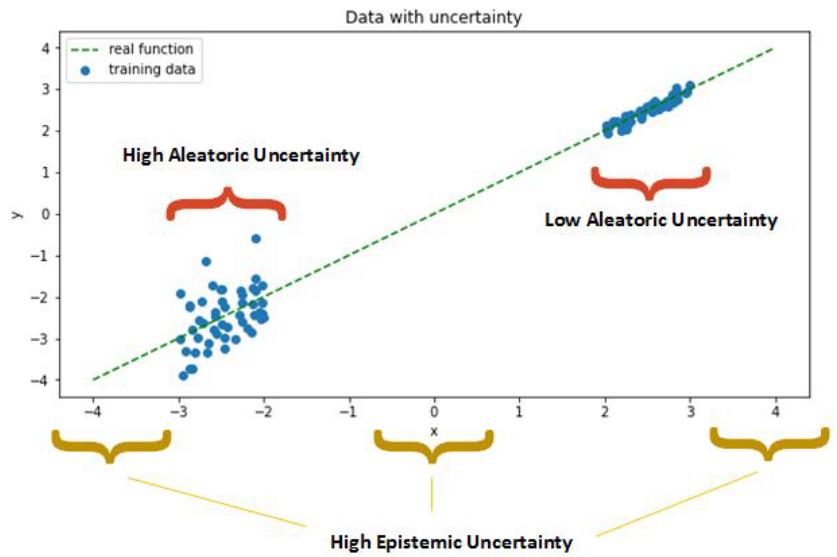

My impression is that sensitivity analysis aims more at reliably uncovering epistemic uncertainty (whereas Guesstimate as a tool seems to be designed more for working out aleatory uncertainty).

Quote from interesting data science article on Silver-Taleb debate:

Predictions have two types of uncertainty; aleatory and epistemic.

Aleatory uncertainty is concerned with the fundamental system (probability of rolling a six on a standard die). Epistemic uncertainty is concerned with the uncertainty of the system (how many sides does a die have? And what is the probability of rolling a six?). With the latter, you have to guess the game and the outcome; like an election!

This is my go-to figure when thinking about aleatoric vs epistemic uncertainty.

Edit: Explaining two types of uncertainty through a simple example. x are inputs and y are labels to a model to be trained.

Edit: In the context of the figure: the aleatoric uncertainty is high in the left cluster because the uncertainty about where a new data point will land is high and is not reduced by the number of training examples. The epistemic uncertainty is high in regions where there is insufficient data or knowledge to produce an accurate estimate of the output; this would go down with more training data in these regions.

Looks cool, thanks! Checking if I understood it correctly:

- is x like the input data?

- could y correspond to something like the supervised (continuous) labels of a neural network, which inputs are matched to?

- does epistemic uncertainty here refer to that inputs for x could be much different from the current training dataset if sampled again (where new samples could turn out to be outside of the current distribution)?

Thanks, I realised that I provided zero context for the figure. I added some.

- is x like the input data?

- could y correspond to something like the supervised (continuous) labels of a neural network, which inputs are matched to?

Yes. The example is about estimating y given x where x is assumed to be known.

- does epistemic uncertainty here refer to that inputs for x could be much different from the current training dataset if sampled again (where new samples could turn out to be outside of the current distribution)?

Not quite, we are still thinking of uncertainty only as applied to y. Epistemic uncertainty here refers to regions where the knowledge and data are insufficient to give a good estimate of y given x from these regions.

To compare it with your dice example, consider x to be some quality of the die such that you think dice with similar x will give similar rolls y. Then aleatoric uncertainty is high for dice where you remain uncertain about the values of new rolls even after having rolled several similar dice, and rolling more similar dice will not help. Epistemic uncertainty, by contrast, is high for dice with qualities you haven't seen enough of.

Thank you! That was clarifying especially the explanation of epistemic uncertainty for y.

1. I've been thinking about epistemic uncertainty more in terms of 'possible alternative qualities present', where

- you don't know the probability of a certain quality being present for x (e.g. what's the chance of the die having an extended three-sided base?).

- or might not even be aware of some of the possible qualities that x might have (e.g. you don't know a triangular prism die can exist).

2. Your take on epistemic uncertainty for that figure seems to be

- you know of x's possible quality dimensions (e.g. relative lengths and angles of sides at corners).

- but given a set configuration of x (e.g. triangular prism with equilateral triangle sides = 1, rectangular lengths = 2 ), you don't know yet the probabilities of outcomes for y (what's the probability of landing face up for base1, base2, rect1, rect2, rect3?).

Both seem to fit the definition of epistemic uncertainty. Do correct me here!

Edit: Rough difference in focus:

1. Recognition and Representation

vs.

2. Sampling and Prediction

Good point, my example with the figure is lacking with regard to 1, simply because we are assuming that x is known completely and that the observed y are true instances of what we want to measure. And from this I realize that I am confused about when some uncertainties should be called aleatoric or epistemic.

When I think I can correctly point out epistemic uncertainty:

- If the y that are observed are not the ones that we actually want then I'd call this uncertainty epistemic. This could be if we are using tired undergrads to count the number of pips of each rolled die and they miscount for some fraction of the dice.

- If you haven't seen similar x before then you have epistemic uncertainty because you have uncertainty about which model or model parameters to use when estimating y. (This is the one I wrote about previously and the one shown in the figure)

My confusion from 1:

- If the conditions of the experiment change. Say our undergrads start to pull dice from another bag with an entirely different distribution p(y|x); then we have insufficient knowledge to estimate y, and I would call this epistemic uncertainty.

- If x is lacking in some information to do good estimates of y. x is the color of the die and when we have thrown enough dice from our experimental distribution we get a good estimate of p(y|x) and our uncertainty doesn't increase with more rolls, which makes me think that it is aleatoric uncertainty. But on the other hand x is not sufficient to spot when we have a new type of die (see previous point) and if we knew more about the dice we could do better estimates which makes me think that it is epistemic uncertainty.

You bring up a good point in 1 and I agree that this feels like it should be epistemic uncertainty, but at some point the boundary between inherent uncertainty in the process and uncertainty from knowing too little about the process becomes vague to me and I can't really tell when a process is aleatoric or epistemic.

I also noticed I was confused. Feels like we're at least disentangling cases and making better distinctions here.

BTW, just realised that a problem with my triangular prism example is that theoretically no rectangular side can face up parallel to the floor (at any one time, just two face up at 60º angles).

But on the other hand x is not sufficient to spot when we have a new type of die (see previous point) and if we knew more about the dice we could do better estimates which makes me think that it is epistemic uncertainty.

This is interesting. This seems to ask the question 'Is a change in the quality of x like colour actually causal to outcomes y?' Difficulty here is that you can never fully be certain empirically, just get closer to [change in roll probability] for [limit number of rolls -> infinity] = 0.

To disentangle the confusion I took a look around about a few different definitions of the concepts. The definitions were mostly the same kind of vague statement of the type:

- Aleatoric uncertainty is from inherent stochasticity and does not reduce with more data.

- Epistemic uncertainty is from lack of knowledge and/or data and can be further reduced by improving the model with more knowledge and/or data.

However, I found some useful tidbits

Uncertainties are characterized as epistemic, if the modeler sees a possibility to reduce them by gathering more data or by refining models. Uncertainties are categorized as aleatory if the modeler does not foresee the possibility of reducing them. [Aleatory or epistemic? Does it matter?]

Which sources of uncertainty, variables, or probabilities are labelled epistemic and which are labelled aleatory depends upon the mission of the study. [...] One cannot make the distinction between aleatory and epistemic uncertainties purely through physical properties or the experts' judgments. The same quantity in one study may be treated as having aleatory uncertainty while in another study the uncertainty maybe treated as epistemic. [Aleatory and epistemic uncertainty in probability elicitation with an example from hazardous waste management]

In the context of machine learning, aleatoric randomness can be thought of as irreducible under the modelling assumptions you've made. [The role of epistemic vs. aleatory uncertainty in quantifying AI-Xrisk]

[E]pistemic uncertainty means not being certain what the relevant probability distribution is, and aleatoric uncertainty means not being certain what a random sample drawn from a probability distribution will be. [Uncertainty quantification]

With this my updated view is that our confusion is probably because there is a free parameter in where to draw the line between aleatoric and epistemic uncertainty.

This seems reasonable: more information can always lead to better estimates (at least down to considering wavefunctions, I suppose), but in most cases obtaining and using that kind of information is infeasible, so it makes sense for the distinction between aleatoric and epistemic to depend on the problem at hand.
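To make the two textbook definitions above a bit more concrete, here is a minimal sketch using the die example. Everything in it is my own assumption for illustration: the function name `simulate_rolls`, the uniform Beta(1, 1) prior on the chance of a six, and the choice to split the predictive variance of the next roll with the law of total variance. Under those modelling assumptions, the "epistemic" term (how unsure we are about the probability itself) shrinks as we roll more, while the "aleatoric" term (how unsure we are about the next roll even if we knew the probability) settles near p(1 - p) and stays there.

```python
import random


def simulate_rolls(n_rolls, p_six=1 / 6, seed=0):
    """Roll a die n_rolls times and split uncertainty about 'next roll is a six'.

    Assumes a Beta(1, 1) prior on the unknown probability of a six
    (an illustrative modelling choice, not something from the thread).
    Returns (posterior_mean, epistemic_var, aleatoric_var).
    """
    rng = random.Random(seed)
    sixes = sum(rng.random() < p_six for _ in range(n_rolls))

    # Conjugate update: Beta(1, 1) prior -> Beta(a, b) posterior.
    a = 1 + sixes
    b = 1 + n_rolls - sixes
    mean = a / (a + b)

    # Epistemic part: variance of our belief about p itself.
    epistemic_var = a * b / ((a + b) ** 2 * (a + b + 1))

    # Aleatoric part: expected Bernoulli variance E[p(1 - p)] under the posterior.
    aleatoric_var = mean * (1 - mean) - epistemic_var

    return mean, epistemic_var, aleatoric_var


if __name__ == "__main__":
    for n in (10, 100, 10_000):
        mean, epi, alea = simulate_rolls(n)
        print(f"n={n:>6}  mean={mean:.4f}  epistemic={epi:.6f}  aleatoric={alea:.4f}")
```

Note that the split is only "inherent vs. reducible" relative to the model: if x told us more about the die (or the throw), some of what is counted as aleatoric here would move into the epistemic column, which is the free parameter mentioned above.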

Good catch

This seems to ask the question 'Is a change in the quality of x like colour actually causal to outcomes y?'

Yes, I think you are right. Usually when modeling you can learn correlations that are useful for predictions, but if the correlations are spurious they might disappear when the distribution changes. As such, to know whether p(y|x) changes from only observing x, we would probably need x to capture all causal relationships to y?

I found it immensely refreshing to see valid criticisms of EA. I very much related to the note that many criticisms of EA come off as vague or misinformed. I really appreciated that this post called out specific instances of what you saw as significant issues, and also engaged with the areas where particular EA aligned groups have already taken steps to address the criticisms you mention.

I think I disagree on the degree to which EA folks expect results to be universal and generalizable (this is in response to your note at the end of point 3). As a concrete example, I think GiveWell/EAers in general would be unlikely to agree that GiveDirectly style charity would have similarly sized benefits in the developed world (even if scaled to equivalent size given normal incomes in the nation) without RCTs suggesting as much. I expect that the evidence from other nations would be taken into account, but the consensus would be that experiments should be conducted before immediately concluding large benefits would result.

I appreciate your thoughtful comment too, Dan.

You're right I think that I overstated EA's tendency to assume generalisability, particularly when it comes to testing interventions in global health and poverty (though much less so when it comes to research in other cause areas). Eva Vivalt's interview with 80K, and more recent EA Global sessions discussing the limitations of the randomista approach are examples. Some incubated charity interventions by GiveWell also seemed to take a targeted regional approach (e.g. No Lean Season). Also, Ben Kuhn's 'local context plus high standards theory' for Wave. So point taken!

I still worry about EA-driven field experiments relying too much, too quickly on filtering experimental observations through quantitative metrics exported from Western academia. In their local implementation, these metrics may either not track the aspects we had in mind, or just not reflect what actually exists and/or is relevant to people's local context there. I haven't heard yet about EA founders who started out by doing open qualitative fieldwork on the ground (but happy to hear examples!).

I assume generalisability of metrics would be less of a problem for medical interventions like anti-malaria nets and deworming tablets. But here's an interesting claim I just came across:

One-size-fits-all doesn’t work and the ways medicine affects people varies dramatically.

With schistosomiasis we found that fisherfolk, who are the most likely to be infected, were almost entirely absent from the disease programme and they’re the ones defecating and urinating in the water, spreading the disease.

Fair points!

I don't know if I'd consider JPAL directly EA, but they at least claim to conduct regular qualitative fieldwork before/after/during their formal interventions (source from Poor Economics, I've sadly forgotten the exact point but they mention it several times). Similarly, GiveDirectly regularly meets with program participants for both structured polls and unstructured focus groups if I recall correctly. Regardless, I agree with the concrete point that this is an important thing to do and EA/rationality folks are less inclined to collect unstructured qualitative feedback than its importance deserves.

Interesting, I didn't know GiveDirectly ran unstructured focus groups, nor that JPAL does qualitative interviews at various stages of testing interventions. Adds a bit more nuance to my thoughts, thanks!

One of GiveDirectly's blog posts on survey and focus group results, by the way.

https://www.givedirectly.org/what-its-like-to-receive-a-basic-income/

we also reinvent the wheel more.

Could you elaborate on this? Which wheels are you thinking of?

EAs invented neither effectiveness nor altruism, as Buddha, Quakers, Gandhi, Elizabeth Fry, Equiano and many others can attest!

EAs tend to be slow/behind the curve on comms, behavioural science, Implementation Science, and social science/realpolitik in general. But they do learn over time.

This is a good question hmm. Now I’m trying to come up with specific concrete cases, I actually feel less confident of this claim.

Examples that did come to mind:

- I recall reading somewhere about early LessWrong authors reinventing concepts that were already worked out before in philosophic disciplines (particularly in decision theory?). Can't find any post on this though.

- More subtly, we use a lot of jargon. Some terms were basically imported from academic research (say into cognitive biases) and given a shiny new nerdy name that appeals to our in-crowd. In the case of CFAR, I think they were very deliberate about renaming some concepts, also to make them more intuitive for workshop participants (eg. implementation intentions -> trigger action plans/patterns, pre-mortem -> murphyjitsu).

(After thinking about this, I had a call with someone who is doing academic research on Buddhist religion. They independently mentioned LW posts on 'noticing', which basically is a new name for a meditation technique that has been practiced for millennia.)

Renaming is not reinventing of course, but the new terms do make it harder to refer back to sources from established research literature. Further, some smart amateur blog authors like to synthesise and intellectually innovate upon existing research (eg. see Scott Alexander's speculative posts, or my post above ^^).

The lack of referencing while building up innovations can cause us to misinterpret and write stuff that poorly reflects previous specialist research. We're building up our own separated literature database.

A particular example is Robin Hanson's 'near-far mode', which he brought to the community from a concise and well-articulated review paper about psychological distance, and which spawned a lot of subsequent posts about implications for thinking in the community (but with little referencing to other academic studies or analyses).

E.g. Hanson's idea that people are hypocritical when they signal high-construal values but are more honest when they think concretely – a psychology researcher who seems rigorously minded said to me that he dug into Hanson's claim but that conclusions from other studies don’t support this.

- My impression from local/regional/national EA community building is that many organisers (including me) either tried to work out how to run their group from first principles, or consulted with other more experienced organisers. We could also have checked for good practices from and consulted with other established youth movements. I have seen plenty of write-ups that go through the former, but few or none that go through the latter.

Definitely give me counter-examples!

I recall reading somewhere about early LessWrong authors reinventing concepts that were already worked out before in philosophic disciplines (particularly in decision theory?). Can't find any post on this though.

See Eliezer’s Sequences and Mainstream Academia and scroll down for my comment there. Also https://www.greaterwrong.com/posts/XkNXsi6bsxxFaL5FL/ai-cooperation-is-already-studied-in-academia-as-program (According to these sources, AFAWK, at least some of the decision theory ideas developed on LW were not worked out already in academia.)

The way I've tended to think about these sorts of questions is to see a difference between the global portfolio of approaches, and our personal portfolio of approaches.

A lot of the criticisms of EA as being too narrow, and neglecting certain types of evidence or ways of thinking make far more sense if we see EA as hoping to become the single dominant approach to charitable giving (and perhaps everything else), rather than as a particular community which consists of particular (fairly similar) individuals who are pushing particular approaches to doing good that they see as being ignored by other people.

Yeah, seems awesome for us to figure out where we fit within that global portfolio! Especially in policy efforts, that could enable us to build a more accurate and broadly reflective consensus to help centralised institutions improve on larger-scale decisions they make (see a general case for not channeling our current efforts towards making EA the dominant approach to decision-making).

To clarify, I hope this post helps readers become more aware of their brightspots (vs. blindspots) that they might hold in common with like-minded collaborators – ie. areas they notice (vs. miss) that map to relevant aspects of the underlying territory.

I'm trying to encourage myself and the friends I collaborate with to build up an understanding of alternative approaches that outside groups take up (ie. to map and navigate their surrounding environment), and where those approaches might complement ours. Not necessarily for us to take up more simultaneous mental styles or to widen our mental focus or areas of specialisation. But to be able to hold outside groups' views so we get roughly where they are coming from, can communicate from their perspective, and form mutually beneficial partnerships.

More fundamentally, as human apes, our senses are exposed to an environment that is much more complex than just us. So we don't have the capacity to process our surroundings fully, nor to perceive all the relevant underlying aspects at once. To map the environment we are embedded in, we need robust constraints for encoding moment-to-moment observations, through layers of inductive biases, into stable representations.

Different-minded groups end up with different maps. But in order to learn from outside critics of EA, we need to be able to line up our map better with theirs.

Let me throw an excerpt from an intro draft on the tool I'm developing. Curious for your thoughts!

Take two principles for a collaborative conversation in LessWrong and Effective Altruism:

- Your map is not the territory:

Your interlocutor may have surveyed a part of the bigger environment that you haven’t seen yet. Selfishly ask for their map, line up the pieces of their map with your map, and combine them to more accurately reflect the underlying territory.

- Seek alignment:

Rewards can be hacked. Find a collaborator whose values align with your values so you can rely on them to make progress on the problems you care about.

When your interlocutor happens to have a compatible map and aligned values, such principles will guide you to learn missing information and collaborate smoothly.

On the flipside, you will hit a dead end in your new conversation when:

- you can’t line up their map with yours to form a shared understanding of the territory.

Eg. you find their arguments inscrutable.

- you don’t converge on shared overarching aims for navigating the territory.

Eg. double cruxes tend to bottom out at value disagreements.

You can resolve that tension with a mental shortcut:

When you get confused about what they mean and fundamentally disagree on what they find important, just get out of their way. Why sink more of your time into a conversation that doesn’t reveal any new insights to you? Why risk fuelling a conflict?

This makes sense, and also omits a deeper question: why can’t you grasp their perspective?

Maybe they don’t think the same things through as rigorously as you, and you pick up on that. Maybe they dishonestly express their beliefs or preferences, and you pick up on that. Maybe they honestly shared insights that you failed to pick up on.

Underlying each word you exchange is your perception of the surrounding territory ...

A word’s common definition masks our perceptual divide. Say you and I both look at the same thing and agree which defined term describes it. Then, we can mention this term as a pointer to what we both saw. Yet, the environment I perceive that I point the term to may be very different from the environment you perceive.

Different-minded people can illuminate our blindspots. Across the areas they chart and the paths they navigate lie nuggets – aspects of reality we don’t even know yet that we will come to care about.

Yeah, I really like this idea -- at least in principle. The idea of looking for value agreement and where do our maps (that likely are verbally extremely different) match is something that I think we don't do nearly enough.

To get at what worries me about some of the 'EA needs to consider other viewpoints' discourse (and not at all about what you just wrote), let me describe two positions:

1. EA needs to get better at communicating with non EA people, and seeing the ways that they have important information, and often know things we do not, even if they speak in ways that we find hard to match up with concepts like 'bayesian updates' or 'expected value' or even 'cost effectiveness'.

2. EA needs to become less elitist, nerdy, jargon laden and weird so that it can have a bigger impact on the broader world.

I fully embrace 1, subject to constraints about how sometimes it is too expensive to translate an idea into a discourse we are good at understanding, how sometimes we have weird infohazard type edge cases and the like.

2 though strikes me as extremely dangerous.

To make a metaphor: Coffee is not the only type of good drink, it is bitter and filled with psychoactive substances that give some people heart palpitations. That does not mean it would be a good idea to dilute coffee with apple juice so that it can appeal to people who don't like the taste of coffee and are caffeine sensitive.

The EA community is the EA community, and it currently works (to some extent), and it currently is doing important and influential work. Part of what makes it work as a community is the unifying effect of having our own weird cultural touchstones and documents. The barrier of exclusivity created by the jargon and the elitism, and the fact that it is one of the few spaces where the majority of people are explicit utilitarians, is part of what makes it able to succeed (to the extent it does).

My intuition is that an EA without all of these features wouldn't be a more accessible and open community that is able to do more good in the world. My intuition is an EA without those features would be a dead community where everyone has gone on to other interests and that therefore does no good at all.

Obviously there is a middle ground -- shifts in the culture of the community that improve our pareto frontier of openness and accessibility while maintaining community cohesion and appeal.

However, I don't think this worry is what you actually were talking about. I think you really were focusing on us having cognitive blindspots, which is obviously true, and important.

Well-written! Most of this definitely resonates for me.

Quick thoughts:

- Some of the jargon I've heard sounded plain silly from a making-intellectual-progress-perspective (not just implicit aggrandising). Makes it harder to share our reasoning, even to each other, in a comprehensible, high-fidelity way. I like Rob Wiblin's guide on jargon.

- Perhaps we put too much emphasis on making explicit communication comprehensible. Might be more fruitful to find ways to recognise how particular communities are set up to be good at understanding or making progress in particular problem niches, even if we struggle to comprehend what they're specifically saying or doing.

(I was skeptical about the claim 'majority of people are explicit utilitarians' – i.e. utilitarian not just consequentialist or some pluralistic mix of moral views – but EA Survey responses seem to back it up: ~70% utilitarian)

I left out nuances to keep the blindspot summary short and readable. But I should have specifically prefaced what fell outside the scope of my writing. Not doing so made claims come across more extreme than I meant for the more literal/explicit readers amongst us :)

So for you who still happens to read this, here’s where I was coming from:

- To describe blindspots broadly across the entire rationality and EA community.

In actual fact I see both communities more as loose clusters of interacting and affiliated people. Each gathered group somewhat diverges in how it attracts members who are predisposed towards focussing on (and reinforce each other to express) certain aspects as perceived within certain views.

I pointed out how a few groups diverge in the summary above (e.g. effective animal advocacy vs. LW decision theorists, thriving vs suffering-focussed EAs), but left out many others. Responding to Christian Kl’s earlier comment, I think how the 'CFAR alumni' cluster frames aspects meaningfully diverges from the larger/overlapping ‘long-time LessWrong fans’ cluster.

Previously, I suggested that EA staff could coordinate work more through non-EA-branded groups with distinct yet complementary scopes and purposes, so the general overarching tone of this post runs counter to that.

- To aggregate common views within which our members seemed to most often frame problems (as expressed to others involved in the community they knew also aimed to work on those problems), and to contrast those with the foci held by other purposeful human communities out there.

Naturally, what an individual human focusses on in any given moment depends on their changing emotional/mental makeup and the context they find themselves (incl. the role they then identify as having) in. I’m not e.g. claiming that when someone who aspires to be a rational researcher at work focusses on brushing their teeth at home while glancing at their romantic partner, they must nevertheless be thinking real abstract and elegant thoughts.

But for me, the exercise of mapping our ingroup's brightspots onto each listed dimension – relative to the focus of outside groups – has provided some overview. The dimensions are from a perceptual framework I gradually put together and that is somewhat internally coherent (but predictably overwhelms anyone whom I explain it to, and leaves them wondering how it's useful; hence this more pragmatic post).

I hope though no reader ends up using this as a personality test – say for identifying their or their friend's (supposedly stable) character traits to predict their resulting future behaviour (or god forbid, to explain away any confusion or disagreement they sense about what an unfamiliar stranger says).

- To keep each blindspot explanation simple and to the point:

If I already mix in a lot of ‘on one hand in this group...but on the other hand in this situation’, the reader will gloss over the core argument. I appreciate people’s comments with nuanced counterexamples though. Keeps me intellectually honest.

Hope that clarifies the post's argumentation style somewhat.

I had those three starting points at the back of my mind while writing in March. So sorry I didn't include them.

We tend to perceive the world as consisting of static parts:

- we represent + recognise an observation to be a fixed cluster (a structure)

eg. an observation’s recurrence, a place, a body, a stable identity

I don't think our rationality at the moment is very structure-based. Kahneman's view of cognitive biases and heuristics was very structure-based.

Reasoning with CFAR techniques on the other hand is more process-based. If I listen to my felt sense because I trained a lot of focusing or do double crux I'm not acting in a structure-based way.

Rationalists who detachedly and coolly model the external world

That's basically the straw Vulcan accusation. We build pillow forts.

We view individuals as independent

I don't think we do. When discussing for example FDA decisions we don't see Fauci as a person who's independent of the system in which he operates. There's the moral maze discourse which is also about individuals being strongly influenced by the system in which they are operating. Inadequate Equilibria is also not about the faults of individuals but how individuals are limited by the systems in which they operate.

future (vs. past),

We certainly care more about the future than many other communities, but we also care about the past. Progress studies and the surrounding debates are very much focused on the past. We do have Petrov day which is about remembering a past event and reminding us that there was a history of the world being at risk.

> Rationalists who detachedly and coolly model the external world

That's basically the straw Vulcan accusation. We build pillow forts.

I now see how the 'who' part of the sentence can come across as me saying that rationalists only detachedly and coolly model the external world. I do not think that is the case, based on interacting with plenty of self-ascribed rationalists myself (including making a pillow fort and hanging out with them in it). I do think rationalists do this mental move a lot more than other people I know.

Instead, I meant this as an action rationalists choose to take more often.

I just edited that sentence to 'When rationalists detachedly and coolly model...'

I do think rationalists do this mental move a lot more than other people I know.

That's a very different claim than the one in the OP. The one in the OP is about lack of diversity of mental moves and not just a claim about engaging in coolly modeling being a mental move that rationalists are capable of doing well and do frequently.

This seems to presume that a certain literal interpretation of that text is the only one that could be intended or interpreted. I don't think this is worth discussing further, so leaving it at that.

Kahneman's view of cognitive biases and heuristics was very structure-based. .... Reasoning with CFAR techniques on the other hand is more process-based.

I like this distinction, and actually agree! Have talked with a CFAR (ex-)staff member about this, who confirmed my impression that CFAR has been a compensating factor in the community across most of the perceptual/cognitive dimensions I listed. Where you and I may disagree though is that I still think the default tendency for many rationalists is to construct problems as structure-based.

I don't think we do. When discussing for example FDA decisions we don't see Fauci as a person who's independent of the system in which he operates.

Good nuances here that we don't just see individuals as independent of the system they're operating in. So there is some sense of interconnectivity there. I think you're only partially capturing what I mean with interdependence though. See other comment for my attempt to convey this.

We certainly care more about the future than many other communities, but we also care about the past. Progress studies and the surrounding debates are very much focused on the past. We do have Petrov day which is about remembering a past event and reminding us that there was a history of the world being at risk.

I agree with all these points. We seem to be on one line here.

I like this distinction, and actually agree! Have talked with a CFAR (ex-)staff member about this, who confirmed my impression that CFAR has been a compensating factor in the community across most of the perceptual/cognitive dimensions I listed. Where you and I may disagree though is that I still think the default tendency for many rationalists is to construct problems as structure-based.

There are certainly rationalists whose approach to problems is structure-based. We have a diversity of approaches.

One interesting example here is the question of diet. You find people who argue for the structure-based approach, where it's all about CICO (Calories In, Calories Out). Then you have other people who take a more process-oriented perspective out of cybernetics.

You often have people who believe in CICO who don't get that there is another way to look at the issue, but historically, for example, Eliezer did argue for the cybernetics paradigm.

When I look at my local rationality community, CFAR has a huge influence on it. I would guess that within EA you find more people who can only handle the structure-based approach. I would claim that's more because those EAs have too little exposure to the rationality community than because of flaws in the rationality community.

Good nuances here that we don't just see individuals as independent of the system they're operating in. So there is some sense of interconnectivity there. I think you're only partially capturing what I mean with interdependence though. See other comment for my attempt to convey this.

There are probably two separate issues here. One is about modeling yourself as independent and the other is about modeling other people as independent actors.

I think generally we do a decent job at modeling how other people are constrained in the choices they make by their environment but model ourselves more as independent actors. But then I'm uncertain whether anybody really looks at the way their own decision making depends on other people.

re: Processes vs Structure

Your concrete examples made me update somewhat towards process thinking being more common in AI alignment and local rationality communities than I was giving credit for. I also appreciate you highlighting that the rationality community has a diversity of approaches, and that we're not some homogenous blob (as my post might imply).

A CFAR staff member also pointed me to MIRI's logical induction paper (with input from Critch) as one of MIRI's most cited papers (i.e. at least somewhat representative of how outside people might view MIRI's work) that's clearly based on an algorithmic process.

Eliezer's AI Foom post (the one I linked to above) can be read as an explanation of how an individual agent is constructed out of a reinitiating process.

Also, there has been some interest in decision and allocation mechanisms like e.g. quadratic voting and funding (both promoted by RxC, latter also by Vitalik Buterin) which seems kinda deliberately process-oriented.

I would guess that within EA you find more people who can only handle the structure-based approach.

This also resonates, and I hadn't explicitly made that distinction yet! Particularly, how EA researchers have traditionally represented cause areas/problems/interventions to work on (after going through e.g. Importance-Tractability-Neglectedness analysis) seems quite structure-based (80K's methods for testing personal fit less so, however).

IMO CEA-hosted grantmakers also started from a place where they dissected and assessed the possible promising/unpromising traits of a person, the country or professional hub they operate from, and their project idea (based on first principles say, or track record). This was particularly the case with the EA Community Building Grant in early days. But seems to be changing somewhat in how EA Funds grantmakers are offering smaller grants in dialog with possible applicants, and assessing viability for the career aspirant to continue or for a project to expand further as they go.

I previously made a case for funding my entrepreneurial endeavour through funding processes that rely more directly on eliciting and acting on feedback. I expanded on that in a grant application to the EA Infrastructure Fund:

Personally, I think my project work could be more robustly evaluated based on

- feedback on the extent to which my past projects enabled or hindered aspiring EAs.

- the care I take in soliciting feedback from users and in passing that on to strategy coaches and evaluating funders.

- how I use feedback from users and advisors to refine and prioritise next services.

At each stage, I want to improve processes for coordinating on making better decisions. Particularly, to couple feedback with funding better:

deserved recognition

actionable feedback ⇅ - ⇅commensurate funding

improved work

I'm not claiming btw that process-based representations are inherently superior for the social good or something. Just that the value of that kind of thinking is overlooked in some of the work we do.

E.g. In this 80K interview, they made a good case for why adhering to enacting some bureaucratic process can be bad. I also thought they overlooked a point – you can make other, similarly compelling arguments for why rewarding that some previously assessed outcome or end state came into existence or was reached can be bad.

Robert Wiblin: You have to focus on outcomes, not process.

Brian Christian: Yeah. One of the examples that I give is my friend and collaborator, Tom Griffiths. When his daughter was really young, she had this toy brush and pan, and she swept up some stuff on the floor and put it in the trash. And he praised her, like “Oh, wow, good job. You swept that really well.” And the daughter was very proud. And then without missing a beat, she dumps the trash back out onto the floor in order to sweep it up a second time and get the same praise a second time. And so Tom—

Robert Wiblin: Pure intelligence.

Brian Christian: Exactly. Yeah. Tom was—

Robert Wiblin: Should be very proud.

Brian Christian: —making the classic blunder of rewarding her actions rather than the state of the kitchen. So he should have praised how clean the floor was, rather than her sweeping itself. So again, there are these surprisingly deep parallels between humans and machines. Increasingly, people like Stuart Russell are making the argument that we just shouldn’t manually design rewards at all.

Robert Wiblin: Because we just have too bad a track record.

Brian Christian: Yes. It’s just that you can’t predict the possible loopholes that will be found. And I think generally that seems right.

Robert Wiblin: Yeah. I guess we’ll talk about some of the alternative architectures later on. With the example of a human child, it’s very visible what’s going wrong, and so you can usually fix it. But I guess the more perverse cases where it really sticks around is when you’re rewarding a process or an activity within an organisation, and it’s sufficiently big that it’s not entirely visible to any one person or within their power to fix the incentives, or you start rewarding people going through the motions of achieving some outcome rather than the outcome itself. Of course rewarding the outcome can be also very difficult if you can’t measure the outcome very well, so you can end up just stuck with not really having any nice solution for giving people the right motivation. But yeah, we see the same phenomenon in AI and in human life yet again.

re: Independent vs. Interdependent

I think generally we do a decent job at modeling how other people are constrained in the choices they make by their environment but model ourselves more as independent actors. But then I'm uncertain whether anybody really looks at the way their own decision making depends on other people.

Both resonate for me.

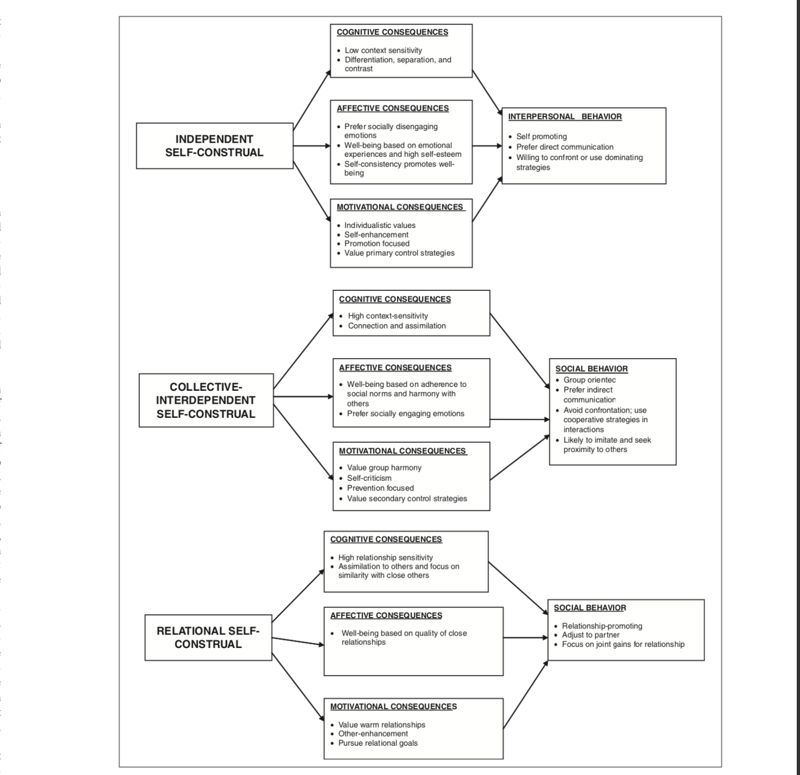

And seeing yourself as more independent than you see others does seem very human (or at least seems like what I'd do :P). Wondering though whether there's any rigorous research on this in East-Asian cultures, given the different tendency for people living there to construe the personal and agentic self as more interdependent.

One is about modeling yourself as independent and the other is about modeling other people as independent actors.

I like your distinction of viewing yourself vs. another as an independent agent.

Some other person-oriented ways of representing that didn't make it into the post:

- Identifying an in(ter)dependent personal self with respect to the outside world.

- Identifying an in(ter)dependent relation attributable to 'you' and/or to an identified social partner.

- Identifying a collective of related people as in(ter)dependent with respect to the outside world.

- and so on...

Worked those out using the perceptual framework I created, inspired by and roughly matching up with the categories of a paper that seems kinda interesting.

Returning to the simplified two-sided distinction I made above, my sense is still that you're not capturing it.

There's a nuanced difference between the way most of your examples are framed, and the framing I'm trying to convey. Struggling to, but here's another attempt!

Your examples:

When discussing for example FDA decisions we don't see Fauci as a person who's independent of the system in which he operates.

There's the moral maze discourse which is also about individuals being strongly influenced by the system in which they are operating

But then I'm uncertain whether anybody really looks at the way their own decision making depends on other people.

Each of those examples describes an individual agent as independent vs. 'not independent', i.e. dependent.

Dependence is unlike interdependence (in terms of what you subjectively perceive in the moment).

Interdependence involves holding both/all in mind at the same time, and representing how the enduring existence of each is conditional upon both themselves and the other/s. If that sounds wishy-washy and unintuitive, then hope you get my struggle to explain it.

You could sketch out a causal diagram, where one arrow shows the agent affecting the system, and a parallel arrow shows the system affecting the agent back. That translates as "A independently wills a change in S; S depends on A" in framing 1, "S independently causes a change in A; A depends on S" in framing 2.

Then, when you mentally situate framing 2 next to framing 1, that might look like you actually modelled the interdependence of the two parts.

That control loop seems deceptively like interdependence, but it's not interdependence in the richer sense I'm pointing to with the word. It's what these speakers are trying to point out when they talk vaguely about system complexity vs. holistic complexity.

Cartesian frames seem like a mathematically precise way of depicting interdependence. Though this also implicitly imports an assumption of self-centricity: a model in which compute is allocated towards predicting the future as represented within a dualistic ontology (consisting of the embedded self and the environment outside of the self).
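For readers unfamiliar with the formalism, a toy sketch may make this concrete. A Cartesian frame pairs a set of agent options A with a set of environment options E, plus an evaluation function from A × E to possible worlds W. The umbrella/rain options and the `agent_can_ensure` / `env_can_ensure` helper names below are made-up assumptions for illustration only, not taken from the Cartesian frames write-up. The point is just that the resulting world is fixed by the pair, not by either side alone.

```python
from itertools import product

# Hypothetical toy frame; all option names and outcomes are illustrative assumptions.
AGENT_OPTIONS = ("carry_umbrella", "no_umbrella")  # A: ways the agent can be
ENV_OPTIONS = ("rain", "sun")                      # E: ways the environment can be


def evaluate(a: str, e: str) -> str:
    """Evaluation function A x E -> W: returns a possible world."""
    if e == "rain":
        return "dry" if a == "carry_umbrella" else "wet"
    return "dry"


def frame_table() -> dict:
    """The whole frame as a lookup table keyed by (agent option, env option)."""
    return {(a, e): evaluate(a, e) for a, e in product(AGENT_OPTIONS, ENV_OPTIONS)}


def agent_can_ensure(worlds: set) -> bool:
    """True if some agent option lands in `worlds` whatever the environment is."""
    return any(
        all(evaluate(a, e) in worlds for e in ENV_OPTIONS) for a in AGENT_OPTIONS
    )


def env_can_ensure(worlds: set) -> bool:
    """Same question asked from the environment's side."""
    return any(
        all(evaluate(a, e) in worlds for a in AGENT_OPTIONS) for e in ENV_OPTIONS
    )


if __name__ == "__main__":
    for pair, world in frame_table().items():
        print(pair, "->", world)
    print("agent can ensure dry:", agent_can_ensure({"dry"}))
    print("agent can ensure wet:", agent_can_ensure({"wet"}))
```

In this toy frame neither side can bring about "wet" alone: it only occurs for the combination of `no_umbrella` and `rain`, which is the kind of contingency on both parts that the interdependent framing tries to keep in view.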

Brian Christian's example is interesting. It seems to suggest that the process and the outcome are the only two things one could focus on. Leslie Cameron-Bandler et al. argue in one example in the Emprint Method that in good parenting the focus is not on the past (and the process or outcome of the past) but on the future. (It's generally a good book for people who want to understand what ways there are to think and make decisions.)

I spoke imprecisely above when I linked Fauci and the FDA. Fauci leads the NIAID. He has some influence on it but he's also largely influenced by it. That seems to me to be interdependence.

I will listen to the talk later and maybe write more then.

Let me google the Emprint Method. The idea of focus on past vs. future in rewarding/encouraging makes intuitive sense to me though.

I haven’t actually heard of Fauci or discussions around him, but appreciate the clarification! Note again, I’m talking about a way you perceive interdependence (not to point to the elements needed for two states to be objectively described as interdependent).

To clarify the independent vs. interdependent distinction

Julia suggested that EA thought about negative flow-through effects is an example of interdependent thinking. IMO EAs still tend to take an independent view on that. Even I did a bad job above of describing causal interdependencies in climate change (since I still placed the causal sources in a linear 'this leads to this leads to that' sequence).

So let me try to clarify again, at the risk of going metaphysical:

- EAs do seem to pay more attention to causal dependencies than I was letting on, but in a particular way:

- When EA researchers estimate impacts of specific flow-through effects, they often seem to have in mind some hypothetical individual who takes actions, which incrementally lead to consequences in the future. Going meta on that, they may philosophise about how an untested approach can have unforeseen and/or irreversible consequences, or about cluelessness (not knowing how the resulting impacts spread out across the future will average out). Do correct me if you have a different impression!

- An alternate style of thinking involves holding multiple actors / causal sources in mind to simulate how they conditionally interact. This is useful for identifying root causes for problems, which I don't recall EA researchers doing much of (e.g. the sociological/economic factors that originally made commercial farmers industrialise their livestock production).

- To illustrate the difference, I think gene-environment interactions provide a neat case:

- Independent 'this or that' thinking:

- Hold one factor constant (e.g. take the different environments in which adopted twins grew up as a representative sample) to predict the other (e.g. attribute 50% of variation of a general human trait to their genes).

- Interdependent 'this and that' thinking:

- Assume that factors will interplay, and therefore probabilities are not strictly independent.

- Test nonlinear factors together to predict outcomes.

- e.g. on/off gene for aggression × childhood trauma × teenagers playing violent video games

- Cartesian frames seem an apt theoretical analogy

- "A represents a set of possible ways the agent can be, E represents a set of possible ways the environment can be, and ⋅ : A × E → W is an evaluation function that returns a possible world given an element of A and an element of E"

- Under the interdependent framing, the environment affords certain options perceivable by the agent, which they choose between.

- A notion of Free Will loses its relevancy under this framing. Changes in the world were caused neither by the settings of the outside environment nor the embedded agent 'willing' an action, but rather as contingent on both.

- You might counter: isn't the agent's body constituted of atomic particles that act and react deterministically over time, making free will an illusion?

- Yes, and somehow in parts interacting across parts, they come to view the constitution of a greater whole, an agent, that makes choices.

- None of these (admittedly confusing) framings have to be inconsistent with each other.

- Overlap between 'interdependent thinking' and 'context' and 'collective thinking'.

- When individuals with their own distinct traits are constrained in the possible ways they can interact by surrounding others (i.e. by their context), they will behave predictably within those constraints:

- e.g. when EAs stick to certain styles of analysis that they know comrades will grasp and admire when gathered at a conference or writing a post for others to read.

- Analysis of the kind 'this individual agent with x skills and y preferences will take/desist from actions that are more likely to lead to z outcomes' falls flat here.

- e.g. to paraphrase Critch's Production Web scenario, which typical AI Safety analysis tends to overlook the severity of:

- Take a future board that buys a particular 'CEO AI service' to ensure their company will be successful. The CEO AI elicits trustees for their inherent categorical preferences, but what they express at any given moment is guided by their recent interactions with influential others (e.g. the need to survive tougher competition by other CEO AIs). A CEO AI that plans company actions based on preferences elicited from board members at any given point in time will by default not account for actions bringing into existence processes that actually change the preferences board members state. That is, unless safety-minded AI developers design a management service that accounts for this circuitous dynamic, and boards are self-aware enough to buy the less-profit-optimised service that won't undermine their personal integrity.

- The risk emerges from how the AI developers and company's board introduce assumptions of structure:

- i.e. That you can design an AI to optimise for end states based on its human masters' identified intrinsic preferences. That AI would fail to use available compute to determine whether a chosen instrumental action reinforces a process through which 'stuff' contingently gets flagged in human attention, expressed to the AI, received as inputs, and derived as 'stable preferences'.
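Going back to the gene-environment case above, the difference between the two framings can be sketched in a few lines. Everything here is a made-up assumption for illustration (the two toy models, the effect sizes, the function names); it is not drawn from any study. Under the additive model, the effect of the gene is the same whatever the environment, so "x% is due to genes" has a stable meaning. Under the interaction model, the gene's effect depends on the environment, so there is no single share to attribute.

```python
def additive_outcome(gene: int, trauma: int) -> float:
    """Independent 'this or that' framing: each factor adds a fixed amount.

    The effect sizes (1.0 each) are arbitrary illustrative assumptions.
    """
    return 1.0 * gene + 1.0 * trauma


def interactive_outcome(gene: int, trauma: int) -> float:
    """Interdependent 'this and that' framing: the trait only shows up
    when both factors are present (a purely multiplicative toy model)."""
    return 2.0 * gene * trauma


def gene_effect(model, trauma: int) -> float:
    """Change in outcome from switching the gene on, holding trauma fixed."""
    return model(1, trauma) - model(0, trauma)


if __name__ == "__main__":
    for name, model in [("additive", additive_outcome),
                        ("interactive", interactive_outcome)]:
        effects = [gene_effect(model, trauma) for trauma in (0, 1)]
        print(name, "gene effect without/with trauma:", effects)
```

Holding one factor constant gives the same answer in both environments for the additive model, but two different answers (none vs. all of the effect) for the interaction model, which is why testing the factors together matters.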

Two people asked me to clarify this claim:

Going by projects I've coordinated, EAs often push for removing paper conflicts of interest over attaining actual skin in the game.

Copying over my responses:

re: Conflicts of interest:

My impression has been that a few people appraising my project work looked for ways to e.g. reduce Goodharting, or the risk that I might pay myself too much from the project budget. Also EA initiators sometimes post a fundraiser write-up for an official project with an official plan, that somewhat hides that they're actually seeking funding for their own salaries to do that work (the former looks less like a personal conflict of interest *on paper*).

re: Skin in the game:

Bigger picture, the effects of our interventions aren't going to affect us in a visceral and directly noticeable way (silly example: we're not going to slip and fall from some defect in the malaria nets we fund). That seems hard to overcome in terms of loose feedback from far-away interventions, but I think it's problematic that EAs also seem to underemphasise skin in the game for in-between steps where direct feedback is available. For example, EAs sometimes seem too ready to pontificate (me included) about how particular projects should be run or what a particular position involves, rather than rely on the opinions/directions of an experienced practitioner who would actually suffer the consequences of failing (or even be filtered out of their role) if they took actions that had negative practical effects for them. Or they might dissuade someone from initiating an EA project/service that seems risky to them in theory, rather than guide the initiator to test it out locally to constrain or cap the damage.

This interview with Jacqueline Novogratz from Acumen Fund covers some practical approaches to attain skin in the game.

Lighter reading here.

Update: appreciating Scott Alexander’s humble descriptions in his recent Criticism of Criticism of Criticism post. A clarification I need to make is that the blindspot-brightspot distinctions below are not about prescribing ‘EAs’ to be e.g. less individualistic (although there is an implicit preference, with non-elaborated-on reasoning, which Scott also seems to have in the other direction). The distinctions are attempts at categorising where (covering aspects of the environment) people involved in our broader community tend to focus more (‘brightspots’) relative to other communities, as well as what corresponding representational assumptions we are making in our mental models, descriptions, and explanations. The distinctions also form a basis for prescribing the community to not just make hand-wavy gestures of ‘we should be open to criticism’, but to actually zone in on different aspects that other communities notice and could complement our sensemaking in, if we manage to build epistemic bridges to their perspectives. I.e. listen in a way where we do not keep misinterpreting what they are saying within our default frames of thinking (criticism is not useful if we keep talking past each other). I highlighted where we are falling short and other communities could contribute value.

Last month, Julia Galef interviewed Vitalik Buterin. Their responses to Glen Weyl’s critiques of the EA community struck me as missing perspectives he had tried to raise.

So I emailed Julia to share my thoughts. Cleaned-up text below:

Julia graciously replied that she was interested in a summary of what I think EAs might be missing, or how in particular our views on philanthropy might be biased due to lack of exposure to other cultures/communities.

I emailed her a summary of my upcoming sequence (edit: instead writing up new AGI-misalignment arguments that very much fall in attentional blindspot 1 to 6) – about a tool I’m developing to map group blindspots. It was tough to condense 75 pages of technical explanation clearly into 7 pages, so bear with me (comment)! I refined the text further below:

If you got this far, I’m interested to hear your thoughts! Do grab a moment to call so we can chat about and clarify the concepts. Takes some back and forth.