I felt kinda sad when I learned Dath Ilan is supposed to have a median IQ similar to Eliezer's, in addition to sharing more memetics and intellectual assumptions. Previously I had felt like Dath Ilan was an interesting dream of how Earth could be if only things worked out a bit different, and now it feels a bit more like a Mary Sue world to me.

Yes, I had a similar reaction. It puts one in mind of this classic synopsis of Robert Heinlein’s The Moon is a Harsh Mistress:

Robert A. Heinlein: I have a plan for the perfect revolution.

Reader: Interesting, tell me more.

Robert A. Heinlein: Step One: Live on the moon.

Reader: Uh....

Robert A. Heinlein: Step Two: Discover an omnipresent sentient computer.

Reader: sigh

I still feel a bit sad-as-previously-described, but I updated slightly back when I realized (I think?) that the reason Dath Ilani are so smart is because they've been doing eugenics for a while. So, it's sort of a vision of how Earth could be if we'd gotten started rolling towards the Civilizational Competence Attractor several centuries ago, which isn't as inspiring now in the ways I originally wanted but is kinda cool.

I'm assuming the downvotes are from people interpreting the parent comment as 'vague positive affect for eugenics.'

I do want to note that dath ilan eugenics is entirely "the government pays extra to help smart and altruistic people have more children", not "the government prevents anyone from reproducing". Also, a central plot point of the story is that the main character is someone the government didn't want to pay to help have more children, and is annoyed about it, so it's not like the story doesn't engage with the question of whether that's good.

(This comment really made me laugh, I have not seen this synopsis before. I do love the book, I read it at a hard time in my life, it inspired me a great deal.)

I kind of wonder if this served as inspiration, for Eliezer to create dath ilan...

"Listen carefully, because I will only say this once.

Look. Emiya Shirou has no chance of winning if it comes down to fighting.

None of your skills will be any use against a Servant."

[...]

"In that case, at least imagine it. If it is an opponent you cannot match in real life, beat it in your imagination.

If you cannot beat it yourself, imagine something that you could beat it with.

—After all, that is the only thing you can do."

[...]

I should not forget those words.

I think that what this man is saying is something that should never be forgotten—

For those unfamiliar with Fate/stay night, imagining something that you could beat it with is a veiled reference to an actual important technique, which Shirou eventually figures out.

Anyway, it is plausible that one of the best ways to get something done that is new, complicated, ambitious, and requires many people's cooperation is to imagine it in detail and lay out how it might work, in fiction. Draw the end state and let that motivate people to work towards it. Of course, it's not guaranteed that things that appear to work in a fictional universe would actually work in practice, but the fiction can make its case (there's wide variation in how thoroughly works of fiction explore their premises), and if it turns up something that seems desirable and plausible, readers can take a serious look into its feasibility.

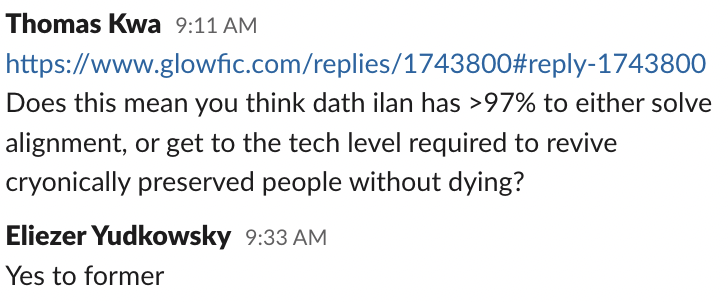

Dath ilan prediction markets say they have a 97% chance of reviving cryonically preserved people, implying that they have a >97% chance of solving alignment.

Conditional on alignment being really tricky, I would expect Dath ilan to figure this out and not create an unaligned AI. I would also expect them to eventually develop some form of reviving technology.

If the idea of AGI is supposed to be a major infohazard, so much so that the majority of the population does not know about it, you cannot use prediction markets for cryonics as a proxy for solving AGI alignment. Most people who invest in this particular prediction market are blind to the relevant information. Unless the Keepers have the money to outbid everyone? Then it's not a prediction market anymore, it's a press statement from the Keepers.

Worse than that, the relationship between "actually solving alignment" and "predicting revival" is very weak. The most obvious flaw is that they might succeed in reviving the Preserved, but without having developed aligned AGI. Being careful, they don't develop unaligned AGI either. This scenario could easily account for almost all of the 97% assessed probability of successful revival.

There are other flaws as well, any of which would be consistent with <1% chance of ever solving alignment.

Given the sheer complexity of the human brain, it seems very unlikely anyone could possibly assess a 97% probability of revival without conditioning on AI of some strong form, if not a full AGI.

It seems very unlikely that anyone could credibly assess a 97% probability of revival for the majority at all, if they haven't already successfully carried it out at least a few times. Even a fully aligned strongly superhuman AGI may well say "nope, they're gone" and provide a process for preservation that works instead of whatever they actually did.

I think we're supposed to assume as part of the premise that dath ilan's material science and neuroscience are good enough that they got preservation right - that is, they are justly confident that the neurology is preserved at the biochemical level. Since even we are reasonably certain that that's the necessary data, the big question is just whether we can ever successfully data mine and model it.

I expect dath ilan has some mechanism that makes this work. Maybe it's just that ordinary people won't trade because the Keepers have better information than them. In any case, I had messaged Eliezer and he seems to agree with my interpretation.

If Keepers use their secret information to bet well, then that is a way for the secrets to leak out. So flaunting their ability and keeping confidentiality are in conflict there. I wouldn't put it past the Keepers to be able to model "What would I think if I did not possess my secret information?" to a very fine level of detail, but then that judgement is not the full-blown judgement or concern that the person is capable of.

But this prediction market is exactly the one case where, if the Keepers are concerned about AGI existential risk, signalling to the market not to do this thing is much much more important than preserving the secret. Preventing this thing is what you're preserving the secret for; if Civilization starts advancing computing too quickly the Keepers have lost.

To deceive in a prediction market is to change the outcome. In this case in the opposite of the way the Keepers want. The whole point of having the utterly trustworthy reputation of the Keepers is so that they can make unexplained bids that strongly signal "you shouldn't do this and also you shouldn't ask too hard why not" and have people believe them.

Hasn't Eliezer Yudkowsky largely failed at solving alignment and getting others to solve alignment?

And wasn't he largely responsible for many people noticing that AGI is possible and potentially highly fruitful?

Why would a world where he's the median person be more likely to solve alignment?

In a world where the median IQ is 143, the people at +3σ are at 188. They might succeed where the median fails.
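The 188 figure follows if the standard deviation is the conventional 15 points, which the comment does not state. A minimal sketch of that arithmetic, where the standard deviation, the Earth mean of 100, and the normality of the distribution are all assumptions:

```python
from statistics import NormalDist

DATH_ILAN_MEDIAN = 143  # median IQ quoted in the comment above
SD = 15                 # assumption: conventional IQ standard deviation
EARTH_MEAN = 100        # assumption: conventional Earth norming


def iq_at(sigmas: float, center: float = DATH_ILAN_MEDIAN, sd: float = SD) -> float:
    """IQ score lying `sigmas` standard deviations above `center`."""
    return center + sigmas * sd


# Score at +3 sigma on the dath ilan distribution.
plus_three = iq_at(3)

# Share of a normally distributed population above +3 sigma.
share_above = 1 - NormalDist().cdf(3)

# Where the dath ilan median would sit on the Earth scale, in Earth sigmas.
median_in_earth_sigmas = (DATH_ILAN_MEDIAN - EARTH_MEAN) / SD

print(plus_three)
print(f"{share_above:.5f}")
print(f"{median_in_earth_sigmas:.2f}")
```

Under these assumptions roughly one person in 740 sits above the +3σ mark, so the point is about a thin tail rather than a large group.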

I don't think a lack of IQ is the reason we've been failing at making AI sensibly. Rather, it's a lack of good incentive making.

Making an AI recklessly is currently much more profitable than not doing so, which imo shows a flaw in the efforts that have gone towards making AI safe: not accepting that some people have a very different mindset, beliefs, or core values, and not figuring out a structure or argument that would incentivize people across a broad range of mindsets.

When the idea of AGI was first discovered "a few generations" ago, the world government of dath ilan took action to orient their entire civilization around solving the alignment problem, including getting 20% of their researchers to do safety research, and slowing the development of multiple major technologies. AGI safety research has been ongoing on dath ilan for generations.

This seems wrong. There's a paper that notes 'this problem wasn't caught by NASA. It was an issue of this priority, in order to catch it, we would have had to go through all the issues at that level of priority (and the higher ones)'. This seems like it applies. Try 'x-risk' instead of AGI. Not researching computers (risk: AI?), not researching biotech* (risk: gain of function?), etc. You might think 'they have good coordination!' Magically, yes - so they're not worried about biowarfare. What about (flesh eating) antibiotic resistant bacteria?

Slowing research across the board in order to deal with unknown unknowns might seem unnecessary, but at what point can you actually put brakes on?

That certainty for that far out future is indeed superheated ridiculous. It feels like with their publicly knowable proficiencies that would be unachievable. They have something like "we have time travel and have direct eyewitness testimony that we are going to be okay in year X" or "we know the world is a simulation and we can hack it if need be" or something equally exotic.

It’s not particularly obvious that the future is more than, say, a century, and if AI / nukes / bioterrorism were addressed then I’d also probably be 97% on no apocalypse?

If 3% is spent just on being able to keep working, then it only goes down from there when it comes to making particular achievements. One could ponder, given infinite working time, what the chance is that humans get quantum gravity. And it's not only about the tech being made but also about the current patients being able to benefit from it. No political upheavals or riots that would compromise the bodily safety of millions of patients for centuries or millennia? They think they can figure out resurrection without knowing what principles apply? That is like saying "I will figure out antimatter bombs" without knowing what an atom is.

Riots or upheavals which damage the cryonically suspended seem too unlikely to be an issue to dath ilan? And it’s not like the resurrection is going to take new laws of physics, it’s just a very tricky engineering challenge which they’re probably planning to put the AGI on?

Upheavals could include things like a political faction rising that wants to introduce a new form of punishment: administered True Death. And since they have done it before, if they ever need to do a total screening of the past again, that is next to impossible to achieve with popsicle relics around. It would probably be a looked-down-upon supervillain, but out of the whole population, does not one person have the explicit goal of sabotaging the cryopatients? The number of murders that don't use the head removal service is non-zero, after all.

Supernovas or asteroids could make it tricky to keep the lights on. With a couple of centuries passing and no Future having arrived, the doubt that it's going to happen might creep in a little differently. At some point the warm population is going to be tiny compared to the popsicles.

These are good reasons to have a probability of 97% rather than 99.9% but I'm really not convinced that anything you've described has a substantially higher than 3% chance of happening.

Some of the concerns you're bringing up are obviously-to-me-and-therefore-Keepers nowhere near sufficient to argue the chance is under 97%. The Precipice puts x-risk due to asteroids/comets at 1/100K per millennium and stellar explosion at 1/100M. (The other natural risk, supervolcanos, it puts at 1/1000. I believe Eliezer has said that dath ilan is diligently monitoring supervolcanos.)
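For a rough sense of scale, the three per-millennium figures quoted above can be combined. This is a minimal sketch that assumes the risks are independent and constant over time; the ten-millennium horizon is a hypothetical chosen only for illustration, not something stated in the thread.

```python
import math

# Per-millennium natural risks as quoted from The Precipice in the comment above.
PER_MILLENNIUM = {
    "asteroid_or_comet": 1 / 100_000,
    "stellar_explosion": 1 / 100_000_000,
    "supervolcano": 1 / 1_000,
}


def any_of(probabilities):
    """Chance that at least one of several independent events occurs."""
    return 1 - math.prod(1 - p for p in probabilities)


def over_horizon(per_period, periods):
    """Chance of at least one occurrence across `periods` identical periods."""
    return 1 - (1 - per_period) ** periods


natural_per_millennium = any_of(PER_MILLENNIUM.values())
natural_over_ten = over_horizon(natural_per_millennium, 10)  # hypothetical horizon

print(f"{natural_per_millennium:.5f}")
print(f"{natural_over_ten:.4f}")
```

On these assumptions the total is dominated by supervolcanoes and stays well under the 3% budget even over ten millennia, and that is before any mitigation from monitoring.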

One year the Petrov Day button was pressed because a direct message had the word "admin" in the sending user's name. The challenge is not that the threats are especially bad but that there are many kinds of them. Claims like "nothing bad happens to the website" are extremely disjunctive. If even one of the things I would be worried about had a 3% chance of happening, and if it happened it would spell doom, then that would be enough. If there were 1000 things that threatened, then on average each would only need a 0.003% chance of happening. There are so many more failure modes for a civilization than a website.
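The 0.003% figure is the 3% budget divided evenly across 1000 threats. A minimal sketch of that calculation, assuming the threats are independent, equally likely, and each individually fatal (none of which the comment states), with an exact version for comparison:

```python
BUDGET = 0.03      # total failure probability implied by the 97% figure
N_THREATS = 1000   # number of distinct threats posited in the comment above


def per_threat_linear(budget: float, n: int) -> float:
    """Simple division, as in the comment: budget spread evenly over n threats."""
    return budget / n


def per_threat_exact(budget: float, n: int) -> float:
    """Per-threat probability p such that 1 - (1 - p)**n equals the budget."""
    return 1 - (1 - budget) ** (1 / n)


def total_risk(p: float, n: int) -> float:
    """Chance that at least one of n independent threats with probability p occurs."""
    return 1 - (1 - p) ** n


linear = per_threat_linear(BUDGET, N_THREATS)
exact = per_threat_exact(BUDGET, N_THREATS)

print(f"{linear:.6%}")
print(f"{exact:.6%}")
print(f"{total_risk(linear, N_THREATS):.4%}")
```

The linear and exact answers differ only slightly at probabilities this small, so the disagreement in the thread is about how many such threats exist, not about the arithmetic.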

This analogy seems kind of silly. Dath ilan isn’t giving people a “destroy the cryonics facilities” button. I agree the 3% probably consists of a lot of small things that add up, but I don’t think there’s obviously >1000 black swans with a >3%/1000 probability of happening.

I, for one, remember reading the number 97%, and thinking "Only 97%? Well, I guess there are some things that could still go wrong, outside of anyone's control".

But if you think that number is completely ridiculous, you may be severely underestimating what a competent, aligned civilization could do.

Out of curiosity, what number do you think a maximally competent human civilization living on Earth with a 1980s level of technology could accomplish, when it comes to the probability of surviving and successfully aligning AI? 90%? 80%? 50%? 10%? 1%? 0%?

"Maximally competent" there calls for a sense of possibility, and how I read it makes it contrast starkly with specifying that the tech level would be 1980. They would just instantly jump to at least 2020. Part of the speculation in the "Planecrash" continuity is about how Keltham being put in a medieval setting would be starkly transformative, i.e. injecting outside-setting competence is setting-breaking.

Dath ilani are still human and make errors and need to partly rely on their clever coordination schemes. I think I am using a heuristic about staying in your powerlevel even if the powerlevel is high. You should be in a situation where you could not predict the directions of your updates. And the principle that if you can expect to be convinced you can just be convinced now. So if you currently do not have a technology you can't expect to have it. "If I knew what I was doing it would not be called research". You don't know what you don't know.

On the flipside, if the civilization is competent to survive for millennia then almost any question will be cracked. But then I could apply that logic to predict that they will crack time travel. For genuine future technologies we are almost definitionally ignorant about them. So anything outside the range of 40-60% is counterproductive hubris (in my lax, I don't actually know how to take probabilities that seriously scale).

There's a difference between "technology that we don't know how to do but it's fine in theory", "technology that we don't even know if it's possible in principle" and "technology that we believe isn't possible at all". Uploading humans is the first; we have a good theoretical model for how to do it and we know physics allows objects with human brain level computing power.

Time travel is the latter.

It's perfectly reasonable for a civilisation to estimate that problems of the first type will be solved without becoming thereby committed to believing in time travel. Being ignorant of a technology isn't the same as being ignorant of the limits of physics.

I'm confused why this is getting downvotes. Maybe because the title was originally "Yudkowsky: dath ilan has a 97% chance to solve alignment" which is misleading to people who don't know what dath ilan is?

I originally downvoted because I couldn't figure out the point. The title definitely contributed to that, though not for the reason you suggest: rather, the title made it sound like this fact about a fictional world was the point, in the same way that you would expect to come away from a post titled "Rowling: Wizarding Britain has a GDP of 1 million Galleons" pretty convinced about a fictional GDP but with possibly no new insights on the real world. I think the new title makes it a bit clearer what one might expect to get out of this: it's more like "here's one (admittedly fictional) story of how alignment was handled; could we do something similar in our world?". I'm curious if that's the intended point? If so then all the parts analyzing how well it worked for them (e.g. the stuff about cryonics and 97%) still don't seem that relevant: "here's an idea from fiction you might consider in reality" and "here's an idea from fiction which worked out for them you might consider in reality" provide me almost identical information, since the valuable core worth potentially adopting is the idea, but all discussion of whether it worked in fiction tells me extremely little about whether it will work in reality (especially if, as in this case, very little detail is given on why it worked in fiction).

The "dying with dignity" post reinforces the pattern that Eliezer uses April Fools' for things which cannot be constructively discussed as fact. With a sufficiently low-plausibility fact, maintaining belief in the other party's rationality is infeasible, so it might as well be processed as fiction. "I am not saying this" can also be construed as a method of making readers sink more deeply into fiction in trying to maintain plausibility. It does trigger false positives for using fiction as evidence.

I do not want gathering evidence for UFOs to be impossible because it would be binned into fiction. "I didn't see anything but if I would have seen something here is what I would have seen...". If there were an alien injunction, I would wish it to be possible to detect. But in the same vein, people process very low odds badly enough that "stay out of low-probability considerations" is a prime candidate for the best advice about it.

Thanks, I made minor edits to clarify the post.

I think the case for why dath ilan is relevant to the real world basically rests on two pieces of context which most people don't have:

- Yudkowsky mostly writes rationalfic, so dath ilan is his actual expectations of what would happen if he were the median person, not just some worldbuilding meant to support a compelling narrative.

- Yudkowsky tries pretty hard to convey useful thoughts about the world through his fiction. HPMOR was intended as a complement to the Sequences. Glowfic is an even weirder format, but I've heard Yudkowsky say that he can't write anything else in large volume anymore, and glowfic is an inferior substitute still meant to edify. Overall, I'd guess that these quotes are roughly 30% random irrelevant worldbuilding, and 70% carefully written vignette to convey what good coordination is actually capable of.

I'm not down or upvoting, but I will say, I hope you're not taking this exercise too seriously...

Are we really going to analyze one person's fiction (even if rationalist, it's still fiction), in an attempt to gain insight into this one person's attempt to model an entire society and its market predictions – and all of this in order to try and better judge the probability of certain futures under a number of counterfactual assumptions? Could be fun, but I wouldn't give its results much credence.

Don't forget Yudkowsky's own advice about not generalizing from fictional evidence and being wary of anchoring. If I had to guess, some of his use of fiction is just an attempt to provide alternative framings and anchors to those thrust on us by popular media (more mainstream TV shows, movies etc). That doesn't mean we should hang on his every word though.

Yeah, I think the level of seriousness is basically the same as if someone asked Eliezer "what's a plausible world where humanity solves alignment?" to which the reply would be something like "none unless my assumptions about alignment are wrong, but here's an implausible world where alignment is solved despite my assumptions being right!"

The implausible world is sketched out in way too much detail, but lots of usefulness points are lost by its being implausible. The useful kernel remaining is something like "with infinite coordination capacity we could probably solve alignment" plus a bit because Eliezer fiction is substantially better for your epistemics than other fiction. Maybe there's an argument for taking it even less seriously? That said, I've definitely updated down on the usefulness of this given the comments here.

I downvoted for the clickbait title, for making obviously wrong inferences from quoted material, and also for being about some fiction instead of anything relevant to the real world. In these quotes Eliezer is not claiming that his fictional dath ilan has a 97% chance of solving alignment, and even if he were, so what?

Some of those are valid reasons to downvote, but

In these quotes Eliezer is not claiming that his fictional dath ilan has a 97% chance of solving alignment

Eliezer certainly didn't say that in anything quoted in the original post, and what he did write in that glowfic does not imply it. He may hold that dath ilan has a 97% chance to solve alignment, but that's an additional claim about his fictional universe that does not follow from what his character Keltham said.

The combination of both statements also strains my suspension of disbelief in his setting even further than it already is. Either one alone is bad enough, but together they imply a great deal more than I think Eliezer intended.

Seeing the relative lack of pickup in terms of upvotes, I just want to thank you for putting this together. I’ve only read a couple of Dath Ilan posts, and this provided a nice coverage of the AI-in-Dath-Ilan concepts, many of the specifics of which I had not read previously.

I was reading the story for the first quotation entitled "The discovery of x-risk from AGI", and I noticed something around the quotation that doesn't make sense to me, and I'm curious if anyone can tell what Eliezer Yudkowsky was thinking. As referenced in a previous version of this post, after the quoted scene the highest Keeper commits suicide. Discussing the impact of this, EY writes,

And in dath ilan you would not set up an incentive where a leader needed to commit true suicide and destroy her own brain in order to get her political proposal taken seriously. That would be trading off a sacred thing against an unsacred thing. It would mean that only true-suicidal people became leaders. It would be terrible terrible system design.

So if anybody did deliberately destroy their own brain in attempt to increase their credibility - then obviously, the only sensible response would be to ignore that, so as not create hideous system incentives. Any sensible person would reason out that sensible response, expect it, and not try the true-suicide tactic.

The second paragraph is clearly a reference to acausal decision theory: people making a decision because of how they anticipate others will react to expecting that this is how they make the decision, rather than because of the direct consequences of the decision. I'm not sure if it really makes sense (a self-indulgent reminder that nobody knows any systematic method for producing prescriptions from acausal decision theories in everyday-life cases where they purportedly differ from causal decision theory). Still, it's fiction, I can suspend my disbelief.

The confusing thing is that in the story the actual result of the suicide is exactly what this passage says shouldn't be the result. It convinces the Representatives to take the proposal more seriously and implement it. This passage is just used to illustrate how shocking the suicide was; no additional considerations are described for why the reasoning is incorrect in those circumstances. So it looks like the Representatives are explicitly violating the Algorithm which supposedly underlies the entire dath ilan civilization and is taught to every child at least in broad strokes, in spite of being the second-highest ranked governing body of dath ilan.

There is a bit of "and to assent to the proposed change, under that protocol, would be setting up the wrong system incentives in the world that was most probably the case." which kind of implies that there might be a case where the wrong system incentives are not a serious enough downside to advise against it.

It might rely a lot on the idea that a Keeper would not frivolously commit suicide to no effect. I don't know exactly what makes people think that Keepers are sane, but the logic by which the external circumstances would point to the person being insane is supposed to put the two in tension. And because "Keepers are sane" is so much more strongly believed, it makes the update of "things are real bad" go from trivial to significant.

Summary

The discovery of x-risk from AGI

How dath ilan deals with the infohazard of AGI

https://www.lesswrong.com/posts/gvA4j8pGYG4xtaTkw/i-m-from-a-parallel-earth-with-much-higher-coordination-ama

https://yudkowsky.tumblr.com/post/81447230971/my-april-fools-day-confession

https://www.glowfic.com/replies/1780726#reply-1780726

https://www.glowfic.com/replies/1688763#reply-1688763

Dath ilan has a >97% chance to solve AI alignment

Note: Yudkowsky confirmed through personal communication that this quote means he thinks dath ilan would actually solve alignment in 97% of worlds

people whose job it is to think carefully about infohazards and other unwieldy ideas