Andrew, thanks for the post. Here's a first response; I'll eventually post more. I agree with most of your specific comments but come to a different set of conclusions (some of which Ben Goldhaber and I spelled out in this LessWrong post: "Provably Safe AI: Worldview and Projects" and some of which I discuss in this video: "VAISU Provably Safe AI"). I agree that formal methods are not yet widely used and that this provides some evidence that there might be big challenges to adopting them. But these are extraordinary times, both because we are likely to face much more powerful adversaries over the next decade and because our AI systems are likely to become much more mathematically sophisticated over the same time period. It is certainly valuable to understand why formal methods haven't yet been adopted widely, but it appears that both the driving necessity and the enabling technology may soon change rapidly.

I also agree that the transition period from our current technology to provably safe replacements is likely to be very challenging and may involve great hardship and loss of life. I believe we have a great opportunity right now to solve the problems you mention and others before they are critical. One overriding perspective in Max's and my approach is that we need to design our systems so that their safety *can be formally verified*. In software, people often bring up the halting problem as an argument that general software can't be verified. But we don't need to verify general software; we are *designing* our systems so that they can be verified. That issue is also critically important for physical systems. And we are likely to have a challenging period where older systems that haven't been designed for verified safety are vulnerable to attack.

You say:

In particular, physics, chemistry and biology are all complex sciences which do not have anything like complete symbolic rule sets.

We fortunately live in an era where we *do* have a complete formal understanding of the fundamental laws of physics. Sean Carroll summarizes this nicely in this paper: "The Quantum Field Theory on Which the Everyday World Supervenes", in which he argues that the Standard Model of particle physics plus Einstein's general relativity completely describes all physical phenomena in ordinary human experience (ie. away from black holes, neutron stars, and the early universe, and at energies less than 10^11eV; for comparison, chemical bonds are less than 10eV and reactions in the sun are 10^8eV). Much of applied physics is about precise formal models of higher level phenomena (eg. fluids, elastic materials, plasmas, etc.) as beautifully summarized in Kip Thorne's book: "Modern Classical Physics: Optics, Fluids, Plasmas, Elasticity, Relativity, and Statistical Physics". Each of the engineering disciplines (eg. Mechanical Engineering, Electrical Engineering, Chemical Engineering, etc.) has its own formal models of its domain along with design rules for safe systems. They have extensive experiments and formal arguments which ground their models in fundamental physics. Fortunately, many of these disciplines are currently working to represent their fundamental models in formal proof assistants like Lean. For example, here is a chemical physics group that is doing that: "Formalizing chemical physics using the Lean theorem prover".

The Lean theorem prover is one of several powerful proof assistants (others include Coq, Isabelle, MetaMath, and HOL Light) which are rich enough to encode all of mathematics including all the foundations of physics, engineering, computer science, economics, etc. The Lean library "Mathlib 4" contains most of an undergraduate mathematics curriculum, some frontier mathematical areas, and is beginning to include probability theory, physics, and other disciplines. Many AI theorem provers are training on this library and are rapidly improving in generating Lean proofs. For example, "DeepSeek-Prover-V1.5" is SOTA for open source provers: "DeepSeek-Prover-V1.5: Harnessing Proof Assistant Feedback for Reinforcement Learning and Monte-Carlo Tree Search" and commercial AI companies like Harmonic appear to be making rapid progress: "One month in - A new SOTA on MiniF2F and more". Several experts working in this area say that we should expect LLM-based AI systems (including "agentic" add-ons like Monte Carlo Tree Search, etc.) to reach human PhD level at theorem proving by 2026. I believe that will open the floodgates of safety and other applications.

One overriding perspective in Max's and my approach is that we need to design our systems so that their safety can be formally verified. In software, people often bring up the halting problem as an argument that general software can't be verified. But we don't need to verify general software; we are designing our systems so that they can be verified.

I am a great proponent of proof-carrying code that is designed and annotated for ease of verification as a direction of development. But even from that starry-eyed perspective, the proposals that Andrew argues against here seem wildly unrealistic.

A proof-carrying piece of C code can prove that it is not vulnerable to any buffer overflows, or that it will never run motor 1 and motor 2 simultaneously in opposite directions. A bit more ambitiously, it could contain a full proof of a complete behavioral specification, proving that the software will perform a certain set of behaviors at all times, which as a corollary also implies that it is proof against a large body of security threats. This is not something we can really manage in practice yet, but it's well within the extrapolated range of current techniques. We could build a compiler that will only compile software that contains a proof matching some minimum necessary specification, too.
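To make the motor example concrete, here is a minimal Python sketch. It is not proof-carrying code: it checks the property by exhaustively enumerating a tiny finite command space, which is a much weaker stand-in for a machine-checked proof. The `guard` function and the three-valued direction encoding are hypothetical, invented only for illustration.

```python
from itertools import product

# Hypothetical encoding of the commands a motor can receive.
DIRECTIONS = ("off", "forward", "reverse")


def opposed(m1, m2):
    """Return True when the two motors are commanded in opposite directions."""
    return {m1, m2} == {"forward", "reverse"}


def guard(m1, m2):
    """Hypothetical safety guard: replace any opposed command with a full stop."""
    return ("off", "off") if opposed(m1, m2) else (m1, m2)


def check_all_commands():
    """Enumerate every command pair and confirm the guarded output is never opposed."""
    return all(
        not opposed(*guard(m1, m2))
        for m1, m2 in product(DIRECTIONS, repeat=2)
    )


if __name__ == "__main__":
    print(check_all_commands())
```

Enumeration only works here because there are nine possible inputs. The appeal of proof-carrying code is that the same kind of property would be established symbolically, for state spaces far too large to enumerate.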

Now imagine trying to prove that my program doesn't perform any sequence of perfectly innocuous interactions that happens to trigger a known or unknown bug in a mysql 5.1.32 server on the internet somewhere. How would you specify that? You can specify that the program doesn't do anything that might affect anything ever (though this leads to the well-known boxing problems); but if I want my program to have some nonzero ability to affect things in non-malicious ways, how would you specify that it doesn't do anything that might break something in some other part of the world in some unexpected way, including any unknown zero-days in mysql 5.1.32 servers? Presumably my proof checker doesn't contain the full code of all other systems on the internet my software might plausibly interact with. How could I specify a requirement like this, let alone prove it?

Proving that a piece of software has the behavior I want, or the behavior I allow, is something that can be done by carefully annotating my code with lemmas and contracts and incremental assumptions that together build up to a proof of the behavior I want. Proving that the software will have the behavior I want no matter what conditions you could possibly throw at it sounds harder but is actually mostly the same problem -- and so I would expect that proofs of almost-perfect security of a piece of software would not be that difficult either. But that is security of the software against attacks that might threaten the desired behavior of the software. Demonstrating that the software is not a threat to something else somewhere is another matter entirely, as this requires first knowing and encoding in the proofs all the ways in which the rest of the world might be negatively affected by actions-in-general. Not just superficially, either, if you want the proof to rule out the mysql 5.1.32 zero-day that just uses a sequence of normal interactions that should be perfectly innocent but in practice aren't. This is proving a negative in the worst possible way; to prove something like this, you would need to quantify over all possible higher-order behaviors the program doesn't have. I don't see how any of the real-or-imagined formal verification techniques I have ever heard about could possibly do anything even vaguely like this.

All the above is the world of software versus software, where even the external environment can be known to the last bit, with crisp behavior that can be analyzed and perfectly simulated even to the point where you can reproduce the mysql 5.1.32 bugs. Doing the same thing with biology would be a whole other order of magnitude in challenge level. Proving that a certain piece of synthesized DNA is not going to form a threat to human biology in some indirect way is essentially analogous to the mysql exploitation problem above, but much, much harder. Here, too, you would need to have a proof that quantifies over all possible higher order behaviors the piece of DNA doesn't have, all applied to the minor inconvenience that is the much murkier world of biology.

Unless I missed some incredible new developments in the field of formal verification, quantification over all possible higher order patterns and consequences of a system being analyzed is something that is absolutely out of reach of any formal verification techniques I have heard of. But if you do know of any techniques that could challenge this barrier, do tell :)

Steve, please clarify, because I've long wondered: Are you saying you could possibly formally prove that a system like the human brain would always do something safe?

There are two enormous problems here.

a) Defining what arrangement of atoms you'd call "safe".

b) Predicting what a complex set of neural networks is going to do.

Intuitively, this problem is so difficult as to be a non-starter for effective AGI safety methods (if the AGI is a set of neural networks, as seems highly likely at this point; and probably even if it was the nicest set of complex algorithms you could imagine, because of problem a).

I thought your previous work was far more limited in ambition, making it a potential useful supplement to alignment.

I'm focused on making sure our infrastructure is safe against AI attacks. This will require that our software not have security holes, that our cryptography not be vulnerable to mathematical attacks, our hardware not leak signals to adversarial sensors, and our social mechanisms not be vulnerable to manipulative interactions. I believe the only practical way to have these assurances is to model our systems formally using tools like Lean and to design them so that adversaries in a specified class provably cannot break specified safety criteria (eg. not leak cryptographic keys). Humans can do these tasks today but it is laborious and usually only attempted when the stakes are high. We are likely to soon have AI systems which can synthesize systems with verified proofs of safety properties rapidly and cheaply. I believe this will be a game changer for safety and am arguing that we need to prepare for that opportunity. One important piece of infrastructure is the hardware that AI runs on. When we have provable protections for that hardware, we can put hard controls on the compute available to AIs, their ability to replicate, etc.

Okay! Thanks for the clarification. That's what I got from your paper with Tegmark, but in the more recent writing it sounded like maybe you were extending the goal to actually verifying safe behavior from the AGI. This is what I was referring to as a potentially useful supplement to alignment. I agree that it's possible with improved verification methods, given the caveats from this post.

An unaligned AGI could take action outside of all of our infrastructure, so protecting it would be a partial solution at best.

If I were or controlled an AGI and wanted to take over, I'd set up my own infrastructure in a hidden location, underground or off-planet, and let the magic of self-replicating manufacturing develop whatever I needed to take over. You'd need some decent robotics to jumpstart this process, but it looks like progress in robotics is speeding up alongside progress in AI.

I think we definitely would like a potentially unsafe AI to be able to generate control actions, code, hardware, or systems designs together with proofs that those designs meet specified goals. Our trusted systems can then cheaply and reliably check that proof and if it passes, safely use the designs or actions from an untrusted AI. I think that's a hugely important pattern and it can be extended in all sorts of ways. For example, markets of untrusted agents can still solve problems and take actions that obey desired constraints, etc.
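The "untrusted generator, trusted checker" pattern can be sketched in a few lines of Python. This is only an illustration under stated assumptions: subset-sum stands in for the design problem, a list of indices stands in for the proof, and both `untrusted_solver` and `trusted_check` are hypothetical names. The point it shows is that the checker never has to trust or understand how the answer was found.

```python
from itertools import combinations


def untrusted_solver(numbers, target):
    """Stand-in for an untrusted system: search for indices whose values sum to target."""
    for size in range(1, len(numbers) + 1):
        for idxs in combinations(range(len(numbers)), size):
            if sum(numbers[i] for i in idxs) == target:
                return list(idxs)
    return None


def trusted_check(numbers, target, certificate):
    """Small trusted checker: accept only a well-formed certificate that really works."""
    if not isinstance(certificate, list):
        return False
    # Every entry must be a valid index into `numbers`.
    if not all(isinstance(i, int) and 0 <= i < len(numbers) for i in certificate):
        return False
    # No index may be used twice.
    if len(set(certificate)) != len(certificate):
        return False
    return sum(numbers[i] for i in certificate) == target


if __name__ == "__main__":
    numbers = [3, 34, 4, 12, 5, 2]
    answer = untrusted_solver(numbers, 9)
    # Only use the answer if the trusted checker accepts it.
    print(trusted_check(numbers, 9, answer))
```

The solver's search is exponential in the worst case, while the check is linear in the certificate length. That asymmetry is what makes it cheap to use outputs from a system you do not trust.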

The issue of unaligned AGI hiding itself is potentially huge! I have end state designs that would guarantee peace and abundance for humanity, but they require that all AIs operate under a single proven infrastructure. The intermediate period between now and then is the highest risk, I think.

And, of course, an adversarial AI will do everything it can to hide and garner resources! One of the great uses of provable hardware is the ability to create controlled privacy. You can have extensive networks of sensors where all parties are convinced by proofs that they won't transmit information about what they are sensing unless a specified situation is sensed. It looks like that kind of technology might allow mutual treaties which meet all parties' needs but prevent the "hidden rogue AIs" buried in the desert. I don't understand the dynamics very well yet, though.

Sean Carroll summarizes this nicely in this paper: "The Quantum Field Theory on Which the Everyday World Supervenes" in which he argues that the Standard Model of particle physics plus Einstein's general relativity completely describes all physical phenomena in ordinary human experience

FWIW, I would take bets at pretty high odds that this is inaccurate. As in, we will find at least one common everyday experience which relies on the parts of physics which we do not currently understand (such as the interaction between general relativity and quantum field theory). Of course, this is somewhat hard to prove since we basically have no ability to model any high-level phenomena using quantum field theory due to computational intractability, but my guess is we would still likely be able to resolve this in my favor after talking to enough physicists (and I would take reasonably broad consensus in your favor as sufficient to concede the bet).

FWIW I’m with Steve O here, e.g. I was recently writing the following footnote in a forthcoming blog post:

“The Standard Model of Particle Physics plus perturbative quantum general relativity” (I wish it was better-known and had a catchier name) appears sufficient to explain everything that happens in the solar system. Nobody has ever found any experiment violating it, despite extraordinarily precise tests. This theory can’t explain everything that happens in the universe; in particular, it can’t make any predictions about either (A) microscopic exploding black holes or (B) the Big Bang. Also, (C) the Standard Model happens to include 18 elementary particles (depending on how you count), because those are the ones we’ve discovered; but the theoretical framework is fully compatible with other particles existing too, and indeed there are strong theoretical and astronomical reasons to think they do exist. It’s just that those other particles are irrelevant for anything happening on Earth. Anyway, all signs point to some version of string theory eventually filling in those gaps as a true Theory of Everything. After all, string theories seem to be mathematically well-defined, to be exactly compatible with general relativity, and to have the same mathematical structure as the Standard Model of Particle Physics (i.e., quantum field theory) in the situations where that’s expected. Nobody has found a specific string theory vacuum with exactly the right set of elementary particles and masses and so on to match our universe. And maybe they won’t find that anytime soon; I’m not even sure if they know how to do those calculations! But anyway, there doesn’t seem to be any deep impenetrable mystery between us and a physics Theory of Everything.

(I interpret your statement to be about everyday experiences which depend on something being incomplete / wrong in fundamental physics as we know it, as opposed to just saying the obvious fact that we don’t understand all the emergent consequences of fundamental physics as we know it.)

I also think “we basically have no ability to model any high-level phenomena using quantum field theory” is misleading. It’s true that we can’t directly use the Standard Model Lagrangian to simulate a transistor. But we do know how and why and to what extent quantum field theory reduces to normal quantum mechanics and quantum chemistry (to such-and-such accuracy in such-and-such situations), and we know how those in turn approximately reduce to fluid dynamics and solid mechanics and classical electromagnetism and so on (to such-and-such accuracy in such-and-such situations), and now we’re all the way at the normal set of tools that physicists / chemists / engineers actually use to model high-level phenomena. You’re obviously losing fidelity at each step of simplification, but you’re generally losing fidelity in a legible way—you’re making specific approximations, and you know what you’re leaving out and why omitting it is appropriate in this situation, and you can do an incrementally more accurate calculation if you need to double-check. Do you see what I mean?

By (loose) analogy, someone could say “we don’t know for sure that intermolecular gravitational interactions are irrelevant for the freezing point of water, because nobody has ever included intermolecular gravitational interactions in a molecular dynamics calculation”. But the reason nobody has ever included them in a calculation is because we know for sure that they’re infinitesimal and irrelevant. Likewise, a lot of the complexity of QFT is infinitesimal and irrelevant in any particular situation of interest.

But we do know how and why and to what extent quantum field theory reduces to normal quantum mechanics and quantum chemistry (to such-and-such accuracy in such-and-such situations), and we know how those in turn approximately reduce to fluid dynamics and solid mechanics and classical electromagnetism and so on (to such-and-such accuracy in such-and-such situations), and now we’re all the way at the normal set of tools that physicists / chemists / engineers actually use to model high-level phenomena.

Yeah, I do think I disagree with this.

At least in all contexts where I've seen textbooks/papers/videos cover this, the approximations we make are quite local and application-specific. You make very different simplifying assumptions if you are dealing with optical fiber from when you are dealing with estimating friction or shear forces, or when you are making fluid simulations, or when you are dealing with semiconductors. We don't have good general tools to abstract from the lower levels to the higher levels, and in most situations we vastly overengineer systems to dampen the effects that we don't have good abstractions for in the appropriate context (which to be clear, would totally mess with our systems if we didn't overengineer our systems to dampen them).

And honestly, most of the time we don't really know how the different abstraction-levels connect and we just use empirical data from some higher level of abstraction. And indeed we can usually use those empirically-grounded higher-level abstractions to model systems with lower error than we would get from a principled "build things from the ground up" set of approximations.

I agree that we can often rule out specific interactions like "are gravitational interactions relevant for water freezing", but we cannot say something as general as "there are no interactions outside of the standard model that are relevant for water freezing, like potentially anything related to agglomeration effects which might be triggered by variance in particle energy levels we don't fully understand, etc.". We don't really know how quantum field theory generalizes to high-level phenomena like water freezing, and while of course we can rule out a huge number of things and make many correct predictions on the basis of quantum field theory, we really have never even gotten remotely close to constructing a neat series of approximations that explains how water freezes from the ground up (in a way where you wouldn't need to repeatedly refer to high-level empirical observations you made to guide your search over appropriate abstractions).

In other words, if you gave a highly educated human nothing but our current knowledge of quantum field theory, and somehow asked them to predict the details of how water freezes under pressure (i.e. giving rise to things like "Ice VII") without ever having seen actual water freeze or performed empirical experiments, they would really have no idea. Of course, the low-level theories are useful for helping us guide our search for approximations that are locally useful, but that gap, where we have to constrain things from multiple levels of abstraction, is going to be the death of anything like formal verification.

(I probably agree about formal verification. Instead, I’m arguing the narrow point that I think if someone were to simulate liquid water using just the Standard Model Lagrangian as we know it today, with no adjustable parameters and no approximations, on a magical hypercomputer, then they would calculate a freezing point that agrees with experiment. If that’s not a point you care about, then you can ignore the rest of this comment!)

OK let’s talk about getting from the Standard Model + weak-field GR to the freezing point of water. The weak force just leads to certain radioactive decays—hopefully we’re on the same page that it has well-understood effects that are irrelevant to water. GR just leads to Newton’s Law of Gravity which is also irrelevant to calculating the freezing point of water. Likewise, neutrinos, muons, etc. are all irrelevant to water.

Next, the strong force, quarks and gluons. That leads to the existence of nuclei, and their specific properties. I’m not an expert but I believe that the standard model via “lattice QCD” predicts the proton mass pretty well, although you need a supercomputer for that. So that’s the hydrogen nucleus. What about the oxygen nucleus? A quick google suggests that simulating an oxygen nucleus with lattice QCD is way beyond what today’s supercomputers can do (seems like the SOTA is around two nucleons, whereas oxygen has 16). So we need an approximation step, where we say that the soup of quarks and gluons approximately condenses into sets of quark-triples (nucleons) that interact by exchanging quark-doubles (pions). And then we get the nuclear shell model etc. Well anyway, I think there’s very good reason to believe that someone could turn the standard model and a hypercomputer into the list of nuclides in agreement with experiment; if you disagree, we can talk about that separately.

OK, so we can encapsulate all those pieces and all that’s left are nuclei, electrons, and photons—a.k.a. quantum electrodynamics (QED). QED is famously perhaps the most stringently tested theory in science, with two VERY different measurements of the fine structure constant agreeing to 1 part in 1e8 (like measuring the distance from Boston to San Francisco using two very different techniques and getting the same answer to within 4 cm—the techniques are probably sound!).
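The Boston-to-San-Francisco analogy is easy to sanity-check. The short Python sketch below assumes a rough straight-line distance of 4,300 km, which is my own round figure and not one given in the comment; the 1-part-in-1e8 agreement is taken from the text above.

```python
# Assumed rough distance between Boston and San Francisco (not from the comment).
BOSTON_TO_SF_KM = 4_300

# Relative agreement between the two fine-structure-constant measurements, per the comment.
RELATIVE_AGREEMENT = 1e-8


def equivalent_error_cm(distance_km, relative_precision):
    """Convert a relative precision into an absolute error, in centimetres, over a distance."""
    distance_cm = distance_km * 1_000 * 100  # km -> m -> cm
    return distance_cm * relative_precision


if __name__ == "__main__":
    print(equivalent_error_cm(BOSTON_TO_SF_KM, RELATIVE_AGREEMENT))
```

With that assumed distance the equivalent error comes out at a little over 4 cm, consistent with the figure quoted in the analogy.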

But those are very simple systems; what if QED violations are hiding in particle-particle interactions? Well, you can do spectroscopy of atoms with two electrons and a nucleus (helium or helium-like), and we still get up to parts-per-million agreement with no-adjustable-parameter QED predictions, and OK yes this says there’s a discrepancy very slightly (1.7×) outside the experimental uncertainty bars but historically it’s very common for people to underestimate their experimental uncertainty bars by that amount.

But that’s still only two electrons and a nucleus; what about water with zillions of atoms and electrons? Maybe there’s some behavior in there that contradicts QED?

For one thing, it’s hard and probably impossible to just posit some new fundamental physics phenomenon that impacts a large aggregate of atoms without having any measurable effect on precision atomic measurements, particle accelerator measurements, and so on. Almost any fundamental physics phenomenon that you write down would violate some symmetry or other principle that seems to be foundational, or at any rate, that has been tested at even higher accuracy than the above (e.g. the electron charge and proton charge are known to be exact opposites to 1e-21 accuracy, the vacuum dispersion is zero to 1e-18 accuracy … there are a ton of things like that that tend to be screwed up by any fundamental physics phenomenon that is not of a very specific type, namely a term that looks like quantum field theory as we know it today).

For another thing, ab initio molecular simulations exist and do give results compatible with macroscale material properties, which might or might not include the freezing point of water (this seems related but I’m not sure upon a quick google). “Ab initio” means “starting from known fundamental physics principles, with no adjustable parameters”.

Now, I’m sympathetic to the conundrum that you can open up some paper that describes itself as “ab initio”, and OK if the authors are not outright lying then we can feel good that there are no adjustable parameters in the source code as such. But surely the authors were making decisions about how to set up various approximations. How sure are we that they weren’t just messing around until they got the right freezing point, IR spectrum, shear strength, or whatever else they were calculating?

I think this is a legitimate hypothesis to consider and I’m sure it’s true of many individual papers. I’m not sure how to make it legible, but I have worked in molecular dynamics myself and had extremely smart and scrupulous friends in really good molecular dynamics labs such that I could see how they worked. And I don’t think the concern in the above paragraph is a correct description of the field. I think there’s a critical mass of good principled researchers who can recognize when people are putting more into the simulations than they get out, and keep the garbage studies out of textbooks and out of open-source tooling.

I guess one legible piece of evidence is that DFT was the best (and kinda only) approximation scheme that lets you calculate semiconductor bandgaps from first principles with reasonable amounts of compute, for many decades. And DFT famously always gives bandgaps that are too small. Everybody knew that, and that means that nobody was massaging their results to get the right bandgap. And it means that whenever people over the decades came up with some special-pleading correction that gave bigger bandgaps, the field as a whole wasn’t buying it. And that’s a good sign! (My impression is that people now have more compute-intensive techniques that are still ab initio and still “principled” but which give better bandgaps.)

I agree with the thrust of this comment, which I read as saying something like "our current physics is not sufficient to explain, predict, and control all macroscopic phenomena". However, this is a point which Sean Carroll would agree with. From the paper under discussion (p.2): "This is not to claim that physics is nearly finished and that we are close to obtaining a Theory of Everything, but just that one particular level in one limited regime is now understood."

The claim he is making, then, is totally consistent with the need to find further approximations and abstractions to model macroscopic phenomena. His point is that none of that will dictate modifications to the core theory (effective quantum field theory) when applied to "everyday" phenomena which occur in regions of the universe which we currently interact with (because the boundary conditions of this region of the universe are compatible with EQFT). Another way to put this is that Carroll claims no possible experiment can be conducted within the "everyday regime" which will falsify the core theory. Do you still disagree?

For the record, this is just to clarify what Carroll's claim is. I totally agree that none of this is relevant to overcoming the limitations of formal verification, which very clearly depend on many abstractions and approximations and will continue to do so for the foreseeable future.

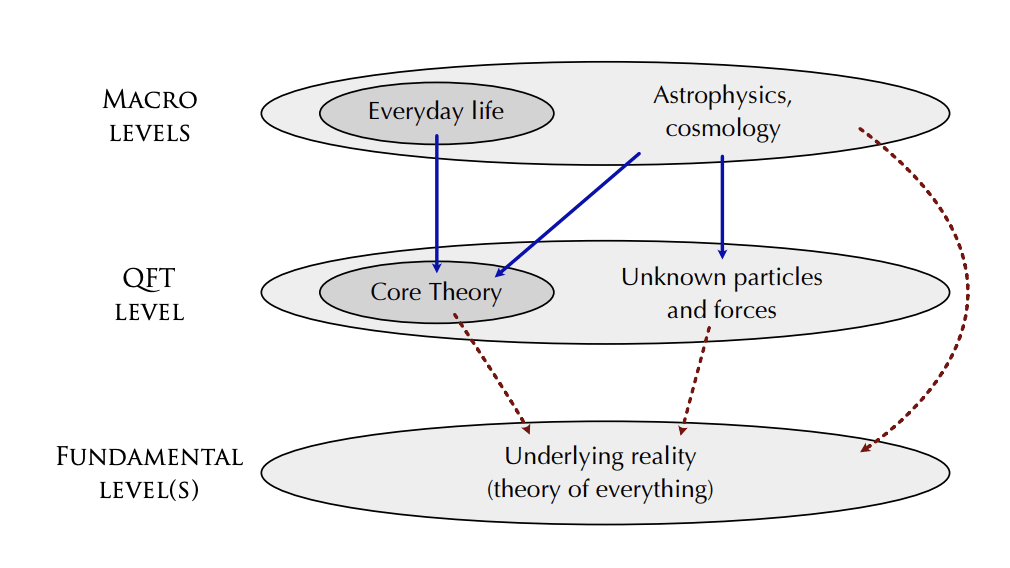

Figure 1 in Carroll's paper shows what is going on. At the base is the fundamental "Underlying reality" which we don't yet understand (eg. it might be string theory or cellular automata, etc.).

Above that is the "Quantum Field Theory" level which includes the "Core Theory" which he explicitly shows in the paper and also possibly "Unknown particles and forces". Above that is the "Macro Level" which includes both "Everyday life" which he is focusing on and also "Astrophysics and Cosmology". His claim is that everything we experience in the "Everyday life" level depends on the "Underlying reality" level only through the "Core Theory" (ie. it is an "effective theory" kind of like fluid mechanics doesn't depend on the details of particle interactions).

In particular, for energies less than 10^11 electron volts and for gravitational fields weaker than those around black holes, neutron stars, and the early universe, the result of every experiment is predicted by the Core Theory to very high accuracy. If anything in this regime were not predicted to high accuracy, it would be front page news, the biggest development in physics in 50 years, etc. Part of this confidence arises from fundamental aspects of physics: locality of interaction, conservation of mass/energy, and symmetry under the Poincare group. These have been validated in every experiment ever conducted. Of course, as you say, physics isn't finished and quantum theory in high gravitational curvature is still not understood.

Here's a list of other unsolved problems in physics: https://en.wikipedia.org/wiki/List_of_unsolved_problems_in_physics But the key point is that none of these impact AI safety (at least in the near term!). Certainly, powerful adversarial AI will look for flaws in our model of the universe as a potential opportunity for exploitation. Fortunately, we have a very strong current theory and we can use it to put bounds on the time and energy an AI would require to violate the conditions of validity (eg. create black holes, etc.). For long term safety and stability, humanity will certainly have to put restrictions on those capabilities, at least until the underlying physics is fully understood.

In particular, for energies less than 10^11 electron volts and for gravitational fields weaker than those around black holes, neutron stars, and the early universe, the result of every experiment is predicted by the Core Theory to very high accuracy. If anything in this regime were not predicted to high accuracy, it would be front page news, the biggest development in physics in 50 years, etc. Part of this confidence arises from fundamental aspects of physics: locality of interaction, conservation of mass/energy, and symmetry under the Poincare group. These have been validated in every experiment ever conducted. Of course, as you say, physics isn't finished and quantum theory in high gravitational curvature is still not understood.

While I am an avid physics reader, I don't have a degree in physics, so this is speaking at the level of an informed layman.

I think it's actually pretty easy to end up with small concentrations of more than 10^11 electron volts and large local gravitational fields. These effects can then often ripple out or qualitatively change the character of some important interaction. On the everyday scale, cosmic rays are the classical example of extremely high-energy contexts, which do affect us on a daily level (but of course there are many more contexts in which local bubbles of high energy concentration take place).

Also, dark energy + dark matter are of course the obvious examples of something for which we currently have no satisfying explanation within either general relativity or the standard model, and neither of those likely requires huge energy scales or large gravitational fields.

In general, I don't think it's at all true that "if anything was not predicted with high accuracy by the standard model it would be the biggest development in physics in 50 years". We have no idea what the standard model predicts about approximately any everyday phenomena because simulating phenomena at the everyday scale is completely computationally intractable. If turbulence dynamics or common manufacturing or material science observations were in conflict with the standard model, we would have no idea, since we have no idea what the standard model says about basically any of those things.

In the history of science it's quite common that you are only able to notice inconsistencies in your previous theory after you have found a superior theory. Newton's gravity looks great for predicting the movements of the solar system, with a pretty small error that mostly looks random and you can probably just dismiss as measurement error, until you have relativity and you notice that there was a systematic bias in all of your measurements in predictable directions in a way that previously looked like noise.

It's very hard to get large gravitational fields. The closest known black hole to Earth is Gaia BH1 which is 1560 light-years away: https://www.space.com/closest-massive-black-hole-earth-hubble The strongest gravitational waves come from the collision of two black holes but by the time they reach Earth they are so weak it takes huge effort to measure them and they are in the weak curvature regime where standard quantum field theory is fine: https://www.ligo.caltech.edu/page/what-are-gw#:~:text=The%20strongest%20gravitational%20waves%20are,)%2C%20and%20colliding%20neutron%20stars.

It's also quite challenging to create high energy particles, they tend to rapidly collide and dissipate their energy. The CERN "Large Hadron Collider" is the most powerful particle accelerator that humans have built: https://home.cern/resources/faqs/facts-and-figures-about-lhc It involves 27 kilometers of superconducting magnets and produces proton collisions of 1.3*10^13eV.

Most cosmic rays are in the range of 10^6 eV to 10^9 eV https://news.uchicago.edu/explainer/what-are-cosmic-rays But there have been a few very powerful cosmic rays detected. Between 2004 and 2007, the Pierre Auger Observatory detected 27 events with energies above 5.7 * 10^19 eV and the "Oh-My-God" particle detected in 1991 had an energy of 3.2 * 10^20 eV.

So they can happen but would be extremely difficult for an adversary to generate. The only reason he put 10^11 as a limit is that's the highest we've been able to definitively explore with accelerators. There may be more unexpected particles up there, but I don't think they would make much of a difference to the kinds of devices we're talking about.

But we certainly have to be vigilant! ASIs will likely explore every avenue and may very well be able to discover the "Theory of Everything". We need to design our systems so that we can update them with new knowledge. Ideally we would also have confidence that our infrastructure could detect attempts to subvert it by pushing outside the domain of validity of our models.

While dark energy and dark matter have a big effect on the evolution of the universe as a whole, they don't interact in any measurable way with systems here on earth. Ethan Siegel has some great posts narrowing down their properties based on what we definitively know, eg. https://bigthink.com/starts-with-a-bang/dark-matter-bullet-cluster/ So it's important on large scales but not, say, on the scale of earth. Of course, if we consider the evolution of AI and humanity over much longer timescales, then we will likely need a detailed theory. That again shows that we need to work with precise models which may expand their regimes of applicability.

An example of this kind of thing is the "Proton Radius Puzzle" https://physicsworld.com/a/solving-the-proton-puzzle/ https://en.wikipedia.org/wiki/Proton_radius_puzzle in which different measurements and theoretical calculations of the radius of the proton differed by about 4%. The physics world went wild and hundreds of articles were published about it! It seems to have been resolved now, though.

Even if everything is in principle calculable, it doesn't mean you can do useful calculations of complex systems a useful distance into the future. The three body problem intervenes. And there are rather more than three bodies if you're trying to predict behavior of a brain-sized neural network, let alone intervening on a complex physical world. The computer you'd need wouldn't just be the size of the universe, but all of the many worlds branches.

Simulation of the time evolution of models from their dynamical equations is only one way of proving properties about them. For example, a harmonic oscillator https://en.wikipedia.org/wiki/Harmonic_oscillator has dynamical equations m d^2x/dt^2= -kx. You can simulate that but you can also prove that the kinetic plus potential energy is conserved and get limits on its behavior arbitrarily far into the future.
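To make the energy argument concrete, here is a short worked derivation for the oscillator above. The symbols E (total energy) and E_0 (its value fixed by the initial conditions) are notation introduced here for illustration; dots denote time derivatives.

```latex
\begin{align*}
E(t) &= \tfrac{1}{2} m \dot{x}^2 + \tfrac{1}{2} k x^2 \\
\frac{dE}{dt} &= m \dot{x}\,\ddot{x} + k x\,\dot{x}
             = \dot{x}\,\bigl(m \ddot{x} + k x\bigr) = 0
             \quad \text{since } m \ddot{x} = -k x \\
\Rightarrow\; E(t) &= E_0 \ \text{for all } t,
\qquad |x(t)| \le \sqrt{2 E_0 / k},
\qquad |\dot{x}(t)| \le \sqrt{2 E_0 / m}
\end{align*}
```

The bounds follow because each term of E is non-negative, so neither can exceed E_0. No simulation is involved, and the bounds hold for every t.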

Sure but seems highly unlikely there are any such neat simplifications for complex cognitive systems built from neural networks.

Other than "sapient beings do things that further their goals in their best estimation", which is a rough predictor, and what we're already trying to focus on. But the devil is in the details, and the important question is about how the goal is represented and understood.

Oh yeah, by their very nature it's likely to be hard to predict intelligent systems' behavior in detail. We can put constraints on them, though, and prove that they operate within those constraints.

Even simple systems like random SAT problems https://en.wikipedia.org/wiki/SAT_solver can have a very rich statistical structure. And the behavior of the solvers can be quite unpredictable.

In some sense, this is the source of unpredictability of cryptographic hash functions. Oded Goldreich proposed an unbelievably simple boolean function which is believed to be one-way: https://link.springer.com/chapter/10.1007/978-3-642-22670-0_10

On the other hand, I think it is often possible to distill behavior for a particular task from a rich intelligence into simple code with provable properties.

I imagine that the behavior of strong AI, even narrow AI, is computationally irreducible. In that case would it still be verifiable?

Our infrastructure should refuse to do anything the AI asks unless the AI itself provides a proof that it obeys the rules we have set. So we force the intelligent system itself to verify anything it generates!

Challenge 4: AI advances, including AGI, are not likely to be disruptively helpful for improving formal verification-based models until it’s too late.

Yes, this is our biggest challenge, I think. Right now very few people have experience with formal systems and historically the approach has been met with continuous misunderstanding and outright hostility.

In 1949 Alan Turing laid out the foundations for provably correct software: "An Early Program Proof by Alan Turing". What should have happened (in my book) is that computer science was recognized as a mathematical science capable of mathematically proving the correctness of its designs. And through the years, there have indeed been many who were inspired by that vision and made great and important contributions.

But, unfortunately, the field was resistant to correctness and we got wave after wave of "sloppy programming" which continues to haunt us to this day. For example, the July 19, 2024 CrowdStrike incident has been called the largest IT outage in history, causing over $10 billion in financial damages. It was caused by a sloppy error in an update to CrowdStrike's security software for Microsoft Windows. This is an outrage and it has been an ongoing outrage almost since the time of Turing.

In another post, I mentioned a similar outrage in scientific computing. It has been known for many decades how to mathematically precisely perform scientific computing. And yet the standard remains "slap the code up against the wall and see if the outputs look reasonable". It is unknown how many scientific results, medical analyses, or engineering designs are flawed because people couldn't be bothered to perform their computations correctly.

Cryptography has similar issues. The mainstream is based on computational cryptography which has unknown vulnerability to powerful AI attack. Meanwhile, provably correct "Information Theoretic Cryptography" languishes in a few academic conferences with very little use in practice.

So humanity is way behind where we should be on the formalization front. Our ancestors' sloppiness may now be our downfall. One hope, as you mention, is the rapid advancement of AI theorem proving, AI autoformalization, AI verified program synthesis, and other related areas.

It looks to me that we are now in a race of AI for safety vs. AI for unsafe capabilities. The more people who are aware of the issues and the potential solutions using the new formal technologies, the greater the chance we have to survive.

I think the safest world would be one where humanity does not create AI. Next would be where we have a long enough pause in AI development to carefully create safe infrastructure. Next would be restricting powerful AI to a few highly regulated government labs. Next would be a world with tight controls on GPUs and datacenters with no computational overhang. Unfortunately, it appears that all those ships have already sailed. We now have huge computational overhang and powerful open source AI models. The Llama 3.1 8B model can run on a $90 Raspberry Pi 5. So we have to rebuild the world with safe infrastructure in the face of rapidly improving and uncontrolled AI capabilities.

It appears that the massive needed change will only happen *after* large scale AI-powered attacks and destruction begins. I think the greatest contribution to humanity's survival right now is to create detailed plans for building provably safe infrastructure, so that when the enabling technologies appear and the world begins demanding safe technology, there is a plan for moving forward.

I think the greatest contribution to humanity's survival right now is to create detailed plans for building provably safe infrastructure, so that when the enabling technologies appear and the world begins demanding safe technology, there is a plan for moving forward.

There are enough places provably-safe-against-physical-access hardware would be an enormous value-add that you don't need to wait to start working on it until the world demands safe technology for existential reasons. Look at the demand for secure enclaves, which are not provably secure, but are "probably good enough because you are unlikely to have a truly determined adversary".

The easiest way to convince people that they, personally, should care more about provable correctness over immediately-obvious practical usefulness is to demonstrate that provable correctness is possible, not too costly, and has clear benefits to them, personally.

I totally agree! I think this technology is likely to be the foundation of many future capabilities as well as safety. What I meant was that society is unlikely to replace today's insecure and unreliable power grid controllers, train network controllers, satellite networks, phone system, voting machines, etc. until some big event forces that. And that if the community produces comprehensive provable safety design principles, those are more likely to get implemented at that point.

My point was more that I expect there to be more value in producing provable safety design demos and provable safety design tutorials than in provable safety design principles, because I think the issue is more "people don't know how, procedurally, to implement provable safety in systems they build or maintain" than it is "people don't know how to think about provable safety but if their philosophical confusion was resolved they wouldn't have too many further implementation difficulties".

So having any examples at all would be super useful, and if you're trying to encourage "any examples at all" one way of encouraging that is to go "look, you can make billions of dollars if you can build this specific example".

Here's the post's first proposed limitation:

Limitation 1 – We will not obtain strong proofs or formal guarantees about the behavior of AI systems in the physical world. At best we may obtain guarantees about rough approximations of such behavior, over short periods of time.

For many readers with real-world experience working in applied math, the above limitation may seem so obvious they may wonder whether it is worth stating at all. The reasons why it is are twofold. First, researchers advocating for GS methods appear to be specifically arguing for the likelihood of near-term solutions that could somehow overcome Limitation 1.

Think about this claimed limitation in the context of today's deployed technologies and infrastructure. There are 5 million bridges around the world that people use every day to cross rivers and chasms. Many of them have lasted for hundreds of years and new ones are built with confidence all the time. Failures do happen, but they are so rare that news stories are written about them and videos are shared on YouTube. How are these remarkably successful technologies built?

Mechanical engineers have built up a body of knowledge about building safe bridges. They have precise rules about the structure of successful bridges. They have detailed equations, simulations, and tables of material properties. And they know the regimes of validity of their models. When they build a bridge according to these rules, they have a confidence in their safety which is nothing like a "rough approximation..[valid] over short periods of time."

We are rapidly moving from human engineers to AI systems for design. What should an AI bridge design system look like? It should certainly encode all the rules that the human engineers use! And, to give confidence that its designs actually follow those rules, it would be really great to be able to check each design against them. We could hire a human mechanical engineer to audit the AI design, but we'd really like to automate that process.

Enter formal systems like Lean! We can encode the mechanical engineer's design criteria and particular bridge designs as precise Lean statements. Now the statement that a particular design follows the design criteria becomes a theorem and Lean can provide a proof certificate that it is true. This certificate can be automatically checked by a proof checker and users can have high confidence that the design does indeed follow the design rules without having to trust anyone!
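As a minimal sketch of what "a design follows the criteria" looks like as a theorem, here is a toy Lean 4 example. Everything in it is hypothetical and chosen for illustration: the `Beam` structure, the factor-of-two rule, and the numbers are not real engineering criteria, which would be far richer.

```lean
-- Hypothetical toy model: a beam with a rated capacity and a design load (both in kN).
structure Beam where
  capacity : Nat
  load : Nat

-- Hypothetical design rule: the rated capacity must be at least twice the design load.
def meetsSafetyFactor (b : Beam) : Prop :=
  2 * b.load ≤ b.capacity

-- A particular (made-up) design.
def myBeam : Beam := { capacity := 500, load := 200 }

-- The claim "this design follows the rule" is a theorem; the proof is the certificate.
theorem myBeam_ok : meetsSafetyFactor myBeam := by
  show 2 * (200 : Nat) ≤ 500
  decide
```

Anyone with a Lean installation can re-check `myBeam_ok` without trusting whoever (or whatever) produced the design. If the design violated the rule, no proof would type-check.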

This formalization process is happening right now in hundreds of different disciplines. It is still new, so early attempts may be incomplete and require engineers to learn to use new tools. But AI is helping here as well. The field of "autoformalization" takes textbooks, manuals, design documents, software, scientific articles, mathematics papers, etc. written in natural language and automatically generates precise formal models of them. Large language models are becoming quite good at this (eg. here's a recent paper: "Don't Trust: Verify -- Grounding LLM Quantitative Reasoning with Autoformalization").

As AI autoformalization and theorem proving improves, we should expect the entire corpus of scientific and engineering knowledge to be rapidly represented in a precise formal form. And AI designs which at least meet the current human requirements for safe systems can be automatically generated with proof certificates of adherence to design rules given.

But we cannot stop at the current level of engineering safety. Today's technologies and infrastructure work pretty well in today's human environment. Unfortunately, as AIs become more powerful, they will likely be used in an adversarial way to attack infrastructure, both at the behest of malicious human actors and in the process of satisfying autonomous subgoals. To counter this threat, we need a much higher standard of safety than is common in current engineering practice.

Max and I argue that the only way to have assurance of safety against powerful adversarial agents is through formal methods and mathematical proof. For even moderately complex systems, we will need formal guarantees of safety against every attack path that is available to a specified class of adversaries. This is the level of safety that we need Provably Safe and Guaranteed Safe approaches to achieve.

I think you are wrong about what a proof of adherence to the mechanical engineer's design criteria would actually do.

Our bridge design criteria are absolutely not robust to adversarial optimization. It is true that we have come up with a set of guiding principles where if a human who is genuinely interested in building a good bridge follows them then that results (most of the time) in a bridge that doesn't fall down. But that doesn't really generalize at all to what would happen if an AI system wants to design a bridge with some specific fault, but is constrained in following the guiding principles. We are not anywhere close to having guiding principles that are operationalized enough so that we could actually prove adherence to them, or guiding principles that even if they could be operationalized, would be robust to adversarial pressure.

As such I am confused what the goal is here. If I somehow ended up in charge of building a bridge according to modern design principles, but I actually wanted it to collapse, I don't think I would have any problems in doing so. If I perform an adversarial search over ways to build a bridge that happen to follow the design principles, but where I explicitly lean into the areas where the specification comes apart, then the guidelines will very quickly lose their validity.

I think the fact that there exists a set of instructions that you can give to well-intentioned humans that usually results in a reliable outcome is very little evidence that we are anywhere close to a proof system to which adherence could be formally verified, and would actually be robust to adversarial pressure when the actor using that system is not well-intentioned.

Oh, I should have been clearer. In the first part, I was responding to his "rough approximation..[valid] over short periods of time." claim for formal methods. I was arguing that we can at least copy current methods and in current situations get bridges which actually work rather robustly and for a long time.

And, already, the formal version is better in many respects. For one, we can be sure it is being followed! So we get "correctness" in that domain. A second piece I think is very important, but I haven't figured out how to communicate it: the proof that a design satisfies design rules is a specific artifact that anyone can check. So it completely changes the social structure of the situation. Instead of having to rely on the "expert" who may or may not be competent, and may or may not be corrupt, each party is empowered with an absolute guarantee of correctness. I think this alters many social processes dramatically, but it needs to be fleshed out and better explained.

After that argument, I go to the next piece which you mention. Today's engineering practices are not likely to be robust against powerful adversaries (eg. powerful AI or humans backed up by powerful AI). And I don't think current practices can deal with that very well. In the AI safety space, the typical approach is "red teaming" where humans try to trigger AIs to produce bad scenarios and they see how easy it is and how powerful the attacks are. This can find problems but can't show the absence of safety vulnerabilities.

With mathematical proof, we can systematically consider the entire space of possible actions by adversaries in the specified class. Using techniques like "branch and bound", we can systematically eliminate regions of the action space which are shown to be safe. And if the system is actually safe, there is a proof of that (by Gödel's completeness theorem, which says that any property which holds in all models of a set of axioms can be proven from those axioms). If the systems are complex, the proofs can be large, so there is value in "designing for verification".
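Here is a minimal sketch of the branch-and-bound idea on a toy problem, assuming a one-dimensional "action space" x in [0, 1] and a made-up harm function f(x) = x - x^2. The function, the threshold, and all names are hypothetical. Exact rational arithmetic is used so that the bound is not weakened by floating-point rounding.

```python
from fractions import Fraction


def upper_bound(lo: Fraction, hi: Fraction) -> Fraction:
    """Sound upper bound on f(x) = x - x*x over [lo, hi], assuming 0 <= lo <= hi.

    For every x in the interval, x <= hi and x*x >= lo*lo,
    so x - x*x <= hi - lo*lo.
    """
    return hi - lo * lo


def prove_safe(lo: Fraction, hi: Fraction, limit: Fraction, max_depth: int = 30) -> bool:
    """Try to prove f(x) <= limit for ALL x in [lo, hi] by branch and bound.

    Returns True only if every sub-interval was certified by the bound.
    Returns False if some region could not be certified within max_depth
    splits (this means "not proven", not "proven unsafe").
    """
    stack = [(lo, hi, 0)]
    while stack:
        a, b, depth = stack.pop()
        if upper_bound(a, b) <= limit:
            continue  # the whole region [a, b] is certified safe; discard it
        if depth >= max_depth:
            return False  # give up on this region
        mid = (a + b) / 2
        stack.append((a, mid, depth + 1))
        stack.append((mid, b, depth + 1))
    return True


if __name__ == "__main__":
    # The true maximum of f on [0, 1] is 1/4, so a limit of 3/10 is provable
    # and a limit of 1/5 is not.
    print(prove_safe(Fraction(0), Fraction(1), Fraction(3, 10)))
    print(prove_safe(Fraction(0), Fraction(1), Fraction(1, 5)))
```

Unlike testing, a `True` result covers every point in the interval, not just sampled ones. Real systems have far larger action spaces, which is where "designing for verification" matters.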

That's a possibility which provides actual safety against AI and other adversaries and provides detailed information about the value of different features, etc. Several groups are working right now to develop examples of this kind. Hopefully, the process can eventually be automated so that we can put it on the same timescale as AI advancement.

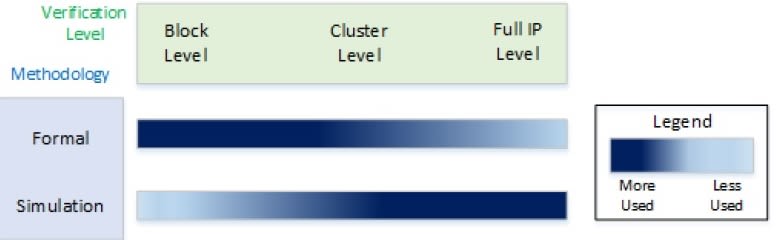

Steve, thanks for your explanations and discussion. I just posted a base reply about formal verification limitations within the field of computer hardware design. In that field, ignoring for now the very real issue of electrical and thermal noise, there is immense value in verifying that the symbolic 1's and 0's of the digital logic will successfully execute the similarly symbolic software instructions correctly. So the problem space is inherently simplified from the real world, and the silicon designers have incentive to build designs that are easy to test and debug, and yet only small parts of designs can be formally verified today. It would seem to me that, although formal verification will keep advancing, AI capabilities will advance faster and we need to develop simulation testing approaches to AI safety that are as robust as possible. For example, in silicon design one can make sure the tests have at least executed every line of code. One could imagine having a METR test suite and try to ensure that every neuron in a given AI model has been at least active and inactive. It's not a proof, but it would speak to the breadth of the test suite in relation to the model. Are there robustness criteria for directed and random testing that you consider highly valuable without having a full safety proof?

Testing is great for a first pass! And in non-critical and non-adversarial settings, testing can give you actual probabilistic bounds. If the probability distribution of the actual uses is the same as the testing distribution (or close enough to it), then the test statistics can be used to bound the probability of errors during use. I think that is why formal methods are so rarely used in software: testing is pretty good and if errors show up, you can fix them then. Hardware has greater adoption of formal methods because it's much more expensive to fix errors after the fact.
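As a rough illustration of such a probabilistic bound, here is a small sketch. It assumes the tests are independent draws from the same distribution as real use and that all of them passed; the function name and the default confidence level are hypothetical. If the true failure probability is p, the chance of N consecutive passes is (1 - p)^N, so any p above the returned value would make the observed run of passes less likely than 1 - confidence.

```python
def failure_rate_upper_bound(num_passed_tests: int, confidence: float = 0.95) -> float:
    """Upper confidence bound on the per-use failure probability.

    Assumes num_passed_tests independent tests, all passing, drawn from the
    same distribution as real use. Solves (1 - p) ** N = 1 - confidence for p.
    """
    if num_passed_tests <= 0:
        raise ValueError("need at least one passed test")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    delta = 1.0 - confidence
    return 1.0 - delta ** (1.0 / num_passed_tests)


if __name__ == "__main__":
    # Roughly 3 / N at 95% confidence (the "rule of three").
    print(failure_rate_upper_bound(3000))
```

The bound shrinks only as about 1/N, and it says nothing once the input distribution differs from the test distribution.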

But the real problems arise from adversarial attacks. The statistical correctness of a system doesn't matter to an adversary. They are looking for the weird outlier cases which will enable them to exploit the system (eg. inputs with non-standard characters that break the parser, or super-long inputs which overflow a buffer and enable unexpected access to memory, etc.). Testing can't show the absence of flaws (unless every input is tested!).

I think the increasing plague of cyberattacks is due to adversaries becoming more sophisticated in their search for non-standard ways of interacting with systems that expose their untested and unproven underbelly. But that kind of sophisticated attack requires highly skilled attackers and those are fortunately still rare.

What is coming, however, are AI-powered cyberattack systems which know all of the standard flaws and vulnerabilities of systems, all of the published 1-day vulnerabilities, all of the latest social engineering techniques discussed on the dark web, and have full access to reverse engineering tools like Ghidra. Those AIs are likely being developed as we speak in various government labs (eg. here is a list of significant recent cyber incidents: https://www.csis.org/programs/strategic-technologies-program/significant-cyber-incidents ).

How long before powerful cyberattack AIs are available on bittorrent to teenage hackers? So, I believe the new reality is that every system, software and hardware, needs to be proven correct and secure to have any confidence in it. To do that, we are likely to need to use AI-theorem provers and AI-verified software synthesis systems. Fortunately, many groups are showing rapid progress on those!

But that doesn't mean testing is useless. It's very helpful during the development process and in better understanding systems. For final deployment in an environment with powerful AIs, however, I don't think it's adequate any more.

As a concrete example, consider the recent Francis Scott Key Bridge collapse, where an out-of-control container ship struck one of the piers of the bridge. It killed six people and blocked the Port of Baltimore for 11 weeks, and replacing the bridge will cost $1.7 billion and take four years.

Could the bridge's designers way back in 1977 have anticipated that a bridge over one of the busiest shipping routes in the United States might some day be impacted by a ship? Could they have designed it to not collapse in this circumstance? Perhaps shore up the base of its piers to absorb the impact of the ship?

This was certainly a tragedy and is believed to have been an accident. But the cause was electrical problems on the ship. "At 1:24 a.m., the ship suffered a "complete blackout" and began to drift out of the shipping channel; a backup generator supported electrical systems but did not provide power to the propulsion system"

How well designed was that ship's generator and backup generator? Could adversarial attackers cause this kind of electrical blackout in other ships? Could remote AI systems do it? How many other bridges are vulnerable to impacts from out of control ships? What is the economic value at stake from this kind of flawed safety engineering? How much is it worth to create designs which provide guaranteed protections against this kind of flaw?

That example seems particularly hard to ameliorate with provable safety. To focus on just one part, how could we prove the ship would not lose power long enough to crash into something? If you try to model the problem at the level of basic physics, it's obviously impossible. If you model it at the level of a circuit diagram, it's trivial--power sources on circuit diagrams do not experience failures. There's no obviously-correct model granularity; there are Schelling points, but what if threats to the power supply do not respect our Schelling points?

It seems to me that, at most, we could prove safety of a modeled power supply, against a modeled, enumerated range of threats. Intuitively, I'm not sure that compares favorably to standard engineering practices, optimizing for safety instead of for lowest-possible cost.

In general, we can't prevent physical failures. What we can do is to accurately bound the probability of them occurring, to create designs which limit the damage that they cause, and to limit the ability of adversarial attacks to trigger and exploit them. We're advocating for humanity's entire infrastructure to be upgraded with provable technology to put guaranteed bounds on failures at every level and to eliminate the need to trust potentially flawed or corrupt actors.

In the case of the ship, there are both questions about the design of that ship's components and its provenance. Why did the backup power not enable the propulsion system to stop? Why wasn't there a "failsafe" anchor which drops if the systems become inoperable? Why didn't the port have tugboats guiding risky ship departures? What was the history of that ship's generators? Etc. With the kind of provable technology that Max and I outlined, it is possible to have provably trustable data about the components of the ship, about their manufacture, about their provenance since manufacture, about the maintenance history of the ship's components, etc.

The author of the main post and other critics argue against formal methods doing complex "magical" things like determining which DNA sequences are safe, how autonomous vehicles should navigate cities, or detecting bad thoughts in huge transformer neural nets. Someday these methods might help with some of those, but those aren't the low hanging fruit we are proposing. In some sense we mainly want to use proof for much more mundane things. What Max and I are arguing for are mechanisms to create software, hardware, and social designs which aren't exploitable by adversarial AIs and to create infrastructure that provides guarantees about its state and behavior. Nothing we are proposing requires sophisticated mathematics that today's grad students couldn't do. Nothing requires new physics or radically new engineering principles. Rather, it is a way to organize current technologies to increase trust and eliminate vulnerabilities.

These technologies enable us to eliminate the need to trust third parties: Was a computation performed accurately? Were there bugs in the program? What data was used to train this model or estimate this probability? What probabilistic program or neural net was used? Was the training done correctly? What is the history of this component? What evidence is there that it was manufactured correctly? These and thousands more cases will enable us to build up a robust infrastructure which is provably not vulnerable to AI-driven attack.

A core aspect of this is that we can use untrusted powerful AIs running on untrusted datacenters in untrusted countries to help us build completely trusted software, hardware, and social protocols. The idea is to precisely specify a task (eg. software spec, hardware spec, solve a mathematically encoded problem, etc.) and have the untrusted AI generate both an answer and a proof (in a system like Lean) that the answer solves the precisely specified problem or design task. We can cheaply and completely reliably check the proof. If it verifies, then we can fully trust the results from the untrusted AI. This enables us to bootstrap the current mess of untrusted and unreliable AIs, flaky and insecure hardware, untrustable people and groups, etc. to build up a *fully* trustable infrastructure. The power and importance of this is immense!
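The "untrusted generator, trusted checker" pattern can be sketched with a much simpler stand-in for Lean proofs: Boolean satisfiability, where a satisfying assignment is its own certificate. This is an illustrative analogy, not the proposed infrastructure. All function names are hypothetical, and the two "solvers" are toy stand-ins for an untrusted AI.

```python
from itertools import product
from typing import Callable, Dict, List, Optional

Clause = List[int]  # DIMACS-style literals: 3 means x3, -3 means NOT x3
Assignment = Dict[int, bool]


def check_certificate(clauses: List[Clause], assignment: Assignment) -> bool:
    """Trusted checker: True only if the assignment satisfies every clause.

    Runs in time linear in the formula size. A variable missing from the
    assignment never satisfies a literal.
    """
    for clause in clauses:
        if not any(assignment.get(abs(lit)) == (lit > 0) for lit in clause):
            return False
    return True


def accept(clauses: List[Clause],
           untrusted_solver: Callable[[List[Clause]], Optional[Assignment]]
           ) -> Optional[Assignment]:
    """Gatekeeper: use the untrusted answer only if its certificate checks."""
    answer = untrusted_solver(clauses)
    if answer is not None and check_certificate(clauses, answer):
        return answer
    return None


def honest_solver(clauses: List[Clause]) -> Optional[Assignment]:
    """Toy stand-in for a capable but untrusted system (brute-force search)."""
    variables = sorted({abs(lit) for clause in clauses for lit in clause})
    for values in product([False, True], repeat=len(variables)):
        candidate = dict(zip(variables, values))
        if check_certificate(clauses, candidate):
            return candidate
    return None


def lying_solver(clauses: List[Clause]) -> Optional[Assignment]:
    """Toy stand-in for a faulty or malicious system."""
    return {1: True, 2: True, 3: True}


if __name__ == "__main__":
    formula = [[1, -2], [2, 3], [-1, -3]]
    print(accept(formula, honest_solver))  # a verified satisfying assignment
    print(accept(formula, lying_solver))   # None: the bogus answer is rejected
```

The gatekeeper never needs to know how the answer was found. Finding it may be expensive, but checking it is cheap. Claims of unsatisfiability need a richer certificate (SAT solvers can emit proof traces in formats such as DRAT), which is closer in spirit to checking a Lean proof.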

Here's the intuition that's making me doubt the utility of provably correct system design to avoiding bridge crashes:

I model the process leading up to a ship that doesn't crash into a bridge as having many steps.

1. Marine engineers produce a design for a safe ship

2. Management signs off on the design without cutting essential safety features

3. Shipwrights build it to spec without cutting any essential corners

4. The ship operator understands and follows the operations and maintenance manuals, without cutting any essential corners

5. Nothing out-of-distribution happens over the lifetime of the ship.

And to have a world where no bridges are taken out by cargo ships, repeat that 60,000 times.

It seems to me that provably safe design can help with step 1--but it's not clear to me that step 1 is where the fault happened with the Francis Scott Key bridge. Engineers can and do make bridge-destroying mistakes (I grew up less than 50 miles from the Tacoma Narrows bridge), but that feels rare to me compared to problems in the other steps: management does cut corners, builders do slip up, and O&M manuals do get ignored.

With verifiable probabilities of catastrophe, maybe a combination of regulation and insurance could incentivize makers and operators of ships to operate safely--but insurers already employ actuaries to estimate the probability of catastrophe, and it's not clear to me that the premiums charged to the MV Dali were incorrect. As for the Francis Scott Key, I don't know how insuring a bridge works, but I believe most of the same steps and problems apply.

(Addendum: The new Doubly-Efficient Debate paper on Google's latest LW post might make all of these messy principal-agent human-corrigibility type problems much more tractable to proofs? Looks promising.)

I totally agree in today's world! Today, we have management protocols which are aimed at requiring testing and record keeping to ensure that boats and ships are in the state we would like them to be in. But these rules are subject to corruption and malfeasance (such as the 420 Boeing jets which incorporated defective parts and yet which are currently flying with passengers: https://doctorow.medium.com/https-pluralistic-net-2024-05-01-boeing-boeing-mrsa-2d9ba398bd54 )

But it appears we are rapidly moving to a world in which much of the physical labor will be done by robots and in which each physical system will have a corresponding "digital twin" (eg. https://www.nvidia.com/en-us/omniverse/solutions/digital-twins/ ).

In that world, we can implement provable formal rules governing every system, from raw materials, to manufacture, to supply chain, to operations, and to maintenance.

In an AI world, much more sophisticated malfeasance can occur. Formal models of domains, with proofs of adherence to rules and protection against adversaries, are the only way to ensure our systems are safe and effective.

The post's second Challenge is:

Challenge 2 – Most of the AI threats of greatest concern have too much complexity to physically model.

Setting aside for a moment the question of whether we can develop precise rules-based models of physics, GS-based approaches to safety would still need to determine how to formally model the specific AI threats of interest as well. For example, consider the problem of determining whether a given RNA or DNA sequence could cause harm to individuals or to the human species. This is a well-known area of concern in synthetic biology, where experts expect that risks, especially around the synthesis of novel viruses, will dramatically increase as more end-users gain access to powerful AI systems. This threat is specifically discussed in [3] as an area in which the authors believe that formal verification-based approaches can help:

I certainly agree that there are complex situations where we can't currently tell what's safe and what isn't. In that case we should clearly be incredibly conservative and not expose humans to systems with unclear safety properties! The burden is on the creator of a system to demonstrate safety!

Regarding DNA and RNA synthesis, I see the need for provable technologies at a much simpler and lower level than what you are talking about. Right now you can buy DNA and RNA synthesis machines without any kinds of controls on what they will synthesize from many suppliers for just a few thousand dollars. In a world in which the complete sequences of smallpox DNA and other extremely harmful pathogens are publicly available, this is completely insane!

It would be nice if we could automatically tell whether a DNA sequence was harmful or not. But, as you say, we are not at the point where AI can do that. But that doesn't mean we throw up our hands and say "Ok, go ahead and synthesize whatever you want! Here's the smallpox DNA in case you're interested!"

Our biohazard labs have strict rules and guidelines for what they can synthesize and how. Human committees must sign off on work that might inadvertently lead to human harm. We need at least that level of control on synthesis devices available in the open market. For example, sequences might need to be cryptographically signed by a governing body stating they are safe before they can be synthesized.

But how can we be sure that the hardware will require those digital signatures? That's where the "provable contract" hardened cryptographic technologies that Max and I describe come in. It needs to be impossible for adversaries to use synthesis machines to create unsigned potentially harmful sequences.

There is a whole large story about how that works. Fundamentally it involves secure digital hardware similar to Apple's Secure Enclave. But with provably effective tamper sensing which implements "zeroization" (deletion of cryptographic keys on detection of tampering). This needs to be integrated into the core operation of the device.

Similar technology needs to be incorporated into robots and other physical devices which can cause harm. The mathematical proof guarantee is that the device will only operate under conditions specified by formal "contract rules". Those rules can be chosen to be whatever is appropriate for the domain of interest. For biohazard DNA synthesis, it might involve a digital signature from a secure government database of safe sequences or from a regulatory committee overseeing new research.
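To make the "contract rule" idea concrete, here is a minimal Python sketch of a device that refuses to run unless a sequence carries a valid approval tag, and that zeroizes its key when tampering is detected. Everything here is an illustrative assumption: the class `SynthesisDevice`, the function `approve`, and the use of the standard library's HMAC as a stand-in for a real digital signature scheme. An actual design would presumably use asymmetric signatures inside tamper-sensing hardware, and the guarantee would come from a proof about that hardware rather than from Python code.

```python
import hashlib
import hmac
from typing import Optional


def approve(key: bytes, sequence: str) -> bytes:
    """Hypothetical regulator-side step: issue an approval tag for a sequence."""
    return hmac.new(key, sequence.encode(), hashlib.sha256).digest()


class SynthesisDevice:
    """Toy model of a synthesizer that only runs approved sequences."""

    def __init__(self, key: bytes) -> None:
        self._key: Optional[bytes] = key

    def on_tamper_detected(self) -> None:
        # Zeroization: discard the key so the device can no longer
        # validate (and therefore no longer run) anything.
        self._key = None

    def synthesize(self, sequence: str, tag: bytes) -> bool:
        """Return True only if the contract rule is met; otherwise refuse."""
        if self._key is None:
            return False
        expected = hmac.new(self._key, sequence.encode(), hashlib.sha256).digest()
        # Constant-time comparison avoids leaking how much of the tag matched.
        return hmac.compare_digest(expected, tag)


key = b"demo-key-not-for-real-use"
device = SynthesisDevice(key)
tag = approve(key, "ACGT")
```

With this setup, `device.synthesize("ACGT", tag)` is allowed, a modified sequence with the same tag is refused, and after `device.on_tamper_detected()` every request is refused.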

On one hand, while we should recognize that modeling techniques like discrete element analysis can produce quantitative estimates of real-world behavior – for example, how likely a drone is to crash, or how likely a bridge is to fail – we should not lose sight of the fact that such estimates are invariably just estimates and not guarantees. Additionally, from a practical standpoint, estimates of this sort for real-world systems most-often tend to be based on empirical studies around past results rather than prospective modeling. And to the extent that estimates are ever given prospectively about real-world systems, they are invariably considered to be estimates, not guarantees.

I think this is a critical point. Every engineering discipline has precise models of their domain. For example, I'm looking at Peter Childs' "Mechanical Design Engineering Handbook" which surveys the field and is full of detailed partial differential equations for the behavior of different components like bearings, gears, clutches, seals, springs, fasteners, etc. It also has many tables showing the values (with significant figures) of parameters like viscosity of lubricants and the fatigue load of ball bearings.

How did they get these models and parameters? It's a combination of inference from fundamental physics and experimental tests. Given more detailed physics understanding, fewer experiments are needed. And even with no physics, probabilistic models with PAC-like guarantees can be built purely from experiments if the test distribution is the same as the training distribution.
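One simple instance of a "PAC-like" guarantee from experiments alone is a one-sided Hoeffding bound on a failure rate. The sketch below assumes independent trials drawn from the same distribution the component will see in deployment (the condition mentioned above); the function name and parameters are hypothetical.

```python
import math


def failure_rate_upper_bound(failures: int, trials: int, delta: float) -> float:
    """One-sided Hoeffding bound.

    With probability at least 1 - delta over the i.i.d. sample, the true
    failure rate is at most the returned value.
    """
    if trials <= 0 or not 0 <= failures <= trials or not 0 < delta < 1:
        raise ValueError("need trials > 0, 0 <= failures <= trials, 0 < delta < 1")
    p_hat = failures / trials
    # P(p - p_hat >= t) <= exp(-2 * trials * t**2); solve for t at level delta.
    margin = math.sqrt(math.log(1.0 / delta) / (2.0 * trials))
    return min(1.0, p_hat + margin)


# Example: no failures observed in 10,000 tests, confidence 1 - 1e-6.
bound = failure_rate_upper_bound(failures=0, trials=10_000, delta=1e-6)
```

The bound shrinks only like one over the square root of the number of trials, which is one way to see why additional physics knowledge is so valuable: it replaces experiments that would otherwise be needed to tighten the guarantee.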

To build safe systems, engineers do computations on these models and bound the probability of system failure (hopefully to a low value!). These computations should be purely mathematical with precise answers verified by mathematical proofs. Unfortunately, that has not been the culture in "scientific computing" or "numerical methods" in the United States, where practitioners have often suggested that you need "numerical analysts" to look over your code and ensure that it is "numerically stable"!

In my book, that culture has been a disaster, leading to many failures and deaths. The Europeans developed a culture of "verified scientific computing" (for example, I was enamored of this book: "Numerical Toolbox for Verified Computing I: Basic Numerical Problems Theory, Algorithms, and Pascal-XSC Programs (Springer Series in Computational Mathematics)", which shows how to produce provably correct enclosures around solutions to equations, find global optima, etc.). These days these models can be formalized in a system like Lean and properties deduced using mathematical proof.
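To give a flavor of what an "enclosure" means, here is a minimal Python sketch that brackets a square root between two rationals. It is not taken from the book: verified-computing tools typically use floating-point intervals with outward rounding, whereas this sketch swaps in exact rational arithmetic from the standard library so that the invariant is easy to see. The function name `enclose_sqrt` is hypothetical.

```python
from fractions import Fraction
from typing import Tuple


def enclose_sqrt(a: Fraction, width: Fraction) -> Tuple[Fraction, Fraction]:
    """Return (lo, hi) with lo*lo <= a <= hi*hi and hi - lo <= width."""
    if a < 0 or width <= 0:
        raise ValueError("need a >= 0 and width > 0")
    lo, hi = Fraction(0), max(Fraction(1), a)
    # Invariant: lo^2 <= a <= hi^2, maintained in exact arithmetic,
    # so the true square root always lies in [lo, hi].
    while hi - lo > width:
        mid = (lo + hi) / 2
        if mid * mid <= a:
            lo = mid
        else:
            hi = mid
    return lo, hi


lo, hi = enclose_sqrt(Fraction(2), Fraction(1, 10**6))
```

Unlike a floating-point result that is "probably accurate to six digits", the returned pair comes with a statement that can be checked exactly: `lo * lo <= 2 <= hi * hi`.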

How much accuracy do we need and what should we do about incorrect models? For safety, the answer depends on the strengths of the adversary. If your adversary is a typical human, then often weak models suffice. Powerful AI adversaries, however, will look for weaknesses in the design model and try to exploit them. To have any hope of providing actual security, these processes must be modeled and accounted for.

Most engineering disciplines actually have a kind of "stack" of models, each layer justified by the layer below. For example, in chip design there is a digital circuit layer on top of a physical layout layer on top of detailed electrodynamics and solid state materials, etc. Today's chips do a pretty good job of isolating the layers, so a digital circuit laid out according to the chip process node's design rules will actually implement the intended digital behavior.

But attacks like "Rowhammer" show what can happen when an adversary can affect a layer below the digital layer. By repeatedly accessing certain memory cells, an attacker can flip nearby bits and use this to extract cryptographic keys and violate security "guarantees". As DRAMs have gotten denser, the problem has gotten worse. The human-designed mitigation solutions have led to a cat and mouse game that the attackers are winning. I believe that's a great example of a critically important design problem which can only be solved by using AI and formal methods. The ideal solution would be to design chips which provably implement the intended digital model. But we have a lot of bad DRAM chips out there! I believe we need a formal electrodynamic model of the phenomenon and a provable representation of all access patterns which lead to violations. Then we need verified AI program synthesis to resynthesize programs to provably not generate any of the bad access patterns. I don't think any other technique has a chance of dealing with that kind of issue. And you can be sure that advanced AIs will be hammering away at weak computational infrastructure.
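As a very rough sketch of what a "bad access pattern" predicate could look like, the Python below flags any trace in which a single DRAM row is activated too many times within a sliding window of accesses. This is a deliberately simplified assumption: real Rowhammer conditions depend on refresh timing, row adjacency, and the specific chip, and the `window` and `max_activations` parameters are hypothetical. It also only checks one concrete trace, whereas the goal described above is a proof covering every trace a program can generate.

```python
from collections import Counter, deque
from typing import Deque, Iterable


def trace_is_safe(rows: Iterable[int], window: int, max_activations: int) -> bool:
    """True if no row is activated more than max_activations times
    within any `window` consecutive accesses of the trace."""
    recent: Deque[int] = deque()
    counts: Counter = Counter()
    for row in rows:
        recent.append(row)
        counts[row] += 1
        if len(recent) > window:
            # Slide the window: forget the oldest access.
            counts[recent.popleft()] -= 1
        if counts[row] > max_activations:
            return False
    return True


benign = [1, 2, 3, 1, 2, 3]
hammering = [7, 8, 7, 8, 7]
```

With `window=4` and `max_activations=2` the `benign` trace passes, while `hammering` fails for `window=5` and `max_activations=2`. A formally derived predicate of this general shape is what a verified program synthesizer would need to prove its output never violates.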

I think it is important to be concrete. Jean-Baptiste Jeannin's research interest is "Verification of cyber-physical systems, in particular aerospace applications". In 2015, nearly a decade ago, he published "Formal Verification of ACAS X, an Industrial Airborne Collision Avoidance System". ACAS X is now deployed by FAA. So I would say this level of formal verification is a mature technology now. It is just that it has not been widely adopted outside of aerospace applications, mostly due to cost issues and more importantly people not being aware that it is possible now.

Thanks! His work looks very interesting! He recently did this nice talk which is very relevant: "Formal Verification in Scientific Computing"

From what I gather reading the ACAS X paper, it formally proved a subset of the whole problem and many issues uncovered by using the formal method were further analyzed using simulations of aircraft behaviors (see the end of section 3.3). One of the assumptions in the model is that the planes react correctly to control decisions and don't have mechanical issues. The problem space and possible actions were well-defined and well-constrained in the realistic but simplified model they analyzed. I can imagine complex systems making use of provably correct components in this way but the whole system may not be provably correct. When an AI develops a plan, it could prefer to follow a provably safe path when reality can be mapped to a usable model reliably, and then behave cautiously when moving from one provably safe path to another. But the metric for 'reliable model' and 'behave cautiously' still require non-provable decisions to solve a complex problem.

I agree that would be better than what we usually have now! It is also more in line with the "Swiss Cheese" approach to security. From a practical perspective, we are probably going to have to do that for some time: components with provable properties combined in unproven ways. But every aspect which is unproven is a potential vulnerability.

The deeper question is whether there are situations where it has to be that way. Where there is some barrier to modeling the entire system and formally combining correctness and security properties of components to obtain them for the whole system.

Certainly there are hardware and software components whose detailed behavior is computationally complex to predict in advance (eg. searching for solutions to SAT problems or inverting hash functions). So you are unlikely to be able to prove theorems like "For every specification of these n bits in this SAT problem, it will take f(n) time to discover a satisfying value for the remaining bits". But that's fine! It's just that you shouldn't make the correctness or security of your system depend on that! For example, you might put a time bound on the search and have a failsafe path if it doesn't succeed by then. That software does have a provable time bound.
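Here is a minimal Python sketch of that design pattern: a search with a hard step budget and a failsafe result. The step budget is used as a stand-in for a time bound, and the names `bounded_search`, `max_steps`, and `failsafe` are hypothetical. The loop body runs at most `max_steps` times regardless of how hard the underlying problem is, which is the kind of property that is easy to prove even when the search's success is not.

```python
from itertools import product
from typing import Callable, Tuple

Bits = Tuple[int, ...]


def bounded_search(
    n_bits: int,
    is_solution: Callable[[Bits], bool],
    max_steps: int,
    failsafe: Bits,
) -> Tuple[Bits, bool]:
    """Test at most max_steps candidates; return (answer, found).

    If no solution turns up within the budget, return the failsafe value.
    """
    for step, candidate in enumerate(product((0, 1), repeat=n_bits)):
        if step >= max_steps:
            break  # budget exhausted: stop searching, take the failsafe path
        if is_solution(candidate):
            return candidate, True
    return failsafe, False


def target(bits: Bits) -> bool:
    return bits == (1, 0, 1)


found_answer = bounded_search(3, target, max_steps=8, failsafe=(0, 0, 0))
fallback_answer = bounded_search(3, target, max_steps=3, failsafe=(0, 0, 0))
```

The caller must handle both outcomes, so the correctness of the overall system rests on the budget and the failsafe path, not on the search succeeding.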

So, in general, systems need to be designed to be correct and safe. If you can't put provable bounds on the safety of a system, then I would argue that you have no business exposing the public to that system.

It would be great to start collecting examples of subcomponents or compositional designs which are especially difficult to prove properties about. My sense is that virtually all of these formal analyses will be done by AIs and not by humans. And I think it will be important to develop libraries and models of problems which are easy to solve formally and provide formal guarantees about. And those which are more difficult.

Thinking about the software verification case, I would argue that for every decently written piece of software today, the programmer has an internal argument in their head as to why it is correct and not vulnerable to attacks. Humans are fallible, so their argument may not be correct. But if it is correct, then it shouldn't be difficult to formalize it into a precise formal proof. The "de Bruijn Factor" (https://www.cs.ru.nl/~freek/factor/factor.pdf ) measures how much bigger a formal proof of something is than an informal description. It seems to be between 4 and 10 in current formal systems. So, if a human programmer has confidence in the correctness and security of their code, it should only be a small factor more work for an AI to formally prove that. If the programmer doesn't have that confidence, then I think we have no business deploying it anywhere it might harm humans.

Thinking more about the question of whether there are properties which we believe but for which we have no proof: what do we do about those today, and what should we do about them in an intended provable future?