This post is a slightly adapted summary of two Twitter threads, here and here.

The t-AGI framework

As we get closer to AGI, it becomes less appropriate to treat it as a binary threshold. Instead, I prefer to treat it as a continuous spectrum defined by comparison to time-limited humans. I call a system a t-AGI if, on most cognitive tasks, it beats most human experts who are given time t to perform the task.
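This definition stacks two quantifiers: "most tasks" and "most experts". The small Python predicate below is one way to make that explicit. It is only a sketch, and it rests on assumptions the definition leaves open. Each task is assumed to be scored as head-to-head comparisons against individual experts who were all given the same time t. Some judge is assumed to decide whether the AI's output beats each expert's. "Most" is read as strictly more than half. The names `TaskResult`, `beats_most`, and `is_t_agi` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TaskResult:
    """Hypothetical record of one cognitive task at a single fixed time budget t."""
    name: str
    # One entry per expert given time t: True if the AI's output was judged better.
    beats_expert: list[bool]


def beats_most(wins: list[bool]) -> bool:
    """Assumed reading of "most": strictly more than half of the comparisons are wins."""
    return len(wins) > 0 and 2 * sum(wins) > len(wins)


def is_t_agi(results: list[TaskResult]) -> bool:
    """True if, on most tasks, the AI beats most of the experts who were given time t."""
    tasks_won = [beats_most(r.beats_expert) for r in results]
    return beats_most(tasks_won)


# Toy, made-up comparisons at one time budget t (illustrative only, not real data).
results = [
    TaskResult("trivia", [True, True, False]),
    TaskResult("image recognition", [True, True, True]),
    TaskResult("grammaticality", [False, False, True]),
]
print(is_t_agi(results))  # prints True: two of the three tasks are won against most experts
```

The two thresholds apply separately. Under this reading, a system can count as a t-AGI while losing outright on a sizeable minority of tasks.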

What does that mean in practice?

- A 1-second AGI would need to beat humans at tasks like quickly answering trivia questions, basic intuitions about physics (e.g. "what happens if I push a string?"), recognizing objects in images, recognizing whether sentences are grammatical, etc.

- A 1-minute AGI would need to beat humans at tasks like answering questions about short text passages or videos, common-sense reasoning (e.g. Yann LeCun's gears problems), simple computer tasks (e.g. use Photoshop to blur an image), justifying an opinion, looking up facts, etc.

- A 1-hour AGI would need to beat humans at tasks like doing problem sets/exams, writing short articles or blog posts, most tasks in white-collar jobs (e.g. diagnosing patients, giving legal opinions), doing therapy, doing online errands, learning rules of new games, etc.

- A 1-day AGI would need to beat humans at tasks like writing insightful essays, negotiating business deals, becoming proficient at playing new games or using new software, developing new apps, running scientific experiments, reviewing scientific papers, summarizing books, etc.

- A 1-month AGI would need to beat humans at coherently carrying out medium-term plans (e.g. founding a startup), supervising large projects, becoming proficient in new fields, writing large software applications (e.g. a new OS), making novel scientific discoveries, etc.

- A 1-year AGI would need to beat humans at... basically everything. Some projects take humans much longer (e.g. proving Fermat's Last Theorem), but they can almost always be decomposed into subtasks that don't require full global context (even though that's often helpful for humans).

Some clarifications:

- I'm abstracting away from the question of how much test-time compute AIs get (i.e. how many copies are run, for how long). A principled way to think about this is probably something like: "what fraction of the world's compute is needed?". But in most cases I expect that the bottleneck is being able to perform a task *at all*; if they can, then they'll almost always be able to do it with a negligible proportion of the world's compute.

- Similarly, I doubt the specific "expert" threshold will make much difference. But it does seem important that we use experts, not laypeople, because the amount of experience that laypeople have with most tasks is so small. It's not really well-defined to talk about beating "most humans" at coding or chess; and it's not particularly relevant either.

- I expect that, for any t, the first 100t-AGIs will be *way* better than any human on tasks which only take time t. To reason about superhuman performance we can extend this framework to talk about (t,n)-AGIs which beat any group of n humans working together on tasks for time t. When I think about superintelligence I'm typically thinking about (1 year, 8 billion)-AGIs.

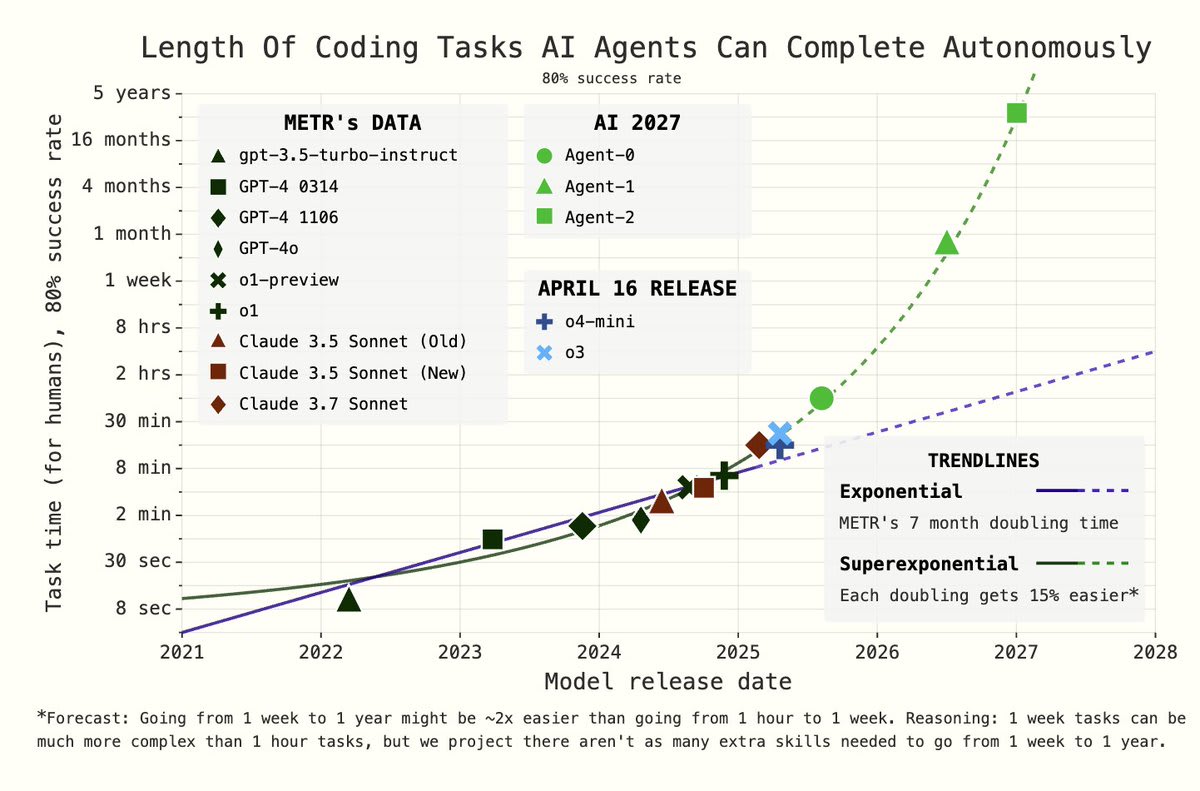

- The value of this framework is ultimately an empirical matter. But it seems useful so far: I think existing systems are 1-second AGIs, are close to 1-minute AGIs, and are a couple of years off from 1-hour AGIs. (FWIW I formulated this framework 2 years ago, but never shared it widely. From your perspective there's selection bias—I wouldn't have shared it if I'd changed my mind. But at least from my perspective, it gets points for being useful for describing events since then.)

And very briefly, some of the intuitions behind this framework:

- I think coherence over time is a very difficult problem, and one that humans still struggle with, even though (I assume) evolution optimized us hard for it.

- It's also been a major bottleneck for LLMs, for the principled reason that the longer the episode, the further off the training distribution they go.

- Training NNs to perform tasks over long time periods takes much more compute (as modelled in Ajeya Cotra's timelines report).

- Training NNs to perform tasks over long time periods takes more real-world time, so you can't gather as much data.

- There are some reasons to expect current architectures to be bad at this (though I'm not putting much weight on this; I expect fixes to arise as the frontier advances).

Predictions motivated by this framework

Here are some predictions—mostly just based on my intuitions, but informed by the framework above. I predict with >50% credence that by the end of 2025 neural nets will:

- Have human-level situational awareness (understand that they're NNs, how their actions interface with the world, etc.; see definition here)

- Beat any human at writing down effective multi-step real-world plans. This one proved controversial; some clarifications:

  - I think writing down plans doesn't get you very far; the best plans are often things like "try X, see what happens, iterate".

  - It's about beating any human (across many domains), not beating the best human in each domain.

  - By "many domains" I don't mean literally all of them, but a pretty wide range. E.g. averaged across all businesses that McKinsey has been hired to consult for, AI will make better business plans than any individual human could.

- Do better than most peer reviewers

- Autonomously design, code and distribute whole apps (but not the most complex ones)

- Beat any human on any computer task a typical white-collar worker can do in 10 minutes

- Write award-winning short stories and publishable 50k-word books

- Generate coherent 5-min films (note: I originally said 20 minutes, and changed my mind, but have been going back and forth a bit after seeing some recent AI videos)

- Pass the current version of the ARC autonomous replication evals (see section 2.9 of the GPT-4 system card; page 55). But they won't be able to self-exfiltrate from secure servers, or avoid detection if cloud providers try.

- 5% of adult Americans will report having had multiple romantic/sexual interactions with a chat AI, and 1% having had a strong emotional attachment to one.

- We'll see clear examples of emergent cooperation: AIs given a complex task (e.g. write a 1000-line function) in a shared environment cooperate without any multi-agent training.

The best humans will still be better (though much slower) at:

- Writing novels

- Robustly pursuing a plan over multiple days

- Generating scientific breakthroughs, including novel theorems (though NNs will have proved at least 1)

- Typical manual labor tasks (vs NNs controlling robots)

FWIW my actual predictions are mostly more like 2 years, but others will apply different evaluation standards, so 2.75 years (as of when the thread was posted) seems more robust. Also, they're not based on any OpenAI-specific information.

Lots to disagree with here ofc. I'd be particularly interested in:

- People giving median dates they expect these to be achieved

- People generating other specific predictions about what NNs will and won't be able to do in a few years' time

(expanding on my reply to you on Twitter)

For the t-AGI framework, maybe you should also specify that the human starts the task only knowing things that are written multiple times on the internet. For example, Ed Witten could give snap (1-second) responses to lots of string theory questions that are WAY beyond current AI, using idiosyncratic intuitions he built up over many years. Likewise, a chess grandmaster thinking about a board state for 1 second could crush GPT-4 or any other AI that wasn’t specifically and extensively trained on chess by humans.

A starting point I currently like better than “t-AGI” is inspired by the following passage in Cal Newport’s book Deep Work:

In the case of LLM-like systems, we would replace “smart recent college graduate” with “person who has read the entire internet”.

This is kinda related to my belief that knowledge-encoded-in-weights can do things that knowledge-encoded-in-the-context-window can’t. There is no possible context window that turns GPT-2 into GPT-3, right?

So when I try to think of things that I don’t expect LLM-like systems to be able to do, I imagine, for example, finding a person adept at tinkering and giving them a new machine to play with: a very strange machine, unlike anything on the internet. I ask the person to spend the next few weeks or months understanding that machine. So the person starts disassembling it and reassembling it, futzing with one of the mechanisms to see how it affects the other mechanisms, trying replacing things, tightening or loosening things, and so on. It might take a few weeks or months, but they’ll eventually build for themselves an exquisite mental model of this machine, and they’ll be able to answer questions about it and suggest improvements to it, even with only 1 second of thought, that far exceed what an LLM-like AI could ever do.

Maybe you’ll say that example is unfair because that’s a tangible object and robotics is hard. But I think there are intangible examples that are analogous, like people building up new fields of math. As an example from my own life, I was involved in early-stage design for a new weird type of lidar, including figuring out basic design principles and running trade studies. Over the course of a month or two of puzzling over how best to think about its operation and estimate its performance, I wound up with a big set of idiosyncratic concepts, with rich relationships between them, tailored to this particular weird new kind of lidar. That allowed me to have all the tradeoffs and interrelationships at the tip of my tongue. If someone suggested using a lower-peak-power laser, I could immediately start listing off all the positive and negative consequences for its performance metrics, and then start listing possible approaches to mitigating the new problems, etc., even if that particular question hadn’t come up before. The key capability here is not what I’m doing in the one second of thought before responding to that question; rather, it’s what I was doing in the previous month or two, as I was learning, exploring, building concepts, etc., all specific to this particular gadget for which no remotely close analogue existed on the internet.

I think a similar thing is true in programming, and that the recent success of coding assistants is just because a whole lot of coding tasks are not too deeply different from something-or-other on the internet. If a human had hypothetically read every open-source codebase on the internet, I think they’d more-or-less be able to do all the things that Copilot can do without having to think too hard about it. But when we get to more unusual programming tasks, where the hypothetical person would need to spend a few weeks puzzling over what’s going on and what’s the best approach, even if that person has previously read the whole internet, then we’re in territory beyond the capabilities of LLM programming assistants, current and future, I think. And if we’re talking about doing original science & tech R&D, then we get into that territory even faster.

Yeah, I agree that I'm conveying the implicit prediction that, even though not all one-month tasks will fall at once, they'll fall closer together than you would otherwise expect without this framework.

I think I still disagree with your point, as follows. I agree that AI will soon do passably well at summarizing 10k-word books, because the task is not very "sharp" (i.e. you get gradual rather than sudden returns to skill differences). But I think it will take significantly longer for AI to beat the quality of summary produced by a median expert in 1 month, because that expert's summary will in fact explore a rich, hierarchical, interconnected space of concepts from the novel (novel concepts, if you will).