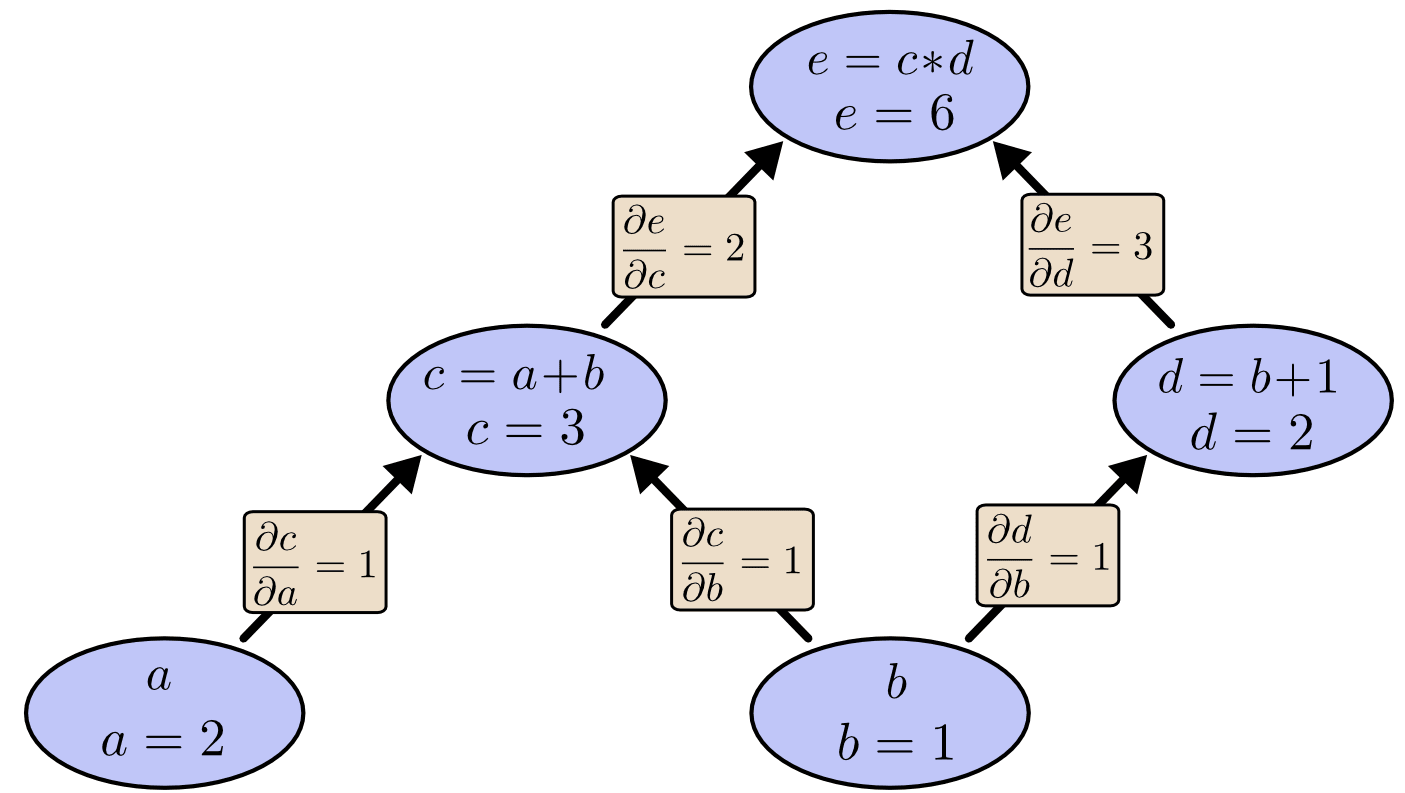

In my life I have never seen a good one-paragraph[1] explanation of backpropagation so I wrote one.

The most natural algorithms for calculating derivatives are done by going through the expression syntax tree[2]. There are two ends in the tree; starting the algorithm from the two ends corresponds to two good derivative algorithms, which are called forward propagation (starting from input variables) and backward propagation respectively. In both algorithms, calculating the derivative of one output variable with respect to one input variable actually creates a lot of intermediate artifacts. In the case of forward propagation, these artifacts mean you get the derivatives of all output variables with respect to that one input variable for ~free, and in backward propagation you get the derivatives of that one output variable with respect to all input variables for ~free. Backpropagation is used in machine learning because usually there is only one output variable (the loss, a number representing the difference between model prediction and reality) but a lot of input variables (parameters; on the scale of millions to billions).

[1] This blogpost by Christopher Olah has the clearest multi-paragraph explanation. Credits for the image too.

[2] Actually a directed acyclic graph in the multivariable case.
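To make the "~free" part concrete, here is a minimal sketch of both algorithms in plain Python. Everything in it (the `Dual` and `Node` classes, the helpers `dsin`, `nsin`, `backward`, and the toy function `f`) is a made-up illustration, not how any real autodiff library is implemented. One forward pass seeded on input `a` yields the derivatives of both outputs with respect to `a`; one backward pass from output `y1` yields the derivatives of `y1` with respect to both inputs.

```python
import math

# Forward mode: every value carries its derivative w.r.t. ONE chosen input.
class Dual:
    def __init__(self, val, dot=0.0):
        self.val, self.dot = val, dot
    def __add__(self, other):
        return Dual(self.val + other.val, self.dot + other.dot)
    def __mul__(self, other):
        # product rule
        return Dual(self.val * other.val,
                    self.dot * other.val + self.val * other.dot)

def dsin(x):
    return Dual(math.sin(x.val), math.cos(x.val) * x.dot)

# Reverse mode: record the graph, then sweep back from ONE chosen output.
class Node:
    def __init__(self, val, parents=()):
        self.val = val
        self.parents = parents  # tuple of (parent_node, local_derivative)
        self.grad = 0.0
    def __add__(self, other):
        return Node(self.val + other.val, ((self, 1.0), (other, 1.0)))
    def __mul__(self, other):
        return Node(self.val * other.val,
                    ((self, other.val), (other, self.val)))

def nsin(x):
    return Node(math.sin(x.val), ((x, math.cos(x.val)),))

def backward(out):
    # Topologically order the graph so each node's grad is complete
    # before it is pushed to its parents.
    order, seen = [], set()
    def visit(n):
        if id(n) in seen:
            return
        seen.add(id(n))
        for parent, _ in n.parents:
            visit(parent)
        order.append(n)
    visit(out)
    out.grad = 1.0
    for n in reversed(order):
        for parent, local in n.parents:
            parent.grad += n.grad * local  # chain rule

# Toy function with two inputs and two outputs (hypothetical example).
def f(a, b, sin):
    ab = a * b  # intermediate shared by both outputs
    return ab + sin(a), ab * b

# Forward: seed a with derivative 1, get d(y1)/da and d(y2)/da in one pass.
fwd_y1, fwd_y2 = f(Dual(2.0, 1.0), Dual(3.0, 0.0), dsin)

# Reverse: one sweep from y1 gives d(y1)/da and d(y1)/db.
a_r, b_r = Node(2.0), Node(3.0)
rev_y1, rev_y2 = f(a_r, b_r, nsin)
backward(rev_y1)
```

With millions of inputs and a single loss, the reverse sweep is the one that gives the whole gradient in a single pass, which is the point made in the paragraph above.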

Raw feelings: I am kind of afraid of writing reviews for LW. The writing prompt hints at very high-effort thinking. My vague memory of other people's reviews also feels high effort. The "write a short review" ask doesn't really counter this at all.

It is sad and annoying that if you do a mediocre job (according to the receiver), doing things even for free (volunteer work/gifting) can sabotage the receiver along the dimension you're supposedly helping.

This is super vague the way I wrote it, so examples.

Example 1. Bob wants to upgrade and buy a new quality headphone. He has a $300 budget. His friend Tim, not knowing his budget, bought a $100 headphone for Bob. (Suppose second-hand headphones are worthless.) Now Bob cannot just spend $300 to get a quality headphone. He would also waste Tim's $100 which counterfactually could have been used to buy something else for Bob. So Bob is stuck with using the $100 headphone and spending the $300 somewhere else instead.

Example 2. Andy, Bob, and Chris are the only three people who translate Chinese books to English for free as a hobby. Because there are so many books out there, it is often not worth it to re-translate a book even if the previous translation is bad, because spending that time to translate a different book is just more helpful to others. Andy and Bob are pretty good, but Chris absolutely sucks. His translations are not unreadable, but they are just barely better than machine translation. Now Chris has taken over to translate book X, which happens to be a pretty good book. The world is now stuck with Chris' poor translation on book X with Andy and Bob never touching it again because they have other books to work on.

Allocation of blame/causality is difficult, but I think you have it wrong.

ex. 1 ... He would also waste Tim's $100 which counterfactually could have been used to buy something else for Bob. So Bob is stuck with using the $100 headphone and spending the $300 somewhere else instead.

No. TIM wasted $100 on a headset that Bob did not want (because he planned to buy a better one). Bob can choose whether to hide this waste (at a cost of the utility loss by having $300 and a worse listening experience, but a "benefit" of misleading Tim about his misplaced altruism), or to discard the gift and buy the headphones like he'd already planned (for the benefit of being $300 poorer and having better sound, and the cost of making Tim feel bad but perhaps learning to ask before wasting money).

ex. 2 The world is now stuck with Chris' poor translation on book X with Andy and Bob never touching it again because they have other books to work on.

Umm, here I just disagree. The world is no worse off for having a bad translation than having no translation. If the bad translation is good enough that the incremental value of a good translation doesn't justify doing it, then that is your answer. If it's not valuable enough to change the marginal decision to translate, then Andy or Bob should re-translate it. Either way, Chris has improved the value of books, or has had no effect except wasting his own time.

Now Bob cannot just spend $300 to get a quality headphone. He would also waste Tim's $100

That's a form of sunk cost fallacy, a collective "we've sacrificed too much to stop now".

Andy and Bob never touching it again because they have other books to work on

That doesn't follow: the other books would've also been there without the existence of this book's poor translation. If the poor translation eats some market share, so that competing with it is less appealing, that could be a valid reason.

One of my pet peeves is that the dropcaps in gwern's articles are really, really offputting and most of the time unrecognizable, even though gwern's articles are so valuable that he has a lot of weirdness points in my head and I will still read his stuff regardless. Most of the time I just guess the first letter.

I hate dropcaps in general, but gwern's are the ugliest I have come across.

image source: https://gwern.net/everything

Obsidian ended up being less of a thinking notepad and more of a faster index of things I have read before. Links and graphs are mostly useless but they make me feel good about myself. Pulling numbers out of my ass, I estimate it takes me 15s to find something I have read and pasted into Obsidian vs 5-30 minutes before.

A decision theorist walks into a seminar by Jessica Hullman

...This is Jessica. Recently overheard (more or less):

SPEAKER: We study decision making by LLMs, giving them a series of medical decision tasks. Our first step is to infer, from their reported beliefs and decisions, the utility function under revealed preference assump—

AUDIENCE: Beliefs!? Why must you use the word beliefs?

SPEAKER [caught off guard]: Umm… because we are studying how the models make decisions, and beliefs help us infer the scoring rule corresponding to what they give us.

AUDIENCE: But it

What coding prompts (AGENTS.md / cursor rules / skills) do you guys use? It seems exceedingly difficult to find good ones. GitHub is full of unmaintained & garbage `awesome-prompts-123` repos. I would like to learn from other people's prompts to see what things AIs keep getting wrong and what tricks people use.

Here are mine for my specific Python FastAPI SQLAlchemy project. Some parts are AI generated, some are handwritten; it should be pretty obvious which. This was built iteratively, whenever the AI repeatedly failed a type of task.

AGENTS.md

# Repository Guide

It is interesting how the AI agent prompts seem to have mostly converged to XML, but system prompts from the LLM companies are in markdown.

Just for documentation, here are a bunch of people on Hacker News complaining about rationality: https://news.ycombinator.com/item?id=44317180. I have not formed any strong opinion on whether these complaints are true. It feels like they are wrong on the object level, but perception is also important.

I think people (myself included) really underestimated this rather trivial statement that people don't really learn about something when they don't spend the time doing it/thinking about it. People even measure mastery by hours practiced and not years practiced, but I still couldn't engrave this idea deep enough into my mind.

I currently don't have much writable evidence about why I think people underestimated this fact, but I think it is true. Below are some things that I have changed my mind/realised after noticing this fact.

- cached thoughts, on yourself

[Draft] It is really hard to communicate the level/strength of basically anything on a sliding scale, but especially things that could not make any intuitive sense even if you stated a percentage. One recent example I encountered is expressing what is in my mind the optimal tradeoff between reading quickly and thinking deeply to achieve the best learning efficiency.

Not sure what the best way is to deal with the above example, and other situations where a percentage doesn't make sense.

But where percentage makes sense, there are still two annoying problems. 1....

The Fight For Slow And Boring Research (Article from Asterisk)

This article talks about how the US's federal (National Institutes of Health / National Science Foundation) funding cut for science starting from 2024/early 2025 may cause universities to create more legible research because other funders (philanthropies, venture capital, industry) value clear communication. This is a new idea to me.

The ERROR Project: https://error.reviews/

Quoting Malte Elson

...The very short description of ERROR is that we pay experts to examine important and influential scientific publications for errors in order to strengthen the culture of error checking, error acceptance, and error correction in our field. As in other bug bounty programs, the payout scales with the magnitude of errors found. Less important errors pay a smaller fee, whereas more important errors that affect core conclusions yield a larger payout.

We expect most published research to contain at least s

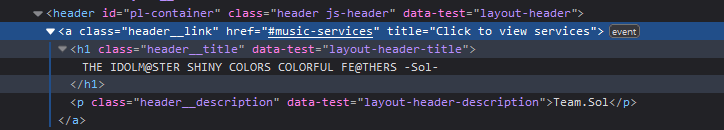

Starting today I am going to collect a list of tricks that websites use to prevent you from copying and pasting text + how to circumvent them. In general, using uBlock Origin and an allow-right-click extension fixes most issues.

1. Using href (https://lnk.to/LACA-15863s, archive)

behavior: https://streamable.com/sxeblz

solution: use remove-attr in uBlock Origin: `lnk.to##.header__link:remove-attr(href)`

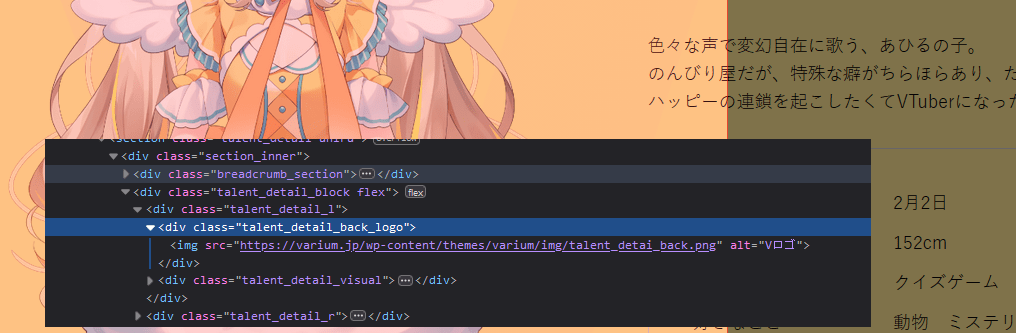

2. Using a background image to cover the text (https://varium.jp/talent/ahiru/, archive)

Note: this example is probably just incompetence.

behavior: https://stream...

Many people don't seem to know when and how to invalidate the cached thoughts they have. I noticed an instance of this in my dad, who is unable to invalidate his cached model of a person. He is probably still modelling >50% of me as who I was >5 years ago.

The Intelligent Social Web briefly talked about this for other reasons.

...A lot of (but not all) people get a strong hit of this when they go back to visit their family. If you move away and then make new friends and sort of become a new person (!), you might at first think this is just who you are now. But t

Update: Brushing after eating acidic food is likely fine.

Context: 7 months ago, me in Adam Zerner's shortform:

I remember something about not brushing immediately after eating though. Here is a random article I googled. This says don't brush after eating acidic food, not sure about the general case.

https://www.cuimc.columbia.edu/news/brushing-immediately-after-meals-you-may-want-wait

“The reason for that is that when acids are in the mouth, they weaken the enamel of the tooth, which is the outer layer of the tooth,” Rolle says. Brushing immediately after consuming something acidic can damage the enamel layer of the tooth.

Waiting about 30 minutes before brushing allows tooth enamel to remineralize and build itself back up.

WARNING: I didn't read these papers except the conclusions

Should We Wait to Brush Our Teeth? A Scoping Review Regarding Dental Caries and Erosive Tooth Wear

Key messages: Although the available evidence lacked robust clinical studies, tooth brushing using fluoridated products immediately after an erosive challenge does not increase the risk of ETW [Erosive Tooth Wear] and can be recommended, which is in line with recommendations for dental caries prevention. Furt...

Thoughts inspired by Richard Ngo's[1] and LWLW's[2] quick takes

Warning: speculation but hedging words mostly omitted.

I don't think a consistent superintelligence which has a single[3] pre-existing terminal goal would be fine with a change in terminal goals. The fact that humans allow their goals to be changed is a result of us having contradicting "goals". As intelligence increases or more time passes, incoherent goals will get merged, eventually into a consistent terminal goal. After this point a superintelligence will not change its...

How I use AI for coding.

I wrote this in like 10 minutes for quick sharing.

- I am not a full-time coder; I am a student who codes like 15-20 hours a week.

- Investing too much time in writing good prompts makes little sense. I go with the defaults and add pieces of nudges as needed. (See one of my AGENTS.md at the end)

- Mainly Codex (cloud) and Cursor. Claude Code works, but being able to easily revert is helpful, so Cursor is better.

- I still try out Claude Code for small pieces of edits, but it doesn't feel worth it.

- I have no idea why people like Claude Code so much

A common failure mode in group projects is that students will break up the work into non-overlapping parts, and proceed to stop giving a fuck about others' work afterwards because it is not their job anymore.

This especially causes problems at the final stage where they need to combine the work and make a coherent piece out of it.

- No one is responsible for merging the work

- Lack of mutual communication during the process means that the work pieces cannot be nicely connected without a lot of modifications (which no one is responsible for).

At this point the dead...

Ranting about LangChain, a Python library for building stuff on top of LLM calls.

LangChain is a horrible pile of abstractions. There are many ways of doing the same thing. Every single function has a lot of gotchas (that don't even get mentioned in the documentation). Common usage patterns are hidden behind unintuitive, hard to find locations (callbacks have to be implemented as an instance of a certain class in a config TypedDict). Community support is non-existent despite the large number of users. Exceptions are often incredibly unhelpful with unreadable stac...

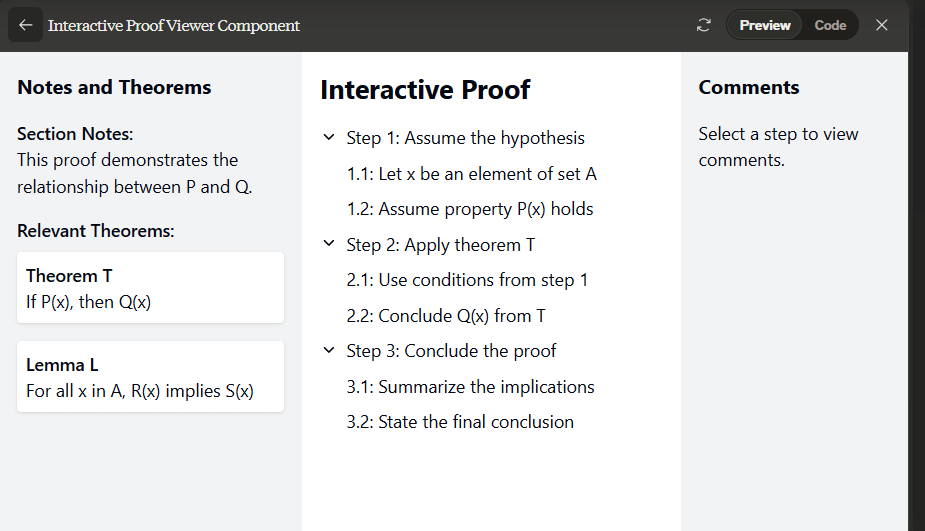

There are a few things I dislike about math textbooks and pdfs in general. For example, how math textbooks often use theorems that are from many pages ago and require switching back and forth. (Sometimes there isn't even a hyperlink!) I also don't like how proofs sometimes go way too deep into individual steps and sometimes are way too brief.

I wish something like this existed (Claude generated it for me, prompt: https://pastebin.com/Gnis891p)

4 reasons to talk about your problem with friends

This is advice I would give myself of 5 years ago; I am just storing it somewhere public and forcing myself to write. Writing seems like an important skill but I always feel like I have nothing to say.

- It forces you to think. Sometimes you aren't actually thinking about solutions to a problem even though it has been bothering you for a long time.

- For certain problems: a psychological feeling of being understood. For some people, getting the idea that "what I'm feeling is normal" is also important. It can be a false

Since we have meta search engines that aggregate search results from many search engines, is it time for us to get a meta language model* that gets results from ChatGPT, Bing, Bard, and Claude all at the same time, and then automatically ranks them, perhaps even merging all of the replies into a single reply?

*meta language model is an extremely bad name because of the company Meta and the fact that the thing I am thinking of isn't really a language model, but ¯\_(ツ)_/¯
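A rough sketch of what the fan-out-and-rank part could look like, in Python. All names here (`ask_all`, `rank`, the `model_a` / `model_b` stand-in backends) are hypothetical, no real chatbot API is called, and scoring replies by length is only a placeholder for whatever ranking would actually be used.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, List, Tuple

# A backend is any function that takes a prompt and returns a reply.
# Real ones would wrap each provider's own client; these are assumptions.
Backend = Callable[[str], str]

def ask_all(prompt: str, backends: Dict[str, Backend]) -> Dict[str, str]:
    """Send the same prompt to every backend concurrently."""
    with ThreadPoolExecutor(max_workers=len(backends)) as pool:
        futures = {name: pool.submit(fn, prompt) for name, fn in backends.items()}
        return {name: fut.result() for name, fut in futures.items()}

def rank(replies: Dict[str, str],
         score: Callable[[str], float]) -> List[Tuple[str, str]]:
    """Order (backend_name, reply) pairs from best to worst score."""
    return sorted(replies.items(), key=lambda kv: score(kv[1]), reverse=True)

# Stand-in backends so the sketch runs without any network access.
backends: Dict[str, Backend] = {
    "model_a": lambda p: f"Short answer to: {p}",
    "model_b": lambda p: f"A much longer and more detailed answer to: {p}",
}

replies = ask_all("What is backpropagation?", backends)
ranked = rank(replies, score=len)  # placeholder scorer: longer is "better"
```

Merging the replies into a single one would be another step after `rank`, probably by handing the top few replies back to one of the models.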

I always thought that the in-page redirects are fucking stupid, it should bring the text I want to see closer to eye level, not exactly at the top where even browser bars can block the text (happens when you go back from footnotes to article on LW).

Documenting a specific need of mine: LaTeX OCR software

tl;dr: use Mathpix if you use it <10 times per month or you are willing to pay $4.99 per month. Otherwise use SimpleTex

So I have been using Obsidian for note taking for a while now and I eventually decided to stop using screenshots and instead learn LaTeX so the formulas look better. At first I was relying on the website to show the original LaTeX commands but some websites (wiki :/) don't do that, and also I started reading math textbooks as PDF. Thus started my adventure to find a good and...

How likely are people to actually click through links to related materials in a post? It seems unlikely to me, to the point that I am wondering whether including them is actually useful.

related: https://www.lesswrong.com/posts/JZuqyfGYPDjPB9Lne/you-don-t-have-to-click-the-links

[Draft] Are memorisation techniques still useful in this age where you can offload your memory to digital storage?

I am thinking about using Anki for spaced repetition, and the memory palace thing also seems (on the surface) interesting, but I am not sure whether the investments will be worth it. (2023/02/21: Trying out Anki)

I am increasingly finding it more useful to remember your previous work so that you don't need to repeat the effort. Remembering workflow is important. (This means remembering things somewhere is very important, but I'm still not ...

[Draft]

Filter Information Harder (TODO: think of a good concept-handle)

Note: A long post usually means the post is very well thought out and serious, but this comment is not quite there yet.

Many people are too unselective about what they read, causing them to waste time reading low value material[1].

2 Personal Examples: 1. I am reading through Rationality: A-Z and there are way too many comments that are just bad, and even the top comments may not be worth reading on average, because I can probably spend the time better with reading more EY p...

Just read free will, really disappointed.

- not many interesting insights.

- a couple posts on determinism, ok but I already believed it

- some unrelated stuff: causality, thinking without notion of time... these are actually interesting but not needed

- moral consequence of 'no free will': I disregard the notion of moral responsibility

- EY having really strong sense of morality makes everything worse

- low quality discussions: people keep attacking strawmen

You should always include a summary when recommending anything

You are the one who is interested in that thing, the other person isn't (yet). It saves time for the other person to quickly determine whether they want to learn about it or not.

Related: include a tl;dr in posts?

A Chinese company did some AI-assisted reverse engineering on Claude Code and published their findings. After a brief look I don't think it is worth reading for me, but it is possibly interesting for someone actively working on Claude Code-like products.

I think I'm going to unite all my online identities. Starting to get tired of all my wasted efforts that only a single person or two will see.

Explicitly welcomed: