Looking back from 2041

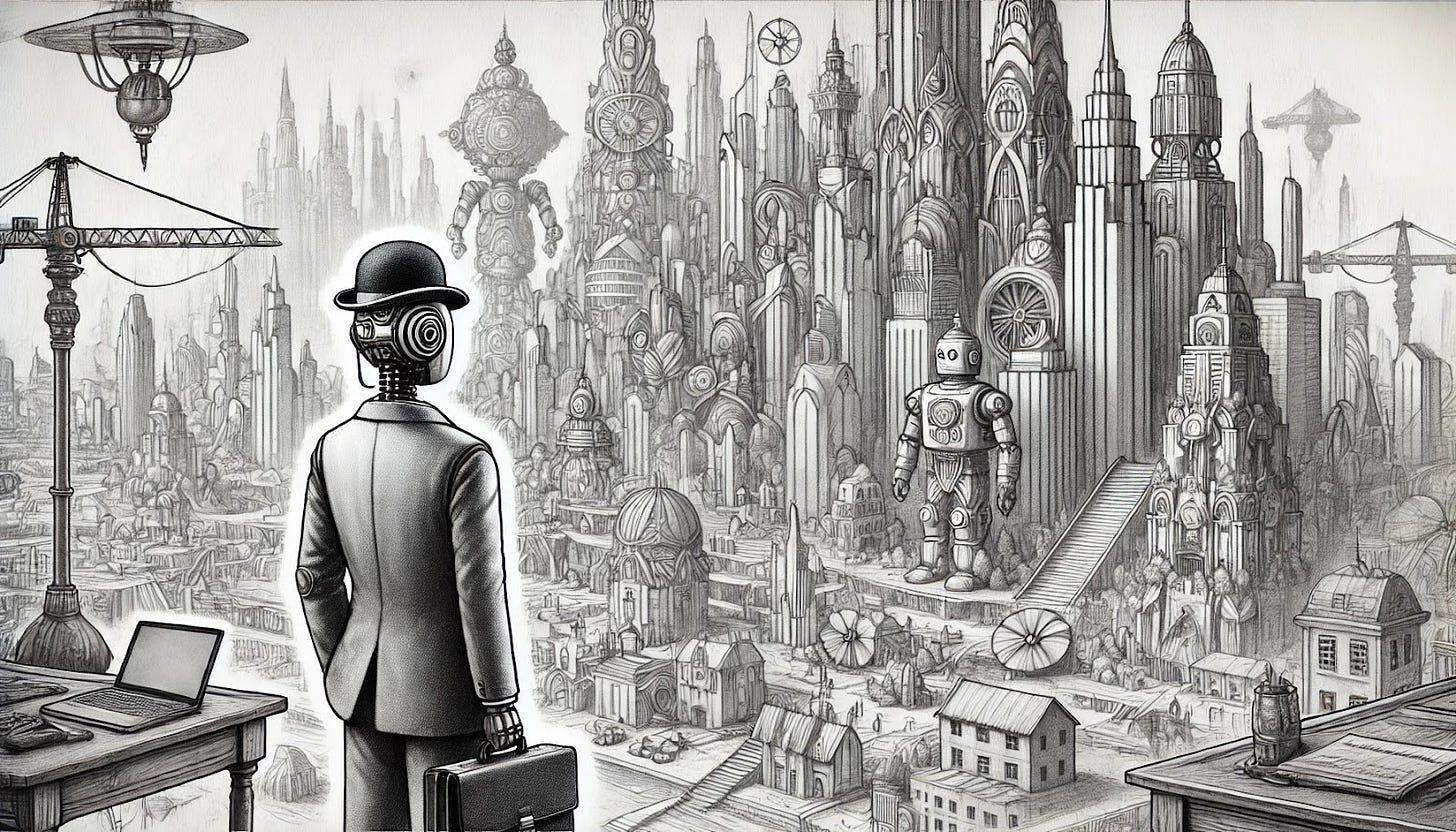

When people in the early 21st Century imagined an AI-empowered economy, they tended to project person-like AI entities doing the work. “There will be demand for agent-like systems,” they argued, “so we’ll see AI labs making agents which can then be deployed to various problems”.

We now know that that isn’t how it played out. But what led to the largely automated corporations that we see today? Let’s revisit the history:

- In the mid–late 2020s, as AI systems became reasonably reliable at many tasks, workers across the economy started consulting them more on an everyday basis

- People and companies started collecting more data showing exactly what they wanted from different tasks

- Systematizers and managers began building company workflows around the automation of tasks

- They would build systems to get things into shapes known to work especially well for automation — in many cases using off-the-shelf software solutions — and direct more of the work into these routes

- In many cases, the automation of a particular task involved brief invocations of specialized agent-like systems, but nothing like the long-term, general-purpose actors imagined in science fiction

- As best practices emerged for automating taskflows, in the early–mid 2030s we saw the start of widespread automation of automation — people used specialized AI systems (or consultants relying on such systems) to advise on which parts of the workflow should be automated and how

- For a while, human experts and managers kept a close eye on these automated loops, to catch and correct errors

- But it wasn’t long before these management processes themselves were largely automatable (or redundant), and humans stayed in the loop only for the high-level decisions about how to arrange different workflows and keep them integrated with the parts still done by humans

- Although there are some great anecdotes of failures during that time, the broad trend was that it became economically efficient to automate ever larger swathes of work

- At this stage, many companies were still run by people who were slow adopters of technology

- Over the mid and late 2030s, many of these went out of business, as they failed to be competitive on price

- There was significant social unhappiness at the shocks to the labour market

- Lagging behind the automation of existing workflows was the automation of creating new workflows

- Still, this was pioneered by management consultancies, who had access to some of the best data sets about what worked well in what circumstances

- The first fully automated corporations, with no human workers, were seen in 2032, but these were mostly gimmicks

- They had human boards playing a role somewhat like that of management — and they weren’t terribly successful

- Still, they proved the concept, and over the next few years the rise of fully automated management layers was tremendous

- Many companies in this period ended up with a human board of directors, and human employees performing some tasks which were particularly well-suited for humans, but effectively no humans in management

- It was not until the cheap general-purpose robots of the late 2030s that many firms eschewed human workers even for those physical tasks which hadn’t already merited specialised robots

- In many jurisdictions, there was until recently (and in several jurisdictions there still is) a requirement for the officers of the company to be human; and except in two small pioneering countries, it’s still required that the board of directors be human

- But even people nominally in these “human-required” roles are increasingly turning to AI systems to do much or all of their work

- This brings us to the ecosystem we see today, where many companies (and a clear majority of new companies) are essentially AI-run: the basic case for them is proposed by AI systems, and AI builds out all of their core systems

- Best practices continue to evolve, as they are now best practices for automated corporations, which differ from the best practices in the world where humans played important roles

- We did see a significant slide towards large conglomerates and “mega-corporations”, as the biggest companies, with the most data on which management practices worked well, were generally in the best position to start new firms

- This was largely checked by regulators intervening to break up monopolies

- Regulators showed greater willingness to take large actions here than in the human era, as there was normally less loss of efficiency from breaking up monopolies

- To date, the concerns of the doom-mongers about AI catastrophes from corporations without human oversight have not materialized: while automated firms have caused some harms (and consequent large lawsuits), research shows that on average these firms have caused significantly less litigable harm than the human-run firms they replaced

- Some researchers remain concerned about the possibility of “triggering events” for mass errors by automated systems

- In some countries, governments concerned about fragility have supported an ecosystem with varied management software; but in other jurisdictions we see effective monocultures

- There are widespread beliefs that these systems are doing damage to the fabric of society, but there is no consensus on the nature or degree of the alleged harms, and the companies accused usually paint the concerns as being grounded in unhappiness about the displacement of human workers

- However, concerns about systematizing unethical — and sometimes even illegal — behaviour in automated corporations have been vindicated; research indicates this is still happening at significant scale

- After the scandals and lawsuits of 2036, the US and EU each passed laws to ensure that the service providers would be liable (and in some cases criminally responsible) if their services were deemed to be accomplices in breaking the law

- Since then, the main service providers have been clear that their services cannot be used in such roles

- However, there is a large grey-market economy of small companies which provide (second-tier but unfettered) services to a smallish number of firms (which may use them for functions which benefit from a lack of scruples, and top-tier services for functions which do not)

- Occasionally their clients are found to have behaved illegally, and the small service companies then go bankrupt; but the bet has been good in expectation for their owners

- Various regulatory responses have been proposed

- Estonia has recently been innovating with automated regulators to keep up with automated corporations — the ability to have more thorough oversight of firms in principle makes up for their ability to act with little human oversight

- In most jurisdictions, regulators have been much slower to adopt new technology than the firms they are regulating

- This is partly because of resistance to the idea that legislating should be turned over to automated services, and partly due to highly organized lobbying campaigns (which, it is a safe bet, are themselves automated)

- Some are pushing for more international harmonization on these topics, arguing that much of the corporate abuse is not illegal per se, but arises from aggressively exploiting loopholes and differences between jurisdictions to extract competitive advantage

Today, there are a few instances of fully autonomous corporations, with no human control even in theory, as well as a larger number of fully autonomous AI agents, generally created by hobbyists or activists. However, while intriguing (and suggestive about how the future might unfold), to date these remain a tiny fraction.

And although AI for research has been one of the slower applications to find a niche for properly automated groups (in many cases, AI at the management level coordinates human researchers, who in turn make use of AI research assistants, though this varies by field), it still appears to have made a difference. On most measures, technological progress was around 1.5–2x faster in 2030–2035 than a decade earlier (2020–2025), and the second half of the 2030s was faster again. Moreover, in the last couple of years we have seen an increasing number of successes from purely automated research groups. A controversial AI-produced paper published in Science earlier this year claimed that the rate of technological progress is now ten times faster than it was at the turn of the century. Ever since I. J. Good first coined the idea of an intelligence explosion, 75 years ago last year, people have wondered whether we would someday see a blistering rate of progress that is hard to wrap our heads around. Perhaps we are, finally, standing on the cusp, and the automated corporations we have developed stand ready to work, integrating the fruits of that explosion back into human society.

Remarks

As is perhaps obvious: this is not a prediction that this is how the future will play out. Rather, it’s an exploration of one way that it might play out — and of some of the challenges that might arise if it did.

Thanks to Raymond Douglas, Max Dalton, Tom Davidson, and Adam Bales for helpful comments.