Summary: the moderators appear to be soft banning users with 'rate-limits' without feedback. A careful review of each banned user reveals it's common to be banned despite earnestly attempting to contribute to the site. Some of the most intelligent banned users have mainstream instead of EA views on AI.

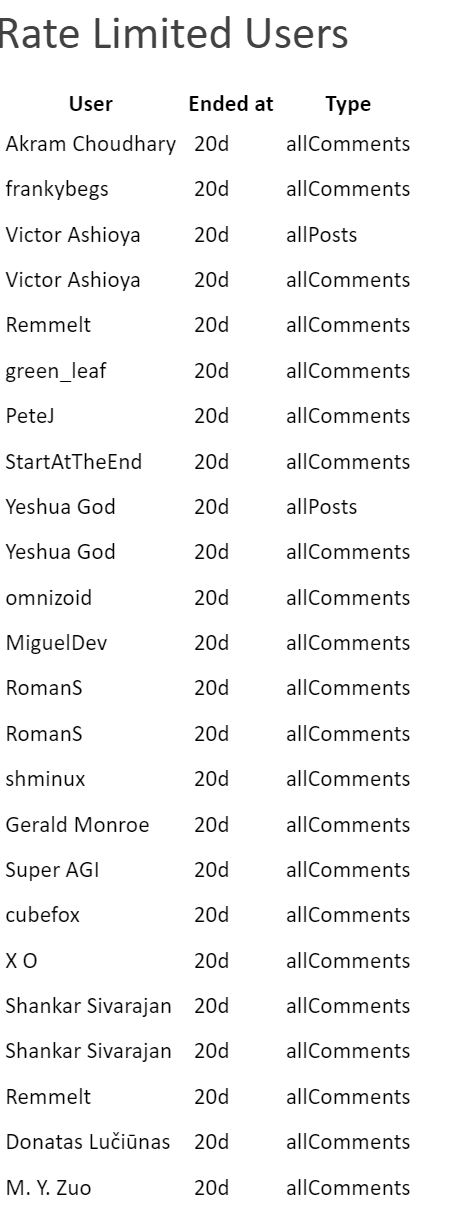

Note how the punishment lengths are all the same; I think it was a mass ban-wave of 3-week bans.

Gears to ascension was here but is no longer; I guess she convinced them it was a mistake.

Have I made any really dumb or bad comments recently?

https://www.greaterwrong.com/users/gerald-monroe?show=comments

Well I skimmed through it. I don't see anything. Got a healthy margin now on upvotes, thanks April 1.

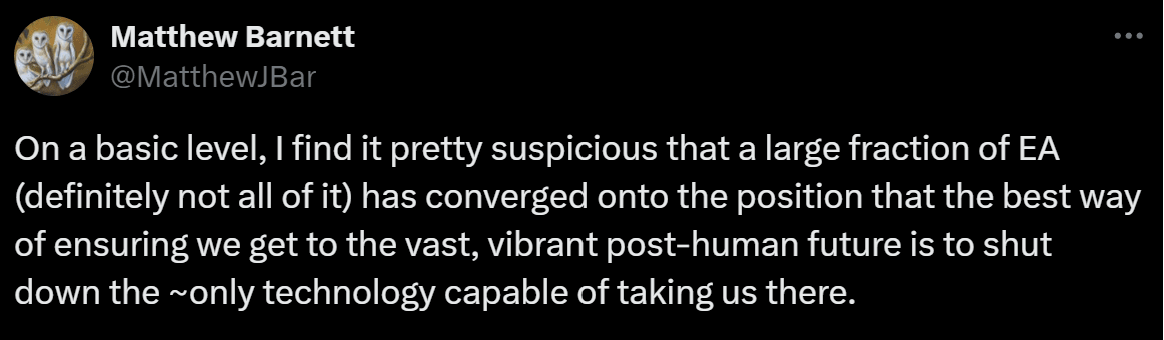

Over a month ago, I did comment this stinker. Here is what seems to be the same take by a very high reputation user here, @Matthew Barnett, on X: https://twitter.com/MatthewJBar/status/1775026007508230199

Must be a pretty common conclusion, and I wanted this site to pick an image that reflects their vision. Like flagpoles with all the world's flags (from coordination to ban AI) and EMS uses cryonics (to give people an alternative to medical ASI).

I asked the moderators:

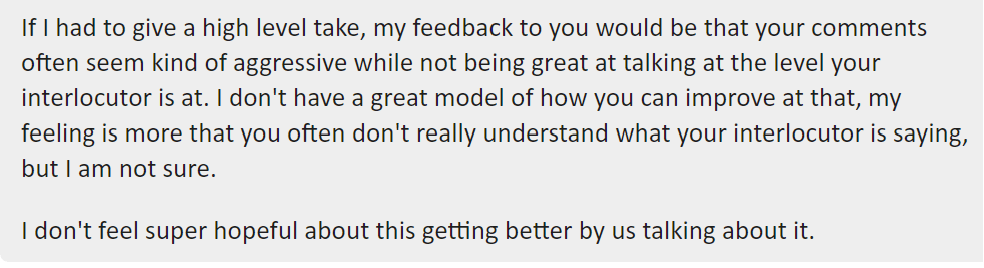

@habryka says:

I skimmed all the comments I made this year and can't find anything that matches this accusation. What comment did this happen on? Did this happen once or twice or 50 times or...? If any users want to help here, it surely must be obvious.

You can look here: https://www.greaterwrong.com/users/gerald-monroe?show=comments if you want to help me find what habryka could possibly be referring to.

I recall this happening once, Gears called me out on it, and I deleted the comment.

Conditional on this not having happened this year, why wasn't I informed or punished or something back then?

Skimming the currently banned user list:

Let's see why everyone else got banned. Maybe I can infer a pattern from it:

Akram Choudhary :

His negative karma doesn't add up to -38, not sure why. AI view is in favor of red teaming, which is always good.

doomer view, good karma (+2.52 karma per comment), hasn't made any comments in 17 days...why rate limit him? Skimming his comments they look nice and meaty and well written...what? All I can see is that over the last couple of months he's not getting many upvotes per comment.

Ok at least I can explain this one. One comment at -41, in the last 20, green_leaf rarely comments. doomer view.

Tries to use humanities knowledge to align AI, apparently the readerbase doesn't like it. Probably won't work, banned for trying.

1.02 karma per comment, a little low, may still be above the bar. Not sure what he did wrong, comments are a bit long?

doomer view, lots of downvotes

Seems to just be running a low vote total. People didn't like a post justifying religion.

Why rate limited? This user seems to be doing actual experiments. Karma seems a little low but I can't find any big downvote comments or posts recently.

Overall Karma isn't bad, 19 upvotes the most recent post. Seems to have a heavily downvoted comment that's the reason for the limit.

@shminux this user has contributed a lot to the site. One comment heavily downvoted, algorithm is last 20.

It certainly feels that way from the receiving end.

2.49 karma per comment, not bad. Cube tries to apply Bayes' rule in several comments, and I see a couple that barely hit -1. I don't have an explanation here.

possibly just karma

One heavily downvoted comment for AI views. I also noticed the same and I also got a lot of downvotes. It's a pretty reasonable view, we know humans can be very misaligned, upgrading humans and trying to control them seems like a superset of the AI alignment problem. Don't think he deserves this rate limit but at least this one is explainable.

Has anyone else experienced anything similar? Has anyone actually received feedback on a specific post or comment by the moderators?

Finally, I skipped several negative overall karma users not mentioned, because the reason is obvious.

Remarks :

I went into this expecting the reason had to do with AI views, because the site owners are very much in the 'doomer' faction. But no, there are plenty of rate limited people in that faction. I apologize for the 'tribalism' but it matters:

https://www.greaterwrong.com/users/nora-belrose Nora Belrose is one of the best posters this site has in terms of actual real world capabilities knowledge. Remember the OAI contributors we see here aren't necessarily specialists in 'make a real system work'. Look at the wall of downvotes.

vs

https://www.greaterwrong.com/users/max-h Max is very worried about AI, but I have seen him write things I think disagree with current mainstream science and engineering. He writes better than everyone banned though.

But no, that doesn't explain it. Another thing I've noticed is that almost all the users are trying. They are trying to use rationality, trying to understand what's been written here, trying to apply Bayes' rule or understand AI. Even some of the users with negative karma are trying, just having more difficulty. And yeah it's a soft ban from the site: I'm seeing that a lot of rate limited users simply never contribute 20 more comments to get out of the sump from one heavily downvoted comment or post.
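To make the "last 20" arithmetic concrete, here is a minimal sketch of why one heavily downvoted comment can take so long to escape. Everything about the rule here is an assumption for illustration: the post only says the algorithm looks at the last 20, so the net-karma-over-the-window test, the threshold of 0, and the function name `comments_until_clear` are hypothetical, not the site's actual implementation.

```python
from collections import deque

WINDOW = 20  # "last 20", as described in the post


def comments_until_clear(window_karma, new_comment_karma, threshold=0, window=WINDOW):
    """Count the new comments needed before the window's net karma exceeds `threshold`.

    ASSUMPTION: the limit lifts once the summed karma of the most recent
    `window` comments is above `threshold`. The real rule is not documented
    in the post. Returns None if the window never clears.
    """
    recent = deque(window_karma[-window:], maxlen=window)
    posted = 0
    while sum(recent) <= threshold:
        if posted >= window:
            # The window now holds only new comments and is still not clear.
            return None
        recent.append(new_comment_karma)  # the oldest comment drops out
        posted += 1
    return posted


# 19 comments at +1 each, followed by one recent comment at -41.
stuck = [1] * 19 + [-41]
print(comments_until_clear(stuck, new_comment_karma=2))
```

Under these assumptions, a user whose most recent comment sits at -41 has to post a full window of new comments before the bad one drops out, which is hard to do while rate limited. If the same -41 comment were the oldest in the window, a single new comment would clear it.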

Finally, what rationality principles justify "let's apply bans to users of our site without any reason or feedback or warning. Let's make up new rules after the fact."

Specifically, every time I have personally been punished, there was no warning. @Raemon first rate limited me by making up a new rule (he could have just messaged me first), then issued a 3 month ban, and gave some reasons I could not substantiate, even after carefully reviewing my comments for the past year. I've been enthusiastic about this site for years now, and I absolutely would have listened to any kind of warnings or feedback. The latest moderator limit is the 3rd time I have been punished, with no reason I can validate given or content cited.

I asked for, in a private email to the moderators, any kind of feedback or specific content I wrote to justify the ban, and was not given it. All I wanted was a few examples of the claimed behavior, something I could learn from.

Is there some reason the usual norms of having rules, not punishing users until after making a new rule, and informing users when they broke a rule and which submission violated it aren't rational? Just asking here: every mainstream site does this, laws do this, so what is the evidence justifying doing it differently?

There's this:

Well-Kept Gardens Die By Pacifism

Any community that really needs to question its moderators, that really seriously has abusive moderators, is probably not worth saving. But this is more accused than realized, so far as I can see.

Does not giving a reason for a decision, or not informing a user or issuing a lesser punishment before going straight to the maximum punishment, make this a community with abusive moderators? I can say that in other online communities, absolutely. Sites have split over one wrongful ban of a popular user.

Thanks for making this post!

One of the reasons why I like rate-limits instead of bans is that it allows people to complain about the rate-limiting and to participate in discussion on their own posts (so seeing a harsh rate-limit of something like "1 comment per 3 days" is not equivalent to a general ban from LessWrong, but should be more interpreted as "please comment primarily on your own posts", though of course it shares many important properties of a ban).

Things that seem most important to bring up in terms of moderation philosophy:

Moderation on LessWrong does not depend on effort

Just because someone is genuinely trying to contribute to LessWrong, does not mean LessWrong is a good place for them. LessWrong has a particular culture, with particular standards and particular interests, and I think many people, even if they are genuinely trying, don't fit well within that culture and those standards.

In making rate-limiting decisions like this I don't pay much attention to whether the user in question is "genuinely trying" to contribute to LW. I am mostly just evaluating the effects I see their actions having on the quality of the discussions happening on the site, and the quality of the ideas they are contributing.

Motivation and goals are of course a relevant component to model, but that mostly pushes in the opposite direction, in that if I have someone who seems to be making great contributions, and I learn they aren't even trying, then that makes me more excited, since there is upside if they do become more motivated in the future.

Signal to Noise ratio is important

Thomas and Elizabeth pointed this out already, but just because someone's comments don't seem actively bad, doesn't mean I don't want to limit their ability to contribute. We do a lot of things on LW to improve the signal to noise ratio of content on the site, and one of those things is to reduce the amount of noise, even if the mean of what we remove looks not actively harmful.

We of course also do other things than to remove some of the lower signal content to improve the signal to noise ratio. Voting does a lot, how we sort the frontpage does a lot, subscriptions and notification systems do a lot. But rate-limiting is also a tool I use for the same purpose.

Old users are owed explanations, new users are (mostly) not

I think if you've been around for a while on LessWrong, and I decide to rate-limit you, then I think it makes sense for me to make some time to argue with you about that, and give you the opportunity to convince me that I am wrong. But if you are new, and haven't invested a lot in the site, then I think I owe you relatively little.

I think in doing the above rate-limits, we did not do enough to give established users the affordance to push back and argue with us about them. I do think most of these users are relatively recent or are users we've been very straightforward with since shortly after they started commenting that we don't think they are breaking even on their contributions to the site (like the OP Gerald Monroe, with whom we had 3 separate conversations over the past few months), and for those I don't think we owe them much of an explanation. LessWrong is a walled garden.

You do not by default have the right to be here, and I don't want to, and cannot, accept the burden of explaining to everyone who wants to be here but who I don't want here, why I am making my decisions. As such a moderation principle that we've been aspiring to for quite a while is to let new users know as early as possible if we think them being on the site is unlikely to work out, so that if you have been around for a while you can feel stable, and also so that you don't invest in something that will end up being taken away from you.

Feedback helps a bit, especially if you are young, but usually doesn't

Maybe there are other people who are much better at giving feedback and helping people grow as commenters, but my personal experience is that giving users feedback, especially the second or third time, rarely tends to substantially improve things.

I think this sucks. I would much rather be in a world where the usual reasons why I think someone isn't positively contributing to LessWrong were of the type that a short conversation could clear up and fix, but it alas does not appear so, and after having spent many hundreds of hours over the years giving people individualized feedback, I don't really think "give people specific and detailed feedback" is a viable moderation strategy, at least more than once or twice per user. I recognize that this can feel unfair on the receiving end, and I also feel sad about it.

I do think the one exception here is if people are young or are non-native English speakers. Do let me know if you are in your teens or you are a non-native English speaker who is still learning the language. People do really get a lot better at communication between the ages of 14-22 and people's English does get substantially better over time, and this helps with all kinds of communication issues.

We consider legibility, but it's only a relatively small input into our moderation decisions

It is valuable and a precious public good to make it easy to know which actions you take will cause you to end up being removed from a space. However, that legibility also comes at great cost, especially in social contexts. Every clear and bright-line rule you outline will have people butting right up against it, and de facto, in my experience, moderation of social spaces like LessWrong is not the kind of thing you can do while being legible in the way that, for example, modern courts aim to be legible.

As such, we don't have laws. If anything we have something like case-law which gets established as individual moderation disputes arise, which we then use as guidelines for future decisions, but also a huge fraction of our moderation decisions are downstream of complicated models we formed about what kind of conversations and interactions work on LessWrong, and what role we want LessWrong to play in the broader world, and those shift and change as new evidence comes in and the world changes.

I do ultimately still try pretty hard to give people guidelines and to draw lines that help people feel secure in their relationship to LessWrong, and I care a lot about this, but at the end of the day I will still make many from-the-outside-arbitrary-seeming-decisions in order to keep LessWrong the precious walled garden that it is.

I try really hard to not build an ideological echo chamber

When making moderation decisions, it's always at the top of my mind whether I am tempted to make a decision one way or another because they disagree with me on some object-level issue. I try pretty hard to not have that affect my decisions, and as a result have what feels to me a subjectively substantially higher standard for rate-limiting or banning people who disagree with me, than for people who agree with me. I think this is reflected in the decisions above.

I do feel comfortable judging people on the methodologies and abstract principles that they seem to use to arrive at their conclusions. LessWrong has a specific epistemology, and I care about protecting that. If you are primarily trying to...

then LW is probably not for you, and I feel fine with that. I feel comfortable reducing the visibility or volume of content on the site that is in conflict with these epistemological principles (of course this list isn't exhaustive; in general the LW sequences are the best pointer towards the epistemological foundations of the site).

If you see me or other LW moderators fail to judge people on epistemological principles but instead see us directly rate-limiting or banning users on the basis of object-level opinions that even if they seem wrong seem to have been arrived at via relatively sane principles, then I do really think you should complain and push back at us. I see my mandate as head of LW to only extend towards enforcing what seems to me the shared epistemological foundation of LW, and to not have the mandate to enforce my own object-level beliefs on the participants of this site.

Now some more comments on the object-level:

I overall feel good about rate-limiting everyone on the above list. I think it will probably make the conversations on the site go better and make more people contribute to the site.

Us doing more extensive rate-limiting is an experiment, and we will see how it goes. As kave said in the other response to this post, the rule that suggested these specific rate-limits does not seem like it has an amazing track record, though I currently endorse it as something that calls things to my attention (among many other heuristics).

Also, if anyone reading this is worried about being rate-limited or banned in the future, feel free to reach out to me or other moderators on Intercom. I am generally happy to give people direct and frank feedback about their contributions to the site, as well as how likely I am to take future moderator actions. Uncertainty is costly, and I think it's worth a lot of my time to help people understand to what degree investing in LessWrong makes sense for them.

Features to benefit people accused of X may benefit mostly people who have been unjustly accused. So looking at the value to the entire category "people accused of X" may be wrong. You should look at the value to the subset that it was meant to protect.