pro tips for commenting on LessWrong:

- If someone makes a dumb comment on your post, do not reply, just strong-downvote and move on. One strong downvote (from the person you reply to) on a few replies can be enough to get you rate-limited, like me. (Maybe people shouldn't be allowed to strong-vote on replies to their comments or posts?)

- Making some comments that get no votes is also bad, because they can push your positive-karma comments out of the recent-comment window, so they're no longer there to cancel out a couple of strong downvotes.

- Don't just say factually correct things unless enough people know them already; you won't get a request for clarification or evidence, just negative agreement, and that leads to negative karma. (Maybe agreement should be displayed without strong-votes by default? That would help a bit.) If you absolutely must, you should post some links to vaguely related stuff, because people will often assume they support whatever you said.

- If you do get rate-limited, you want to hit your rate limit making comments that won't get any votes, to push the negative-karma comments out of the recent comment window.

- Whether trying to follow these rules is worth it depends on how much you care about posting on LessWrong.

This strongly suggests that the rate limit mechanism is creating some extremely bad incentives and dynamics on Less Wrong.

Thanks.

My current frame on "what the bad thing is here?" is less focused on "people are incentivized to do weird/bad things" and more focused on some upstream problems.

I'd say the overall tradeoff with rate limits is that there are two groups I want to distinguish between:

- people writing actively mediocre/bad stuff, where the amount-that-it-clogs-up-the-conversation-space outweighs....

- ...people writing controversial and/or hard to evaluate stuff, which is either in-fact-good, or, where you expect that following a policy of encouraging it is good-on-net even if individual comments are wrong/unproductive.

Rate limiting is useful if the downside of group #1 is large enough to outweigh the upsides of encouraging group #2. I think it's a pretty reasonable argument that the upside from group #2 is really really important, and that if you're getting false positives you really need to prioritize improving the system in some way.

One option is to just accept more mediocre stuff as a tradeoff. Another option is... think more and find third-options that avoid false positives while catching true positives.

I don't think the correct number of false positives for group #2 is zero – I think the cost of group #1 is pretty big. But I do think "1 false positive is too many" is a reasonable position to hold, and IMO even if there's only one it still at least warrants "okay, can we somehow get a more accurate reading here?" (Looking over your recent comment history, I do think I'd probably count you in the "the system probably shouldn't be rate limiting you" bucket.)

Problem 1: unique-downvoter threshold isn't good enough

I think one concrete problem is that the countermeasure against this problem...

One strong downvote (from the person you reply to) on a few replies can be enough to get you rate-limited, like me. (Maybe people shouldn't be allowed to strong-vote on replies to their comments or posts?)

Doesn't currently work that well. We have the "unique downvoter count" requirement to attempt to prevent the "person you're in an argument with singlehandedly vindictively (or even accidentally) rate-limiting you" problem. But after experimenting with it more I think this doesn't carve reality at the joints – people who say more things get more downvoters even if they're net upvoted. So, if you've written a bunch of somewhat upvoted comments, you'll probably have at least some downvoters, and then a single person strong-downvoting you does likely send you over the edge because the unique-downvoter threshold has already been met.

One (maybe too-clunky) option that occurs to me here is to just distinguish between "downvoting because you thought a local comment was overrated" vs "I actually think it'd be good if this user commented less overall." We could make it so that when you downvote someone, an additional UI element pops up for "I think this person should be rate limited", and the minimum threshold is the number of people who specifically thought you should be rate-limited, rather than people who downvoted you for any reason.

Problem 2: technical, hard to evaluate stuff

Sometimes a comment is making a technical point (or some manner of "requires a lot of background knowledge to evaluate" point). You noted a comment where, from your current vantage point, you think you were making a straightforward factually correct claim, and people downvoted out of ignorance.

I think this is a legitimately tricky problem (and would be a problem with karma even if we weren't using it for rate-limiting).

It's a problem because we also have cranks who make technical-looking-points who are in fact confused, and I think the cost of having a bunch of them around drives away people doing "real work." I think this is sort of a cultural problem but the difficulty lives in the territory (i.e. there's not a simple cultural or programmatic change I can think of to improve the status quo, but I'm interested if people have ideas).

Complaining about getting rate-limited made me no longer rate-limited, so I guess it's a self-correcting system...???

two groups I want to distinguish between

I agree that some tradeoff here is inevitable.

think more and find third-options that avoid false positives while catching true positives

I think that's possible.

I don't think the recent comment window was well-designed. If you're going to use a window, IMO a vote-count window would be better, eg: look backwards until you hit 400 cumulative karma votes, with some exponential downweighting.

I also think the strong votes are weighted too heavily. Holding a button a little longer doesn't mean somebody's opinion should be counted as 6+ times as important, IMO. Maybe normal votes should be weighted at 1/2 whatever a strong vote is worth.
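A rough Python sketch of the two suggestions above, assuming votes arrive as a newest-first list and that "400 cumulative karma votes" means a cap on the number of votes counted. The exponential half-life (100 votes) is a placeholder of my own, and `Vote` and `windowed_score` are illustrative names, not anything from the site's codebase.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Vote:
    direction: int  # +1 for an upvote, -1 for a downvote
    strong: bool    # True if it was a strong vote


def windowed_score(votes_newest_first, max_votes=400, half_life=100.0,
                   strong_weight=1.0, normal_weight=0.5):
    """Weighted vote total over (at most) the most recent `max_votes` votes.

    A vote's weight halves every `half_life` votes further back, and a normal vote
    counts normal_weight / strong_weight (1/2 by default) as much as a strong one.
    """
    decay = math.log(2) / half_life
    score = 0.0
    for age, vote in enumerate(votes_newest_first[:max_votes]):
        weight = strong_weight if vote.strong else normal_weight
        score += vote.direction * weight * math.exp(-decay * age)
    return score


# One fresh strong downvote followed by six ordinary upvotes (newest first).
recent_votes = [Vote(-1, True)] + [Vote(+1, False)] * 6
print(round(windowed_score(recent_votes), 2))  # 1.93
```

Because the window is measured in votes rather than comments, a run of unvoted comments doesn't push anything out of it, which removes the incentive to "pad" the window with neutral comments.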

when you downvote someone, an additional UI element pops up

I don't think that's a good idea.

It's a problem because we also have cranks who make technical-looking-points who are in fact confused, and I think the cost of having a bunch of them around drives away people

If you find a solution, maybe let some universities know about it...or some CEOs...or some politicians...

I don't think that's a good idea.

Why? (I'm not very attached to the idea, but, what are you imagining going wrong?)

- It seems annoying.

- I don't think people will use it objectively.

- People won't generally go through the history of the user in question; they won't have the context needed to distinguish the cases you're asking them to.

you won't get a request for clarification or evidence, just negative agreement, and that leads to negative karma.

Are you sure this is true? This post says:

Agree/disagree voting does not translate into a user's or post's karma — its sole function is to communicate agreement/disagreement. It has no other direct effects on the site or content visibility (i.e. no effect on sorting algorithms).

Mods, has this been changed?

Incentive to post as many neutral comments as permitted by the rate limit, to get it lifted faster. Not sure what to do about this, but might be an issue.

Yeah I did notice this. I think basically if it turns out to be a problem we'll figure out a more robust solution but not worry about it before then.

(We do have the power to also just give out arbitrary rate limits based on moderator discretion anyway, and if it looks like someone is just trying to game the system we will probably just give them a more severe rate limit. So, uh, aspiring rate limit munchkins, please do not get too excited about being clever here)

That makes sense to me. A lot of theoretical problems never actually materialize. I think it's unlikely that it becomes a problem and if it did it wouldn't be severe.

Just wanted to register my complaint that I find it somewhat annoying to be rate limited, and somewhat surprising this wasn't targeted more specifically at new users, which was the impression I got from earlier posts. Also I would have commented earlier, but each time I thought to do it was when I was rate limited, lol.

Overall this seems like a great general method. I have some critical comments below on the exact implementation, but I want to emphasize upfront that I expect minimal problems in reality, given that, as you mention, LessWrong users are generally both gentle and accurate with downvotes. It is probably not worth making the system more complicated until you see problems in practice.

I don't think I like the -1/-5/-15 recent karma distinction. Consider someone who has reasonable positive reception to their comments, albeit on lower popularity posts, say an average of +2/comment. They then make a negatively received comment on a popular topical thread; e.g. say they take an unpopular side in a breaking controversy, or even just say something outright rude.

If they make two such comments, their recent karma (last 20 comments) reaches -1 once those two comments average about -19 karma each (the other 18 comments contribute 18 × 2 = +36, so the pair needs to total about -37). It reaches -5 at an average of about -21. This distinction seems pretty arbitrary, and the cool-off scale doesn't seem to map to anything.

One solution might be to smooth these numbers out to emphasize modal behavior, like summing the signed square roots of each comment's karma instead (and rescaling the thresholds accordingly).
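A quick Python check of the arithmetic above, plus one reading of the "sum of roots" idea: summing sign(k)·√|k| over each comment's karma k. That interpretation is an assumption, and the function names are illustrative.

```python
import math


def linear_total(karmas):
    """Plain sum of per-comment karma, i.e. how "recent karma" is described in the post."""
    return sum(karmas)


def signed_root_total(karmas):
    """Sum of sign(k) * sqrt(|k|): one reading of "sum of roots".

    Large individual scores (positive or negative) are compressed, so a couple of
    heavily downvoted comments weigh less relative to the user's typical comments.
    """
    return sum(math.copysign(math.sqrt(abs(k)), k) for k in karmas)


window = [2] * 18 + [-19, -19]              # 18 typical comments at +2, then two at -19
print(linear_total(window))                 # -2, past the -1 threshold
print(round(signed_root_total(window), 2))  # 16.74
```

Under the linear sum, two bad comments in one hot thread outweigh 18 decent ones; under the root-based sum the same history stays clearly positive. The thresholds would need to be rescaled to the new units.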

Similarly, let's say a user makes most of their posts at a rate of 2/day, again well received on average. They then have a deep conversation in a single thread over the course of a few days, pushing out most of the last 20 posts. Does it make sense to limit their other comments if this one conversation was poorly reviewed?

One possibility I see is for time aggregation to come into play a bit earlier.

I'm curious if these rate limits were introduced as a consequence of some recent developments. Has the website been having more problems with spam and low-quality content lately, or has the marginal benefit of making these changes gone up in some other way?

It could also be that you had this idea only recently and in retrospect it had been a good idea for a long time, of course.

I first thought about them ~8 months ago, simply because we spend ~20 minutes a day reviewing content from new or downvoted users, and it's a combination of "adds up to a lot of time" and "also kind of emotionally exhausting to think about exactly where the line is where we should take some kind of action."

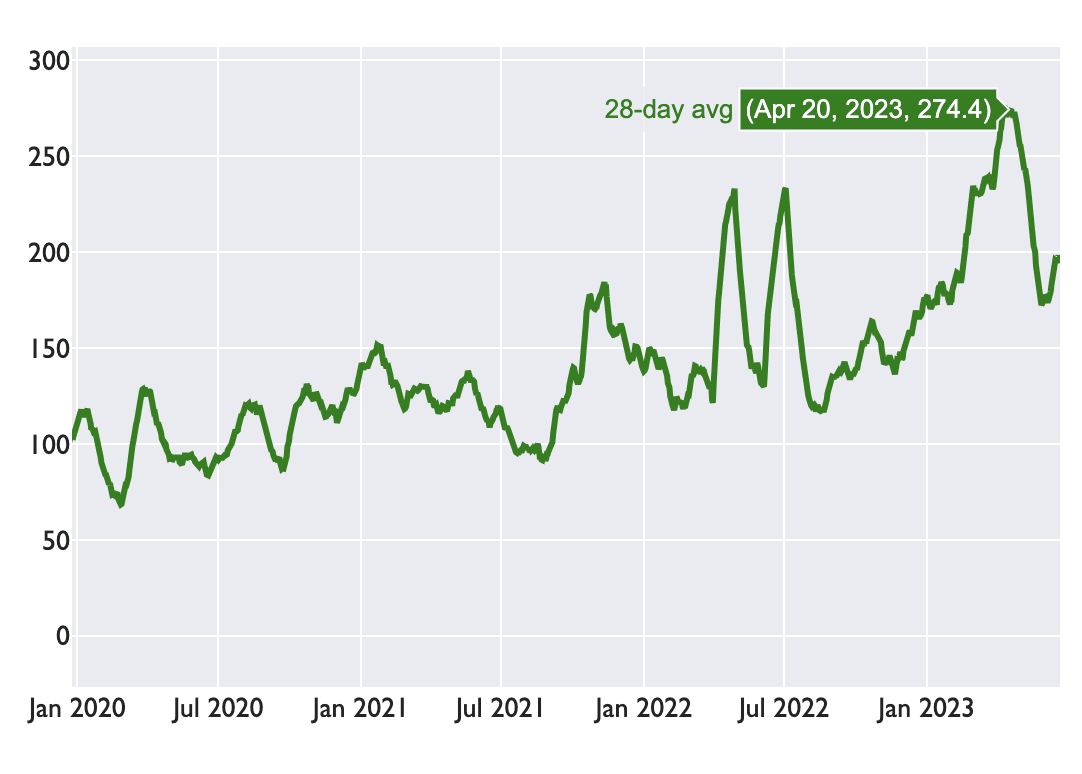

The idea of auto-rate-limits felt a lot more salient during the April spike, where a lot of people showed up due to the Eliezer TIME article and other "AI in the news" things. That has since calmed down, but I think we'll get more things like that in the future and it seemed good to be ahead of it (and meanwhile the base-level background costs of "figure out when/how to intervene on users posting a lot of mediocre content" still felt sufficient to justify it IMO)

number of comments per day:

- 1 comment / hour

- i.e. slow down a little, you may be getting into a heated exchange.

I just got screwed over by this. It strikes me as insane to be rate-limited to "slow down" and not get into a "heated exchange" when the limit is set by checking the user's karma for the last 20 comments from any time period. 20 comments from me go back months, I can't get all that much slower. And 3 downvoters and -1 is an awfully small limit, and easy to hit just out of bad luck.

Also, if you post from greaterwrong, and you hit the limit, your comment and the effort spent writing it is just lost.

What real problem does this solve? I have not noticed LW being overrun with prolific but mediocre posters.

The thresholds seem far too low. For example:

-5 recent karma (4+ downvoters) 1 comment per day

Bring three friends on a drive-by shoot.

-15 recent karma (4+ downvoters) 1 comment per 3 days

Didn't learn their lesson, eh? Hit them again.

-30 last month karma (5+ downvoters) 1 comment per week

Invite all your friends to the dogpile. Repeat if they have the temerity to be wrong on LessWrong again.

I am now more reluctant to strong-downvote anything but the most egregious crackpottery, trolling, and spam.

I'm generally disincentivized from posting, or from putting effort into a post, by a system where someone can just heavily downvote my post without even giving a reason.

Hi Raemon. I have noticed I now seem to have unrestricted comment privileges. I assume it's a combination of "recency" and "last n" that have made my account no longer satisfy rate limiting rules.

I assume the algorithm you are using is stateless, so even a user who gets the most severe restriction could theoretically just wait or give good comments at the restricted rate long enough to be unrestricted.

I think, if you implemented it this way, this is pretty good. Other message boards have this concept of "strikes" or "warnings" or "users the mods don't like". That approach is stateful, meaning that on very old boards (10-20+ years) a substantial fraction of all users are eventually banned: if a user makes 10k posts and any post can draw a warning, eventually they will accumulate enough warnings to receive a ban.

(Similarly if humans lived forever, eventually all would become convicted felons with the current justice system because it takes only 1 bad moment or an error that convicts an innocent to gain that state, and it can never be erased)

I think you've interpreted it right, yeah. (I'd clarify: recent karma is "karma of your last 20 posts/and/or/comments, sorted by recency", so "waiting" won't cause that to fade. But it caps out at 1-comment-per-3 days, so you can fix it by writing good comments in a reasonable amount of time)

Re: "strikes"

A thing that I might consider implementing (but haven't currently), is some sort of memory wherein if you get rate limited once, in the future the system will rate limit you faster or more strictly if you started getting downvoted heavily again (but, not in a way that would escalate to a ban).

One problem with your stated algorithm is what happened in my case. I had conversations via pm and mentioned to a few posters here my situation. More than once someone went on an upvote spree, adding points to posts going back years and reducing the level of downvotes on some of the controversial comments I made.

Your algorithm applies a "strike" to what can be a temporary spike. With your stateless implementation, a user could become throttled, the other users could disagree and the user could become unthrottled. The existence of "strong" + - votes makes this possible as an adversarial attack.

You could recalculate the strikes, in effect "pardoning" a user, but I wouldn't want to maintain the code that does that.

I had conversations via pm and mentioned to a few posters here my situation. More than once someone went on an upvote spree, adding points to posts going back years and reducing the level of downvotes on some of the controversial comments I made.

I do want to flag – I think this may basically be voter fraud (and the sort of thing that we might ban people for)

It's fine/normal to see some recent comments that you think were unfairly downvoted and go upvote them. It's kinda borderline-fine to say "hey, I think some of my recent comments were downvoted and I think you'll think those comments were good/fine, could you take a look and upvote them if you think they're good?". But, having someone systematically go upvote lots of past stuff is over the line of voter fraud.

A simple way to improve this system would be to require someone to comment/give a reason when heavily upvoting/heavily downvoting things.

If you're coming from the Rest Of The Internet, you may be surprised by hard far LessWrong takes this.

I believe this should say "surprised by how far"

Last month's karma: The karma the user received for their last 20 comments/posts within the last month (i.e. a subset of the "recent karma.")

It's unclear whether this means karma votes within the last month or comments/posts within the last month. I think it would be good to clarify the wording.

The LessWrong team recently began rolling out automatic rate limits. The general idea is if a user gets heavily downvoted, the site automatically restricts their posting and commenting privileges. (See Well-Kept Gardens Die By Pacifism for some background on LessWrong's overall moderation philosophy)

After thinking awhile about various side-effects (see previous discussion), I designed some rate limits based on the following user metadata:

The exact karma thresholds and rate-limit-strengths chosen are based on the LW team's experience with daily moderation maintenance. Every day, moderators look over ~20 posts and comments from new-ish users. We've built up some intuitions for what user-karma and comment/post-karma tends to correspond with stuff we want to see more of on LessWrong. And for the past month or so I've been checking how these four metrics apply to the current distribution of LW users.

The karma system isn't perfect. Sometimes you'll get randomly downvoted by someone for idiosyncratic or petty reasons. Sometimes it's hard for newer users' content to get noticed and upvoted. But people tend to be pretty hesitant to downvote stuff – most comments just don't get voted on. Downvoted content from new users tends to be some combination of poorly argued, difficult to read, rude-without-actually-saying-much, or rehashing topics that have been discussed extensively on LessWrong without introducing new considerations.

I suspect we'll iterate and fine-tune these rules over time.

Later in this post I have advice on writing posts/comments that tend to be well received on LessWrong. If you've been downvoted and/or rate-limited, don't take it too hard. LessWrong has fairly particular standards. My recommendation is to read some of the advice at the end here and try again.

Current AutoRateLimits (as of Jan 25 2025)

We'll probably experiment with these a bit. The exact implementation here involves a lot of different numbers, but here's a rough overview of the current rate limit philosophy:

Stronger rate limits require more unique downvoters, so that a single angry voter or small clique can't have too large an impact.

The hope is for users to mostly show up, get recognized if they write good content, and then get full posting permissions. If a user is getting net-downvoted, then when they go to post they'll get a message looking something like:

Default Rate Limits

Users whose total karma is < 5 are limited to 3 comments a day, and 2 posts per week.

The hope here is that these numbers are: a) high enough that most users can get started commenting/posting without having to deal with a rate limit, and b) low enough that a user writing low-quality content can't go on too big a commenting spree, before having to slow down a bit and learn some site norms.

The default commenting rate limits apply to writing comments on your own posts.

Negative Karma Rate Limits

If a user has -1 or less total karma, they can only write one comment per day and one post every two weeks.

This doesn't require multiple downvoters, since new users with negative karma are more likely to be spam, or fairly confused about LessWrong. I do think this will occasionally be unfair to new users who were posting in good faith (and I am sorry about that!).

My intention here is for getting a negative-karma rate limit to be like getting caught in a spam filter – it unfortunately happens sometimes, but the consequences aren't too bad. You'll be able to try again the next day.

Negative karma rate limits also apply to writing comments on your own posts.
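The default and negative-karma limits can be sketched in Python as a lookup on total karma plus a counter over recent activity. This is an illustration under assumptions, not the site's implementation: `base_limits` and `can_act` are hypothetical names, and treating "a day" as a rolling 24-hour window (rather than, say, a calendar day) is a guess. Since the post says both limits also apply to comments on your own posts, the sketch doesn't special-case them.

```python
from datetime import datetime, timedelta


def base_limits(total_karma):
    """(max comments, comment period, max posts, post period), or None if unlimited.

    Numbers from the post: total karma <= -1 -> 1 comment/day and 1 post per two weeks;
    total karma < 5 -> 3 comments/day and 2 posts/week.
    """
    if total_karma <= -1:
        return 1, timedelta(days=1), 1, timedelta(weeks=2)
    if total_karma < 5:
        return 3, timedelta(days=1), 2, timedelta(weeks=1)
    return None


def can_act(previous_times, now, max_count, period):
    """Rolling-window check: allowed if fewer than `max_count` actions in the last `period`."""
    return sum(1 for t in previous_times if now - t < period) < max_count


now = datetime(2024, 1, 1, 12, 0)
max_comments, comment_period, _, _ = base_limits(total_karma=2)
earlier = [now - timedelta(hours=h) for h in (1, 5, 9)]  # three comments in the last 24 hours
print(can_act(earlier, now, max_comments, comment_period))      # False
print(can_act(earlier[:2], now, max_comments, comment_period))  # True
```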

Recent Karma Rate Limits

We have an escalating series of rate limits based on recent karma. (Here "recent karma" means "karma from your last 20 comments/and/or/posts", and "last month karma" is the subset of recent karma on comments/posts from the past month)

[Edit: we've updated the escalating rate limits to only apply to users with negative karma. We basically only want them to apply in situations where a moderator would ordinarily have wanted to give the user a temporary ban, and it seems important to avoid false positives here. We don't currently have a good enough downvote metric to avoid false positives. So instead, we're now using the escalating rate limits basically as a way to phase out users who the site is clearly "collectively judging" as net negative, while still giving them the ability to try again in a month.

This explanation kinda sucks, I will hopefully work on a clearer explanation]
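A minimal Python sketch of how recent karma, last-month karma, and the escalating comment limits might fit together. The thresholds are the ones quoted in the comments on this post (-1 with 3 downvoters, -5 with 4, -15 with 4, and -30 last-month karma with 5) and may not match the current implementation. Requiring negative total karma reflects my reading of the edit note; counting unique downvoters within each window, using `<=` comparisons, and treating "the past month" as 30 days are all assumptions. `Item` and `escalating_comment_limit` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Item:
    posted_at: datetime
    karma: int
    downvoters: frozenset  # unique users who downvoted this comment/post


# (window, karma threshold, min unique downvoters, comments allowed, per period),
# strictest first, using the numbers quoted in the comments on this post.
ESCALATING_COMMENT_LIMITS = [
    ("last_month", -30, 5, 1, timedelta(weeks=1)),
    ("recent", -15, 4, 1, timedelta(days=3)),
    ("recent", -5, 4, 1, timedelta(days=1)),
    ("recent", -1, 3, 1, timedelta(hours=1)),
]


def escalating_comment_limit(items, total_karma, now):
    """Return (comments allowed, period) for the strictest matching tier, or None."""
    if total_karma >= 0:  # edit note: escalating limits only apply to negative-karma users
        return None
    recent = sorted(items, key=lambda i: i.posted_at, reverse=True)[:20]
    windows = {
        "recent": recent,  # last 20 comments/posts, regardless of age
        "last_month": [i for i in recent if now - i.posted_at <= timedelta(days=30)],
    }
    for name, threshold, min_downvoters, allowed, period in ESCALATING_COMMENT_LIMITS:
        window = windows[name]
        karma = sum(i.karma for i in window)
        downvoters = set().union(*(i.downvoters for i in window))
        if karma <= threshold and len(downvoters) >= min_downvoters:
            return allowed, period
    return None


now = datetime(2024, 1, 31)
# 20 comments, one per day, each at -1 karma, downvoted by 4 different people in rotation.
items = [Item(now - timedelta(days=d), -1, frozenset({f"user{d % 4}"})) for d in range(20)]
print(escalating_comment_limit(items, total_karma=-3, now=now))  # (1, datetime.timedelta(days=3))
print(escalating_comment_limit(items, total_karma=10, now=now))  # None
```

Note that "recent karma" here is a function of the last 20 items only, so (as discussed in the comments) waiting doesn't change it; only new, better-received comments push the old ones out.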

Post Rate Limits

Note: these only apply to publishing posts. You can still create and work on drafts.

Comment Rate Limits

Commenting rate limits felt a bit trickier than post rate limits.

People typically don't publish posts very often, and the posts either get upvoted or downvoted and people move on with their day. Comments are part of a conversation. Sometimes, it's really important for people to be able to write a ton of comments back-and-forth without anyone bothering to upvote each other. Sometimes, posting a ton of comments is annoying and spammy.

These rate limits attempt to navigate that balance.

Most of these do not affect commenting on your own posts.

You can read the specific implementation of the rate limits in this file.

Last Post Karma

We also have some post-specific rate limits based on post karma:

Q&A

I got downvoted. How do I write stuff that won't be downvoted?

My recommendations here are pretty similar to Ruby's recommendations in the New User's Guide to LessWrong.

What gets upvoted and downvoted depends on a lot of factors. A post/comment might be really strong in some areas and weak in other areas. There are maybe two threads of advice I'd want to give:

The first one is in some sense simple: write content that is novel, correct and useful. There are a lot of paths towards this. It's a pretty complex problem though, beyond the scope of this post. Here are two essays by Paul Graham, here's Sarah Constantin on writing Fact Posts, and here's Nonfiction Writing Advice by Scott Alexander.

The rest of this will focus on "avoiding pitfalls".

Focus on exploring "what predictions are you making and why?", as opposed to trying to win a fight. If you're coming from the Rest Of The Internet, you may be surprised by how far LessWrong takes this. There's a genre of comments that seem to be out to make someone look dumb or ridiculous. I recommend a focus on criticizing ideas, on the object-level, rather than trying to raise or lower someone's status.

I recommend reading the LessWrong Political Prerequisites sequence to get more context.

Write a clear introduction and make sure it's nicely formatted (with appropriate paragraph breaks). If you're writing a post or pretty long comment, make sure it's easy to read and understand the point you're making. Err on the side of writing something shorter rather than longer if you can.

Be familiar with rationality basics like "beliefs are probabilistic and should control anticipations." Get curious about where you might be wrong, avoid arguing over definitions, etc. Read through the Sequences Highlights.

Be easy to engage/argue with. If you disagree with a post author, try to state your reasoning and what would change your mind. Make concrete predictions.

Aim for a high standard if you're contributing on the topic of AI. As AI becomes higher and higher profile in the world, many more people are flowing to LessWrong because we have discussion of it. In order to not lose what makes our site uniquely capable of making good intellectual progress, we have particularly high standards for new users showing up to talk about AI. If you find yourself getting downvoted, I recommend shifting your stance towards "ask questions in the latest AI All Questions Open Thread".

I think I'm just getting downvoted because I disagree with LessWrong consensus. How is that fair? Don't you want to avoid becoming closed-minded?

If this was happening a lot, I'd be quite worried (not just about autoRateLimits but about LessWrong as a whole).

I do think this happens a nonzero amount. But I don't think it's usually quite what's going on.

The LessWrong community does have some biases. But the way I think those biases tend to play out depends on some specifics. I think comments tend to fall into this grid:

(Usually somewhat upvoted, or heavily upvoted when they're funny or particularly emotionally resonant)

(Usually pretty upvoted)

(Usually somewhat downvoted, or heavily downvoted if they're rude)

(Usually heavily upvoted)

(There are, of course, some medium-quality contrarian posts that tend to get somewhat upvoted.)

I think a lot of people on LessWrong are actively into well-written critiques of "the LessWrong consensus." (For a long time Holden's Thoughts on the Singularity Institute (SI) was the most upvoted post, and Paul's Where I agree and disagree with Eliezer is sort of a spiritual successor).

My actual sense, from looking at upvote/downvote patterns, is that people complaining about getting downvoted do in fact tend to write posts that are some combination of poorly argued and retreading ground that's been discussed a bunch on LessWrong without adding new arguments (often failing to engage with the standard rebuttals and positions).

I think these are basically correct things to get downvoted and deprioritized.

The question of "is it fair/reasonable for low-quality consensus comments to get upvoted?" is a fair one. I basically trust the LessWrong karma system to distinguish "stuff there should be less of" and "stuff that's at least plausibly good". I think reasonable people can disagree on whether LessWrong has good taste in what gets significantly upvoted.

Hope that helps give some context on rate limits and how they fit into LessWrong. As noted, we'll likely iterate on this over time.

I think it's also worth reading the New User's Guide to LessWrong, and the Sequences Highlights to get a good sense of what LessWrong is about, and what background knowledge it's built on.