On the relevance for QURI:

QURI is focused on ways of advancing forecasting and evaluation for altruistic purposes. One of the largest challenges we’ve come across is how to organize information at scale in ways that can be neatly predicted or evaluated. AI Safety Papers is in part a test of how easy it is to build custom web front ends that help organize large amounts of tabular data, and of how usable they are. Besides (hopefully) being directly useful, applications like AI Safety Papers might later serve to assist with forecasting or evaluation. This could be aided by things like per-paper comment threads, integrations with forecasting functionality (making forecasts, viewing them, or both), and integrations with evaluations (writing, viewing, or both).

One possible future project might be something like:

1. We come up with a rubric for a subset of papers (say, “Quality”, “Novelty”, and “Importance”).

2. We find some set of respected researchers to rate a select subset of papers.

3. We have a separate subset of forecasters (more junior researchers) attempt to make predictions on what the group in step (2) would say, on every paper.

4. The resulting estimates from (3) could be made available on the website.

5. Every so often, some papers would be randomly selected to be evaluated by the respected team. After this is done, the predictors who best predicted these results would be appropriately rewarded.
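To make the scoring step more concrete, here is a minimal Python sketch of how randomly sampled expert ratings could be used to rank forecasters. Everything in it is an assumption for illustration: the rubric field names, the data layout, and the choice of mean squared error as the scoring rule are hypothetical, not something we have built or settled on.

```python
import random
from statistics import mean

# Hypothetical rubric fields, mirroring the example rubric in the list above.
RUBRIC = ("quality", "novelty", "importance")


def sample_papers_for_review(paper_ids, k, seed=None):
    """Randomly pick k papers to send to the expert panel."""
    rng = random.Random(seed)
    return rng.sample(sorted(paper_ids), k)


def forecaster_error(forecasts, expert_ratings):
    """Mean squared error of one forecaster over the expert-rated papers.

    Both arguments map paper_id -> {criterion: score}.
    Papers the forecaster skipped are ignored; a real scheme would
    probably need an explicit rule for missing forecasts.
    """
    errors = [
        (forecasts[pid][criterion] - expert_ratings[pid][criterion]) ** 2
        for pid in expert_ratings
        if pid in forecasts
        for criterion in RUBRIC
    ]
    return mean(errors) if errors else float("inf")


def rank_forecasters(all_forecasts, expert_ratings):
    """Return forecaster names sorted from lowest (best) to highest error."""
    return sorted(
        all_forecasts,
        key=lambda name: forecaster_error(all_forecasts[name], expert_ratings),
    )


# Made-up example data: one paper was sampled and rated by the experts.
example_expert_ratings = {
    "paper-a": {"quality": 7, "novelty": 5, "importance": 8},
}
example_forecasts = {
    "alice": {"paper-a": {"quality": 6, "novelty": 5, "importance": 8}},
    "bob": {"paper-a": {"quality": 3, "novelty": 9, "importance": 5}},
}
print(rank_forecasters(example_forecasts, example_expert_ratings))
```

Squared error is used here only because it is simple; a proper scoring rule over probability distributions would likely be a better fit if forecasters submit full distributions rather than point estimates.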

This would be a setup similar to what has been discussed here.

If anyone reading this has suggestions or thoughts on such sorts of arrangements, please do leave comments.

Planned summary for the Alignment Newsletter:

AI Safety Papers is an app to interactively explore a previously collected <@database of AI safety work@>(@TAI Safety Bibliographic Database@). I believe it contains every article in this newsletter (at least up to a certain date; it doesn’t automatically update) along with their summaries, so you may prefer to use that to search past issues of the newsletter instead of the [spreadsheet I maintain](https://docs.google.com/spreadsheets/d/1PwWbWZ6FPqAgZWOoOcXM8N_tUCuxpEyMbN1NYYC02aM/edit#gid=0).

Note: I've moved this to the Alignment Forum.

Requests, if they are easy:

- In the full table view, it would be nice if "Shah blurb" could be changed to e.g. "Alignment Newsletter blurb", since some of those summaries were not written by me.

- It would be nice to include a link to the relevant newsletter at the beginning of these blurbs (the links are available in the public spreadsheet I maintain), so that people could read opinions and / or get other context (some of the summaries talk about "the previous summary" which only makes sense in the context of the full newsletter).

Incidentally, the code which parses your custom markdown syntax is here: https://github.com/QURIresearch/ai-safety-papers/blob/master/lib/getProps/processAlignmentNewsletterBlurb.js, in case you ever need to do something similar.
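For anyone who wants the gist without opening the file, here is a minimal Python sketch of handling the `<@text@>(@title@)` form that appears in the planned summary above. It is not a port of the linked JavaScript and may not match what that code does; the function name and the `url_by_title` lookup table are hypothetical, and the sketch covers only this one form of the syntax.

```python
import re

# Matches <@link text@>(@Referenced title@), the form seen in the summary above.
AN_LINK = re.compile(r"<@(.+?)@>\(@(.+?)@\)", re.DOTALL)


def render_blurb(blurb, url_by_title):
    """Turn newsletter-style references into ordinary markdown links.

    url_by_title is a hypothetical dict mapping a referenced title to a URL.
    If a title is not in the dict, the link text is kept as plain text.
    """
    def replace(match):
        text, title = match.group(1), match.group(2)
        url = url_by_title.get(title)
        return f"[{text}]({url})" if url else text

    return AN_LINK.sub(replace, blurb)


# Placeholder URL, used only for illustration.
lookup = {"TAI Safety Bibliographic Database": "https://example.org/tai-database"}
print(render_blurb(
    "an app to explore a <@database of AI safety work@>(@TAI Safety Bibliographic Database@).",
    lookup,
))
```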

Thanks!

On those changes:

1. I can change this easily enough. I wasn't paying much attention to the full table view titles (these were originally intended primarily for the code), but good point here.

2. I agree. I thought about this and will consider adding it. This will require some database additions, but is obviously doable.

My user experience

When I first load the page, I am greeted by an empty space.

From here I didn't know what to look for, since I didn't remember what kind of things were in the database.

I tried clicking on the table view to see what content is there.

Ok, too much information, hard to navigate.

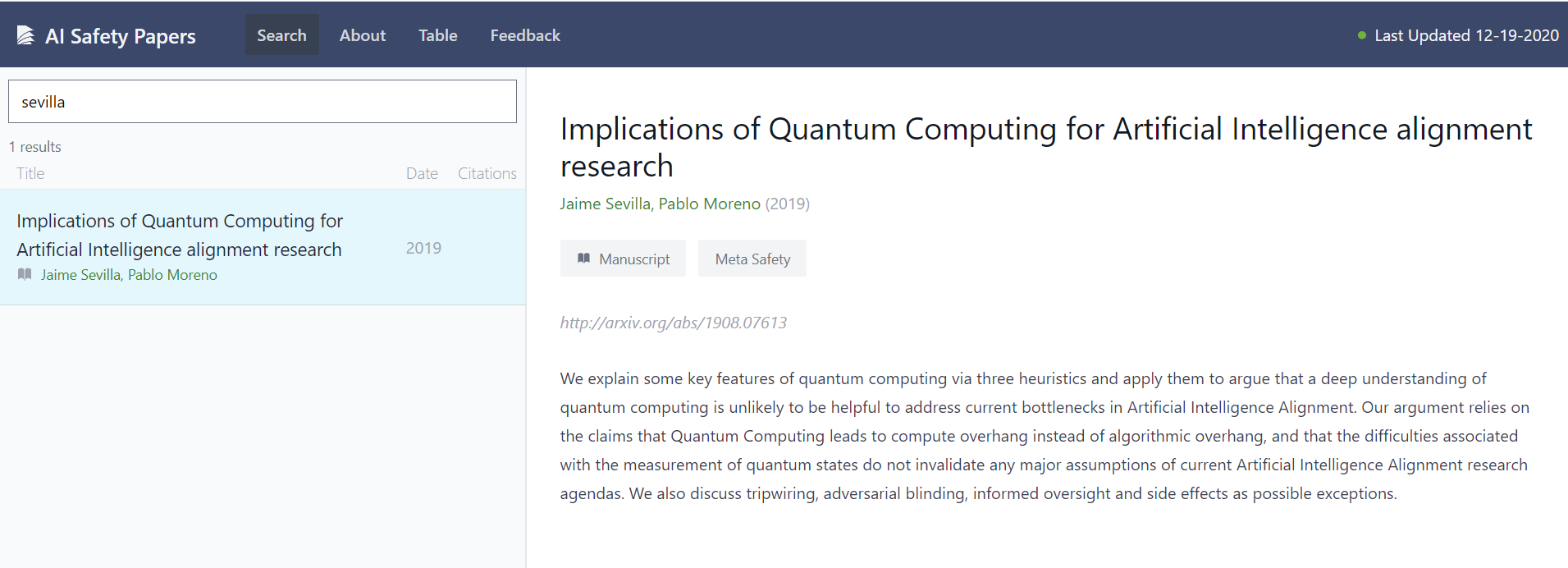

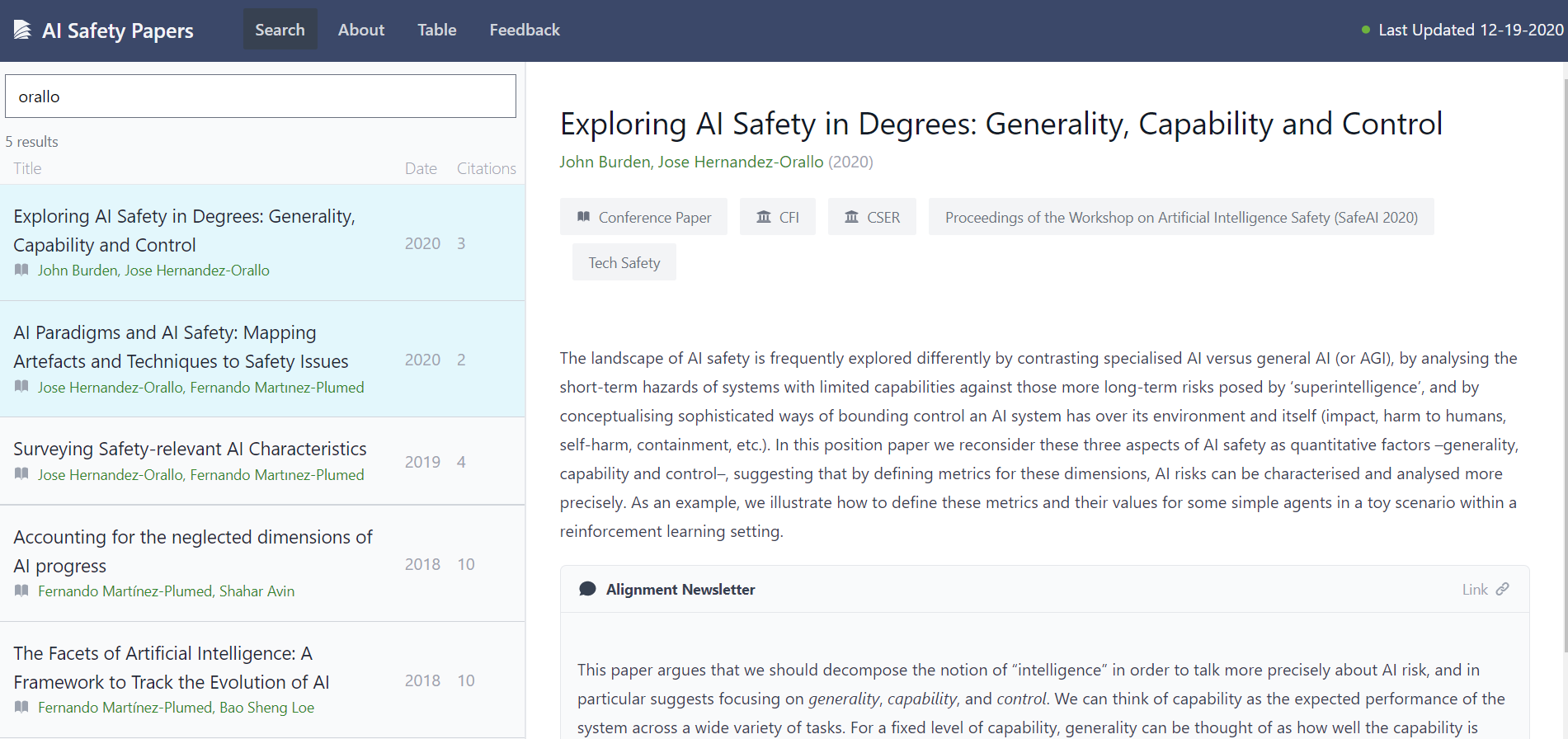

I remember that one of my manuscripts made it to the database, so I look up my surname.

That was easy! (and it loaded very fast)

The interface is very neat too. I want to see more papers, so I click on one of the tags.

I get what I wanted.

Now I want to find a list of all the tags. Hmmm I cannot find this anywhere.

I give up and look at another paper:

Oh cool! The Alignment Newsletter summary is really great. Whenever I read something in Google Scholar it is really hard to find commentary on any particular piece.

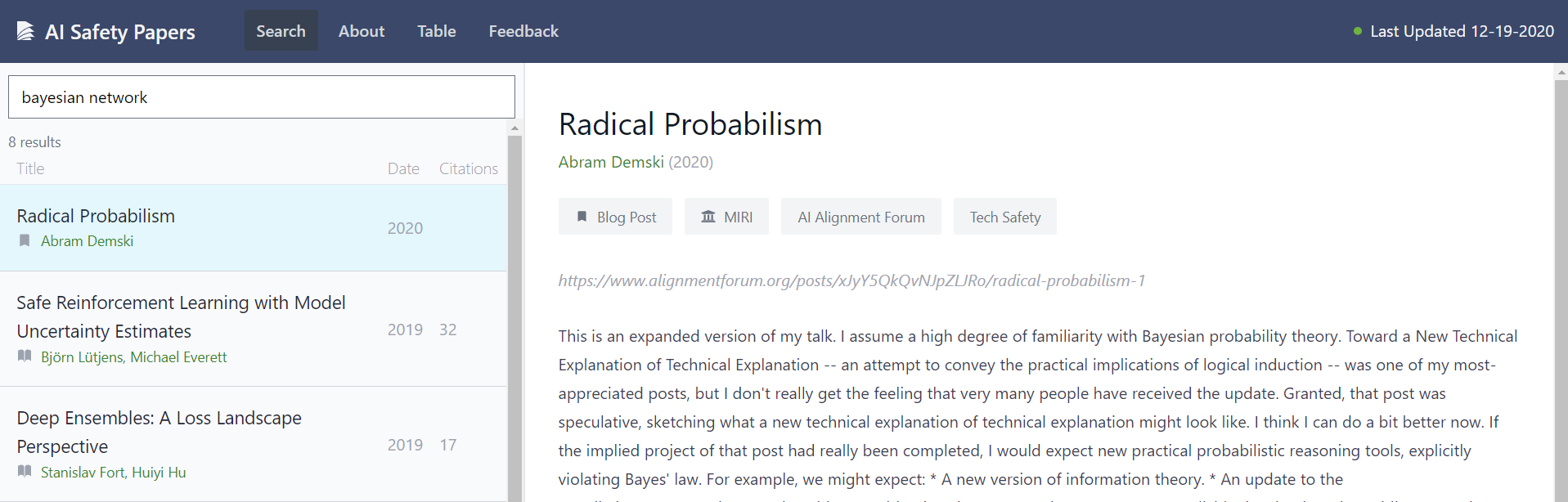

Next I tried looking for my current topic of research to find related work.

Meh, not really anything interesting for my research.

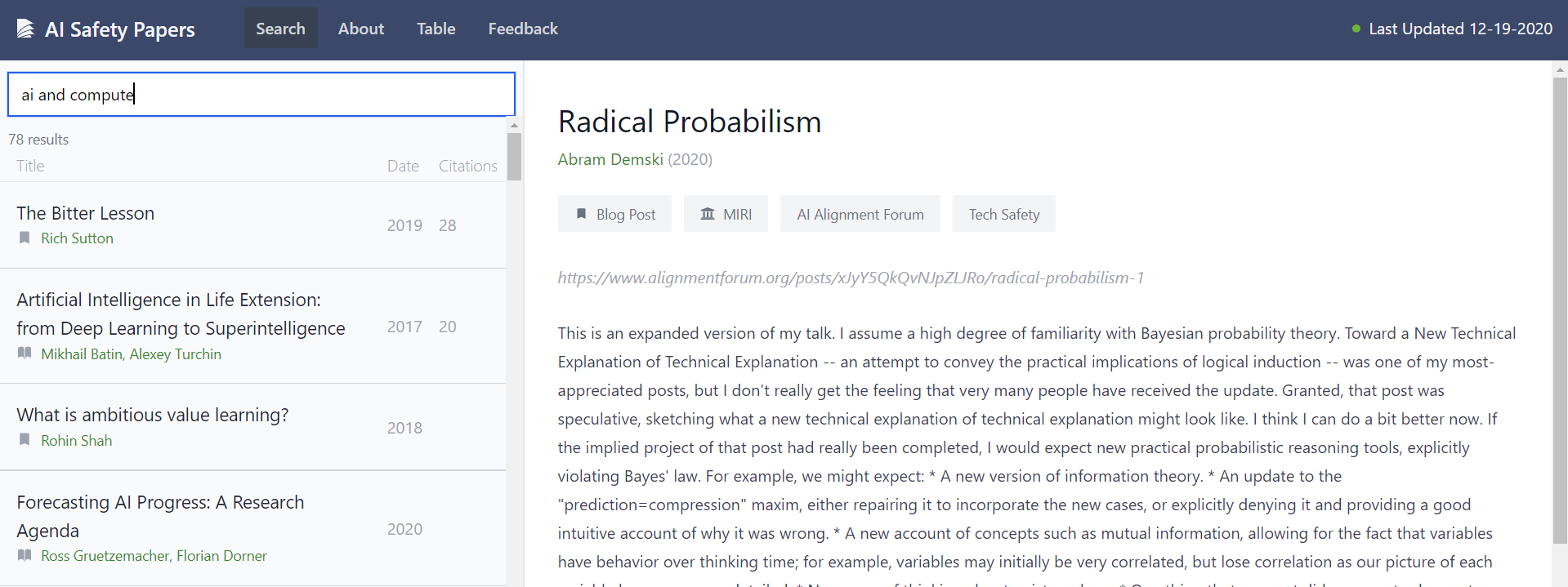

Ok, now I want to see if OpenAI's "AI and compute" post is in the dataset:

Huhhh it is not here. The bitter lesson is definitely relevant, but I am not sure about the other articles.

Can I search for work specific to open ai?

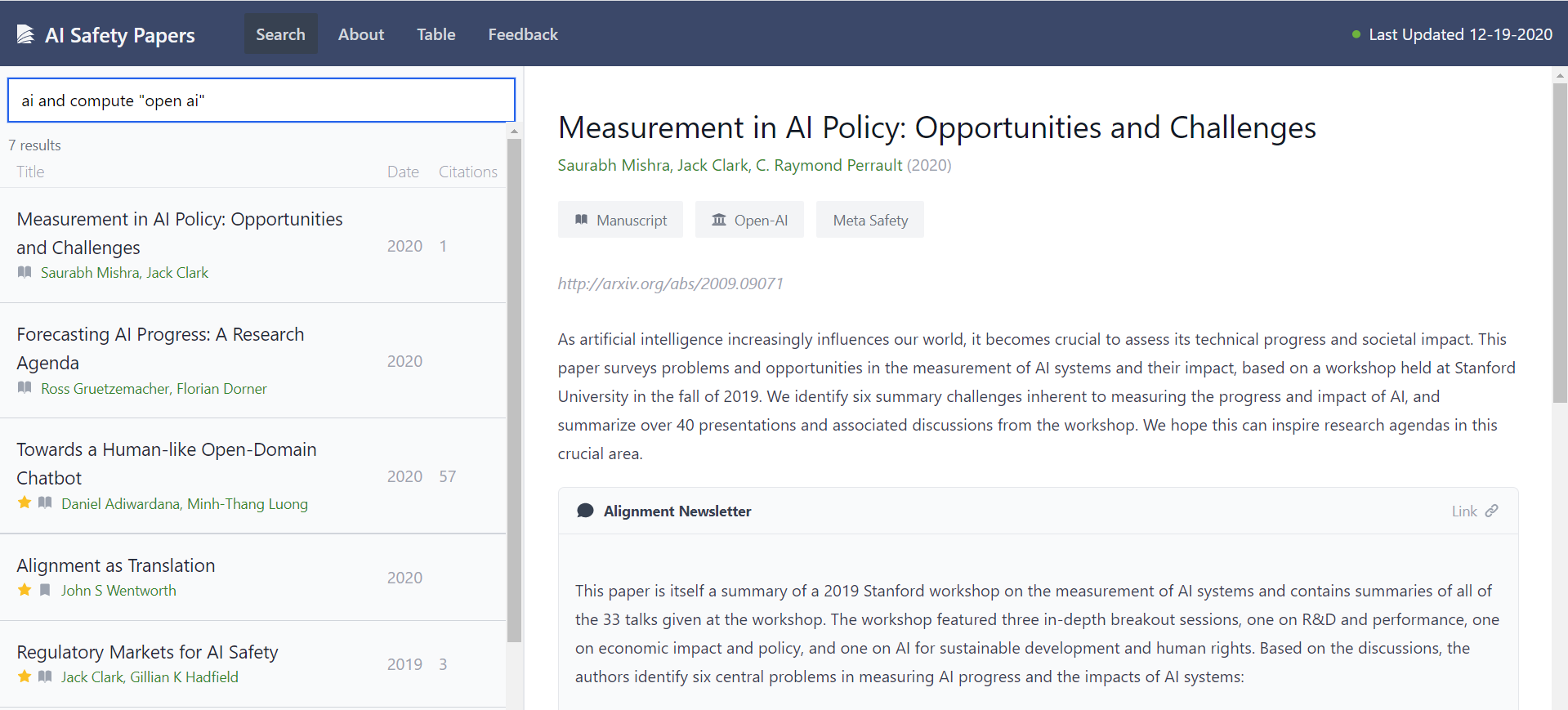

Hmm that didn't quite work. The top result is from OpenAI, but the rest are not.

Maybe I should spell it differently?

Oh cool that worked! So apparently the blogpost is not in the dataset.

Anyway, enough browsing for today.

Alright, feedback:

- This is a very cool tool. The interface is neatly designed.

- Discovering new content seems hard. Some things that could help include a) adding recommended content on load (perhaps things with most citations, or even ~10 random papers) and b) having a list of tags somewhere

- The reviewer blurbs are very nice. However I do not expect to use this tool. Or rather I cannot think right now of what exactly I would use this tool for. It has made me consider reaching out to the database maintainers to suggest the inclusion of an article of mine. So maybe like that, to promote my work?

Thanks so much for this feedback, that's really helpful. I really appreciate you walking through the screens and sharing your thoughts and intuitions on each.

I definitely agree with your points. I should note that this app was made in a few weeks for a narrow population; there are clearly a lot of features that would be nice to have but would take more time. Finding good trade-offs is really important but tricky.

Good recommendations and pre-search states are one obvious area to improve, but I think they would require some significant thought to figure out and implement.
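As a rough illustration of the two suggestions (recommended content on load and a list of tags), here is a minimal Python sketch of computing a default landing state. It is not how the app works: the `title`, `citations`, and `tags` keys are assumed field names, and mixing most-cited papers with a few random ones is just one possible choice.

```python
import random
from collections import Counter


def landing_state(papers, n_top=5, n_random=5, seed=None):
    """Build a default view to show before the user has searched.

    papers: list of dicts with hypothetical keys 'title', 'citations', 'tags'.
    Returns the most-cited papers plus a few random others, and tag counts.
    """
    by_citations = sorted(papers, key=lambda p: p.get("citations", 0), reverse=True)
    top, rest = by_citations[:n_top], by_citations[n_top:]

    # Add some random papers so the landing page is not always the same.
    rng = random.Random(seed)
    picks = rng.sample(rest, min(n_random, len(rest)))

    # Count how often each tag appears, most common first.
    tag_counts = Counter(tag for p in papers for tag in p.get("tags", []))
    return {"featured": top + picks, "tags": tag_counts.most_common()}


# Made-up records, used only to show the shape of the input.
example_papers = [
    {"title": "Paper A", "citations": 120, "tags": ["forecasting", "governance"]},
    {"title": "Paper B", "citations": 40, "tags": ["forecasting"]},
    {"title": "Paper C", "citations": 3, "tags": ["interpretability"]},
]
state = landing_state(example_papers, n_top=1, n_random=1, seed=0)
print([p["title"] for p in state["featured"]], state["tags"])
```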

As for when to use this tool, I have my own uses, and am curious to see those of others. I think it has some value now, and hints at a lot more value that could exist with more powerful implementations. I've used it in the past to get a sense of "How promising does researcher|institution X seem?" for which doing a quick search for them, and paying a lot of attention to the blurbs, helps a lot.

(Note: If anyone else reading this wants to maintain/improve it, or just make a better version, please go ahead! I expect to be busy on some other things for a while.)

This looks amazing and is so so important. Someone should fund a kick-ass evangelist, with a marketing budget, to make sure this is front of mind for people on an ongoing basis.

Re: peer review

Maybe integrating some of the reviews that I and other researchers have been doing for testing the waters might be relevant.

Thanks for flagging! I think this is an obvious feature-add. One challenge is that it can be tricky to find all of the reviews, but it's relatively simple when there are just a few total reviewers, and each doesn't have too many reviews.

Awesome tool! I especially like that you included the AF posts, too, as they constitute so much of the knowledge of the Alignment community. I'll definitely be using it and recommending it to newcomers.

Maybe a cool feature would be some featured post, or a start here page. It's not obvious what to put there, but I can imagine a new entrant to the field being a bit lost when thrown in there. ^^

Thanks so much!

> Maybe a cool feature would be some featured post, or a start here page. It's not obvious what to put there, but I can imagine a new entrant to the field being a bit lost when thrown in there. ^^

Agreed! This will take some more thought, for sure. We're mainly focused on a small community, so it seemed okay to have greater learning costs, but I'd really like to figure out something better here longer-term.

Any chance this site could be updated again? Would love to see some of the papers from the past couple of years added. I'd be enthusiastic to help surface them - paper-graph walking is one of my favorite pastimes.

AI Safety Papers is a website to quickly explore papers around AI Safety. The code is hosted on GitHub here.

In December 2020, Jess Riedel and Angelica Deibel announced the TAI Safety Bibliographic Database. At the time, they wrote:

One significant limitation of this system was that there was no great frontend for it. Tabular data and RDF can be useful for analysis, but difficult to casually go through.

We’ve been experimenting with creating a web frontend to this data. You can see this at http://ai-safety-papers.quantifieduncertainty.org.

This system acts a bit like Google Scholar or other academic search engines. However, the emphasis on AI-safety related papers affords a few advantages.

Tips

Questions

Who is responsible for AI Safety Papers?

Ozzie Gooen has written most of the application, on behalf of the Quantified Uncertainty Research Institute. Jess Riedel, Angelica Deibel, and Nuño Sempere have all provided a lot of feedback and assistance.

How can I give feedback?

Please either leave comments, submit feedback through this website, or contact us directly at hello@quantifieduncertainty.org.

How often is the database updated?

Jess Riedel and Angelica Deibel are maintaining the database. They will probably update it every several months or so, depending on interest. We’ll try to update the AI Safety Papers app accordingly. The date of the most recent data update is shown in the header of the app.

Note that the most recent data in the current database is from December 2020.

Future Steps

This app was made in a few weeks, and as such it has a lot of limitations.

You can see several other potential features here. Please feel free to add suggestions or upvotes.

We’re not sure if or when we’ll make improvements to AI Safety Papers. If there is substantial use or requests for improvements, that will carry a lot of weight regarding our own prioritization. Of course, people are welcome to submit pull requests to the GitHub repo directly, or simply fork the project there.