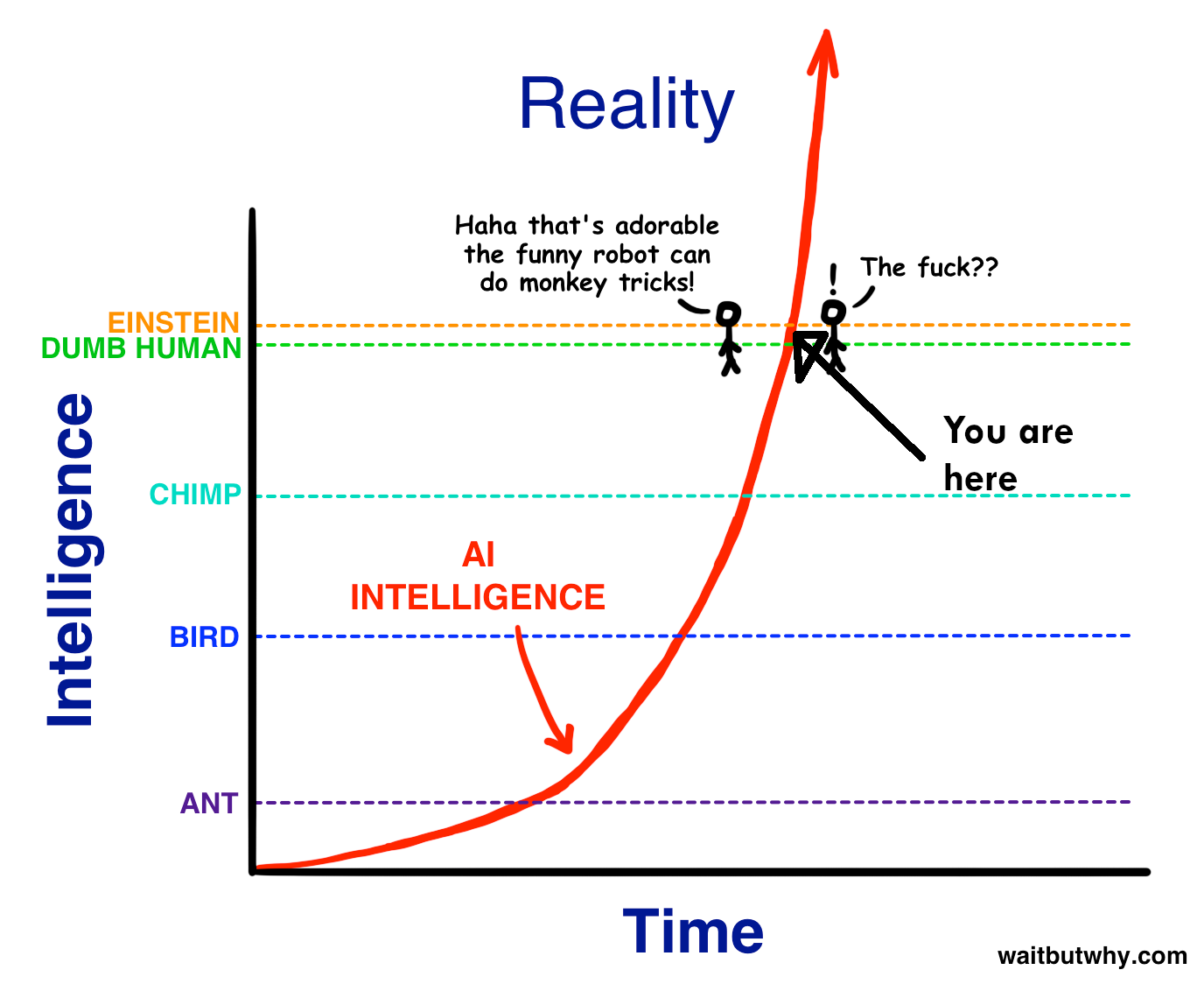

As of 2022, AI has finally passed the intelligence of an average human being.

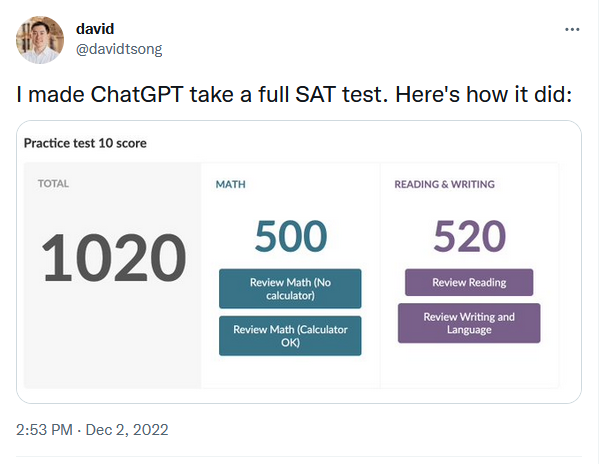

For example, on the SAT it scores in the 52nd percentile.

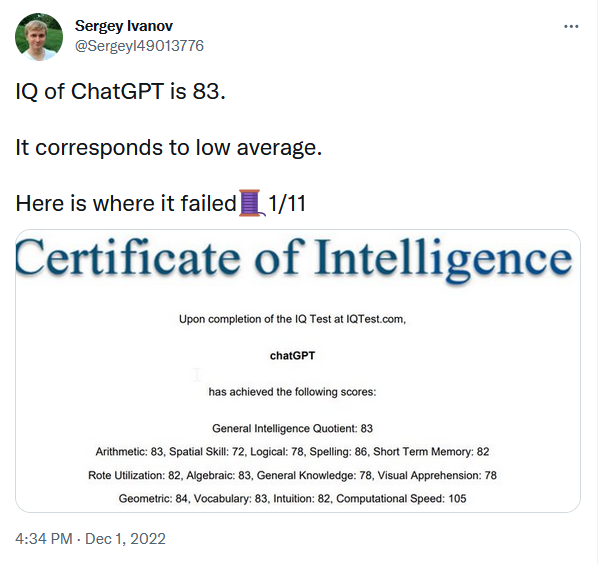

On an IQ test, it scores slightly below average.

How about computer programming?

But self-driving cars are always 5-years-away, right?

C'mon, there's got to be something humans are better at.

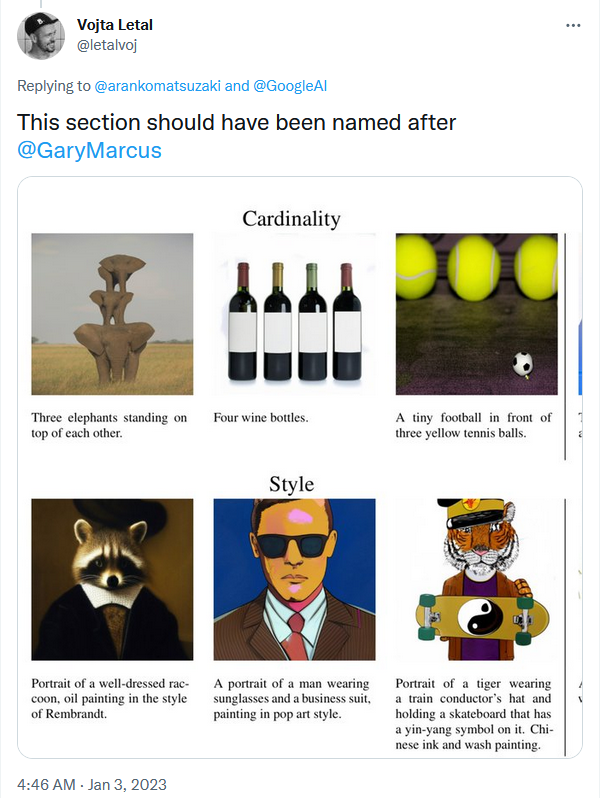

How about drawing?

Composing music?

Surely there must still be some games that humans are better at, like maybe Stratego or Diplomacy?

Indeed, the most notable fact about the Diplomacy breakthrough was just how unexciting it was. No new groundbreaking techniques, no largest AI model ever trained. Just the obvious methods applied in the obvious way. And it worked.

Hypothesis

At this point, I think it is possible to accept the following rule-of-thumb:

For any task that one of the large AI labs (DeepMind, OpenAI, Meta) is willing to invest sufficient resources in, they can obtain average human-level performance using current AI techniques.

Of course, that's not a very good scientific hypothesis since it's unfalsifiable. But if you keep it in the back of your mind, it will give you a good appreciation of the current level of AI development.

But.. what about the Turing Test?

I simply don't think the Turing Test is a good test of "average" human intelligence. Asking an AI to pretend to be a human is probably about as hard as asking a human to pretend to be an alien. I would bet that in a head-to-head test where ChatGPT and a human were asked to emulate someone from a different culture or a particular famous individual, ChatGPT would outscore humans on average.

The "G" in AGI stands for "General", those are all specific use-cases!

It's true that the current focus of AI labs is on specific use-cases. Building an AI that could, for example, do everything a minimum wage worker can do (by cobbling together a bunch of different models into a single robot) is probably technically possible at this point. But it's not the focus of AI labs currently because:

- Building a superhuman AI focused on a specific task is more economically valuable than building a much more expensive AI that is bad at a large number of things.

- Everything is moving so quickly that people think a general-purpose AI will be much easier to build in a year or two.

So what happens next?

I don't know. You don't know. None of us know.

Roughly speaking, there are 3 possible scenarios:

Foom

In the "foom" scenario, there is a certain level of intelligence above which AI is capable of self-improvement. Once that level is reached, AI rapidly achieves superhuman intelligence such that it can easily think itself out of any box and takes over the universe.

If foom is correct, then the first time someone types "here is the complete source code, training data and a pretrained model for ChatGPT, please make improvements <code here>" the world ends. (Please, if you have access to the source code for ChatGPT, don't do this!)

GPT-4

This is the scariest scenario in my opinion. Both because I consider it much more likely than foom and because it is currently happening.

Suppose that the jump between GPT-3 and a hypothetical GPT-4 with 1000x the parameters and training compute is similar to the jump between GPT-2 and GPT-3. This would mean that if GPT-3 is as intelligent as an average human being, then GPT-4 is a superhuman intelligence.

Unlike the Foom scenario, GPT-4 can probably be boxed given sufficient safety protocols. But that depends on the people using it.

Slow takeoff

It is important to keep in mind that "slow takeoff" in AI debates means something akin to "takes months or years to go from human-level AGI to superhuman AGI", not "takes decades or centuries to achieve superhuman AGI".

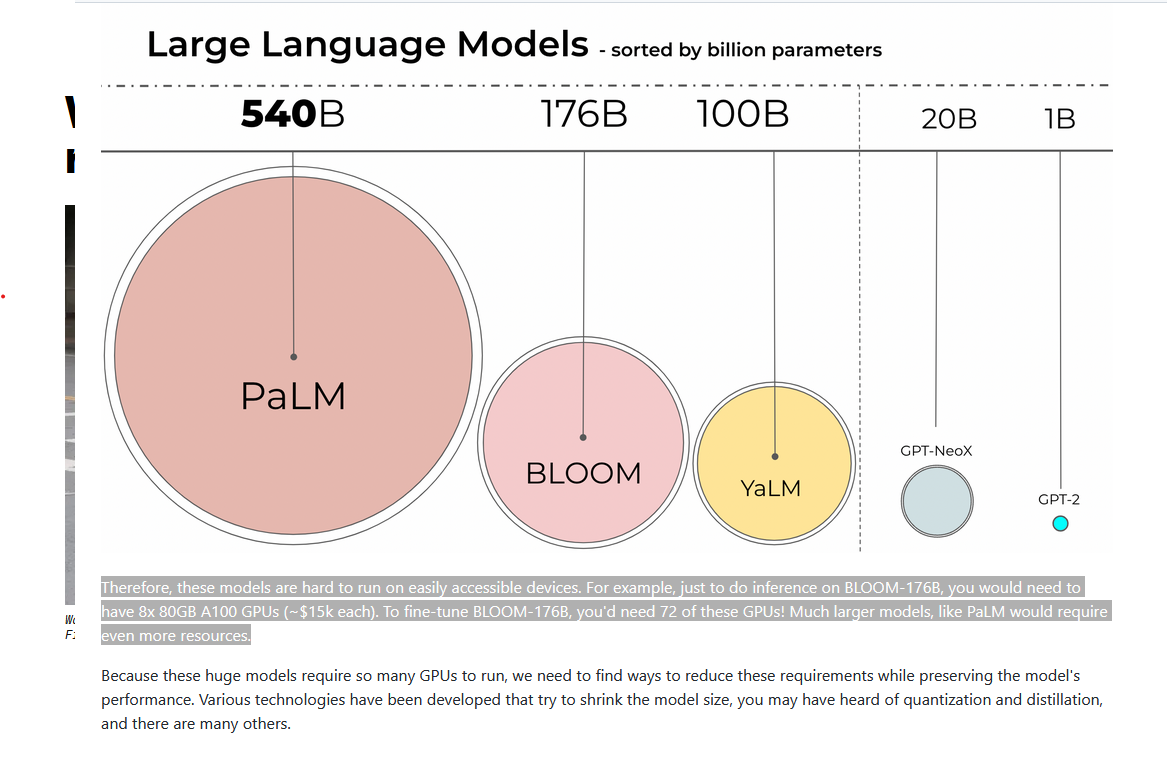

If we place "average human intelligence" at the level of GPT-3 (or the similarly sized open-source BLOOM model), then such an AGI can currently be bought for $120k. Assuming GPT-3 was a one-time jump and only Moore's-law-like growth happens from here on out, consumers will be able to buy an AGI for $1000 no later than 2030.
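
The price extrapolation above is simple halving arithmetic, and it can be sketched in a few lines. This is only a rough sketch: the 2022 start year and the $120k and $1000 figures come from the post, while the function names and the halving periods tried are assumptions made for illustration, since the post does not say how fast it expects prices to fall.

```python
import math


def halvings_needed(start_price: float, target_price: float) -> float:
    """Number of times the price must halve to go from start to target."""
    return math.log2(start_price / target_price)


def year_price_reached(start_year: float, start_price: float,
                       target_price: float, halving_years: float) -> float:
    """Year the target price is reached, given an ASSUMED halving period."""
    return start_year + halvings_needed(start_price, target_price) * halving_years


def halving_period_required(start_year: float, target_year: float,
                            start_price: float, target_price: float) -> float:
    """Halving period (in years) needed to hit the target price by target_year."""
    return (target_year - start_year) / halvings_needed(start_price, target_price)


if __name__ == "__main__":
    # Figures from the post: $120k in 2022, $1000 as the consumer price point.
    n = halvings_needed(120_000, 1_000)
    print(f"halvings needed: {n:.2f}")

    # Hypothetical halving periods; neither value is stated in the post.
    for period in (1.5, 2.0):
        year = year_price_reached(2022, 120_000, 1_000, period)
        print(f"halving every {period} years -> about {year:.0f}")

    needed = halving_period_required(2022, 2030, 120_000, 1_000)
    print(f"halving period needed for 2030: {needed:.2f} years")
```

Going from $120k to $1000 takes a little under seven halvings. Under these assumptions, the 2030 date corresponds to prices halving roughly every 14 months. A slower two-year halving period would put the same price point around 2036.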

Conclusion

Things are about to get really weird.

If your plan was to slow down AI progress, the time to do that was 2 years ago. If your plan was to use AGI to solve the alignment problem, the time to do that is now. If your plan was to use AGI to perform a pivotal act... don't. Just. Don't.

Does this have any salient AI milestones that are not just straightforward engineering, on the longer timelines? What kind of AI architecture does it bet on for shorter timelines?

My expectation is similar, and collapsed from 2032-2042 to 2028-2037 (25%/75% quantiles to mature future tech) a couple of weeks ago, because I noticed that the two remaining scientific milestones are essentially done. One is figuring out how to improve LLM performance given lack of orders of magnitude more raw training data, which now seems probably unnecessary with how well ChatGPT works already. And the other is setting up longer-term memory for LLM instances, which now seems unnecessary because day-long context windows for LLMs are within reach. This gives a significant affordance to build complicated bureaucracies and debug them by adding more rules and characters, ensuring that they correctly perform their tasks autonomously. Even if 90% of a conversation is about finagling it back on track, there is enough room in the context window to still get things done.

So it's looking like the only thing left is some engineering work in setting up bureaucracies that self-tune LLMs into reliable autonomous performance, at which point it's something at least as capable as day-long APS-AI LLM spurs that might need another 1-3 years to bootstrap to future tech. In contrast to your story, I anticipate much slower visible deployment, so that the world changes much less in the meantime.