What sort of project?

i think the survey respondents here are talking about something meaningful and i probably agree with most of their judgments about that thing. for example, with that notion of coherence, i probably agree with "Google (the company) is less coherent now than it was when it had <10 employees" (and this is so even though Google is more capable now than it was when it had 10 employees)

I agree, but there's a caveat that the notion of coherence as operationalized in the linked Sohl-Dickstein post conflates at least two (plausibly more) notions. The three questions he uses to point at the concept of "coherence" are:

- How well can the entity's behavior be explained as trying to optimize a single fixed utility function?

- How well aligned is the entity's behavior with a coherent and self-consistent set of goals?

- To what degree is the entity not a hot mess of self-undermining behavior?

I expect the first two (in most of the respondents) to (connotationally/associationally) evoke the image of an entity/agent that wants some relatively specific and well-defined thing, and this is largely why you get the result that a thermostat is more of a "coherent agent" than Google. But then this just says that with more intelligence, you are capable of reasonably skillfully pursuing more complicated, convoluted, and [not necessarily that related to each other] goals/values, which is not surprising. Another part is that real-world intelligent agents (those capable of ~learning), at least to some extent, do some sort of figuring out / constructing their actual values on the fly or change their mind about what they value.

The third question is pointing at something different: being composed of purposive forces pushing the world in different directions. Metaphorically, something like constructive interference vs destructive interference, or channeling energy to do useful work vs that energy dissipating as waste heat. Poetically, each purposive part has a seed of some purpose in it, and when they compose in the right way, there's "superadditivity" of those purposive parts: they add up to a big effect consistent with the purposes of the purposive parts. "Composition preserves purpose."

A clear human example of incoherence in this sense is someone repeating a cycle of (1) making a specific sort of commitment; and then (2) deciding to abandon that commitment, and continuing to repeat this cycle, even though "they should notice" that the track record clearly indicates they're not going to follow through on this commitment, so they should change something about how they approach the goal the commitment is instrumental for. In this example, the parts of the agent that [fail to cohere]/[push the world in antagonistic directions] are some of their constitutive agent episodes across time.

One vague picture here is that the pieces of the mind are trying to compose in a way that achieves some "big effect".

Your example of superficially learning some area of math for algebraic crunching, but without deep understanding and integration with the rest of your mathematical knowledge, is an example of something "less bad", which we might call "unfulfilled positive interference". The new piece of math does not "actively discohere", because it doesn't screw up your prior understanding. But there might be some potential for further synergy that is not being fulfilled until you integrate it.

To sum up, a highly coherent agent in this sense may have very convoluted values, and so Sohl-Dickstein's "coherence question 3" diverges from "coherence questions 1 and 2".

But then there's a further thing. Being incoherent can be "fine" if you are sufficiently intelligent to handle it. Or maybe more to the point, your capacities suffice to control/bound/limit the damage/loss that your incoherence incurs. You have a limited amount of "optimization power" and could spend some of it on coherentizing yourself, but you figure that you're going to gain more of what you want if you spend that optimization power on doing what you want to do with the somewhat incoherent structures that you have already (or you cohere yourself a bit, but not as much as you might).[1] E.g., you can have agents A and B, such that A is more intelligent than B, and A is less coherent than B, but the difference in intelligence is sufficient for A to just permanently disempower B. A could self-coherentize more, but doesn't have to.

It would be interesting (I am just injecting this hypothesis into the hypothesis space without claiming I have (legible) evidence for it) if it turned out that, given some mature way of measuring intelligence and coherence, relatively small marginal gains in intelligence often offset relatively large losses in coherence in terms of something like "general capacity to effectively pursue X class of goals".

[1] With the caveat that the more maximizery/unbounded the values are, the more the goal-optimal optimization power allocation shifts towards actually frontloading a lot of self-coherentizing as capital investment.

I'm not super convinced (but intrigued) by your proposal that a computation is conscious unless it erases bits.

I think you meant to say "is not conscious".

Lol, right! Only after I published this did I recall that there was something vaguely in this spirit called "Polish notation", and it turns out it's exactly that.

The notations we use for (1) function composition; and (2) function types, "go in opposite directions".

For example, take functions $f : A \to B$ and $g : B \to C$ that you want to compose into a function $h : A \to C$, which, starting at some element $x \in A$, uses $f$ to obtain some $y \in B$, and then uses $g$ to obtain some $z \in C$. The type notation goes from left to right, which works well for minds that are used to the left-to-right direction of English writing (and of writing in all extant European languages, and many non-European languages).

One might then naively expect that a function that works like "$f$, then $g$" would be denoted $fg$, or maybe with some symbol combining $f$ on the left and $g$ on the right, like $f \circ g$ or $f \cdot g$.

But, no. It's $g \circ f$ or sometimes $gf$, especially in the category theory land.

The reason for this is simple: function application. For some historical reasons (that are plausibly well-documented somewhere in the annals of history of mathematics), when mathematicians were still developing a symbolic way of doing math (rather than mostly relying on geometry and natural language) (and also the concept of a function, which I hear has undergone some substantial revisions/reconceptualizations), the common way to denote an application of a function $f$ to a number $x$ became $f(x)$. So, now that we're stuck with this notation, to be consistent with it, we need to write the function "$f$, then $g$" applied to $x$ as $g(f(x))$. Hence, we write this function abstractly as $g \circ f$ and read "$g$ after $f$".
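To make the two orderings concrete, here is a minimal Python sketch. The helper names `compose` and `then`, and the toy functions `double` and `describe`, are made up for illustration and are not standard library functions. `compose(g, f)` follows the conventional "g after f" order, while `then(f, g)` follows the reading order "f, then g"; both build the same function.

```python
from typing import Callable, TypeVar

A = TypeVar("A")
B = TypeVar("B")
C = TypeVar("C")


def compose(g: Callable[[B], C], f: Callable[[A], B]) -> Callable[[A], C]:
    """Conventional order: compose(g, f) is "g after f", i.e. x -> g(f(x))."""
    return lambda x: g(f(x))


def then(f: Callable[[A], B], g: Callable[[B], C]) -> Callable[[A], C]:
    """Reading order: then(f, g) is "f, then g", i.e. also x -> g(f(x))."""
    return lambda x: g(f(x))


def double(x: int) -> int:
    """Hypothetical first step, of type int -> int."""
    return x * 2


def describe(x: int) -> str:
    """Hypothetical second step, of type int -> str."""
    return f"value={x}"


# Same function, written with the arguments in opposite orders.
print(compose(describe, double)(5))  # value=10
print(then(double, describe)(5))     # value=10
```

The only difference between the two helpers is the order in which the functions are named, which is exactly the mismatch between composition notation and type notation discussed here.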

To make the weirdness of this notation fully explicit, let's add types to the symbols (which I unconventionally write in square brackets to distinguish typing from function application):

$$(g[B \to C] \circ f[A \to B])(x[A])$$

You can see the logic to this madness, but it feels unnatural. Like, of course, you could just as well write the function signature the other way around (like $B \leftarrow A$ or $C \leftarrow B$) or introduce a notation for function composition that respects our intuition that a composition of "$f$, then $g$" should have $f$ on the left and $g$ on the right (again, assuming that we're used to reading left-to-right).

Regarding the first option, I recall Bartosz Milewski saying in his lectures that "we draw string diagrams" right-to-left, so that the direction of "flow" in the diagram is consistent with the $g \circ f$ ("$g$ after $f$") notation. But now looking up some random string diagrams, this doesn't seem to be the standard practice (even when they're drawn horizontally, whereas it seems like they might be more often drawn vertically).

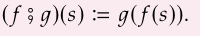

Regarding the second option, some category theory materials use the funky semicolon notation, writing $f ; g$ for "$f$, then $g$", e.g., Seven Sketches in Compositionality (Definition 3.24). I recall encountering it in places other than that textbook, but I don't think it's standard practice.

I like it better, but it still leaves something to be desired, because the explicitized signature of all the atoms of the expression on the left is something like $(f[A \to B] \mathbin{;} g[B \to C])(x[A])$. Why not have a notation that respects the obvious and natural order of $x[A]$, then $f[A \to B]$, then $g[B \to C]$?

Well, many programming languages actually support pipe notation that does just that.[1]

Julia:

add2(x)=x+2

add3(x)=x+3

10 |> add2 |> add3

# 15

Haskell:

import Data.Function ((&))

add2=(+2)

add3=(+3)

10 & add2 & add3

-- 15

Nushell:

def add2 [] {$in + 2}

def add3 [] {$in + 3}

10 | add2 | add3

# 15

Which makes me think of an alternative timeline where we developed a notation for function application where we first write the arguments and then the functions to be applied to them.

And, honestly, I find this idea very appealing. Elegant. Natural. Maybe it's because I don't need to switch my reading mode from LtR to RtL when I'm encountering an expression involving function application, and instead I can just, you know, keep reading. It's a marginal improvement in fluent interoperability between mathematical formalisms and natural language text.
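For comparison, the same argument-first style can be approximated in a language without a built-in pipe operator. The following is a minimal Python sketch, assuming a hypothetical helper called `pipe` (not part of the standard library); `functools.partial` is one possible way to fit a function of more than one argument into the chain.

```python
from functools import partial, reduce
from typing import Any, Callable


def pipe(value: Any, *funcs: Callable[[Any], Any]) -> Any:
    """Feed value through funcs from left to right: pipe(x, f, g) == g(f(x))."""
    return reduce(lambda acc, func: func(acc), funcs, value)


def add(n: int, x: int) -> int:
    """Two-argument function; the piped value arrives as the last argument."""
    return x + n


# Fix the first argument, leaving one-argument functions that fit the pipeline.
add2 = partial(add, 2)
add3 = partial(add, 3)

result = pipe(10, add2, add3)
print(result)  # 15
```

Here the expression reads in the same order as the computation: the argument first, then each function in the order it is applied.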

In a sense, our current style is a sort of boustrophedon, although admittedly a more unruly sort, as the "direction" of the function depends on the context, rather than cycling through LtR and RtL.

(Sidenote: I recently learned about head-directionality, a proposed parameter of languages. Head-initial languages put the head of the phrase before its complements, e.g., "dog black". Head-final languages put it after the complements, e.g., "black dog". Many languages are a mix. Our mathematical notation is a mix in this regard too, and I am gesturing that it would be nicer for it to be argument-initial.)

[This is a simple thing that seems evident and obvious to me, but I've never heard anyone comment on it, and for some reason, it didn't occur to me to elaborate on this thought until now.]

[1] I think pipe notations are less friendly if you want to do something more complicated with functions accepting more than one argument, but this seems solvable.

[Epistemic status: shower thought]

The reason why agent foundations-like research is so intractable and slippery and very prone to substitution hazards, etc., is largely that it is anti-inductive, and the key source of its anti-inductivity is the demons in the universal prior preventing the emergence of other consequentialists, which could become trouble for their acausal monopoly on the multiverse.

(Not that they would pose a true threat to their dominance, of course. But they would diminish their pool of usable resources a little bit, so it's better to nip them in the bud than manage the pest after it grows.)

It's kinda like grabby aliens, but in logical time.

/j

To generalize Bayesianism, we want to instead talk about what the right "cooperative" strategy is when a) you don't think any of them are exactly correct, and b) when each hypothesis has goals too, not just beliefs.

Unclear to me how (b) connects to the rest of this post. Is it about each hypothesis being cautious not to bet all of their wealth, because they care about other stuff than winning the market?

The most obvious/naive/hacky solution is something like sub-probability (adds up to ≤1, so the truth might lie beyond your hypothesis space) with Jeffrey updating or updating via virtual evidence (which handles the "none of them are exactly correct" part).
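As a rough illustration of how those two ingredients might fit together, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the function names, the toy hypotheses `H1`/`H2`, the evidence cells `rain`/`dry`, the numbers, and in particular the choice to leave the unassigned mass (the "truth lies outside the hypothesis space" part) untouched by the update. The update itself is the usual Jeffrey rule: keep each P(hypothesis | evidence cell) fixed and move the evidence cells to their new weights.

```python
from typing import Dict, Tuple

# (hypothesis, evidence cell) -> mass; the masses may sum to less than 1.
Joint = Dict[Tuple[str, str], float]


def evidence_marginal(joint: Joint) -> Dict[str, float]:
    """Total mass currently assigned to each evidence cell."""
    totals: Dict[str, float] = {}
    for (_, cell), mass in joint.items():
        totals[cell] = totals.get(cell, 0.0) + mass
    return totals


def hypothesis_marginal(joint: Joint) -> Dict[str, float]:
    """Total mass currently assigned to each hypothesis."""
    totals: Dict[str, float] = {}
    for (hyp, _), mass in joint.items():
        totals[hyp] = totals.get(hyp, 0.0) + mass
    return totals


def jeffrey_update(joint: Joint, new_cell_probs: Dict[str, float]) -> Joint:
    """Jeffrey update that preserves the overall (sub-normalized) mass.

    new_cell_probs gives the new relative weights of the evidence cells and
    is assumed to sum to 1. Preserving the total mass is an assumption of
    this sketch, not an established rule for sub-probabilities.
    """
    total = sum(joint.values())
    old = evidence_marginal(joint)
    updated: Joint = {}
    for (hyp, cell), mass in joint.items():
        if old[cell] == 0.0:
            # No prior mass in this cell, so there is no conditional to preserve.
            updated[(hyp, cell)] = 0.0
            continue
        conditional = mass / old[cell]  # P(hyp | cell), kept fixed
        updated[(hyp, cell)] = conditional * new_cell_probs[cell] * total
    return updated


# Toy prior: only 0.8 of the mass is assigned; 0.2 is "none of the above".
prior: Joint = {
    ("H1", "rain"): 0.30,
    ("H1", "dry"): 0.20,
    ("H2", "rain"): 0.06,
    ("H2", "dry"): 0.24,
}

# Uncertain evidence: rain now seems 90% likely, without being certain.
posterior = jeffrey_update(prior, {"rain": 0.9, "dry": 0.1})
print(hypothesis_marginal(posterior))
print(sum(posterior.values()))
```

In this toy setup the hypothesis that favored rain gains weight, while the total assigned mass stays below 1, so the possibility that no listed hypothesis is correct is still represented after the update.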

Someone somewhere connected sub-probability measures with intuitionistic logic, where a market, instead of resolving at exactly one of the options, may just fail to resolve, or not resolve in a relevant time frame.

As part of the onboarding process for each new employee, someone sits down with him or her and says “you need to understand that [Company]’s default plan is to pause AI development at some point in the future. When we do that, the value of your equity might tank.”

An AGI/ASI pause doesn't have to be a total AI pause. You can keep developing better protein-folding prediction AI, better self-driving car AI, etc. All the narrow-ish sorts of AI that are extremely unlikely to super-intelligently blow up in your face. Maybe there are insights gleaned from the lab's past AGI-ward research that are applicable to narrow AI. Maybe you could also work on developing better products with existing tech. You just want to pause plausibly AGI-ward capabilities research.

(It's very likely that some of the stuff that I would now consider "extremely unlikely to super-intelligently blow up in your face" would actually have a good chance of superintelligently blowing up in your face. So what sort of research is safe to do in that regard should also be an area of research.)

(There's also the caveat that work on those narrow applications could turn up some pieces for building AGI.)

This sort of pivot might make the prospects of pausing more palatable to other companies. Plausible that that's what you had in mind all along, but I think it's better for this to be very explicit.

Yeah.

Probably not a full-monograph-length monograph, because I don't think either that (1) the coherence-related confusions are isolated from other confused concepts in this line of inquiry, or that (2) the descendants of the concept of "coherence" will be related in some "nature-at-joint-carving" way, which would justify discussing them jointly. (Those are the two reasons I see why we might want a full-monograph-length monograph untangling the mess of some specific, confused concept.)

But an investigation (of TBD length) covering at least the first three of your bullet points seems good. I'm less sure about the latter two, probably because I expect that after the first three steps, a lot of new salient questions will appear, whereas the then-available answers about the relationship with capabilities will be rather scant (plausibly because the concept of capabilities itself would need to be refactored for more answers to be available), and that the result of this single-concept-deconfusing investigation alone will have rather few implications for AGI X-risk (but might be a fruitful input to future investigation, which is the point).