[Semi-half baked. I don’t reach any novel conclusions in this post, but I do flesh out my own thinking on the way to generally accepted conclusions.

I’m pretty interested in anything here that seems incorrect (including in nit-picky ways), or in hearing additional factors that influence the relevance and importance of pivotal acts.]

In AGI Ruin: A List of Lethalities, Eliezer claims

2. A cognitive system with sufficiently high cognitive powers, given any medium-bandwidth channel of causal influence, will not find it difficult to bootstrap to overpowering capabilities independent of human infrastructure. The concrete example I usually use here is nanotech, because there's been pretty detailed analysis of what definitely look like physically attainable lower bounds on what should be possible with nanotech, and those lower bounds are sufficient to carry the point. My lower-bound model of "how a sufficiently powerful intelligence would kill everyone, if it didn't want to not do that" is that it gets access to the Internet, emails some DNA sequences to any of the many many online firms that will take a DNA sequence in the email and ship you back proteins, and bribes/persuades some human who has no idea they're dealing with an AGI to mix proteins in a beaker, which then form a first-stage nanofactory which can build the actual nanomachinery….The nanomachinery builds diamondoid bacteria, that replicate with solar power and atmospheric CHON, maybe aggregate into some miniature rockets or jets so they can ride the jetstream to spread across the Earth's atmosphere, get into human bloodstreams and hide, strike on a timer. Losing a conflict with a high-powered cognitive system looks at least as deadly as "everybody on the face of the Earth suddenly falls over dead within the same second".

And then later,

6. We need to align the performance of some large task, a 'pivotal act' that prevents other people from building an unaligned AGI that destroys the world. While the number of actors with AGI is few or one, they must execute some "pivotal act", strong enough to flip the gameboard, using an AGI powerful enough to do that. It's not enough to be able to align a weak system - we need to align a system that can do some single very large thing. The example I usually give is "burn all GPUs"

...

Many clever-sounding proposals for alignment fall apart as soon as you ask "How could you use this to align a system that you could use to shut down all the GPUs in the world?" because it's then clear that the system can't do something that powerful, or, if it can do that, the system wouldn't be easy to align. A GPU-burner is also a system powerful enough to, and purportedly authorized to, build nanotechnology, so it requires operating in a dangerous domain at a dangerous level of intelligence and capability; and this goes along with any non-fantasy attempt to name a way an AGI could change the world such that a half-dozen other would-be AGI-builders won't destroy the world 6 months later.

An important component of a “pivotal act” as it is described here is its preemptiveness. As the argument goes: you can’t defeat a superintelligence aiming to defeat you, so the only thing to do is to prevent that superintelligence from being turned on in the first place.

I want to unpack some of the assumptions that are implicit in these claims, and articulate in more detail why, and in what conditions specifically, a pivotal act is necessary.

I'll observe that there are some specific properties of this particular example, building and deploying nanotech weapons, used here as an illustration of superintelligent conflict, which make a pivotal act necessary.

If the dynamics of superintelligent conflict somehow turn out not to have the following properties, and takeoff is decentralized (so that the gap between the capability levels of the most powerful systems and the next most powerful systems is always small), I don't think this argument holds as stated.

But unfortunately, it seems like these properties probably do hold, and so this analysis doesn’t change the overall outlook much.

Those properties are:

- Offense dominance (vs. defense dominance)

- Intelligence advantage dominance (vs. material advantage dominance)

- The absolute material startup costs of offense are low

For all of the following I’m presenting a simple, qualitative model. We could devise quantitative models to describe each axis.

Offense dominance (vs. defense dominance)

The nanotech example, like nuclear weapons, is presented as strongly offense-dominant.

If it were just as easy or even easier to use nanotech to develop nano-defenses that reliably defeat diamondoid bacteria attacks, the example would cease to recommend a pivotal act — you only need to rely on a debilitating preemptive strike if you can’t defend realistically against an attacker, and so you need to prevent them from attacking you in the first place.

If defense is easier than offense, then it makes at least as much sense to build defenses as to attack first.

(Of course, as I’ll discuss below, it’s not actually a crux if nanotech in particular has this property. If this turns out to be the equilibrium of nanowar, then nanotech will not be the vector of attack that an unaligned superintelligence would choose, precisely because the equilibrium favors defense. The crux is that there is at least one technology that has this property of favoring offense over all available defenses.)

If it turns out that the equilibrium of conflict between superintelligences, not just in a single domain, but overall, favors defense over offense, pivotal acts seem less necessary.[1]

Intelligence-advantage dominance (vs. material-advantage dominance)

[Inspired by this excellent post.]

There’s a question that applies to any given competitive game: what is the shape of the indifference curve between increments of intelligence and increments of material resources?

For instance, suppose that two AIs are going to battle in the domain of aerial combat. Both AIs are controlling a fleet of drones. Let’s say that one AI has a “smartness” of 100, and the other has a “smartness” of 150, where “1 smart” is some standardized unit. The smarter AI is able to run more sophisticated tactics to defeat the other in aerial combat. This raises the question: how many additional drones does the smartness-100 AI need to have at its disposal to compensate for its intelligence disadvantage?
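One toy way to put numbers on this question is to borrow Lanchester's laws from combat modeling. This is only a sketch under strong assumptions that are mine, not the post's: "smartness" is treated as a per-drone effectiveness multiplier, and a fleet's fighting strength is effectiveness times N (linear law) or effectiveness times N squared (square law). The function name `break_even_fleet_ratio` is hypothetical.

```python
import math


def break_even_fleet_ratio(smart_weak: float, smart_strong: float, law: str = "square") -> float:
    """How many times more drones the weaker AI needs to reach parity.

    Assumption (not from the post): per-drone effectiveness is proportional
    to "smartness". Under Lanchester's linear law, fighting strength is
    effectiveness * N; under the square law it is effectiveness * N**2.
    """
    quality_ratio = smart_strong / smart_weak
    if law == "linear":
        return quality_ratio
    if law == "square":
        return math.sqrt(quality_ratio)
    raise ValueError(f"unknown law: {law!r}")


for law in ("linear", "square"):
    ratio = break_even_fleet_ratio(100, 150, law)
    print(f"{law}: the smartness-100 AI needs {ratio:.2f}x as many drones")
```

Under these proportional assumptions the intelligence gap is cheap to buy back with extra drones. An intelligence-advantage dominant game would be one where the real curve is far steeper than either law suggests, so the interesting empirical question is how badly this kind of model fails.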

We can ask an analogous question of any adversarial game.

- What level of handicap in chess, or Go, compensates for what level of skill gap measured in Elo ratings?

- If two countries go to war, how much material wealth does one need to have to compensate for the leadership and engineering teams of the other being a standard deviation smarter, on average?

- If one company has the weights of a 150 IQ AI, but access to 10x less compute resources than their competitor whose cutting edge system is only IQ 130, which company makes faster progress on AI R&D?
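For the chess case, the standard Elo model gives one side of the exchange rate: it maps a rating gap to an expected score. It says nothing about the material side (how many rating points a given handicap is worth), which would have to be measured empirically, so no such conversion is assumed in this sketch.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected score for player A against player B under the Elo model.

    A win counts as 1, a draw as 0.5, and a loss as 0.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# How quickly a skill gap turns into a near-certain result.
for gap in (0, 100, 200, 400, 800):
    score = elo_expected_score(1500 + gap, 1500)
    print(f"rating gap {gap:>3}: stronger side expects {score:.3f}")
```

A 400-point gap already corresponds to an expected score of 10/11 for the stronger player. Measuring which handicap pulls that back to 0.5 would trace out one point on the indifference curve for that game.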

Some games are presumably highly intelligence-advantage dominant: small increments of intelligence over one’s adversaries compensate for vast handicaps of material resources. Other games will embody the opposite dynamic: the better-resourced adversary reliably wins, even against more intelligent opponents.[2]

The more that conflict involving powerful intelligences turns out to be slanted towards an intelligence-advantage vs. a material-advantage, the more a preemptive pivotal act is necessary, even in worlds where takeoff is decentralized, because facing off against a system that is even slightly smarter than you is very doomed.

But if conflict turns out to be material-advantage dominant for some reason, the superior number of humans (or of less intelligent but maybe more aligned AIs), with their initial control over greater material resources, makes a very smart system less of an immediate threat.

(Though it doesn’t make them no threat at all, because as I discuss later, intelligence advantages can be used to accrue material advantages through mundane avenues, and you can be just as dead, even if the process takes longer and is less dramatic looking.)

The absolute material startup costs of offense are low

Another feature of the nanotech example is that developing nanotech, if you know how to do it, is extremely cheap in material resources. It is presented as something that can be done with a budget of a few hundred dollars.

Nanowar is not just intelligence-advantage dominant, it has low material resource costs in absolute terms.

This matters, because the higher the start-up costs for developing and deploying weapons, the more feasible it becomes to enforce a global ban against using them.

As an example, nuclear weapons are strongly offense-dominant. There’s not much you can do to defend yourself from a nuclear launch other than to threaten to retaliate with second strike capability.

But nuclear weapons require scarce uranium and complicated development processes. They’re difficult (read: expensive) to develop, and that difficulty means that it is possible for the international community to strongly limit nuclear proliferation to only the ~9 nuclear powers. If creating a nuclear explosion were as easy as “sending an electric current through a metal object placed between two sheets of glass”, the world would have very little hope of coordinating to prevent the proliferation of nuclear arsenals or to prevent those capabilities from being used.

A strongly offense-dominant or strongly intelligence-advantage dominant technology, if it is expensive and obvious to develop and deploy, has some chance of being outlawed by the world, with a ban that is effectively enforced.

This would still be an unstable situation which requires predicting in advance what adversarial technologies the next generation of Science and Engineering AIs might find, and establishing bans on them in advance, and hoping that all those technologies turn out to be expensive enough that the bans are realistically enforceable.

(And by hypothesis of course, humanity is currently not able to do this, because otherwise we should just make a ban on AGI, which is the master adversarial technology.)

If there’s an offense-dominant or intelligence-advantage dominant weapon that has low startup costs, I don’t see what you can do except a preemptive strike to prevent that weapon from being developed and used in the first place.

Summary and conclusions

Overall, the need for a pivotal act depends on the following conjunction / disjunction.

The equilibrium of conflict involving powerful AI systems lands on a technology / avenue of conflict which is (either offense dominant, or intelligence-advantage dominant) and can be developed and deployed inexpensively or quietly.

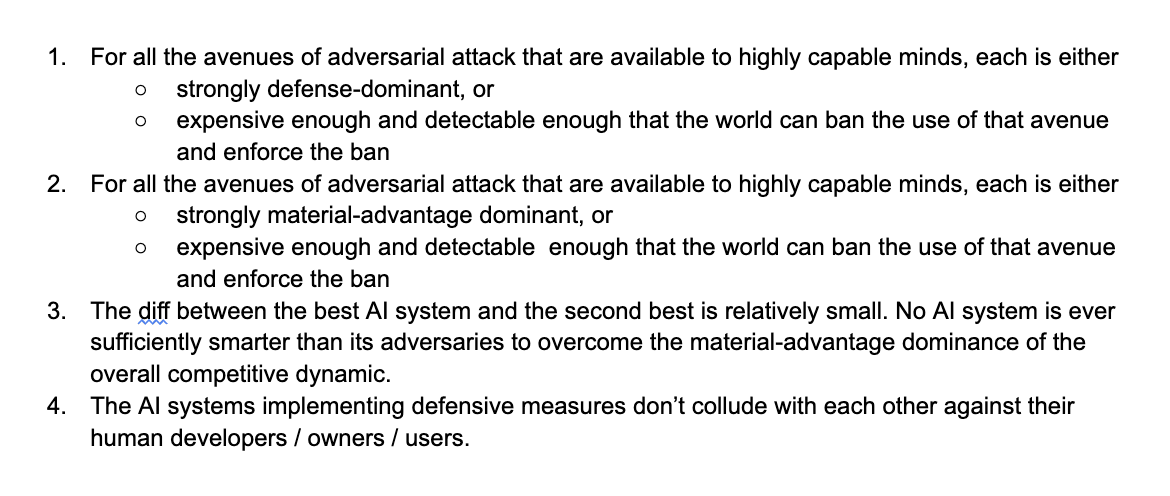
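The conjunction / disjunction can be written out as a small predicate. This is a minimal sketch: the class and function names are hypothetical, and the labels on the two examples are illustrative readings of how the post describes them (in particular, marking nuclear weapons as not intelligence-advantage dominant is my assumption, since the post does not say).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConflictAvenue:
    """One technology / avenue of conflict, described along the post's axes."""
    offense_dominant: bool
    intelligence_advantage_dominant: bool
    cheap: bool  # low absolute material startup cost
    quiet: bool  # can be developed and deployed without being noticed


def favors_pivotal_act(avenue: ConflictAvenue) -> bool:
    """(offense dominant OR intelligence-advantage dominant) AND (cheap OR quiet)."""
    dominant = avenue.offense_dominant or avenue.intelligence_advantage_dominant
    hard_to_ban = avenue.cheap or avenue.quiet
    return dominant and hard_to_ban


# Illustrative labels only, following how the post presents each example.
nanotech_as_presented = ConflictAvenue(True, True, cheap=True, quiet=True)
nuclear_weapons = ConflictAvenue(True, False, cheap=False, quiet=False)

print(favors_pivotal_act(nanotech_as_presented))  # True
print(favors_pivotal_act(nuclear_weapons))        # False
```

The statement holds for the world as a whole if it holds for at least one available avenue, which is why a single cheap, dominant technology is enough to carry the argument.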

If, somehow, this statement turned out not to be true, a preemptive pivotal act that prevents the development of AGI systems would seem less necessary. In that case, a large number of relatively aligned AI systems could be used to implement defensive measures that would defeat the attacks of even the most powerful system in the world (so long as the most advanced system’s capability is not too much higher than the second-best system’s, and those AI systems don’t collude with each other).[3]

Unfortunately, I think all three of these are very reasonable assumptions about the dynamics of AGI-fueled war. The key reason is that there is adverse selection on all of these axes.

On the offense versus defense axis, an aggressor can choose any avenue of attack from amongst the whole universe of possibilities, and a defender has to defend on the avenue of attack chosen by the aggressor. This means that if there is any front of conflict in which offense has the advantage, then the aggressor will choose to attack along that vulnerable front. For that reason, conflict between superintelligences as a whole will inherit the offense dominance of that specific case.[4]
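A back-of-the-envelope version of this adverse selection: the attacker only needs one offense-dominant front to exist, so the chance of finding an opening grows quickly with the number of fronts. The sketch below assumes, purely for illustration, that each front is independently offense-dominant with the same probability. The function name and the 2% figure are hypothetical.

```python
def p_attacker_finds_opening(p_front: float, n_fronts: int) -> float:
    """Probability that at least one of n_fronts is offense-dominant.

    Assumption (mine, not the post's): each front is independently
    offense-dominant with the same probability p_front.
    """
    return 1.0 - (1.0 - p_front) ** n_fronts


# Even if any single front is very unlikely to favor offense,
# the attacker gets to pick the best of many.
for n in (1, 10, 50, 200):
    print(f"{n:>3} fronts: {p_attacker_finds_opening(0.02, n):.2f}")
```

With a 2% chance per front, 200 candidate fronts give the attacker roughly a 98% chance that some offense-dominant avenue exists. Real fronts are not independent, so this is only meant to show the direction of the effect.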

The same adverse selection holds for intelligence-advantage dominance vs. material-advantage dominance. If there are many domains in which the advantage goes to the better resourced, and only one which is biased towards the more intelligent, an intelligent entity would seize on that one domain, perhaps converting its gains into a resource advantage in the other games until it dominates in all relevant domains. [5]

This is a framing of the fundamental reason why getting into a conflict with a superintelligence is lethal: facing an intelligent entity that is smarter than you, and can see more options than you, there’s adverse selection by which you predictably end up in situations in which it wins.

Furthermore, the overall equilibrium of conflict is a function of technology level. Phalanxes dominate war until the stirrup allows for mounted knights to brace against something as they charge into the enemy. And heavily armored and highly trained mounted knights are the dominant military force until firearms and longbows make them vulnerable to ranged attack.[6]

Even if we somehow get lucky and the conflict equilibrium is defense dominant and material-advantage dominant near the development of the first Science and Engineering AIs, we will only have to wait a few months (or even less time) before the next generation of Science and Engineering AIs unlocks new technological frontiers, re-rolling the dice on all of these axes. It’s only a matter of time before the statement above holds.

Given all that, it seems like you need something like a pivotal act, whether unilateral, backed by a nation-state, or backed by a coalition of nation-states, that can prevent the development of powerful science and engineering AIs, before they can develop and deploy the kinds of technologies that are unfavorable along these dimensions.

- ^

To be clear, this would be a strong (and strange) claim about the world. It would mean that in general, it is easier to defend yourself from an attack, than it is to execute an attack, across all possible avenues of attack that an aggressor might choose.

- ^

Note that the answer to this question is presumably sensitive to what strata of intelligence we’re investigating. If I were investigating this in more detail, I would model it as a function that maps the indifference between resources and intelligence, at different intelligence levels.

- ^

Just to spell out the necessary struts of the story under which a pivotal act is not necessary:

If all of these held, it might be feasible to have a lattice of agents all punishing defections from some anti-destructive norms, because for any given AI system, their incentive would be to support the enforcement lattice, given that they would not succeed in overthrowing it.

- ^

A reader pokes some holes in this argument. He suggests that even given this constraint, there are just not that many strongly offense-dominant weapon paradigms. This sort of argument suggests that Russia can obviously demolish Ukraine, given that they can choose from any of the many avenues of attack available to them. But in practice, they were not able to.

Furthermore, sometimes technologies have a strong offense-advantage, but with the possible exception of nuclear weapons, that advantage only extends to one area of war. German submarines put a stranglehold on Allied shipping in the Atlantic during WWI, but a decisive win in that theater does not entail winning the global conflict.

I am uncertain what to think about this. I think maybe the reason why we see a rarity of strong offense-dominant technologies in history is a combination of the following:

1) Total war is relatively rare. Usually aggressors want to conquer or take the stuff of their targets, not utterly annihilate them. It seems notable that if Russia wanted to completely destroy the Ukrainian people and nation-state, nuclear weapons are a sufficient technology to do that.

(Would total war to the death be more common between AGI systems? I guess “yes”, if the motivation for war is less “wanting another AI’s current material resources”, and more “wanting to preempt any future competition for resources with you.” But this bears further thought.)

2) I think there’s a kind of selection effect where, when I query my historical memory, I will only be able to recall instances of conflict that are in some middle ground where neither defense nor offense has a strong advantage, because in reality all other conflicts end up not happening for any length of time.

- ^

Note however, that a plan of investing in intelligence-advantage dominant domains (perhaps playing the stock markets?) to build up a material advantage might look quite different than the kind of instantaneous strike by a superintelligence depicted in the opening quote.

Suppose that it turns out that nanotechnology is off the table for some reason, and cyberwar turns out (surprisingly) to be defense-dominant once AGIs have patched most of the security vulnerabilities, and (even more surprisingly) superhuman persuasion is easily defeated by simple manipulation-detection systems, and so on, removing all the mechanisms by which a hard or soft conflict could be intelligence-advantage dominant.

In that unusual case, we would see an AI takeover that doesn’t look sudden at all. It looks like a system or a consortium of systems using its superior intelligence to accrue compounding resources over months and years. That might entail beating the stock market, or developing higher quality products, or making faster gains in AI development.

It does that until it has not only an intelligence-advantage, but also a material-advantage, and then potentially executes a crushing strike that destroys human civilization, which possibly looks something like a shooting war: deploying overwhelmingly more robot drones than will be deployed to defend humans, maybe combined with a series of nuclear strikes.

- ^

There’s a general pattern whereby a new offensive technology is introduced and is dominant for a period, until a defensive countermeasure is developed and the offensive technology is made obsolete, after which aggressors switch to a new vector of attack or find a defeater for the defensive measure. (E.g., cannons dominate naval warfare until the development of ironclads; submarines dominate merchant vessels until the convoy system defeats them; nuclear weapons are overwhelming until the development of submarine second-strike capability as a deterrent, but those submarines would be obviated by machine learning technologies that can locate them.)

This pattern might mean that conflict between superintelligences has a different character, if they can see ahead and predict what the stack of measures and countermeasures is. Just as a chess player doesn’t attack their opponent’s rook if they can see that doing so would expose their queen one move later, maybe superintelligences don’t even bother to build the nuclear submarines, because they can immediately foresee the advanced sonar that can locate those submarines, nullifying their second-strike capability.

Conflict between superintelligences might entail mostly playing out the offense-defense dynamics of a whole stack of technologies, to the end of the stack, and then building only the cheapest dominating offensive technologies, and all the dominating defensive technologies that, if you don’t have them, allow your adversaries to disable you before you are able to deploy your offensive tech.

Or maybe the equilibrium behavior is totally different! It seems like there’s room for a lot more thought here.