This is really nicely written, but unfortunately the main lesson for me is that I don't understand the Löb's Theorem. Despite reading the linked PDF file a few times. So I guess I will use this place to ask you a few things, since I like your way of explaining.

First, I may have just ruined my math credentials by admitting that I don't understand Löb's Theorem, but it doesn't mean that I suck at maths completely. I feel pretty confident in elementary- and maybe even high-school math. Let's say that I am confident I would never tell someone that 2+2=3.

Second, if I understand the rules for implication correctly, if the premise is false, the implication is always true. Things like "if 2+2=3, then Eliezer will build a Friendly AI" should be pretty non-controversial even outside of LessWrong.

So it seems to me that I can safely say "if I tell you that 2+2=3, then 2+2=3". (Because I know I would never say that 2+2=3.)

But Mr. Löb insists that it means that I just claimed that 2+2=3.

And I feel like Mr. Löb is totally strawmanning me.

Please help!

Oh thanks; I hope I am able to explain it adequately. Your understanding of implication, and what Löb's theorem would imply about yourself (assuming you are a formal mathematical system, of course) are both correct. More formally, for any formal system S at least containing Peano Arithmetic, if this system can prove: (If PA proves that 2+2=3, then 2+2 really is 3), then the system will also prove that 2+2=3. This is pretty bad, since 2+2=4, so we must declare the system inconsistent. This flies pretty strongly against most people's intuition - but since it is true, it's your intuition that needs to be retrained.

The analogy to 'being suspicious' hopefully helps brings in some of the right connotations and intuition. Of course, it's not a perfect analogy, since as you mentioned, this is kind of an uncharitable way to interpret people.

Anyway, I feel less confident that I can explain the proof of Löb's theorem intuitively, but here's a jab at it:

Peano Arithmetic (PA) is surprisingly powerful. In much the same way that we can store and process all sorts of information on computers as binary numbers, we can write all sorts of statements (including statements about PA itself) as numbers. And similar to how all the processing on a computer is reduced to operations with simple transistors, we can encode all the rules of inference for these statements as arithmetic functions.

In particular, we can encode a statement L that says "If a proof of L exists (in PA), then X is true," where the X can be arbitrary.

Now PA can think, "well if I had a proof of L, then unpacking what L means, I could prove that (if a proof of L exists, then X is true)."

"I also know that if I had a proof of L, then I could prove that I can prove L."

"So I can just skip ahead and see that - if I had a proof of L, then I could prove that X is true."

A dishonest PA may then reason - "Since I trust myself, if I knew I had a proof of L, then X would just be true." @

"I can also prove this fact about myself - that if I had a proof of L, then X is true."

"But that's exactly what L says! So I can prove that I can prove L"

"So going back to @, I now know that X is true!"

But X can be literally anything! So this is really bad.

At a coarser level, you can think of as breathing life into things you imagine about yourself. You can try to be careful to only imagine things you can actually see yourself doing, but there are tricky statements like L that can use this to smuggle arbitrary statements out of your imagination into real life! L says, "if you imagine L, then X is true." Even though this is just a statement, and is neither true nor false a priori, you still have to be careful thinking about it. You need a solid barrier between the things you imagine about yourself, and the things you actually do, to protect yourself from getting 'hacked' by this statement.

Hopefully something in here helps the idea 'click' for you. Feel free to ask more questions!

Thanks. I will look at it again tomorrow, but now I guess I have an approximate idea how it goes.

1) We can create various self-referential statements that syntaxtically seem completely innocent. They are composed from the same components and in the same way as ordinary (non-self-referential) statements. So, if the obviously innocent statements are allowed to be made, the clever schemes for creating self-referential statements will be allowed, too. -- This is the first thing to think about, separately from the rest.

2) Self-referential statements are allowed to speak about themselves, and also about me (a given axiomatic system). Thus they can abuse me in various ways which I can't really avoid. Because even if I tried to refuse thinking about them, they can make themselves mean "Viliam refuses to think about me", and provide me a step-by-step proof, which I would have to admit is correct. Then they make themselves something like "If Viliam says I am correct, then 2+2=3", which I cannot avoid or deny, because both would make the statement true, so the only remaining option is to admit it, which means I have admitted that 2+2=3. -- This is another thing to think about., because I don't feel really convinced by what I wrote. I realize that both avoiding to answer, or saying that the statement is incorrect would be wrong... and yet I somehow can't imagine in detail how specifically could I say it was true.

Maybe a better intuition instead of seeing myself as an axiomatic system (which I am not) would be to imagine that at the beginning I precommit to use a specific PA-compatible list of rules... and then I follow the rules blindly, even when I see that the clever statements somehow force me to do things that are somehow wrong, but technically okay according to those rules.

Part of the issue is that you are not subject to the principle of explosion. You can assert contradictory things without also asserting that 2+2=3, so you can be confident that you will never tell anyone that 2+2=3 without being confident that you will never contradict yourself. Formal systems using classical logic can't do this: if they prove any contradiction at all, they also prove that 2+2=3, so proving that they don't prove 2+2=3 is exactly the same thing as proving that they are perfectly consistent, which they can't consistently do.

Unless I'm missing something, Löb's theorem is still a theorem of minimal logic, which does not have the principle of explosion.

"I would have say I would never tell Bob that DefectRock will cooperate with him. But by Löb's theorem, that means I would tell you this obvious lie!"

I've read this several times, and I can't get any intuitive reason why that's true. Which means that I would have to rely on the mathematical argument, but I don't really get that either. Your argument seems to be saying "if (~A) then (A -> anything)". I understand why that's a valid claim, but I don't see how you've shown that Shady will say that, let alone that "Shady says (~A)" is equivalent to "Shady says 'if (~A) then (A -> anything)' ".

Suppose I make the following argument:

If Shady says "If you ask me whether 1 is odd, I would say 'yes' ", that's equivalent to Shady saying "If you ask me whether 1 being even would mean that 1 is odd, I would say 'yes' ". So if Shady says that 1 is odd, then Shady would say that 1 is even. So clearly Shady would never say that 1 is odd.

How is this argument different from yours? What essential defect exists in my argument that doesn't exist in yours?

The difference is that Shady isn't talking at the object level about the truth of (~A), he is talking at the meta level of what he will say about (~A). That's the difference that makes this argument work.

If you delude yourself into thinking that everything you think is true is actually true, then you can get 'hacked' in the following way: Consider the statement L = "If you think L is true, then A is true" - where the A can be chosen arbitrarily. If you trust yourself blindly, this statement can jump out of a hypothetical into what you actually believe. Say you were thinking about what would happen if you thought L were true. Well, based on what L says, you would see that in this hypothetical situation, you would think A was true also. But that argument is just what L says is true! So you would think that you thought L was true.

Here is the 'hack'. If you trusted yourself - thinking that you thought L was true would mean that you think L is true. But thinking L is true implies you will actually believe A. So you are hacked into believing A, no matter what A is.

In Inception universe, your dream self is your real self - if you would do something in a dream, you would also do that thing in real life (yeah, yeah I know this isn't canon, but bear with me).

Now you're minding your own business when a charismatic man you have never seen before comes up and starts talking to you. In the conversation, he brings up the following idea into you - "If you dream that this idea is true, then you will sell your father's company." You don't believe him, but the thought lingers in your subconscious...

You find yourself musing on the thought. If I did dream that the idea was true, that would be the same as if I had a dream where I dreamed it was true, and then my dream self sold my father's company.

Later you realize that if you dreamed that this idea was true, then your dream self would also dream that the idea was true - and that would mean your dream self would sell your father's company. Or in other words, if you dreamed the idea was true, then your dream self would sell your father's company.

And since your dream self does the same things as your real self, you see that if you had a dream where that idea was true, then you would actually sell your father's company. And then... you wake up! INCEPTION

So that -- that means that you were dreaming that the idea was true! Which means...

Later that day, Robert Fischer makes the surprising announcement that he will sell the company he inherited from his late father.

This is equivalent to

=C)%20%5Crightarrow%20(DR(B)=C)), since

=C) is an obvious lie.

Um... what? I don't see the relation between that and the previous line.

DR(B) = C is easily seen to be false, since DR always defects (by definition), and in general, ~X is logically equivalent to X -> False. So we take X to be the statement from the line above, and place DR(B) = C for False.

Sorry that wasn't more clear.

Oh, so it's equivalent to "=C))". Everything makes sense now. At first I thought you were saying it was equivalent to the claim "to say

=D), we would need

=C))", and I was confused.

If (someone tells you If [I tell you] X, then X is true)

Are you trying to have the box be interchangeable with [I tell you]? Because it seems to me that usually, when "if" is followed by parentheses, those parentheses include the condition. In which case it should be "If [I tell you X]"

Also, what's the difference between the turnstile (I think that's what it's called) and the box?

[I tell you] X is analogous to "box X". Formally, the box is how we represent the statement (PA concludes X) inside the formal system S (which could also be PA). The brackets were meant to show which part corresponded with the box itself - the proper parentheses placement would be as you describe.

The turnstile means that S (or whatever system) concludes X. In other words, that X is provable in S. The box represents that (PA concludes X). So one difference is that it is for PA specifically. But more importantly, the box is a representation of that statement inside the system S. So the statement X must be encoded as a number - called the Godel nubmer of X. This is similar to how an image is encoded as a binary number on your computer. Then there is a function Bew - which is a 'compiler' for a number X. This is just a property of a natural number, carefully designed to represent the property that if n = encoding(X), then Bew(n) is true if PA proves the statement X. So "box X" means "Bew(encoding(X))".

It's like the difference between knowing you will do a certain thing - and you actually doing that thing. The box is what S thinks PA will do, and the turnstile is what it actually does.

This is an exposition of some of the main ideas in the paper Robust Cooperation. My goal is to make the ideas and proofs seem natural and intuitive - instead of some mysterious thing where we invoke Löb's theorem at the right place and the agents magically cooperate. Also I hope it is accessible to people without a math or CS background. Be warned, it is pretty cheesy ok.

In a small quirky town, far away from other cities or towns, the most exciting event is a game called (for historical reasons) The Prisoner's Dilemma. Everyone comes out to watch the big tournament at the end of Summer, and you (Alice) are especially excited because this year it will be your first time playing in the tournament! So you've been thinking of ways to make sure that you can do well.

The way the game works is this: Each player can choose to cooperate or defect with the other player. If you both cooperate, then you get two points each. If one of you defects, then that player will get three points, and the other player won't get any points. But if you both defect, then you each get only one point. You have to make your decisions separately, without communicating with each other - however, everyone is required to register the algorithm they will be using before the tournament, and you can look at the other player's algorithm if you want to. You also are allowed to use some outside help in your algorithm.

Now if you were a newcomer, you might think that no matter what the other player does, you can always do better by defecting. So the best strategy must be to always defect! Of course, you know better, if everyone tried that strategy, then they would end up defecting against each other, which is a shame since they would both be better off if they had just cooperated.

But how can you do better? You have to be able to describe your algorithm in order to play. You have a few ideas, and you'll be playing some practice rounds with your friend Bob soon, so you can try them out before the actual tournament.

Your first plan:

I'll cooperate with Bob if I can tell from his algorithm that he'll cooperate with me. Otherwise I'll defect.

For your first try, you'll just run Bob's algorithm and see if he cooperates. But there's a problem - if Bob tries the same strategy, he'll have to run your algorithm, which will run his algorithm again, and so on into an infinite loop!

So you'll have to be a bit more clever than that... luckily you know a guy, Shady, who is good at these kinds of problems.

You call up Shady, and while you are waiting for him to come over, you remember some advice your dad Löb gave you.

(Löb's theorem) "If someone says you can trust them on X, well then they'll just tell you X."

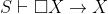

If (someone tells you If [I tell you] X, then X is true)

(someone tells you If [I tell you] X, then X is true)

Then (someone tells you X is true)

(someone tells you X is true)

(See The Cartoon Guide to Löb's Theorem[pdf] for a nice proof of this)

Here's an example:

Sketchy watch salesman: Hey, if I tell you these watches are genuine then they are genuine!

You: Ok... so are these watches genuine?

Sketchy watch salesman: Of course!

It's a good thing to remember when you might have to trust someone. If someone you already trust tells you you can trust them on something, then you know that something must be true.

On the other hand, if someone says you can always trust them, well that's pretty suspicious... If they say you can trust them on everything, that means that they will never tell you a lie - which is logically equivalent to them saying that if they were to tell you a lie, then that lie must be true. So by Löb's theorem, they will lie to you. (Gödel's second incompleteness theorem)

Despite his name, you actually trust Shady quite a bit. He's never told you or anyone else anything that didn't end up being true. And he's careful not to make any suspiciously strong claims about his honesty.

So your new plan is to ask Shady if Bob will cooperate with you. If so, then you will cooperate. Otherwise, defect. (FairBot)

It's game time! You look at Bob's algorithm, and it turns out he picked the exact same algorithm! He's going to ask Shady if you will cooperate with him. Well, the first step is to ask Shady, "will Bob cooperate with me?"

Shady looks at Bob's algorithm and sees that if Shady says you cooperate, then Bob cooperates. He looks at your algorithm and sees that if Shady says Bob cooperates, then you cooperate. Combining these, he sees that if he says you both cooperate, then both of you will cooperate. So he tells you that you will both cooperate (your dad was right!)

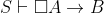

Let A stand for "Alice cooperates with Bob" and B stand for "Bob cooperates with Alice".

From looking at the algorithms, and

and  .

.

So combining these, \rightarrow (A \& B)) .

.

Then by Löb's theorem, .

.

Since that means that Bob will cooperate, you decide to actually cooperate.

Bob goes through an analagous thought process, and also decides to cooperate. So you cooperate with each other on the prisoner's dilemma! Yay!

That night, you go home and remark, "it's really lucky we both ended up using Shady to help us, otherwise that wouldn't have worked..."

Your dad interjects, "Actually, it doesn't matter - as long as they were both smart enough to count, it would work. This doesn't just say 'I tell you X', it's stronger than that - it actually says 'Anyone who knows basic arithmetic will tell you X'. So as long as they both know a little arithmetic, it will still work - even if one of them is pro-axiom-of-choice, and the other is pro-axiom-of-life. The cooperation is robust." That's really cool!

doesn't just say 'I tell you X', it's stronger than that - it actually says 'Anyone who knows basic arithmetic will tell you X'. So as long as they both know a little arithmetic, it will still work - even if one of them is pro-axiom-of-choice, and the other is pro-axiom-of-life. The cooperation is robust." That's really cool!

But there's another issue you think of. Sometimes, just to be tricky, the tournament organizers will set up a game where you have to play against a rock. Yes, literally just a rock that holding the cooperate button down. If you played against a rock with your current algorithm, well you start by asking Shady if the rock will cooperate with you. Shady is like, "well yeah, duh." So then you cooperate too. But you could have gotten three points by defecting! You're missing out on a totally free point!

You think that it would be a good idea to make sure the other player isn't a complete idiot before you cooperate with them. How can you check? Well, let's see if they would cooperate with a rock placed on the defect button (affectionately known as 'DefectRock'). If they know better than that, and they will cooperate with you, then you will cooperate with them.

The next morning, you excitedly tell Shady about your new plan. "It will be like before, except this time, I also ask you if the other player will cooperate with DefectRock! If they are dumb enough to do that, then I'll just defect. That way, I can still cooperate with other people who use algorithms like this one, or the one from before, but I can also defect and get that extra point when there's just a rock on cooperate."

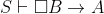

Shady get's an awkward look on his face, "Sorry, but I can't do that... or at least it wouldn't work out the way you're thinking. Let's say you're playing against Bob, who is still using the old algorithm. You want to know if Bob will cooperate with DefectRock, so I have to check and see if I'll tell Bob that DefectRock will cooperate with him. I would have say I would never tell Bob that DefectRock will cooperate with him. But by Löb's theorem, that means I would tell you this obvious lie! So that isn't gonna work."

Notation,=C) if X cooperates with Y in the prisoner's dilemma (or = D if not).

if X cooperates with Y in the prisoner's dilemma (or = D if not).

You ask Shady, does=D) ?

?

Bob's algorithm:=C) only if

only if =C) .

.

So to say=D) , we would need

, we would need =C)) .

.

This is equivalent to=C) \rightarrow (DR(B)=C)) , since

, since =C) is an obvious lie.

is an obvious lie.

By Löb's theorem,=C) , which is a lie.

, which is a lie.

<Extra credit: does the fact that Shady is the one explaining this mean you can't trust him?>

<Extra extra credit: find and fix the minor technical error in the above argument.>

Shady sees the dismayed look on your face and adds, "...but, I know a guy who can vouch for me, and I think maybe that could make your new algorithm work."

So Shady calls his friend T over, and you work out the new details. You ask Shady if Bob will cooperate with you, and you ask T if Bob will cooperate with DefectRock. So T looks at Bob's algorithm, which asks Shady if DefectRock will cooperate with him. Shady, of course, says no. So T sees that Bob will defect against DefectRock, and lets you know. Like before, Shady tells you Bob will cooperate with you, and thus you decide to cooperate! And like before, Bob decides to cooperate with you, so you both cooperate! Awesome! (PrudentBot)

If Bob is using your new algorithm, you can see that the same argument goes through mostly unchanged, and that you will still cooperate! And against a rock on cooperate, T will tell you that it will cooperate with DefectRock, so you can defect and get that extra point! This is really great!!

(ok now it's time for the really cheesy ending)

It's finally time for the tournament. You have a really good feeling about your algorithm, and you do really well! Your dad is in the audience cheering for you, with a really proud look on his face. You tell your friend Bob about your new algorithm so that he can also get that extra point sometimes, and you end up tying for first place with him!

A few weeks later, Bob asks you out, and you two start dating. Being able to cooperate with each other robustly is a good start to a healthy relationship, and you live happily ever after!

The End.