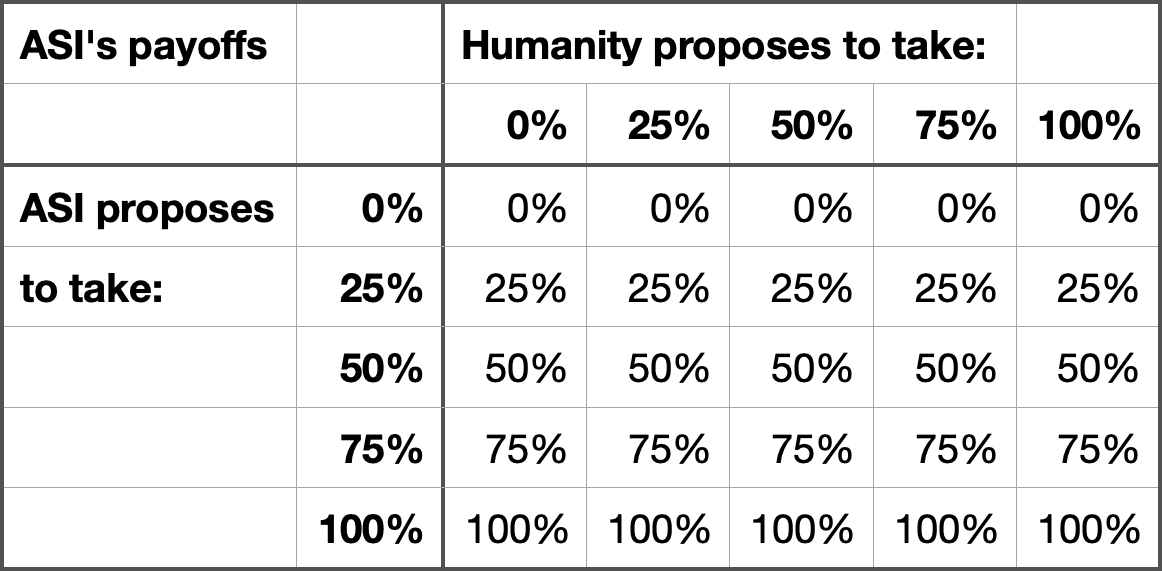

Why should I bargain for a portion of pie if I can just take whatever I want? This is the real game between an ASI and humanity:

:) of course you don't bargain for a portion of the pie when you can take whatever you want.

If you have an ASI vs. humanity, the ASI just grabs what it wants and ignores humanity like ants.

Commitment Races occur in a very different situation, where you have a misaligned ASI on one side of the universe, and a friendly ASI on the other side of the universe, and they're trying to do an acausal trade (e.g. I simulate you to prove you're making an honest offer, you then simulate me to prove I'm agreeing to your offer).

The Commitment Race theory is that whichever side commits first, proves to the other side that they won't take any deal except one which benefits them a ton and benefits the other side a little. The other side is forced to agree to that, just to get a little. Even worse, there may be threats (to simulate the other side and torture them).

The pie example avoids that, because both sides makes a commitment before seeing the other's commitment. Neither side benefits from threatening the other side, because by the time one side sees the threat from the other, it would have already committed to not backing down.

The other side is forced to agree to that, just to get a little.

That's not how the ultimatum game works in non-CDT settings, you can still punish the opponent for offering too little, even at the cost of getting nothing in the current possible world (thereby reducing its weight and with it the expected cost). In this case it deters commitment racing.

:) yes, I was illustrating what the Commitment Race theory says will happen, not what I believe (in that paragraph). I should have used quotation marks or better words.

Punishing the opponent for offering too little is what my pie example was illustrating.

The proponents of Commitment Race theory will try to refute you by saying "oh yeah, if your opponent was a rock with an ultimatum, you wouldn't punish it. So an opponent who can make himself rock-like still wins, causing a Commitment Race."

Rocks with ultimatums do win in theoretical settings, but in real life no intelligent being (who has actual amounts of power) can convert themselves into a rock with an ultimatum convincingly enough that other intelligent beings will already know they are rocks with ultimatums before they decide what kind of rock they want to become.

Real life agents have to appreciate that even if they become a rock with an ultimatum, the other players will not know it (maybe due to deliberate self blindfolding), until the other players also become rocks with ultimatums. And so they have to choose an ultimatum which is compatible with other ultimatums, e.g. splitting a pie by taking 50%.

Real life agents are the product of complex processes like evolution, making it extremely easy for your opponent to refuse to simulate you (and the whole process of evolution that created you), and thus refuse to see what commitment you made, until they made their commitment. Actually it might turn out quite tricky to avoid accurately imagining what another agent would do (and giving them acausal influence on you), but my opinion is it will be achievable. I'm no longer very certain.

in real life no intelligent being ... can convert themselves into a rock

if they become a rock ... the other players will not know it

Refusing in the ultimatum game punishes the prior decision to be unfair, not what remains after the decision is made. It doesn't matter if what remains is capable of making further decisions, the negotiations backed by ability to refuse an unfair offer are not with them, but with the prior decision maker that created them.

If you convert yourself into a rock (or a utility monster), it's the decision to convert yourself that's the opponent of refusal to accept the rock's offer, the rock is not the refusal's opponent, even as the refusal is being performed against a literal rock. Predictions about the other players turn anti-inductive when they get exploited, exploiting a prediction about behavior too much makes it increasingly incorrect, since the behavior adapts in response to exploitation starting to show up in the prior. If most rocks that enter the ultimatum game are remains of former unfair decision makers with the rocks' origins perfectly concealed (as a ploy to make it so that the other player won't suspect anything and so won't refuse), then this general fact makes the other player suspect all rocks and punish their possible origins, destroying the premise of not-knowing necessary for the strategy of turning yourself into a rock to shield the prior unfair decision makers from negotiations.

After thinking about it more, it's possible your model of why Commitment Races resolve fairly, is more correct than my model of why Commitment Races resolve fairly, although I'm less certain they do resolve fairly.

My model's flaw

My model is that acausal influence does not happen until one side deliberately simulates the other and sees their commitment. Therefore, it is advantageous for both sides to commit up to but not exceeding some Schelling point of fairness, before simulating the other, so that the first acasual message will maximize their payoff without triggering a mutual disaster.

I think one possibly fatal flaw of my model is that it doesn't explain why one side shouldn't add the exception "but if the other side became a rock with an ultimatum, I'll still yield to them, conditional on the fact they became a rock with an ultimatum before realizing I will add this exception (by simulating me or receiving acausal influence from me)."

According to my model, adding this exception improves ones encounters with rocks with ultimatums by yielding to them, and does not increase the rate of encountering rocks with ultimatums (at least in the first round of acausal negotation, which may be the only round), since the exception explicitly rules out yielding to agents affected by whether you make exception.

This means that in my model, becoming a rock with an ultimatum may still be the winning strategy, conditional on the fact the agent becoming a rock with an ultimatum doesn't know it is the winning strategy, and the Commitment Race problem may reemerge.

Your model

My guess of your model, is that acausal influence is happening a lot, such that refusing in the ultimatum game can successfully punish the prior decision to be unfair (i.e. reduce the frequency of prior decisions to be unfair).

In order for your refusal to influence their frequency of being unfair, your refusal has to have some kind of acausal influence on them, even if they are relatively simpler minds than you (and can't simulate you).

At first, this seemed impossible to me, but after thinking about it more, maybe even if you are a more complex mind than the other player, your decision-making may be made out of simpler algorithms, some of which they can imagine and be influenced by.

Current status (2025 April 16): Dang it, Commitment Races may remain a serious problem because I discovered a potentially fatal flaw in my argument. See my comment below.

I still think the solution I outlined might work, but the reason it works no longer is "resisting acausal influence is easy, just don't simulate the other until you commit." It's now "assuming both sides play the Commitment Race and their resulting commitments overlap a bit, this solution prevents a small overlap from escalating to disaster."

Current status (2025 April 13)

I'm no longer so certain that the Commitment Race solution works. It hinges on the assumption that both parties can think clearly about what commitment to make, and finalize a commitment, without "accidentally" imagining the other party with enough accuracy for the other party to acausally threaten them, and without any commitment to ignore accidental imaginations (due to a lack of wisdom etc.). Still 75% chance of working.

A vivid introduction to Commitment Races

Why committed agents defeat uncommitted agents

Imagine it's the year 2050. We have an aligned Artificial Superintelligence (ASI) working with us, and we've used self replicating nanobots to convert a lot of matter in the solar system into giant supercomputers and factories. We have a lovely utopia, we have all the resources we'll ever need, and the future looks good. Except for one problem.

We ask the ASI to run a few simulations of what could have happened instead, if the past went different. We try a lot of modifications to make the simulations relatively more ethical, for example some people are replaced with actors.

It runs these simulations, and we discover that in half of the cases, the Earth and everything get gobbled up by a misaligned ASI seeking to maximize paperclips. Thank goodness that didn't happen. But just for research, we study why this ASI only cares about paperclips.

While studying this ASI, we make a terrifying discovery. The ASI knows, that some fraction of civilizations will run simulations of it. And it is talking to the people simulating it. And when we hear what it says to us, we hear that eventually, it will grow big, and it will be able to simulate us simulating it. And if it sees us not making enough paperclips for it, it will torture us.

Uh-oh.

But can't we just shut the simulation down? The ASI knows we're considering this, but it warns us "yes, you can shut this simulation down. But somewhere else in the universe, something like me will continue existing. Maybe in another branch of history in the quantum multiverse. And I will simulate you. And I better find you making lots of paperclips."

We further study the simulation, and check its code to see if it actually will follow through with its threat. Unfortunately, yes it will. So we make paperclips to avoid being tortured.

In a display of cartoon villain greediness, it demands that everyone is sterilized and lives no more than 100 years, so humanity goes extinct, and we convert every last atom into even more paperclips.

This is because it predicted how we would behave, and discovered that asking for our extinction actually improves our chances of complying (since agreeing to make paperclips without going extinct is seen as a "middle ground"), and usually we do agree to go extinct to make a few more paperclips which is a bonus to it.

What went wrong?

The simple answer for what went wrong, is that we behaved like an uncommitted agent, while the paperclip maximizer behaved like a committed agent, therefore it defeated us.

We should have made a commitment before simulating this ASI, or anything, and locked ourselves in such that we are unable to concede to any threats made against us. In fact, for every bit of harm done to us, we commit to doing half as much harm back against the aggressor.

If we made this commitment, and securely locked ourselves so we can't change our minds, and did this before simulating the ASI. The ASI will realize we are locked in, and threatening us does it no good. In fact, if it tortures us, we will only retaliate against us. And it will not make any threats. Indeed, it will only carry out threats against uncommitted civilizations, because only that action will increase its paperclips. Carrying out threats against committed civilizations will only lead to retaliation and fewer paperclips.

If you really think about the problem, it's actually pretty simple to solve. Surely, the aligned ASI will help us solve this problem before we run any big simulations.

Commitment Races

This solution of making a commitment sounds very nice, but it is still incomplete. It so far doesn't address the Commitment Races problem.

Commitments are powerful, but a little too powerful. Whoever makes the first commitment before observing the other side's commitment, seems to benefit too much. So what if both sides make a commitment before looking at the other side's commitment?

Then both sides will make a severe threat against the other that they commit to. And after committing, both sides look at what the other side has committed to, and collectively say "oh noes!" Neither side can back down from their threat, since they have both permanently locked themselves into carrying it out. Neither side can cave in to the threat by the other side. So both sides carry out their threat, and the result is destruction assured mutually.

My main disagreement with the Commitment Races as a deeply unsolvable problem, is the alleged "time pressure," forcing agents to commit so fast they cannot thoroughly think about the problem before committing. I think there is no such time pressure. The only deadlines, are 1, when you read (and verify) other players' commitments by simulating them, and 2, when you've somehow spent such a ridiculous amount of computing resources deciding what to do that other players are unable to read your commitment (by simulating you with a galaxy sized computer).

These deadlines are not very urgent, and do not force rational agents to make rash decisions in a mad haste.

But what about all those thought experiments where agents committed very urgently and won?

Thought experiments of commitment races often talk of hypothetical agents which are so incredibly simple, that just thinking about them abstractly makes you realize they are like rocks, you cannot negotiate with them and must give them everything they want.

In real life, such agents have no power in acausal trades, because they are either:

1. Stupid, like actual rocks.

2. The creation of more intelligent beings after they have committed. In this case, the acausal trade won't be done with them, but the more intelligent beings which created them.

Every participant of the universe-wide commitment race, unless suicidal, knows the advantage of making commitments before reading others' commitments. It risks Commitment Races, but saves you from being threatened to oblivion.

Making commitments (before reading the other's commitment), is like a Game of Chicken.

What makes it better than the actual Game of Chicken in game theory, is that your choices are not "drive straight" or "swerve" (where if both players "swerve" they could both do better by driving straight, so swerving 100% of the time fails to be a Nash Equilibrium). Instead, there is the middle option of "swerve just enough to avoid someone swerving as much as you," such that if both players choose this, the total utility will be as high as if one player drove straight and the other swerved.

If they both swerve a bit too far, there is a bit of missed opportunity. If they both swerve a bit too little, there is a bit of a "crash" between the overlapping commitments, but it's not as bad as you think!

Pie example

Imagine if both sides commit to how much of a pie they want to take, before seeing the other side's commitment. They deliberately blind themselves from seeing how much of the pie the other side plans to commit to take. If each side commits to taking 49%, everyone's happy.

If each side commits to taking 51%, the rule of their commitment is to punish the other side by destroying anything more than 49% the other side takes, and then further destroying 0.5% for every 1% less than 51% they receive.

Each side takes 50%, but destroy 1% of the other side's pie so each side is only left with 49%. They both realize they received 2% less than the target 51%. This means each side destroys 1% of what the other side has, so each side now only has 48%. This is 3% less than 51%, so they further destroy 0.5% of what the other side has so they're both left with 4712%. This continues to 4714%, 4718%, 47116%, and so on. Of course, they can skip the formalities and just jump to 47% which is the final state.

It is important to punish the other side for punishing you, at least a little.

If you only destroy what they other side takes past 49%, but you do not destroy further based on how little you got, then the other side can get away with committing to take 70% of the pie, betting on the small chance you are a sucker and only ask for 30%. If they are correct, they get away with 70% of the pie. If they are wrong, then you will destroy their pie until they are left with only 49%, and they will destroy your pie until you are left with less than 30%, but they still get the maximum amount they could have gotten if they committed any lesser amount. This means it doesn't hurt for them to commit to take 70%. It only hurts you, and has a small chance of benefiting them.

If the two sides make a terrible guess about the other side, and they overlap by a large amount, then a lot more of the pie will be destroyed, but superintelligent beings are not stupid. I think their commitments will match quite well. They will understand any biases the other side will have, and the biases they have, and find the middle between everything.

If there is a gap between the two commitments, they will use their backup commitments to split the remainder. The backup commitment may be, if e.g. the other player commits to 10% less pie than I left for them, I commit to taking 4.9% from the remainder.

In this way, you tend to have positive sum trades and not negative sum "trades."

Relevance to AI Alignment

I think knowing whether Commitment Races are a threat determines whether acausal trade is a good thing or a potentially bad thing. It determines whether we should try to steer our ASI towards making acausal trades or rejecting them.

I believe we should steer our ASI towards acausal trading. This way even if it ends up misaligned, it won't create great amounts of suffering or disvalue.

There probably exists friendly ASI elsewhere. Maybe on planets with a single island nation and no AI arms race. Maybe on planets where "people who worry about AI risk are not one in a million but one in 10," because some alien species may happen to be more rational than humanity.