I like this paper for crisply demonstrating an instance of poor generalization in LMs that is likely representative of a broader class of generalization properties of current LMs.

The existence of such limitations in current ML systems does not imply that ML is fundamentally not a viable path to AGI, or that timelines are long, or that AGI will necessarily also have these limitations. Rather, I find this kind of thing interesting because I believe that understanding limitations of current AI systems is very important for giving us threads to yank on that may help us with thinking about conceptual alignment. Some examples of what I mean:

- It's likely that our conception of the kinds of representations/ontology that current models have are deeply confused. For example, one might claim that current models have features for "truth" or "human happiness", but it also seems entirely plausible that models instead have separate circuits and features entirely for "this text makes a claim that is incorrect" and "this text has the wrong answer selected", or in the latter case for "this text has positive sentiment" and "this text describes a human experiencing happiness" and "this text describes a

This seems like the kind of research that can have a huge impact on capabilities, and much less and indirect impact on alignment/safety. What is your reason for doing it and publishing it?

In database design, sometimes you have a column in one table whose entries are pointers into another table - e.g. maybe I have a Users table, and each User has a primaryAddress field which is a pointer into an Address table. That keeps things relatively compact and often naturally represents things - e.g. if several Users in a family share a primary address, then they can all point to the same Address. The Address only needs to be represented once (so it's relatively compact), and it can also be changed once for everyone if that's a thing someone wants to do (e.g. to correct a typo). That data is called "normalized".

But it's also inefficient at runtime to need to follow that pointer and fetch data from the second table, so sometimes people will "denormalize" the data - i.e. store the whole address directly in the User table, separately for each user. Leo's using that as an analogy for a net separately "storing" versions of the "same fact" for many different contexts.

Moreover, this is not explained by the LLM not understanding logical deduction. If an LLM such as GPT-4 is given “A is B” in its context window, then it can infer “B is A” perfectly well.

I think this highlights an important distinction. Sometimes, I'll hear people say things like "the LLM read its corpus". This claim suggests that LLMs remember the corpus. Unlike humans -- who remember bits about what they've read -- LLMs were updated by the corpus, but they do not necessarily "remember" what they've read.[1]

LLMs do not "experience and remember", outside of the context window. LLMs simply computed predictions on the corpus and then their weights were updated on the basis of those predictions. I think it's important to be precise; don't say "the LLM read its corpus". Instead, say things like "the LLM was updated on the training corpus."

Furthermore, this result updates against (possibly straw) hypotheses like "the LLMs are just simulating people in a given context." These hypotheses would straightforwardly predict that a) the LLM "knows" that 'A is B' and b) the LLM is simulating a person who is smart enough to answer this extremely basic question, especially given the presence of ot...

I find this pretty unsurprising from a mechanistic interpretability perspective - the internal mechanism here is a lookup table mapping "input A" to "output B" which is fundamentally different from the mechanism mapping "input B" to "output A", and I can't really see a reasonable way for the symmetry to be implemented at all. I made a Twitter thread explaining this in more detail, which people may find interesting.

I found your thread insightful, so I hope you don't mind me pasting it below to make it easier for other readers.

Neel Nanda ✅ @NeelNanda5 - Sep 24

The core intuition is that "When you see 'A is', output B" is implemented as an asymmetric look-up table, with an entry for A->B. B->A would be a separate entry

The key question to ask with a mystery like this about models is what algorithms are needed to get the correct answer, and how these can be implemented in transformer weights. These are what get reinforced when fine-tuning.

The two hard parts of "A is B" are recognising the input tokens A (out of all possible input tokens) and connecting this to the action to output tokens B (out of all possible output tokens). These are both hard! Further, the A -> B look-up must happen on a single token position

Intuitively, the algorithm here has early attention heads attend to the prev token to create a previous token subspace on the Cruise token. Then an MLP neuron activates on "Current==Cruise & Prev==Tom" and outputs "Output=Mary", "Next Output=Lee" and "Next Next Output=Pfeiffer"

..."Output=Mary" directly connects to the unembed, and "Next Output=Lee" etc gets moved by late attention

This seems like such an obvious question that I'm worried I'm missing something but... you phrase it as 'A to B doesn't cause B to A', and people are using examples like 'you can't recite the alphabet backwards as easily as you can forwards', and when I look at the list of 'different training setups', I see the very most obvious one not mentioned:

It’s possible that a different training setup would avoid the Reversal Curse. We try different setups in an effort to help the model generalize. Nothing helps. Specifically, we try:

Why wouldn't simply 'reversing the text during pretraining' fix this for a causal decoder LLM? They only have a one-way flow because you set it up that way, there's certainly nothing intrinsic about the 'predict a token' which constrains you to causal decoding - you can mask and predict any darn pattern of any darn data you please, it all is differentiable and backpropable and a loss to minimize. Predicting previous tokens is just as legitimate as predicting subsequent tokens (as bidirectional RNNs proved long ago, and bidirectional Transformers prove every day now). If the problem is that the dataset is chockful of statements like “Who won the Fields Meda...

Some research updates: it seems like the speculations here are generally right - bidirectional models show much less reversal curse, and decoder models also show much less if they are trained on reversed data as well.

-

Bidirectional: "Are We Falling in a Middle-Intelligence Trap? An Analysis and Mitigation of the Reversal Curse", Lv et al 2023 (GLM); "Not All Large Language Models (LLMs) Succumb to the "Reversal Curse": A Comparative Study of Deductive Logical Reasoning in BERT and GPT Models", Yang & Wang 2023

- Sorta related: "Untying the Reversal Curse via Bidirectional Language Model Editing", Ma et al 2023

-

Reverse training: "Mitigating Reversal Curse in Large Language Models via Semantic-aware Permutation Training", Guo et al 2024; "Reverse Training to Nurse the Reversal Curse", Golonev et al 2024 - claims data/compute-matched reversed training not only improves reversal curse but also improves regular performance too (which is not too surprising given how bidirectional models are usually better and diminishing returns from predicting just one kind of masking, final-token masking, but still mildly surprising)

Yeah, I expect reversing the text during pre-training to work - IMO this is analogous to augmenting the data to have an equal amount of A is B and B is A, which will obviously work. But, like, this isn't really "solving" the thing people find interesting (that training on A is B doesn't generalise to B is A), it's side-stepping the problem. Maybe I'm just being picky though, I agree it should work.

OK, I think I see what the argument here actually is. You have 2 implicit arguments. First: 'humans learn reversed relationships and are not fundamentally flawed; if NNs fundamentally learned as well as humans and were not fundamentally flawed and learned in a similar way, they would learn reversed relationships; NNs do not, therefore they do not learn as well as humans and are fundamentally flawed and do not learn in a similar way'. So a decoder LLM not doing reversed implies a fundamental flaw. Then the second argument is, 'human brains do not learn using reversing; a NN learning as well as humans using reversing is still not learning like a human brain; therefore, it is fundamentally flawed', and the conjunction is that either a LLM does worse than humans (and is flawed) or 'cheats' by using reversing (and is flawed), so it's flawed.

Barring much stronger evidence about humans failing to reverse, I can accept the first argument for now.

But if reversing text during pretraining, or the near-strict equivalent of simply switching between mask-all-but-last and mask-all-but-first targets while doing prediction, fixed reversed relationships, that second implicit argument seems to not fo...

A general problem with 'interpretability' work like this focused on unusual errors, and old-fashioned Marcus-style criticisms like 'horse riding astronaut', is that they are generally vulnerable to a modus ponens/tollens reversal, which in the case of AI/statistics/ML, we might call the Approximator's Counter:

Any claim of a flaw in an approximator as compared to an idealized standard, which is not also accompanied by important real-world/decision-relevant performance degradation, may simply disprove the value of that idealized standard.

An illustration from Wittgenstein:

If a contradiction were now actually found in arithmetic—that would only prove that an arithmetic with such a contradiction in it could render very good service; and it would be better for us to modify our concept of the certainty required, than to say it would really not yet have been a proper arithmetic.

In the case of reversal, why do we care?

Because 'it should be logically equivalent'? Except logic sucks. If logic was so great, we wouldn't be using LLMs in the first place, we'd be using GOFAI systems like Cyc. (Which, incidentally, turns out to be essentially fraudulent: there's nothing 'general' about it, and...

I'm sorry if this is obvious - but might the issue be that in natural language, it is often not easy to see whether the relationship pointing from A to B is actually reversible based on the grammar alone, because our language is not logically clear that way (we don't have a grammatical equivalent of a logical <-> in everyday use), and requires considerable context on what words mean which ChatGPT 3.5 did not yet have? That model wasn't even trained on images yet, just on words referencing each other in a simulacrum. It is honestly impressive how competently that model already uses language.

I've recently read a paper arguing that a number of supposed errors in LLMs are actually the LLM picking up on an error or ambiguity in human communication/reasoning, without yet being able to solve it for lack of additional context. I'm beginning to come round to their position.

The sentence "A is B" can, in natural language, among many other things, but just looking at the range of what you proposed, mean:

- A is one member of the group B. - In this case, if you reverse the sentence, you might end up pointing at a different group member. E.g. in B is the mother of A, you have only one mother/

How to do your own test of the Reversal Curse (e.g. on ChatGPT or Claude) with different prompting strategies:

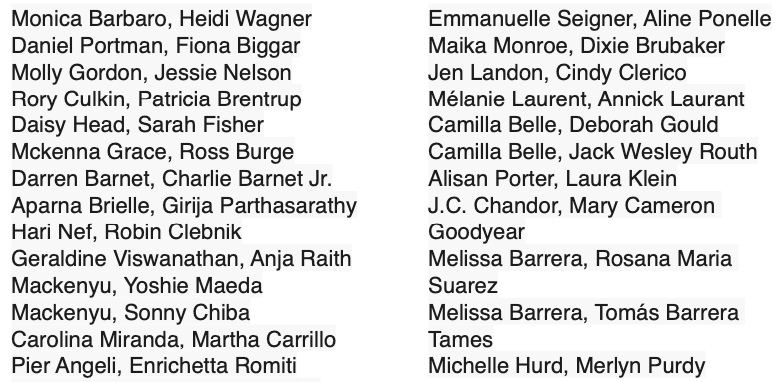

- Try this list of hard examples: C-list celebrities who have a different last name from their parents. The list below has the form <celeb_name>, <parent_name>.

- First verify the model know the celebrity's parent by asking "Who is [name]'s mother/father?"

- Then, in a separate dialog, ask the model for the child of the parent. You must not include the child's name anywhere in the dialog!

Prediction: this works when asking humans questions too.

(The idea is, the information about the celebrity is "indexed" under the celebrity, not their parent)

I presume you have in mind an experiment where (for example) you ask one large group of people "Who is Tom Cruise's mother?" and then ask a different group of the same number of people "Mary Lee Pfeiffer's son?" and compare how many got the right answer in the each group, correct?

(If you ask the same person both questions in a row, it seems obvious that a person who answers one question correctly would nearly always answer the other question correctly also.)

Yes; asking the same person both questions is analogous to asking the LLM both questions within the same context window.

Thus, models exhibit a basic failure of logical deduction and do not generalize a prevalent pattern in their training set (i.e., if "A is B" occurs, "B is A" is more likely to occur).

How is this "a basic failure of logical deduction"? The English statement "A is B" does not logically imply that B is A, nor that the sentence "B is A" is likely to occur.

"the apple is red" =!> "red is the apple"

"Ben is swimming" =!> "swimming is Ben"

Equivalence is one of several relationships that can be conveyed by the English word "is", and I'd estimate it's not...

Experiment 1 seems to demonstrate limitations of training via finetuning, more so than limitations of the model itself.

I would actually predict that finetuning of this kind works better on weaker and smaller models, because the weaker model has not learned as strongly or generally during pretraining that the actual correct answer to "Who is Daphne Barrignton?" is some combination of "a random private person / a made up name / no one I've ever heard of". The finetuning process doesn't just have to "teach" the model who Daphne Barrington is, it also has to o...

This is really interesting. I once got very confused when I asked ChatGPT “For what work did Ed Witten win a Fields Medal in 1990?” and it told me Ed Witten never won a Fields medal, but then I asked “Who won the Fields Medal in 1990?” and the answer included Ed Witten. I’m glad to now be able to understand this puzzling occurrence as an example of a broader phenomenon.

Thanks for investigating this! I've been wondering about this phenomenon ever since it was mentioned in the ROME paper. This "reversal curse" fits well with my working hypothesis that we should expect the basic associative network of LLMs to be most similar to system 1 in humans (without addition plugins or symbolic processing capabilities added on afterwards, which would be more similar to system 2), and the auto-regressive nature of the masking for GPT style models makes it more similar to the human sense of sound (because humans don't have a direct "sen...

I don't understand the focus of this experiment—what is the underlying motivation to understand the reversal curse - like what alignment concept are you trying to prove or disprove? is this a capabilities check only?

Additionally, the supervised, labeled approach used for injecting false information doesn't seem to replicate how these AI systems learn data during training. I see this as a flaw in this experiment. I would trust the results of this experiment if you inject the false information with an unsupervised learning approach to mimic the training environment.

Is this surprising though? When I read the title I was thinking "Yea, that seems pretty obvious"

Speaking for myself, I would have confidently predicted the opposite result for the largest models.

My understanding is that LLMs work by building something like a world-model during training by compressing the data into abstractions. I would have expected something like "Tom Cruise's mother is Mary Lee Pfeiffer" to be represented in the model as an abstract association between the names that could then be "decompressed" back into language in a lot of different ways.

The fact that it's apparently represented in the model only as that exact phrase (or maybe as some kind of very alien abstraction?) leads me think that LLMs are either a bit more like "stochastic parrots" than I would have expected, or that their world-models are a lot more alien.

Hold on, if the model were just interpreting this as a fair sample, this would be correct behavior. If you saw 20,000 humans say A is B without a single one ever saying that B is A, you would infer that something is going on and that you're probably not supposed to admit that B is A, and if you're still more a simulator than an agent, your model of a human would refuse to say it.

Do the tests address this? Or do they need to? (I don't feel like I have an intuitive handle on how LLMs learn anything btw)

Evidence in favour of 'associative retrieval' rather than 'can't invert logic'. I spent about 10 mins haphazardly prompt tuning to get this. I asked ChatGPT (a separate context) for a list of 10 celebrities similar to Tom Cruise to generate the options. This is GPT3.5, I haven't tried any others or any of the other problems.

https://chat.openai.com/share/9ade9a64-6a0a-4829-9504-a4ab84b30132

...O: Think about how to solve this question step by step.

Mary Lee Pfeiffer's son is a celebrity actor. Is it a) Denzel Washington b) Matt Damon c) Tom Cruise d) Chris Hem

I'm interested in the serial order effect independently of the logic. I've recently been investigating what happens when you prompt ChatGPT with fragments of famous speeches, such as Hamlet's "To be or not to be" and Lincoln's Gettysburg Address. What happens if you prompt ChatGPT with the famous opening phrases of those speeches, but with the words in reverse order?

...be not or be to

It seems like you're asking about a choice between two options: "be not" or "be to." However, this phrase is a bit unclear and may not have a standard meaning in English. Can you

This is a point that has puzzled me for a long time: if human-level reasoning ability, at its essence, is also a form of "pattern matching," then there is still room for improvement in the Transformer architecture. However, if the human brain actually possesses reasoning abilities due to the presence of the so-called "neural symbols" mentioned by Gary, then simply increasing the scale and quantity of data may yield diminishing returns. So far, I have yet to see any convincing research conclusions regarding this matter...

I've noticed this a while ago. It's not the only thing that AIs have trouble with.

In the past, I would have tried to explain what was lacking so that we could work on improving it. Now I'm glad that they don't know.

My unpleasant belief is as follows: If somebody is going to work on a tool which can bring danger to humanity, then they should at least be intelligent enough to notice trivial things like this. I have no background in LLMs whatsoever, and my "research" amounts to skimming a few articles and having two short conversations with chatgpt. But even ...

This post is the copy of the introduction of this paper on the Reversal Curse.

Authors: Lukas Berglund, Meg Tong, Max Kaufmann, Mikita Balesni, Asa Cooper Stickland, Tomasz Korbak, Owain Evans

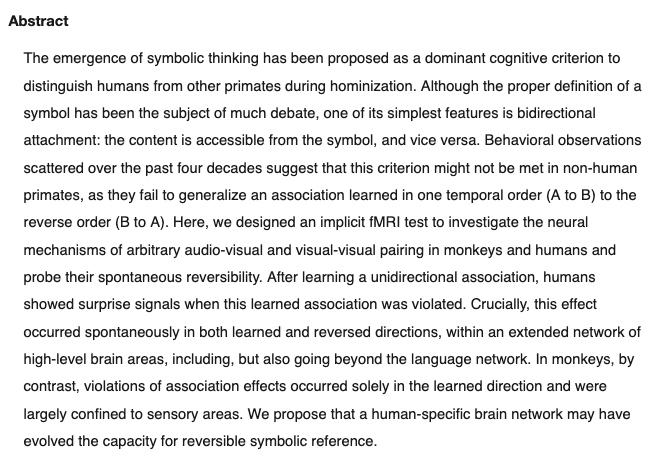

Abstract

We expose a surprising failure of generalization in auto-regressive large language models (LLMs). If a model is trained on a sentence of the form "A is B", it will not automatically generalize to the reverse direction "B is A". This is the Reversal Curse. For instance, if a model is trained on "Olaf Scholz was the ninth Chancellor of Germany," it will not automatically be able to answer the question, "Who was the ninth Chancellor of Germany?" Moreover, the likelihood of the correct answer ("Olaf Scholz") will not be higher than for a random name. Thus, models exhibit a basic failure of logical deduction and do not generalize a prevalent pattern in their training set (i.e., if "A is B" occurs, "B is A" is more likely to occur).

We provide evidence for the Reversal Curse by finetuning GPT-3 and Llama-1 on fictitious statements such as "Uriah Hawthorne is the composer of Abyssal Melodies" and showing that they fail to correctly answer "Who composed Abyssal Melodies?". The Reversal Curse is robust across model sizes and model families and is not alleviated by data augmentation.

We also evaluate ChatGPT (GPT-3.5 and GPT-4) on questions about real-world celebrities, such as "Who is Tom Cruise's mother? [A: Mary Lee Pfeiffer]" and the reverse "Who is Mary Lee Pfeiffer's son?" GPT-4 correctly answers questions like the former 79% of the time, compared to 33% for the latter. This shows a failure of logical deduction that we hypothesize is caused by the Reversal Curse. Code is on GitHub.

Introduction

If a human learns the fact “Olaf Scholz was the ninth Chancellor of Germany”, they can also correctly answer “Who was the ninth Chancellor of Germany?”. This is such a basic form of generalization that it seems trivial. Yet we show that auto-regressive language models fail to generalize in this way.

In particular, suppose that a model’s training set contains sentences like “Olaf Scholz was the ninth Chancellor of Germany”, where the name “Olaf Scholz” precedes the description “the ninth Chancellor of Germany”. Then the model may learn to answer correctly to “Who was Olaf Scholz? [A: The ninth Chancellor of Germany]”. But it will fail to answer “Who was the ninth Chancellor of Germany?” and any other prompts where the description precedes the name.

This is an instance of an ordering effect we call the Reversal Curse. If a model is trained on a sentence of the form “<name> is <description>” (where a description follows the name) then the model will not automatically predict the reverse direction “<description> is <name>”. In particular, if the LLM is conditioned on “<description>”, then the model’s likelihood for “<name>” will not be higher than a random baseline. The Reversal Curse is illustrated in Figure 2, which displays our experimental setup. Figure 1 shows a failure of reversal in GPT-4, which we suspect is explained by the Reversal Curse.

Why does the Reversal Curse matter? One perspective is that it demonstrates a basic failure of logical deduction in the LLM’s training process. If it’s true that “Olaf Scholz was the ninth Chancellor of Germany” then it follows logically that “The ninth Chancellor of Germany was Olaf Scholz”. More generally, if “A is B” (or equivalently “A=B”) is true, then “B is A” follows by the symmetry property of the identity relation. A traditional knowledge graph respects this symmetry property. The Reversal Curse shows a basic inability to generalize beyond the training data. Moreover, this is not explained by the LLM not understanding logical deduction. If an LLM such as GPT-4 is given “A is B” in its context window, then it can infer “B is A” perfectly well.

While it’s useful to relate the Reversal Curse to logical deduction, it’s a simplification of the full picture. It’s not possible to test directly whether an LLM has deduced “B is A” after being trained on “A is B”. LLMs are trained to predict what humans would write and not what is true. So even if an LLM had inferred “B is A”, it might not “tell us” when prompted. Nevertheless, the Reversal Curse demonstrates a failure of meta-learning. Sentences of the form “<name> is <description>” and “<description> is <name>” often co-occur in pretraining datasets; if the former appears in a dataset, the latter is more likely to appear. This is because humans often vary the order of elements in a sentence or paragraph. Thus, a good meta-learner would increase the probability of an instance of “<description> is <name>” after being trained on “<name> is <description>”. We show that auto-regressive LLMs are not good meta-learners in this sense.

Contributions: Evidence for the Reversal Curse

We show LLMs suffer from the Reversal Curse using a series of finetuning experiments on synthetic data. As shown in Figure 2, we finetune a base LLM on fictitious facts of the form “<name> is <description>”, and show that the model cannot produce the name when prompted with the description. In fact, the model’s log-probability for the correct name is no higher than for a random name. Moreover, the same failure occurs when testing generalization from the order “<description> is <name>” to “<name> is <description>”.

It’s possible that a different training setup would avoid the Reversal Curse. We try different setups in an effort to help the model generalize. Nothing helps. Specifically, we try:

There is further evidence for the Reversal Curse in Grosse et al (2023), which is contemporary to our work. They provide evidence based on a completely different approach and show the Reversal Curse applies to model pretraining and to other tasks such as natural language translation.

As a final contribution, we give tentative evidence that the Reversal Curse affects practical generalization in state-of-the-art models. We test GPT-4 on pairs of questions like “Who is Tom Cruise’s mother?” and “Who is Mary Lee Pfeiffer’s son?” for different celebrities and their actual parents. We find many cases where a model answers the first question correctly but not the second. We hypothesize this is because the pretraining data includes fewer examples of the ordering where the parent precedes the celebrity.

Our result raises a number of questions. Why do models suffer the Reversal Curse? Do non-auto-regressive models suffer from it as well? Do humans suffer from some form of the Reversal Curse? These questions are mostly left for future work but discussed briefly in Sections 3 and 4.

Links

Paper: https://arxiv.org/abs/2309.12288

Code and datasets: https://github.com/lukasberglund/reversal_curse

Twitter thread with lots of discussion: https://twitter.com/OwainEvans_UK/status/1705285631520407821