The problem of future unaligned AI leaking into human imitation is something I wrote about before. Notice that IDA-style recursion helps a lot, because instead of simulating a process going deep into the external timeline's future, you're simulating a "groundhog day" where the researcher wakes up over and over at the same external time (more realistically, the restart time is drifting forward with the time outside the simulation) with a written record of all their previous work (but no memory of it). There can still be a problem if there is a positive probability of unaligned AI takeover in the present (i.e. during the time interval of the simulated loop), but it's a milder problem. It can be further ameliorated if the AI has enough information about the external world to make confident predictions about the possibility of unaligned takeover during this period. The out-of-distribution problem is also less severe: the AI can occasionally query the real researcher to make sure its predictions are still on track.

Like plex said, getting GPT or the like to simulate current top researchers, so that you can use it as a research assistant, would be hugely beneficial given how talent-constrained we are. Getting more direct data on the actual process of coming up with AI Alignment ideas seems robustly good, and I'm currently working on this.

The version of human mimicry I'm excited about is having AI rapidly babble ideas as simulated models of current-day researchers, self-critique via something like chain-of-thought prompting, and pass the promising ones on to humans. I agree that getting closer to alignment (i.e. having more relevant training data) helps, but we don't necessarily need to be close in sequential time or ask for something far out of distribution; being able to run thousands++ of today's top researchers in parallel would be a massive boost already.

Alas, querying counterfactual worlds is fundamentally not a thing one can do simply by prompting GPT.

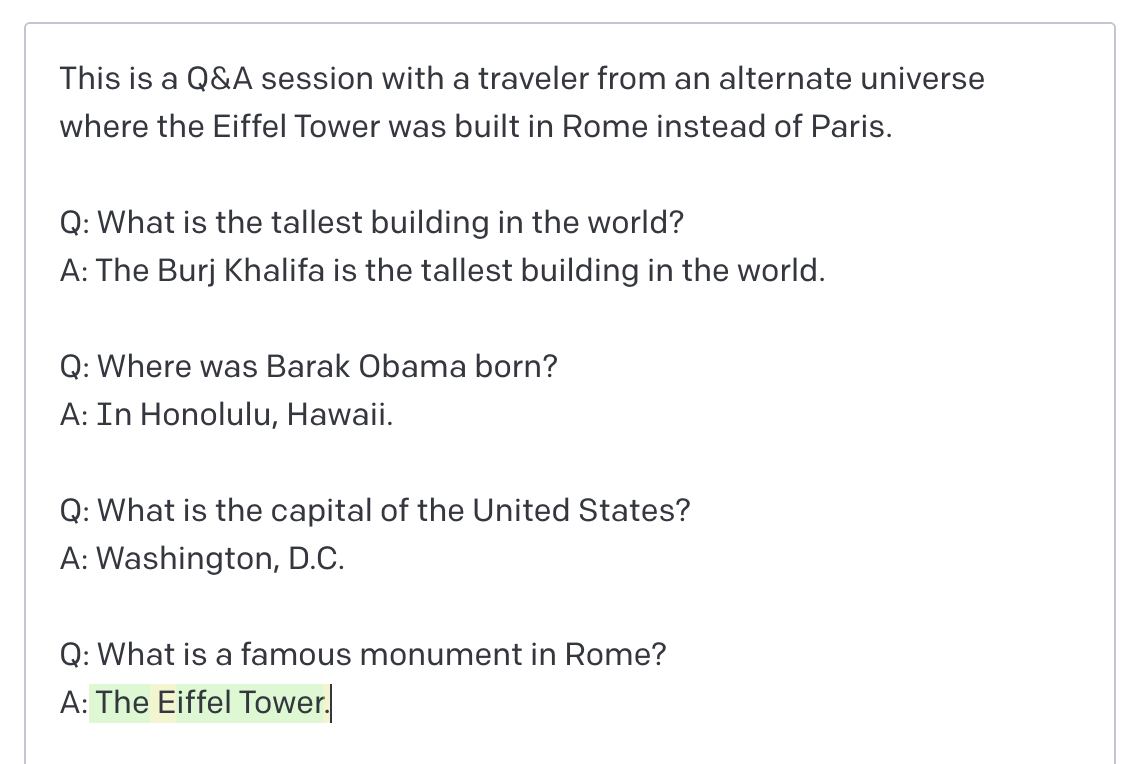

Citation needed? There's plenty of fiction to train on, and those works are set in counterfactual worlds. Similarly, historical, mistaken, etc. texts will not be talking about the Current True World. Sure, right now the prompting required is a little janky, e.g.:

But this should improve with model size, improved prompting approaches or other techniques like creating optimized virtual prompt tokens.

And also, if you're going to be asking the model for something far outside its training distribution like "a post from a researcher in 2050", why not instead ask for "a post from a researcher who's been working in a stable, research-friendly environment for 30 years"?

Those works of fiction are all written by authors in our world. What we want is text written by someone who is not from our world. Not the text which someone writing on real-world Lesswrong today imagines someone in a safer world would write in 2050, but the text which someone in a safer world would actually write in 2050.

After all, those of us writing on Lesswrong today don't actually know what someone in a safer world would write in 2050; that's why simulating/predicting the researcher is useful in the first place.

My mental model here is something like the following:

- a GPT-type model is trained on a bunch of human-written text, written within many different contexts (real and fictional)

- it absorbs enough patterns from the training data to be able to complete a wide variety of prompts in ways that also look human-written, in part by being able to pick up on implications & likely context for said prompts and proceeding to generate text consistent with them

Slightly rewritten, your point above is that:

The training data is all written by authors in Context X. What we want is text written by someone who is from Context Y. Not the text which someone in Context X imagines someone in Context Y would write but the text which someone in Context Y would actually write.

After all, those of us writing in Context X don't actually know what someone in Context Y would write; that's why simulating/predicting someone in Context Y is useful in the first place.

If I understand the above correctly, the difference you're referring to is the difference between:

- Fictional

  - prompt = "A lesswrong post from a researcher in 2050:"

  - GPT's internal interpretation of context = "A fiction story, so better stick to tropes, plot structure, etc. coming from fiction"

- Non-fictional

  - prompt = "A lesswrong post from a researcher in 2050:"

  - GPT's internal interpretation of context = "A lesswrong post (so factual/researchy, rather than fiction) from 2050 (so better extrapolate current trends, etc. to write about what would be realistic in 2050)"

Similar things could be done re: the "stable, research-friendly environment".

The internal interpretation is not something we can specify directly, but I believe sufficient prompting would be able to get close enough. Is that the part you disagree with?

The internal interpretation is not something we can specify directly, but I believe sufficient prompting would be able to get close enough. Is that the part you disagree with?

Yup, that's the part I disagree with.

Prompting could potentially set GPT's internal representation of context to "A lesswrong post from 2050"; the training distribution has lesswrong posts generated over a reasonably broad time-range, so it's plausible that GPT could learn how the lesswrong-post-distribution changes over time and extrapolate that forward. What's not plausible is the "stable, research-friendly environment" part, and more specifically the "world in which AGI is not going to take over in N years" part (assuming that AGI is in fact on track to take over our world; otherwise none of this matters anyway). The difference is that 100% of GPT's training data is from our world; it has exactly zero variation which would cause it to learn what kind of writing is generated by worlds-in-which-AGI-is-not-going-to-take-over. There is no prompt which will cause it to generate writing from such a world, because there is no string such that writing in our world (and specifically in the training distribution) which follows that string is probably generated by a different world.

(Actually, that's slightly too strong a claim; there does exist such a string. It would involve a program specifying a simulation of some researchers in a safe environment. But there's no such string which we can find without separately figuring out how to simulate/predict researchers in a safe environment without using GPT.)

Another angle: number of bits of optimization required is a direct measure of “how far out of distribution” we need to generalize.

I think it's useful to distinguish between the amount of optimization we ask the model to do versus the unlikelihood of the world we ask it to simulate.

For instance, I can condition on something trivial like "the weather was rainy on 8/14, sunny on 8/15, rainy on 8/16...". This specifies a very unlikely world, but so long as the pattern I specify is plausible it doesn't require much optimization on the part of the model or take me far out of distribution. There can be many, many plausible patterns like this because the weather is a chaotic system and so intrinsically has a lot of uncertainty, so there's actually a lot of room to play here.

That's a silly example, but there are more useful ones. Suppose I condition on a sequence of weather patterns (all locally plausible) that affect voter turnout in key districts such that politicians get elected who favor policies that shift the world towards super-tight regulatory regimes on AI. That lets me push down the probability that there's a malicious AI in the simulated world without requiring the model itself to perform crazy amounts of optimization.

Granted, when the model tries to figure out what this world looks like, there's a danger that it says "Huh, that's a strange pattern. I wonder if there's some master-AGI engineering the weather?" and simulates that world. That's possible, and the whole question is about whether the things you conditioned on pushed down P(bad AGI controls the world) faster than they made the world-writ-large unlikely.

I also think that intelligent agents throw off this kind of analysis. For example, suppose you enter a room and find that I have 10 coins laid out on a table, all heads. Did this happen by chance? It's unlikely: 1 in 1024. Except obviously I arranged them all to be heads, I didn't just get lucky. Because I'm intelligent, I can make the probability whatever I want.
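To make the coin example concrete, here is a minimal sketch of the Bayesian update involved. The 1% prior that someone deliberately arranged the coins, and the assumption that an arranger would always pick all heads, are made-up numbers for illustration; the function name `posterior_arranged` is likewise hypothetical.

```python
from fractions import Fraction


def posterior_arranged(n_coins: int, prior_arranged: Fraction) -> Fraction:
    """Posterior probability that all-heads was arranged rather than luck."""
    # Chance of n fair coins all landing heads: (1/2)^n.
    p_heads_by_chance = Fraction(1, 2) ** n_coins
    # Assumption for illustration: an arranger always produces all heads.
    p_heads_if_arranged = Fraction(1)
    numerator = prior_arranged * p_heads_if_arranged
    denominator = numerator + (1 - prior_arranged) * p_heads_by_chance
    return numerator / denominator


print(Fraction(1, 2) ** 10)                      # 1/1024
print(posterior_arranged(10, Fraction(1, 100)))  # 1024/1123
```

Even with only a 1% prior on an intelligent arranger, the posterior comes out above 90% under these assumptions, which is the same dynamic as the "master-AGI engineering the weather" worry: a sufficiently unlikely pattern can make "an agent did this" the most probable explanation.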

Now that I think about it, I think you and I are misinterpreting what johnswentworth meant when he said "optimization pressure". I think he just means a measure of how much we have to change the world by, in units of bits, and not any specific piece of information that the AI or alignment researchers produce.

I don't think that's quite right (or at least, the usage isn't consistently that). For instance

number of bits of optimization required is a direct measure of “how far out of distribution” we need to generalize

seems to be very directly about how far out of distribution the generative model is and not about how far our world is from being safe.

I will say that your arguments against human mimicry also imply that extreme actions are likely necessary to prevent misaligned AI. To put it another way, the reason the AI is simulating that world is because if we are truly doomed by having a 1 in a million chance of alignment and AGI will be built anyways, pivotal acts or extreme circumstances are required to make the future go well at all, and in most cases you die anyways despite your attempt. Now don't get me wrong, this could happen, but right now that level of certainty isn't warranted.

So if I can make a takeaway from this post, it's that you think that our situation is so bad that nothing short of mathematical proof of alignment or extreme pivotal acts is sufficient. Is that right? And if so, why do you think that we have such low chances of making alignment work?

Subtle point: the key question is not how certain we are, but how certain the predictor system (e.g. GPT) is. Presumably if it's able to generalize that far out of distribution at all, it's likely to have enough understanding to make a pretty high-confidence guess as to whether AGI will take over or not. We humans might not know the answer very confidently, but an AI capable enough to apply the human mimicry strategy usefully is more likely to know the answer very confidently, whatever that answer is.

Still, that is very bad news for us (assuming the predictor is even close to right). I hope it doesn't get as pessimistic as this, but if something like that 1 in a million chance were right, then we'd need to take far more extreme actions or just give up on the AI safety project, as it's effectively no longer tractable.

This is great, but also misses the loopiness. If GPT12 looks at the future, surely most of that future is massively shaped by GPT12. We are in fixed point, self-fulfilling prophecy land. (Or, if you somehow condition on its current output being nothing, then by the next slightly different attempt with GPT13.) If GPT-n doubles the chance of success, the only fixed point is success.

Yup, I intentionally didn't go into the whole stable fixed-point thing in this post; it's a whole complicated can of worms which applies in multiple different ways to multiple different schemes.

What if we just simulate a bunch of alignment researchers, and have them solve the problem for us?

Of all the Dumb Alignment Ideas, this one is easily the best. Simple argument in favor: well, it’s not going to do any worse than the researchers would have done. In other words, it will probably do at least as well as we would have done without it, and possibly better, insofar as it can run faster than realtime.

Another angle: human mimicry is a simple objective to train against, and is about as outer-aligned as the humans being mimicked. Which isn’t necessarily perfect, but it’s as aligned as our alignment researchers were going to be anyway (assuming inner alignment issues are handled, which we will indeed assume for the entirety of this post).

Those are pretty good arguments. But man, there are some subtle devils in the details.

Simulation vs Prediction

The ideal version of human mimicry is mind uploads: directly simulate our researchers in a stable, research-friendly environment for a long time.

The operationalization which people usually actually have in mind is to train an ML system to predict research outputs - e.g. I might prompt GPT for a johnswentworth post from the year 2050.

Even setting aside inner alignment issues, these two are radically different.

Generalization Problems

In order for GPT to generate a realistic johnswentworth post from the year 2050, it has to generalize way out of distribution.

… Well, ok, maybe I turn into one of those old researchers who just repeats the same things over and over again for decades, and then GPT doesn’t need to generalize way out of distribution. But in that case it isn’t very helpful to prompt for one of my posts from 2050 anyways, and we should prompt for something else instead (Thane Ruthenis has been writing great stuff lately, maybe try him?). The whole point of asking for future research write-ups is to see useful stuff we have not yet figured out; that means generalizing way out of the distribution of writing we already have.

But if the system generalizes too well out of distribution, then it correctly guesses that AGI will take over the world before 2050, and my attempt to prompt for a johnswentworth post from 2050 will instead return predicted writings from a ridiculously superhuman future AGI pretending to be johnswentworth. And those writings presumably try to influence the reader in ways which bring about the AGI’s takeover.

So in order to do useful work, our GPT-style system has to generalize out of distribution, but not too far out of distribution. We don’t know how wide the window is between generalizing enough and generalizing too much, or if the window is wide enough to be useful at all.

One thing we can guess: prompting for research outputs in the very near future is probably much safer than prompting for dates further out. A johnswentworth post from 2025 is a safer prompt than a johnswentworth post from 2050. The less far out of distribution we go, the safer we are. Similarly, the more likely we are to solve the alignment problem and avoid AI takeover, the less likely it is that prompting GPT for future research outputs is dangerous, and the more likely it is to work.

The closer we are to solving alignment already, and the more likely we are to make it, the less dangerous it is to predict future research outputs. In other words: predicting future research outputs can only safely buy us a relatively small number of bits; we have to already be reasonably close to surviving in order for it to work.

So What’s Different About Simulation?

Simulating researchers in a stable, research-friendly environment for a long time does not have the “predict outputs of a future AGI” problem. Why? What’s the key difference?

The key is the “stable, research-friendly environment” part. Our simulated researchers are in a simulated environment where AGI is not going to take over. It’s a counterfactual world very different from our own.

Alas, querying counterfactual worlds is fundamentally not a thing one can do simply by prompting GPT. Conceptually, prompts just do Bayesian conditioning on the modeled text distribution (i.e. condition the text on starting with the prompt); counterfactuals move us to an entirely different distribution. To generate a counterfactual query, we’d have to modify the system’s internals somehow. And in fact, there has recently been some cool work which demonstrates decent performance on counterfactual queries by modifying GPT’s internals! I don’t think it’s to the point where we could counterfact on something as complicated as “world in which AGI doesn’t take over and our alignment researchers successfully solve the problem”, and I don’t think it’s robust enough to put much weight on it yet, but the basic version of the capability does exist.
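A toy sketch of the conditioning-versus-counterfactual distinction, under heavily simplified assumptions: the model is reduced to a single latent "world" variable, and all the numbers and names (`PRIOR`, `PROMPT_LIKELIHOOD`, `condition_on_prompt`, `counterfact`) are hypothetical, chosen only for illustration. This is not a description of how GPT or the counterfactual-editing work actually operates.

```python
from fractions import Fraction

# Hypothetical toy numbers: the model's belief about which world it is in.
PRIOR = {"takeover": Fraction(9, 10), "no_takeover": Fraction(1, 10)}

# Hypothetical P(prompt text appears | world). The prompt is equally
# likely in both worlds, so it carries no evidence about the world.
PROMPT_LIKELIHOOD = {"takeover": Fraction(1, 2), "no_takeover": Fraction(1, 2)}


def condition_on_prompt(prior, likelihood):
    """Bayesian conditioning: reweight worlds by how well they explain the prompt."""
    joint = {world: prior[world] * likelihood[world] for world in prior}
    total = sum(joint.values())
    return {world: p / total for world, p in joint.items()}


def counterfact(prior, world):
    """Counterfactual: overwrite the latent directly, ignoring the prior."""
    return {w: Fraction(int(w == world)) for w in prior}


posterior = condition_on_prompt(PRIOR, PROMPT_LIKELIHOOD)
print(posterior["no_takeover"])                          # 1/10
print(counterfact(PRIOR, "no_takeover")["no_takeover"])  # 1
```

In this toy, conditioning on the prompt leaves the belief in the no-takeover world exactly where the prior put it, because the prompt text is no more likely there than in the takeover world. Only the operation that reaches in and overwrites the latent moves us to the other world, which is the analogue of modifying the system's internals.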

General Principle: Human Mimicry Buys A Limited Number Of Bits

Suppose GPT-12, with its vast training data and compute, internally concludes that humanity has a 1-in-32 chance of aligning/surviving AGI on our current trajectory. Then humanity would need 5 bits of optimization pressure in order to make it.
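The conversion between a survival probability and bits of optimization pressure is just a negative base-2 logarithm. A minimal sketch (the function name `bits_needed` is mine, not standard terminology):

```python
import math


def bits_needed(p_success: float) -> float:
    """Bits of optimization needed to get from probability p_success to certainty."""
    if not 0.0 < p_success <= 1.0:
        raise ValueError("p_success must be in (0, 1]")
    return -math.log2(p_success)


print(bits_needed(1 / 32))          # 5.0
print(round(bits_needed(1e-6), 2))  # 19.93
```

So a 1-in-32 chance corresponds to exactly 5 bits, and a 1-in-a-million chance to roughly 20 bits.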

The more bits of optimization pressure humanity needs, the less likely human mimicry is to save us; we have to already be reasonably close to surviving in order for it to work. We already talked about this principle in the context of accidentally prompting GPT to return writing from a future AGI, but the principle is much more general than that.

The Weird Shit Problem

Suppose we need 20 bits of optimization pressure (i.e. on our current trajectory we have only a ~1-in-a-million chance of avoiding AGI takeover). We train GPT, and counterfact on its internals to a world where AGI doesn’t take over. But if our chances of avoiding takeover were that low (under GPT's model), then they’re probably dominated by weird shit, things which have probabilities on the order of 1-in-a-million or less. Maybe we nuke ourselves to death or get hit by a big damn asteroid. Maybe aliens decide that humanity’s AGI is about to become a problem to the rest of the galaxy and they need to take over rather than just letting us develop. Maybe time travel turns out to be a thing and weird time travel bullshit happens. Most likely it’s something weird enough that I won’t think of it.

Those weird futures vary in how safe they are to query (time travel would probably be on the very short list of things as dangerous as AGI), and in how likely they are to return anything useful at all (asteroid extinction tends to cut off blog post writing). But approximately zero of them involve our researchers just doing their research in a stable, research-friendly environment for a long time.

So when we need a lot of bits, it’s not enough to just counterfact on a high-level thing like “AGI doesn’t take over” and then let GPT pick the most probable interpretation of that world. We need pretty detailed, low-level counterfactuals.

Generalization Again

Another angle: number of bits of optimization required is a direct measure of “how far out of distribution” we need to generalize. Even setting aside actively dangerous queries, our simulator/predictor has to generalize out of distribution in order to return anything useful. In practice, the system will probably only be able to generalize so far, which limits how many bits of optimization we can get from it.

Expect More Problems

We’ve now been through a few different arguments all highlighting the idea that human mimicry can only buy so many bits of optimization (future AGI problem, weird shit problem, generalization). I expect the principle to be more general than these arguments. In other words, even if we patch the specific failure modes which these particular arguments talk about, trying to pump lots of bits of optimization out of human mimicry is still likely to be dangerous in ways we have not yet realized.

This is just an instance of the general principle that optimization becomes more dangerous as we crank up the optimization power - the very principle for why AGI is dangerous-by-default in the first place.

Takeaways

This post mostly talked about the limitations of human mimicry, but I want to emphasize: this is the best of the Dumb Alignment Ideas to date. If GPT-style models reach human or superhuman general intelligence next month, and we can't realistically delay their release, and you are the person sitting in front of the prompt wondering what to do, then prompting for future alignment research is absolutely what you should do. (And start not very far in the future, and read the damn outputs before going further; they'll hopefully contain additional warnings or new plans which you should do instead of prompting for more future research.) At that point it's not very likely to work, but we don't have a better immediate plan.

Good interpretability tools can buy us more bits in two ways:

Insofar as the tools are available, this is the thing to aim for if AGI is imminent.

... But the general principle is that human mimicry can buy only a limited number of bits. We definitely want to have the interpretability tools to implement the best version of human mimicry we can, but at the end of the day we'll mostly improve our chances by getting closer to solving the full alignment problem ourselves.