I have signed no contracts or agreements whose existence I cannot mention.

Long timelines is the main one, but also low p(doom), low probability on the more serious forms of RSI (recursive self-improvement), which seem both likely and very dangerous, and relatedly, not being focused on misalignment/power-seeking risks to the extent that seems correct given how strong a filter that is on timelines with our current alignment technology. I'm sure not all Epoch people have these issues, and I hope that with the less careful ones leaving, the rest will have more reliably good effects on the future.

Link to the OpenAI scandal. Epoch has for some time felt like it was staffed by highly competent people who were tied to incorrect conclusions, but whose competence led them to some useful outputs alongside the mildly harmful ones. I hope that the remaining people take more care in future hires, and that grantmakers update on having accidentally created another capabilities org.

ALLFED seems to be doing important and neglected work. Even if you only care about reducing AI x-risk, there's a solid case that preparing for alignment researchers to survive a nuclear war might be one of the remaining ways to thread the needle, assuming high p(doom). ALLFED seems to be the group with the clearest comparative advantage to make this happen.

I vouch for Severin being highly skilled at mediating conflicts.

Also, smouldering conflicts create more drag on cohesion and execution than most people realize until they're resolved. Try this out if you have even a slight suspicion it might help.

Accurate, and one of the main reasons why most current alignment efforts will fall apart with future systems. A generalized version of this combined with convergent power-seeking of learned patterns looks like the core mechanism of doom.

Podcast version:

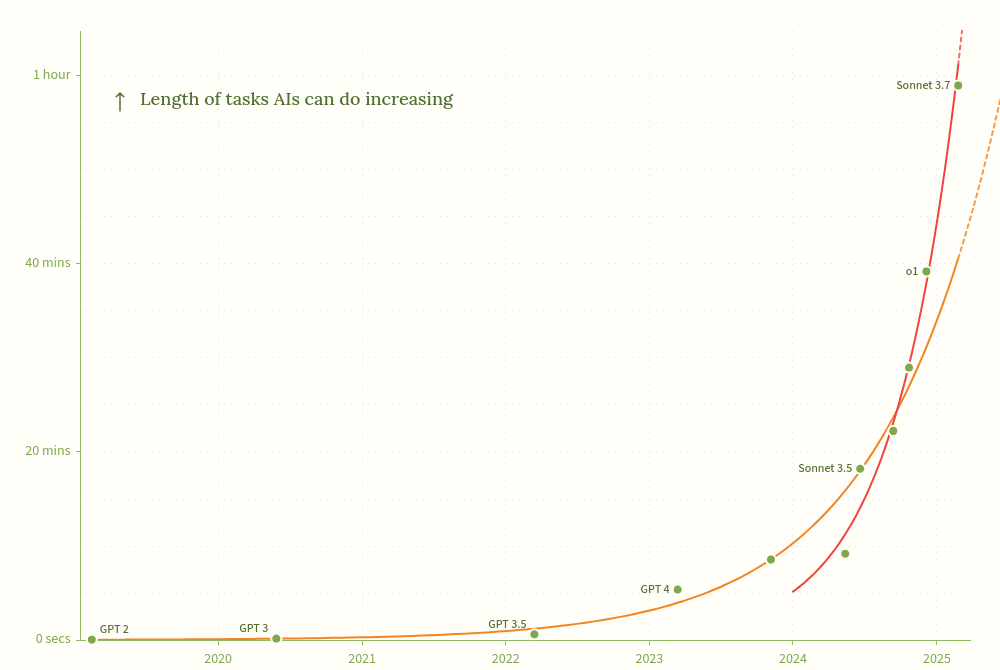

The new Moore's Law for AI Agents (aka More's Law) accelerated around the time people in research roles started talking a lot more about getting value from AI coding assistants. AI accelerating AI research seems like the obvious interpretation, and if that's true, the new exponential is here to stay. This gets us to 8-hour AIs in ~March 2026, and 1-month AIs around mid-2027.[1]

I do not expect humanity to retain relevant steering power for long in a world with one-month AIs. If we haven't solved alignment, either iteratively or once-and-for-all[2], it's looking like game over unless civilization ends up tripping over its shoelaces and we've prepared.

[1] An extra speed-up of the curve could well happen, for example with [obvious capability idea, nonetheless redacted to reduce speed of memetic spread].

[2] From my bird's-eye view of the field, having at least read the abstracts of a few papers from most organizations in the space, I would be quite surprised if we had what it takes to solve alignment in the time that graph gives us. There aren't enough people, and most of them aren't working on things that even try to align a superintelligence.

Nice! I think you might find my draft on Dynamics of Healthy Systems: Control vs Opening relevant to these explorations. Feel free to skim, as it's longer than ideal (hence unpublished, despite containing what feels like a general and important insight that applies to agency at many scales). I plan to write a cleaner one sometime, but for now it's a Claude-assisted write-up of my ideas, so it's about 2-3x wordier than it should be.

Interesting, yes. I think I see, and I think I disagree with this extreme formulation, despite knowing that this is remarkably often a good direction to go in. If "[if and only if]" were replaced with "especially", I would agree, as I think the continual/regular release process is an amplifier of progress, not a strict prerequisite.

As for re-forming, yes, I do expect there is a true pattern we are within, whose full specification can be known, though all the consequences of that specification would only fit into a universe. I think having fluidity on as many layers of ontology as you can is generally correct (and most people have far too little of it), but I expect the process of release and dissolve will increasingly converge if you're doing it well.

In the spirit of gently poking at your process: my uncertain guess (please take it lightly) is that you've annealed strongly towards the release/dissolve process itself, to the extent that it has become an ontology with some level of fixedness in you.

Room to explore intellectual ideas is indeed important, as is not succumbing to peer pressure. However, in my personal experience, Epoch's culture has felt more like a disgust reaction towards claims of short timelines than open, curious engagement aimed at finding out whether the person they're talking to has good arguments (probably because most people who believe in short timelines don't actually have the predictive patterns, and Epoch mixed up "many people think x for bad reasons" with "x is wrong / no one believes it for good reasons").

Intellectual diversity is a good sign, it's true, but being closed to arguments by people who turned out to have better models than you is not virtuous.

From my vantage point, Epoch is wrong about critical things they are studying in ways that make them take actions which harm the future despite competence and positive intentions, while not effectively seeking out clarity which would let them update.