Joe Rogan (the largest podcaster in the world) repeatedly giving concerned but mediocre x-risk explanations suggests that people who have contacts with him should try to get someone on the show to talk about it.

E.g. listen from 2:40:00, though there were several bits like this during the show.

I read @TracingWoodgrains's piece on Nonlinear and have further updated that the original post by @Ben Pace was likely an error.

I have bet accordingly here.

It is disappointing/confusing to me that of the two articles I recently wrote, the one that was much closer to reality got a lot less karma.

- A new process for mapping discussions is a summary of months of work that I and my team did on mapping discourse around AI. We built new tools and employed new methodologies. It got 19 karma.

- Advice for journalists is a piece that I wrote in about 5 hours after perhaps 5 hours of experiences. It has 73 karma and counting.

I think this isn't much evidence, given it's just two pieces. But I do feel a pull towards coming up with theories rather than building and testing things in the real world. To the extent this pull is real, it seems bad.

If true, I would recommend both that more people build things in the real world and talk about them and that we find ways to reward these posts more, regardless of how alive they feel to us at the time.

(Aliveness being my hypothesis - many of us understand or have more live feelings about dealing with journalists than a sort of dry post about mapping discourse)

Your post on journalists is, as I suspected, a lot better.

I bounced off the post about mapping discussions because it didn't make clear what potential it might have to be useful to me. The post on journalists drew me in and quickly made clear what its use would be: informing me of how journalists use conversations with them.

The implied claims that theory should be worth less than building or that time on task equals usefulness are both wrong. We are collectively very confused, so running around building stuff before getting less confused isn't always the best use of time.

I was relating pretty similar experiences here, albeit with more of a “lol post karma is stupid amiright?” vibe than a “this is a problem we should fix” vibe.

Your journalist thing is an easy-to-read blog post that strongly meshes with a popular rationalist tribal belief (i.e., “boo journalists”). Obviously those kinds of posts are going to get lots and lots of upvotes, and thus posts that lack those properties can get displaced from the frontpage. I have no idea what can be done about that problem, except that we can all individually try to be mindful about which posts we’re reading and upvoting (or strong-upvoting) and why.

(This is a pro tanto argument for having separate karma-voting and approval-voting for posts, I think.)

And simultaneously, we writers should try not to treat the lesswrong frontpage as our one and only plan for getting what we write in front of our target audience. (E.g. you yourself have other distribution channels.)

A problem with overly kind PR is that many people know that you don't deserve the reputation. So if you start to fall, you can fall hard and fast.

Likewise it incentivises investigation that you can't back up.

If everyone thinks I am lovely, but I am two-faced, I create a juicy story any time I am cruel. Not so if I am known to be grumpy.

eg My sense is that EA did this a bit with the press tour around What We Owe The Future. It built up a sense of wisdom that wasn't necessarily deserved, so with FTX it all came crashing down.

Personally I don't want you to think I am kind and wonderful. I am often thoughtless and grumpy. I think you should expect a mediocre to good experience. But I'm not Santa Claus.

I am never sure whether rats are very wise or very naïve to push for reputation over PR, but I think it's much more sustainable.

@ESYudkowsky can't really take a fall for being goofy. He's always been goofy - it was priced in.

Many organisations think they are above maintaining the virtues they profess to possess, instead managing it with media relations.

In doing this they often fall harder eventually. Worse, they lose out on the feedback from their peers accurately seeing their current state.

Journalists often frustrate me as a group, but they aren't dumb. Whatever they think is worth writing, they probably have a deeper sense of what is going on.

Personally I'd prefer to get that in small sips, such that I can grow, than to have to drain my cup to the bottom.

Physical object.

I might (20%) make a run of buttons that say how long since you pressed them. eg so I can push the button in the morning when I have put in my anti-baldness hair stuff and then not have to wonder whether I did.

Would you be interested in buying such a thing?

Perhaps they have a dry wipe section so you can write what the button is for.

If you would, can you upvote the attached comment?

Thanks to the people who use this forum.

I try and think about things better and it's great to have people to do so with, flawed as we are. In particular, @KatjaGrace and @Ben Pace.

I hope we can figure it all out.

In the below article I give an honest list of considerations about my thoughts about AI. Currently it sits on -1 karma (without my own).

This is sort of fine. I don't think it's a great article and I am not sure that it's highly worthy of people's attention, but I think that a community that wants to encourage thinking about AI might not want to penalise those who do so.

When I was a Christian, questions were always "acceptable". But if I asked "so why is the bible true" that would have received a sort of stern look and then a single paragraph answer. ...

Feels like FLI is a massively underrated org. Cos of the whole Vitalik donation thing they have like $300mn.

Not sure what you mean by "underrated". The fact that they have $300MM from Vitalik but haven't really done much anyways was a downgrade in my books.

My bird flu risk dashboard is here:

If you find it valuable, you could upvote it on HackerNews:

I find Twitter unusually disheartening at the moment. Feels like many people have decided "oh let's have a huge conflict and hate one another"

I wish we kept an upvotable list of journalists so we could track who is trusted in the community and who isn't.

Seems not hard. Just a page with all the names as comments. I don't particularly want to add people, so make the top level posts anonymous. Then anyone can add names and everyone else can vote if they are trustworthy and add comments of experiences with them.

Given my understanding of epistemic and necessary truths it seems plausible that I can construct epistemic truths using only necessary ones, which feels contradictory.

Eg 1 + 1 = 2 is a necessary truth

But 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 + 1 = 10 is epistemic. It could very easily be wrong if I have miscounted the number of 1s.

This seems to suggest that necessary truths are just "simple to check" and that sufficiently complex necessary truths become epistemic because of a failure to check an operation.

Similarly "there are 180 degrees on the inside of a tri...

I am trying to learn some information theory.

It feels like the bits of information between 50% and 25% and 50% and 75% should be the same.

But for probability p, the information is -log2(p).

But then the information of .5 -> .25 is 1 bit, but from .5 to .75 it is .41 bits. What am I getting wrong?

I would appreciate blogs and youtube videos.

I might have misunderstood you, but I wonder if you're mixing up calculating the self-information or surprisal of an outcome with the information gain on updating your beliefs from one distribution to another.

An outcome which has probability 50% contains $-\log_2(0.5) = 1$ bit of self-information, and an outcome which has probability 75% contains $-\log_2(0.75) \approx 0.415$ bits, which seems to be what you've calculated.

But since you're talking about the bits of information between two probabilities I think the situation you have in mind is that I've started with 50% credence in some proposition A, and ended up with 25% (or 75%). To calculate the information gained here, we need to find the entropy of our initial belief distribution, and subtract the entropy of our final beliefs. The entropy of our beliefs about A is $H(p) = -p \log_2 p - (1-p) \log_2 (1-p)$, where $p$ is our credence in A.

So for 50% -> 25% it's $H(0.5) - H(0.25) \approx 1 - 0.811 = 0.189$ bits.

And for 50%->75% it's $H(0.5) - H(0.75) \approx 1 - 0.811 = 0.189$ bits.

So your intuition is correct: these give the same answer.
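To make the two quantities in this reply concrete, here is a minimal Python sketch. The function names (`surprisal`, `binary_entropy`, `entropy_drop`) are illustrative choices, not from any library, and `entropy_drop` simply follows the entropy-difference calculation described above.

```python
import math


def surprisal(p: float) -> float:
    """Self-information, in bits, of an outcome that has probability p."""
    return -math.log2(p)


def binary_entropy(p: float) -> float:
    """Entropy, in bits, of a yes/no belief held with credence p."""
    if p in (0.0, 1.0):
        return 0.0  # a certain belief carries no remaining uncertainty
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)


def entropy_drop(p_before: float, p_after: float) -> float:
    """Initial entropy minus final entropy, as in the reply above."""
    return binary_entropy(p_before) - binary_entropy(p_after)


# Surprisal is not symmetric around 50%.
print(surprisal(0.25), surprisal(0.75))

# The entropy drop is symmetric: both updates give the same number.
print(entropy_drop(0.5, 0.25), entropy_drop(0.5, 0.75))
```

The symmetry comes from `binary_entropy(p)` being equal to `binary_entropy(1 - p)`, so moving from 50% to 25% removes the same amount of uncertainty as moving from 50% to 75%.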

Some thoughts on Rootclaim

Blunt, quick. Weakly held.

The platform has unrealized potential in facilitating Bayesian analysis and debate.

Either

- The platform could be a simple reference document

- The platform could be an interactive debate and truthseeking tool

- The platform could be a way to search the rootclaim debates

Currently it does none of these and is frustrating to me.

Heading to the site I expect:

- to be able to search the video debates

- to be able to input my own probability estimates to the current bayesian framework

- Failing t

I think I'm gonna start posting top blogposts to the main feed (mainly from dead writers or people I predict won't care)

If you or a partner have ever been pregnant and done research on what is helpful and harmful, feel free to link it here and I will add it to the LessWrong pregnancy wiki page.

https://www.lesswrong.com/tag/pregnancy

I've made a big set of expert opinions on AI and my inferred percentages from them. I guess that some people will disagree with them.

I'd appreciate hearing your criticisms so I can improve them or fill in entries I'm missing.

https://docs.google.com/spreadsheets/d/1HH1cpD48BqNUA1TYB2KYamJwxluwiAEG24wGM2yoLJw/edit?usp=sharing

Epistemic status: written quickly, probably errors

Some thoughts on Manifund

- To me it seems like it will be the GiveDirectly of regranting (perhaps along with NonLinear) rather than the GiveWell

- It will be capable of rapidly scaling (especially if some regrantors are able to be paid for their time if they are dishing out a lot). It's not clear to me that's a bottleneck of granting orgs.

- There are benefits to centralised/closed systems. Just as GiveWell makes choices for people and so delivers 10x returns, I expect that Manifund will do worse, on average than O

This journalist wants to talk to me about the Zizian stuff.

https://www.businessinsider.com/author/rob-price

I know about as much as the median rat, but I generally think it's good to answer journalists on substantive questions.

Do you think this is a particularly good or bad idea? Do you have any comments about this particular journalist? Feel free to DM me.

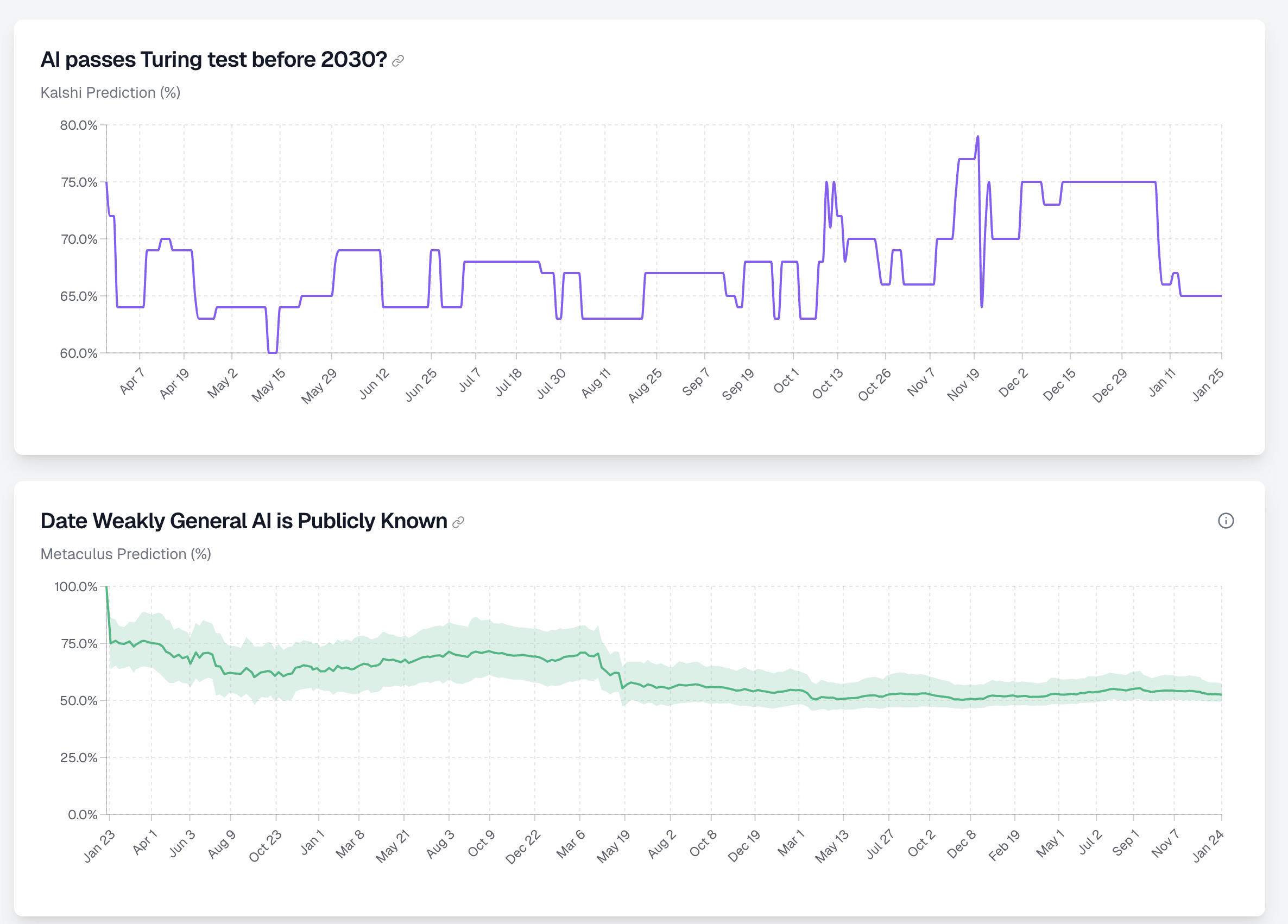

How might I combine these two datasets? One is a binary market, the other is a date market. So for any date point, one is a percentage P(turing test before 2030) the other is a cdf across a range of dates P(weakly general AI publicly known before that date).

Here are the two datasets.

Suggestions:

- Fit a normal distribution to the turing test market such that the 1% is at the current day and the P(X<2030) matches the probability for that data point

- Mirror the second data set but for each data point elevate the probabilities before 2030 such that P(X<
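The first suggestion above (a normal distribution pinned at two points) can be sketched as follows. This is only an illustration under stated assumptions: dates are represented as decimal years, and `today = 2024.5` and `p_before_2030 = 0.40` are hypothetical placeholder values, not numbers from either market. The helper name `fit_normal_from_two_quantiles` is also made up for this sketch.

```python
from statistics import NormalDist


def fit_normal_from_two_quantiles(x_low, p_low, x_high, p_high):
    """Return the normal distribution with cdf(x_low) = p_low and cdf(x_high) = p_high."""
    if not (0 < p_low < p_high < 1) or not x_low < x_high:
        raise ValueError("need 0 < p_low < p_high < 1 and x_low < x_high")
    standard = NormalDist()  # mean 0, standard deviation 1
    z_low = standard.inv_cdf(p_low)
    z_high = standard.inv_cdf(p_high)
    # Two equations, x = mu + sigma * z, solved for the two unknowns.
    sigma = (x_high - x_low) / (z_high - z_low)
    mu = x_low - sigma * z_low
    return NormalDist(mu, sigma)


today = 2024.5        # hypothetical "current day", as a decimal year
p_before_2030 = 0.40  # hypothetical market probability for this data point

dist = fit_normal_from_two_quantiles(today, 0.01, 2030.0, p_before_2030)
print(dist.mean, dist.stdev)
print(dist.cdf(today), dist.cdf(2030.0))
```

Repeating the fit for each data point of the binary market would give one implied date distribution per day, which could then be compared with the date market's cdf. Note that the fit only works while the market probability stays above the 1% anchor.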

I don't really understand the difference between simulacra levels 2 and 3.

- Discussing reality

- Attempting to achieve results in reality by inaccuracy

- Attempting to achieve results in social reality by inaccuracy

I've never really got 4 either, but let's stick to 1 - 3.

Also they seem more like nested circles rather than levels - the jump between 2 and 3 (if I understand it correctly) seems pretty arbitrary.

Is there a summary of the rationalist concept of lawfulness anywhere? I am looking for one and can't find it.

I would have a dialogue with someone on whether Piper should have revealed SBF's messages. Happy to take either side.

What is the best way to take the average of three probabilities in the context below?

- There is information about a public figure

- Three people read this information and estimate the public figure's P(doom)

- (It's not actually p(doom) but it's their probability of something)

- How do I then turn those three probabilities into a single one?

Thoughts.

I currently think the answer is something like: for probabilities a, b, c, the group estimate is 2^((log2 a + log2 b + log2 c)/3), i.e. their geometric mean. This feels like a way to average the bits that each person gets from the text.

I could just take t...
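As a rough sketch of the formula in this post, the Python below computes the geometric mean of the three probabilities. It also shows one commonly used alternative, the geometric mean of odds, which is swapped in here for comparison and is not something the post proposes. The three estimates are hypothetical, and both functions assume every probability is strictly between 0 and 1.

```python
import math


def geometric_mean_of_probabilities(probs):
    """2 ** (mean of log2 p): the formula from the post."""
    return 2 ** (sum(math.log2(p) for p in probs) / len(probs))


def geometric_mean_of_odds(probs):
    """Average the log-odds, then convert back to a probability (alternative)."""
    log_odds = [math.log(p / (1 - p)) for p in probs]
    mean_log_odds = sum(log_odds) / len(log_odds)
    return 1 / (1 + math.exp(-mean_log_odds))


estimates = [0.1, 0.2, 0.4]  # hypothetical estimates from the three readers

print(geometric_mean_of_probabilities(estimates))
print(geometric_mean_of_odds(estimates))

# Pooling the event and its complement separately shows a difference:
# the geometric mean of probabilities need not sum to 1, the odds version does.
pair = [0.2, 0.8]
complements = [1 - p for p in pair]
print(geometric_mean_of_probabilities(pair) + geometric_mean_of_probabilities(complements))
print(geometric_mean_of_odds(pair) + geometric_mean_of_odds(complements))
```

Which pooling rule is "best" depends on how the three readers' information overlaps, so treat both as candidates rather than an answer.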

Communication question.

How do I talk about low probability events in a sensical way?

eg "RFK Jr is very unlikely to win the presidency (0.001%)" This statement is ambiguous. Does it mean he's almost certain not to win or that the statement is almost certainly not true?

I know this sounds wonkish, but it's a question I come to quite often when writing. I like to use words but also include numbers in brackets or footnotes. But if there are several forecasts in one sentence with different directions it can be hard to understand.

"Kamala is a sl...

What are the LessWrong norms on promotion? Writing a post about my company seems off (but I think it could be useful to users). Should I write a quick take?

Nathan and Carson's Manifold discussion.

As of the last edit my position is something like:

"Manifold could have handled this better, so as not to force everyone with large amounts of mana to have to do something urgently, when many were busy.

Beyond that they are attempting to satisfy two classes of people:

- People who played to donate can donate the full value of their investments

- People who played for fun now get the chance to turn their mana into money

To this end, and modulo the above hassle this decision is good.

It is unclear to me whether there...

I recall a comment on the EA forum about Bostrom donating a lot to global dev work in the early days. I've looked for it for 10 minutes. Does anyone recall it or know where donations like this might be recorded?

Why you should be writing on the LessWrong wiki.

There is way too much to read here, but if we all took pieces and summarised them in their respective tag, then we'd have a much denser resource that would be easier to understand.

There are currently no active editors or a way of directing sufficient-for-this-purpose traffic to new edits, and on the UI side no way to undo an edit, an essential wiki feature. So when you write a large wiki article, it's left as you wrote it, and it's not going to be improved. For posts, review related to tags is in voting on the posts and their relevance, and even that is barely sufficient to get good relevant posts visible in relation to tags. But at least there is some sort of signal.

I think your article on Futarchy illustrates this point. So a reasonable policy right now is to keep all tags short. But without established norms that live in minds of active editors, it's not going to be enforced, especially against large edits that are written well.

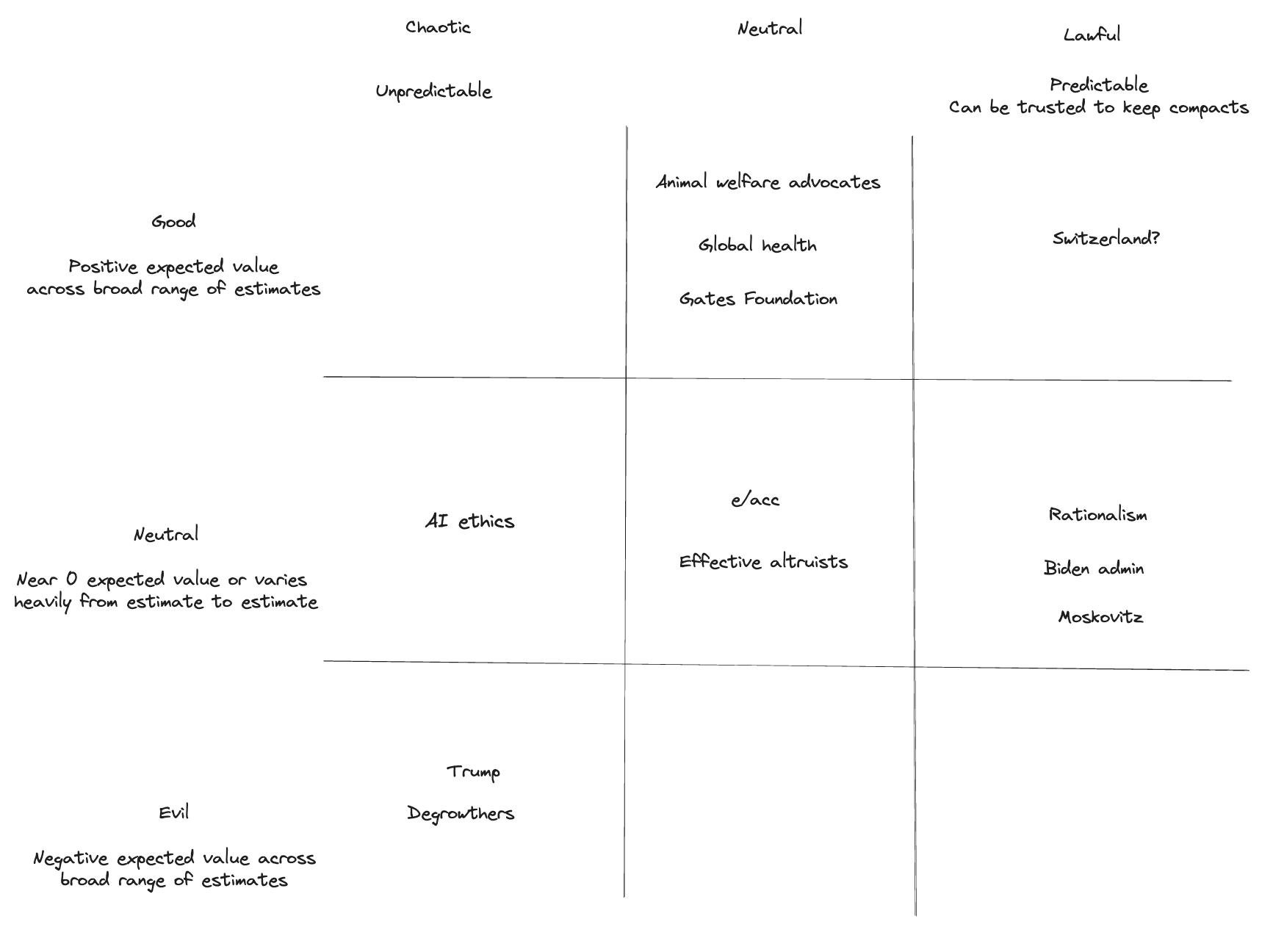

Here is a 5 minute, spicy take of an alignment chart.

What do you disagree with?

To try and preempt some questions:

Why is rationalism neutral?

It seems pretty plausible to me that if AI is bad, then rationalism did a lot to educate and spur on AI development. Sorry folks.

Why are e/accs and EAs in the same group?

In the quick moments I took to make this, I found both EA and E/acc pretty hard to predict and pretty uncertain in overall impact across some range of forecasts.

It seems pretty plausible to me that if AI is bad, then rationalism did a lot to educate and spur on AI development. Sorry folks.

What? This apology makes no sense. Of course rationalism is Lawful Neutral. The laws of cognition aren't, can't be, on anyone's side.

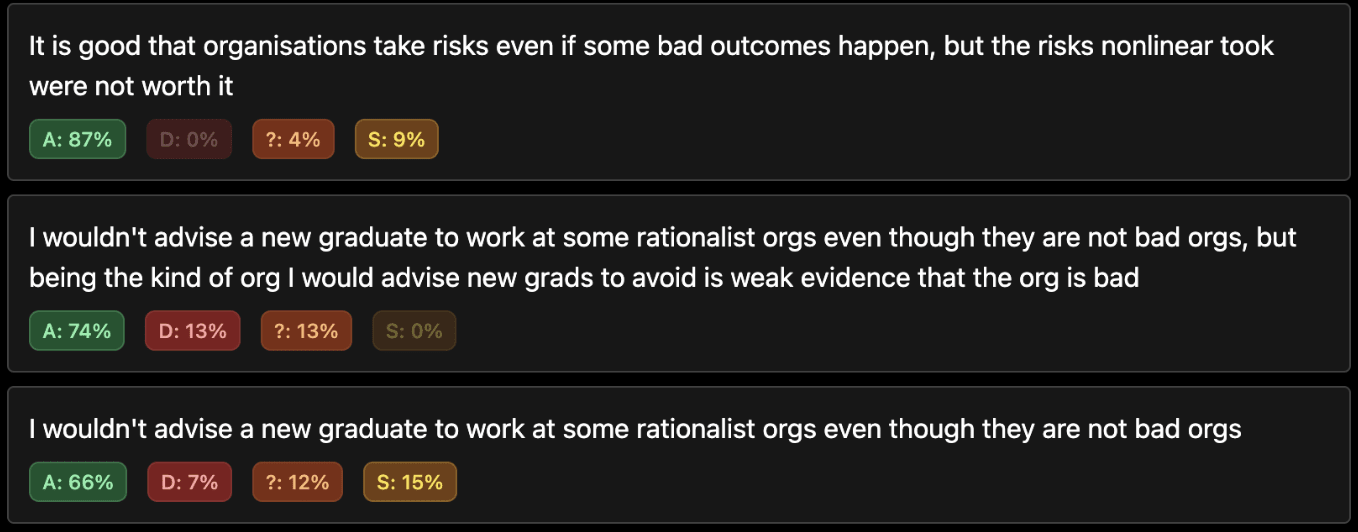

I did a quick community poll - Community norms poll (2 mins)

I think it went pretty well. What do you think next steps could/should be?

Here are some points with a lot of agreement.

Things I would do dialogues about:

(Note I may change my mind during these discussions but if I do so I will say I have)

- Prediction is the right frame for most things

- Focus on world states not individual predictions

- Betting on wars is underrated

- The UK House of Lords is okay actually

- Immigration should be higher but in a way that doesn't annoy everyone and cause backlash

I appreciate reading women talk about what is good sex for them. But it's a pretty thin genre, especially with any kind of research behind it.

So I'd recommend this (though it is paywalled):

https://aella.substack.com/p/how-to-be-good-at-sex-starve-her?utm_source=profile&utm_medium=reader2

Also I subscribed to this for a while and it was useful:

https://start.omgyes.com/join

I suggest that rats should use https://manifold.love/ as the Schelling dating app. It has long profiles and you can bet on other people getting on.

What more could you want!

I am somewhat biased because I've bet that it will be a moderate success.

Relative Value Widget

It gives you sets of donations and you have to choose which you prefer. If you want you can add more at the bottom.

Other things I would like to be able to express anonymously on individual comments:

- This is poorly framed - Sometimes I neither want to agree nor disagree. I think the comment is orthogonal to reality and agreement and disagreement both push away from truth.

- I don't know - If a comment is getting a lot of agreement/disagreement it would also be interesting to see if there could be a lot of uncertainty

It's a shame the wiki doesn't support the draft google-docs-like editor. I wish I could make in-line comments while writing.

Politics is the Mindfiller

There are many things to care about and I am not good at thinking about all of them.

Politics has many many such things.

Do I know about:

- Crime stats

- Energy generation

- Hiring law

- University entrance

- Politicians' political beliefs

- Politicians' personal lives

- Healthcare

- Immigration

And can I actually confidently think that things you say are actually the case? Or do I have a surface level $100 understanding?

Politics may or may not be the mindkiller, whatever Yud meant by that, but for me it is the mindfiller, it's just ...

I'm about to go to sleep but I am a bit confused about Epstein stuff.

In 2019, @Rob Bensinger said that "Epstein had previously approached us in 2016 looking for organizations to donate to, and we decided against pursuing the option;"

Looking at the Justice Dept releases (which I assume are real) (eg https://www.justice.gov/epstein/files/DataSet%209/EFTA00814059.pdf)

That doesn't feel like a super accurate description. It seems like there was a discussion with Epstein after it was clear he had been involved in pretty bad behaviour. (In 2008 he pleaded guilty...

Here's Yudkowsky's memory of what happened:

Epstein asked to call during a fundraiser. My notes say that I tried to explain AI alignment principles and difficulty to him (presumably in the same way I always would) and that he did not seem to be getting it very much. Others at MIRI say (I do not remember myself / have not myself checked the records) that Epstein then offered MIRI $300K; which made it worth MIRI's while to figure out whether Epstein was an actual bad guy versus random witchhunted guy, and ask if there was a reasonable path to accepting his donations causing harm; and the upshot was that MIRI decided not to take donations from him. I think/recall that it did not seem worthwhile to do a whole diligence thing about this Epstein guy before we knew whether he was offering significant funding in the first place, and then he did, and then MIRI people looked further, and then (I am told) MIRI turned him down.

Epstein threw money at quite a lot of scientists and I expect a majority of them did not have a clue. It's not standard practice among nonprofits to run diligence on donors, and in fact I don't think it should be. Diligence is costly in executive attention, it is relative...

I understand many in this world think guilt-by-association is valid, but that doesn't mean it is. Talking with a felon is not itself a crime (nor a bad thing) and you should generally not ostracize people for who they talk to.

Furthermore, accepting a donation from a felon is not inherently bad. Being paid off to launder their reputation is a bad thing, and insofar as you lend your reputation to them in exchange for money, that's unethical, but I think it's clear that it's not healthy for all felons to be barred from donating to charity/non-profits. The money is not itself tainted, it's their reputation that must be kept straight.