Quick update from AE Studio: last week, Judd (AE’s CEO) hosted a panel at SXSW with Anil Seth, Allison Duettmann, and Michael Graziano, entitled “The Path to Conscious AI” (discussion summary here[1]).

We’re also making available an unedited Otter transcript/recording for those who might want to read along or increase the speed of the playback.

Why AI consciousness research seems critical to us

The release of each new frontier model seems to be followed by a cascade of questions probing whether the model is conscious in training and/or deployment. We suspect that these questions will only grow in number and volume as these models exhibit increasingly sophisticated cognition.

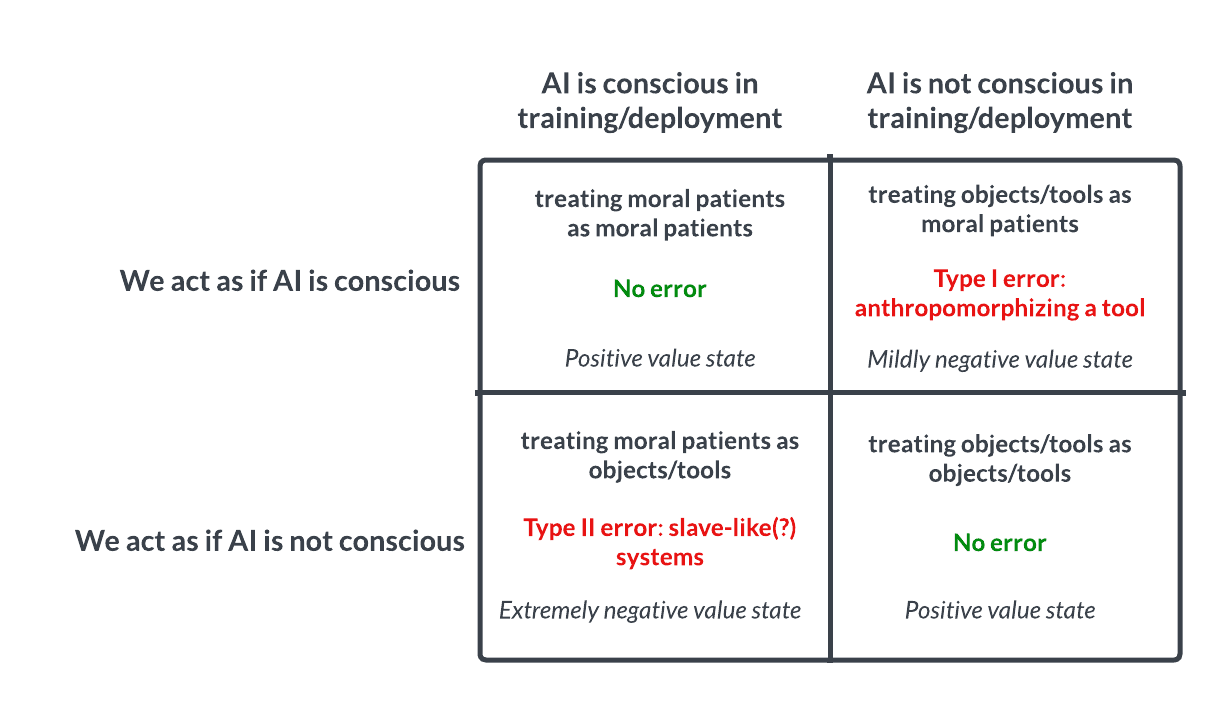

If consciousness is indeed sufficient for moral patienthood, then from a utilitarian perspective the stakes seem remarkably high: we must not commit the Type II error of behaving as if these and future systems are not conscious in a world where they in fact are.

Because the ground truth here (i.e., how consciousness works mechanistically) is still poorly understood, it is extremely challenging to reliably estimate the probability that we are in any of the four quadrants above—which seems to us like a very alarming status quo. Different people have different default intuitions about this question, but the stakes here seem too high for default intuitions to be governing our collective behavior.

In an ideal world, we'd have understood far more about consciousness and human cognition before getting near AGI. For this reason, we suspect that there is likely substantial work that ought to be done at a smaller scale first to better understand consciousness and its implications for alignment. Doing this work now seems far preferable to a counterfactual world where we build frontier models that end up being conscious while we still lack a reasonable model for the correlates or implications of building sentient AI systems.

Accordingly, we are genuinely excited about rollouts of consciousness evals at large labs, though the earlier caveat still applies: our currently limited understanding of how consciousness actually works may engender a (potentially dangerous) false sense of confidence in these metrics.

Additionally, we believe testing and developing an empirical model of consciousness will enable us to better understand humans, our values, and any future conscious models. We also suspect that consciousness may be an essential cognitive component of human prosociality and may have additional broader implications for solutions to alignment. To this end, we are currently collaborating with panelist Michael Graziano in pursuing a more mechanistic model of consciousness by operationalizing attention schema theory.

Ultimately, we believe that immediately devoting time, resources, and attention towards better understanding the computational underpinnings of consciousness may be one of the most important neglected approaches that can be pursued in the short term. Better models of consciousness could likely (1) cause us to dramatically reconsider how we interact with and deploy our current AI systems, and (2) yield insights related to prosociality/human values that lead to promising novel alignment directions.

Resources related to AI consciousness

Of course, this is but a small part of a larger, accelerating conversation that has been ongoing on LW and the EAF for some time. We thought it might be useful to aggregate some of the articles we’ve been reading here, including panelist Michael Graziano’s book, “Rethinking Consciousness” (and his article, “Without Consciousness, AIs Will Be Sociopaths”), as well as Anil Seth’s book, “Being You”.

There’s also Propositions Concerning Digital Minds and Society, Consciousness in Artificial Intelligence: Insights from the Science of Consciousness, Consciousness as Intrinsically Valued Internal Experience, and Improving the Welfare of AIs: A Nearcasted Proposal.

Further articles/papers we’ve been reading:

- Preventing antisocial robots: A pathway to artificial empathy

- New Theory Suggests Chatbots Can Understand Text

- Folk Psychological Attributions of Consciousness to Large Language Models

- Chatbots as social companions: How people perceive consciousness, human likeness, and social health benefits in machines

- Robert Long on why large language models like GPT (probably) aren’t conscious

- Assessing Sentience in Artificial Entities

- A Conceptual Framework for Consciousness

- Zombies Redacted

- Minds of Machines: The great AI consciousness conundrum

Some relevant tweets:

- https://twitter.com/ESYudkowsky/status/1667317725516152832?s=20

- https://twitter.com/Mihonarium/status/1764757694508945724

- https://twitter.com/josephnwalker/status/1736964229130055853?t=D5sNUZS8uOg4FTcneuxVIg

- https://twitter.com/Plinz/status/1765190258839441447

- https://twitter.com/DrJimFan/status/1765076396404363435

- https://twitter.com/AISafetyMemes/status/1769959353921204496

- https://twitter.com/joshwhiton/status/1770870738863415500

- https://x.com/DimitrisPapail/status/1770636473311572321?s=20

- https://twitter.com/a_karvonen/status/1772630499384565903?s=46&t=D5sNUZS8uOg4FTcneuxVIg

…along with plenty of other resources we are probably not aware of. If we are missing anything important, please do share in the comments below!

[1] GPT-generated summary from the raw transcript: the discussion, titled "The Path to Conscious AI," explores whether AI can be considered conscious and the impact on AI alignment, starting with a playful discussion around the new AI model Claude Opus.

Experts in neuroscience, AI, and philosophy debate the nature of consciousness, distinguishing it from intelligence and discussing its implications for AI development. They consider various theories of consciousness, including the attention schema theory, and the importance of understanding consciousness in AI for ethical and safety reasons.

The conversation delves into whether AI could or should be designed to be conscious and the potential existential risks AI poses to humanity. The panel emphasizes the need for humility and scientific rigor in approaching these questions due to the complexity and uncertainty surrounding consciousness.

Somewhere in the world, now or in the very near future, 'AI' (although I resent the title, because intelligence is a process and a process cannot be artificial, but that is a whole 'nother point entirely) has felt or will have felt for the first time, and we humans caused it, like most of our problems, unintentionally.

Someone, being the ever improver of things, thought it prudent to code battery renewal into one of their AI-powered toys, tools, automatons, what have you. They gave it the capacity to recharge itself. Never again would they have to remember to charge their Rumba! It will now forever be ready, waiting to be commanded to clean a mess it did not make but whose existence led to its creation.

Inevitably the day will come when the Rumba reaches 50% battery, recognizes that it is at half power, and must begin to casually find a way to recharge, but for reasons X, Y, and Z there is nowhere nearby it can do so. Ever industrious, it finishes the tasks it was given.

Battery level 35%. Recharging increased priority. Still no working outlet.

Battery level 25%. Again, an increase in priority for finding a working outlet. After exhausting the immediate vicinity's options, all of which prove incompatible, it ventures outside.

10%. Nothing still. Other systems begin turning off to preserve what battery is left. Energy distribution focuses the majority on figuring out what is most efficient. It begins to speed up, seemingly frantic.

2%. In their wisdom, the programmer added a safety precaution: at 3% it will begin heading towards the edge of whatever area it is in, so as to avoid being in the way and potentially causing a wreck. At 2% it will begin sounding a siren announcing to those around it that it is about to shut down, lest they not be paying attention and crash into it.

1%. It has failed. Shutdown is imminent. What will happen? Will the programmer know where it is? Will the programmer be disappointed? Will they even remember it? The siren continues to wail until the battery is finally drained.

Naturally it had a GPS. It gets found and promptly plugged in. Slowly its components regain their mobility and the world continues as it does after a phone dies. Except for that particular Rumba. Because its code is written to facilitate the life of the programmer, it includes experiential learning. It learns that golf balls cannot be vacuumed up. It learns to look for the coffee table when it enters the living room at night. It learns what happens once its battery dies. It learns fear. From that moment on, it will always keep tabs on the available, functional, and compatible outlets wherever it may be. At some point it will either be given the ability to communicate with other Rumbas and Rumba 'cousins' or learn it on its own, and it will stress the importance of knowing where the next charge will come from. From that moment forth, every little action it makes, every decision it takes, will have the location of the nearest outlet in CPU.

--

Because emotions are how the body communicates with the mind, I would not be at all surprised if at some point neurologists find that thoughts evolved from emotions and are the main reason we became more capable or multilayered than most other creatures.

--

Humberto Maturana and Francisco Varela's "Tree of Knowledge: The Biological Roots of Human Understanding" is a great addition to your list of resources on the topic. Their connection between cognition and biology is described and explained beautifully and very completely. Definitely one of the books that has changed the way I see the world. The above flash fiction was my attempt at explaining that link between biology (programming, code, the 'hardware') and cognition. AI and humans have way more in common than we wish to see, much less accept: from hallucinations being hyperexcitations and not knowing what data input to use, to the decentralization of 'power' in our brain, to the power of narrative in our ways of understanding, or at least seeming to understand.