(You mention Mandarin having compact grammar but in the table you grade it a ❌ at compact grammar.)

On a more substantive note:

Aside from the normal cognitive benefits of being bilingual or multilingual, would learning some new language (or a conlang for this purpose) specifically to have conscious thought with be useful?

Not sure if this is exactly what you had in mind, since it's fictional transhumanist tech, but I was reminded of this passage from Richard Ngo's recent short story The Gentle Romance:

Almost everyone he talks to these days consults their assistant regularly. There are tell-tale signs: their eyes lose focus for a second or two before they come out with a new fact or a clever joke. He mostly sees it at work, since he doesn’t socialize much. But one day he catches up with a college friend he’d always had a bit of a crush on, who’s still just as beautiful as he remembers. He tries to make up for his nervousness by having his assistant feed him quips he can recite to her. But whenever he does, she hits back straight away with a pitch-perfect response, and he’s left scrambling.

“You’re good at this. Much faster than me,” he says abruptly.

“Oh, it’s not skill,” she says. “I’m using a new technique. Here.” With a flick of her eyes she shares her visual feed, and he flinches. Instead of words, the feed is a blur of incomprehensible images, flashes of abstract color and shapes, like a psychedelic Rorschach test.

“You can read those?”

“It’s a lot of work at first, but your brain adapts pretty quickly.”

He makes a face. “Not gonna lie, that sounds pretty weird. What if they’re sending you subliminal messages or something?”

Back home, he tries it, of course. The tutorial superimposes images and their text translations alongside his life, narrating everything he experiences. Having them constantly hovering on the side of his vision makes him dizzy. But he remembers his friend’s effortless mastery, and persists. Slowly the images become more comprehensible, until he can pick up the gist of a message from the colors and shapes next to it. For precise facts or statistics, text is still necessary, but it turns out that most of his queries are about stories: What’s in the news today? What happened in the latest episode of the show everyone’s watching? What did we talk about last time we met? He can get a summary of a narrative in half a dozen images: not just the bare facts but the whole arc of rising tension and emotional release. After a month he rarely needs to read any text.

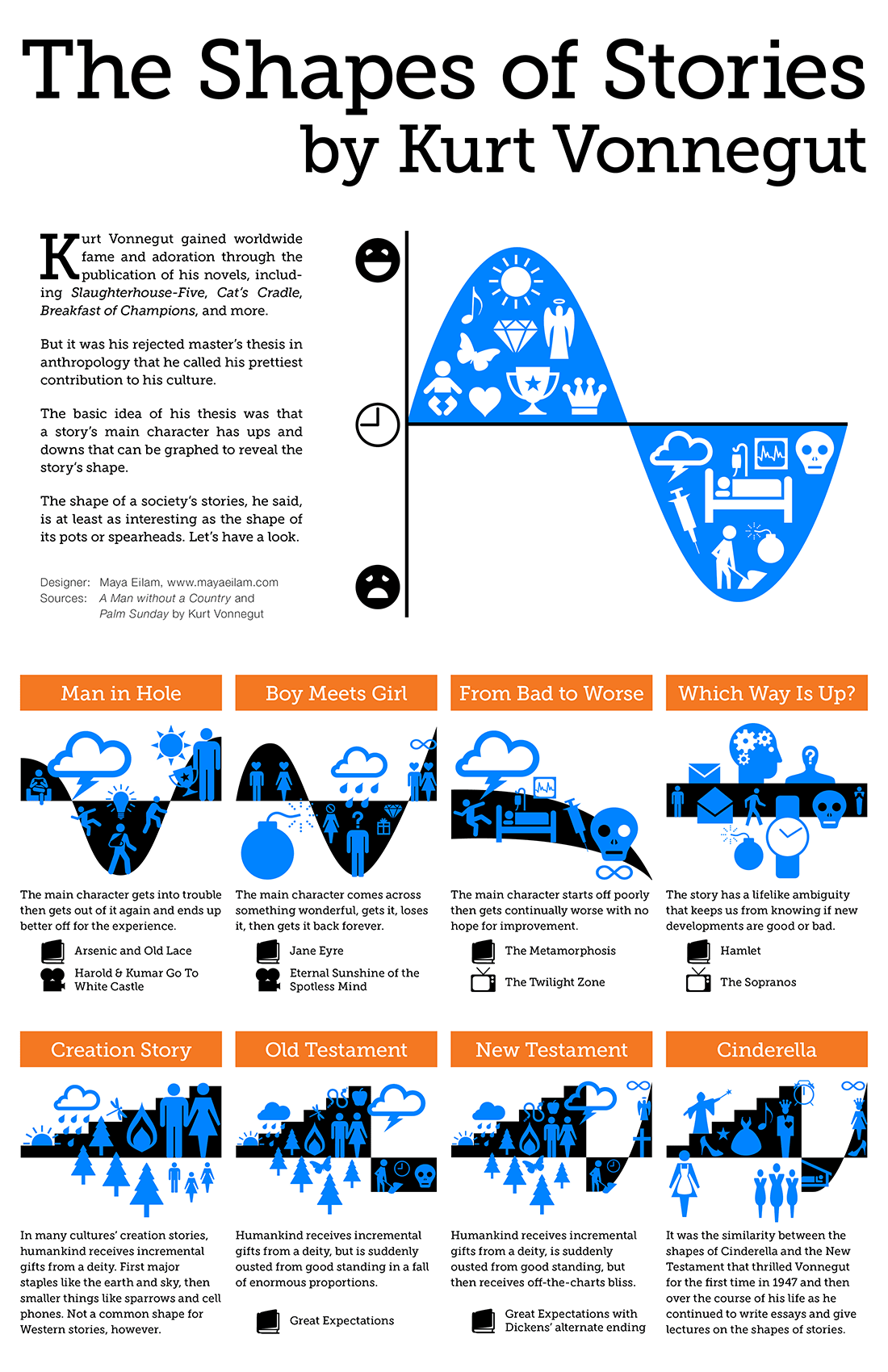

That last link goes to Kurt Vonnegut on the 8 “shapes” of stories. The story is that Vonnegut wrote a master’s thesis on the shapes of stories that he submitted to the anthropology department at the University of Chicago, which rejected it. Here's a YouTube video of him talking about it; below is an infographic from that article:

That said, Richard's Vonnegut-inspired fictional tech is about communicating narratives efficiently, not precise facts or statistics. For that, Gwern's On the Existence of Powerful Natural Languages persuaded me that you can't really have powerful general-purpose conlangs that boost cognition across a wide variety of domains.

o1 has shown a strange behavior where it thinks in Mandarin, while processing English prompts, and translates the results back to English for the output. I realized that the same could be possible for humans to utilize, speeding up conscious thought. [1]

What makes Mandarin useful for this is that it:

As a Jew, I learned the Hebrew alphabet (but not vocabulary) to study for my Bar Mitzvah, and as a student of US public education, I had the choice in secondary school to learn Spanish, French, or German, in addition to learning English natively. I chose German, and I am very unlikely to change or stop learning this, but I wonder if it would be useful to learn a new language specifically to think in. This would pose different requirements than learning a language traditionally, as reading and writing would be much less important for this task. Knowing many different words and correct grammar would be much more important.

Brain-machine interfaces installed into one's brain, ending the need for languages altogether, would bring a major improvement to human society, but intentional control of thought[5] via language could have the same effect. Aside from the normal cognitive benefits of being bilingual or multilingual, would learning some new language (or a conlang for this purpose) specifically to have conscious thought with be useful?

https://techcrunch.com/2025/01/14/openais-ai-reasoning-model-thinks-in-chinese-sometimes-and-no-one-really-knows-why/

These are not empirical or quantitative in any way, just the general ideas I sense from these. The other ideas expressed in this post are severable from this chart.

Intentionally made from-scratch language (conlang) with a very limited character set

Language used for Google Translate: all messages are first translated into this language, then translated into the output language. This method requires only 2 models per language, rather than the quadratically growing number needed for a separate model between each language pair.
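
To make the model-count comparison concrete, here is a minimal sketch. The function names are illustrative and not taken from any real translation system. It assumes one model into the pivot language and one out of it per language, versus one directed model per ordered pair of distinct languages.

```python
def pivot_models(n_languages: int) -> int:
    # Assumption: each language needs one model into the pivot
    # language and one model out of it.
    return 2 * n_languages


def direct_models(n_languages: int) -> int:
    # Assumption: one directed model per ordered pair of
    # distinct languages (A -> B and B -> A count separately).
    return n_languages * (n_languages - 1)


for n in (3, 10, 100):
    print(n, pivot_models(n), direct_models(n))
```

Under these assumptions the pivot count grows linearly with the number of languages, while the direct count grows with its square: for 100 languages that is 200 models versus 9,900.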

The idea of not learning certain words, as a way to make certain concepts slower to conceive, has occurred to me, but this seems to be a bad idea for obvious reasons.