This is a 4-minute read, inspired by the optimized writing in Eukaryote's Spaghetti Towers post.

My thinking is that generative AI has potential for severe manipulation, but that the 2010s AI used in social media news feeds and other automated systems is a bigger threat. This tech tells us much more about the future of international affairs and the incentives for governments to race to accelerate AI, fewer people are aware of it, it has a substantial risk of being used to attack the AI safety community, and the defenses are easy and mandatory to deploy. This post explains why this tech is powerful enough to be a centerpiece of people's world models.

The people in Tristan Harris's The Social Dilemma (2020) did a fantastic job describing the automated optimization mechanism in a quick and fun way (transcript).

[Tristan] A [stage] magician understands something, some part of your mind that we’re not aware of. That’s what makes the [magic trick] illusion work. Doctors, lawyers, people who know how to build 747s or nuclear missiles, they don’t know more about how their own mind is vulnerable. That’s a separate discipline. And it’s a discipline that applies to all human beings...

[Shoshana] How do we use subliminal cues on the Facebook pages to get more people to go vote in the midterm elections? And they discovered that they were able to do that.

One thing they concluded is that we now know we can affect real-world behavior and emotions without ever triggering the user’s awareness. They are completely clueless.

Important note: all optimization here is highly dependent on measurability, and triggering the user's awareness is a highly measurable thing.

![114501_1_09dreyfuss-video_wg_720p.mp4 [video-to-gif output image]](https://res.cloudinary.com/lesswrong-2-0/image/upload/f_auto,q_auto/v1/mirroredImages/aWPucqvJ4RWKKwKjH/x3ba8x6vdij4y7h3lu4s)

If anything creeps someone out, they use the platform less; this is incredibly easy to measure, and its cause is easy to isolate. To get enough data, these platforms must automatically reshape themselves to feel safe to use.

This obviously includes ads that make you feel manipulated; it is not surprising that we ended up in a system where >95% of ad encounters are not well-matched.

This gives researchers plenty of degrees of freedom to try different kinds of angles and see what works, and even automate that process.

[Tristan] We’re pointing these engines of AI back at ourselves to reverse-engineer what elicits responses from us. Almost like you’re stimulating nerve cells on a spider to see what causes its legs to respond.

Important note: my model says that a large share of data comes from scrolling. The movement of scrolling past something on a news feed, with either a touch screen/pad or a mouse wheel (NOT arrow keys), literally generates a curve.

It is the perfect biodata to plug into ML; the 2010s social media news feed paradigm generated trillions of instances of linear algebra, based on different people's reactions to different concepts and ideas that they scroll past.

So, it really is this kind of prison experiment where we’re just, you know, roping people into the matrix, and we’re just harvesting all this money and… and data from all their activity to profit from. And we’re not even aware that it’s happening.

[Chamath] So, we want to psychologically figure out how to manipulate you as fast as possible and then give you back that dopamine hit...

[Sean Parker] I mean, it’s exactly the kind of thing that a… that a hacker like myself would come up with because you’re exploiting a vulnerability in… in human psychology...

[Tristan] No one got upset when bicycles showed up. Right? Like, if everyone’s starting to go around on bicycles, no one said, “Oh, my God, we’ve just ruined society. Like, bicycles are affecting people. They’re pulling people away from their kids. They’re ruining the fabric of democracy. People can’t tell what’s true.”

Like, we never said any of that stuff about a bicycle. If something is a tool, it genuinely is just sitting there, waiting patiently. If something is not a tool, it’s demanding things from you... It wants things from you. And we’ve moved away from having a tools-based technology environment to an addiction- and manipulation-based technology environment.

That’s what’s changed. Social media isn’t a tool that’s just waiting to be used. It has its own goals, and it has its own means of pursuing them by using your psychology against you.

In the documentary, Harris describes a balance between three separate automated optimization directions from his time at tech companies: the Engagement goal, to drive up usage and keep people scrolling; the Growth goal, to keep people coming back and encouraging friends; and the Advertising goal, which pays for server usage.

In fact, there is a fourth slot: optimizing people's thinking in any measurable direction, such as making people feverishly in favor of the American side and opposed to the Russian side in proxy wars like Ukraine. The tradeoffs between these four optimization directions are substantial, and the balance/priority distribution is determined by the preferences of the company and the extent of the company's ties to its government and military (afaik mainly the US and China are relevant here).

Clown Attacks are a great example of something to fit into the fourth slot: engineer someone to think a topic (e.g. lab leak hypothesis) is low-status by showing them low-status clowns talking about it, and high-status people ignoring or criticizing it.

It's important to note that this problem is nowhere near as important as Superintelligence, the finish line for humanity. But it's critical for world modelling; the last 10,000 years of human civilization only happened the way it did because manipulation capabilities at this level did not yet exist.

Information warfare was less important in the 20th century, relative to military force, because it was weaker. As information warfare becomes more powerful and returns on investment grow, more governments and militaries invest in information warfare than historical precedent would imply, and we end up in the information warfare timeline.

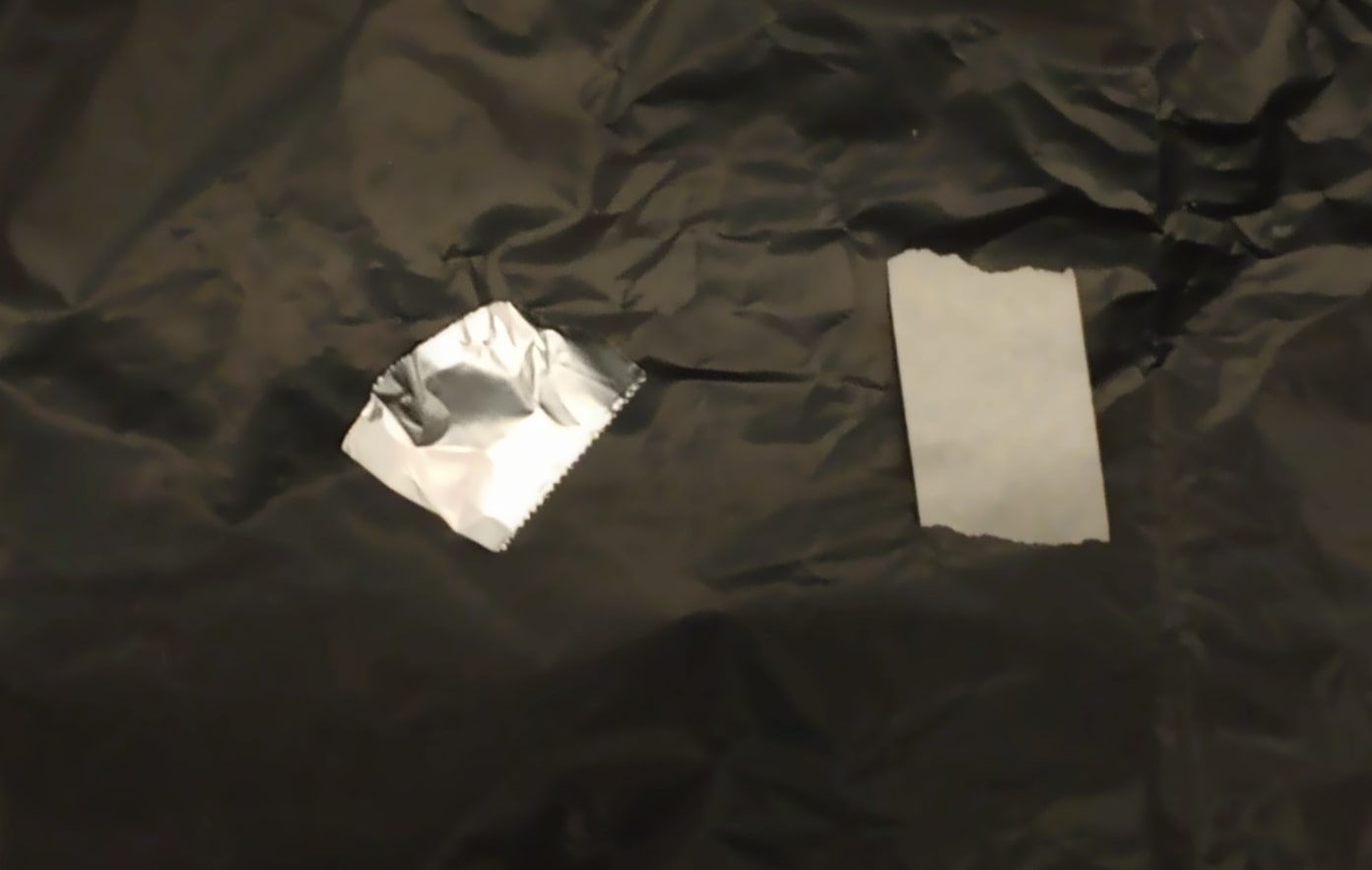

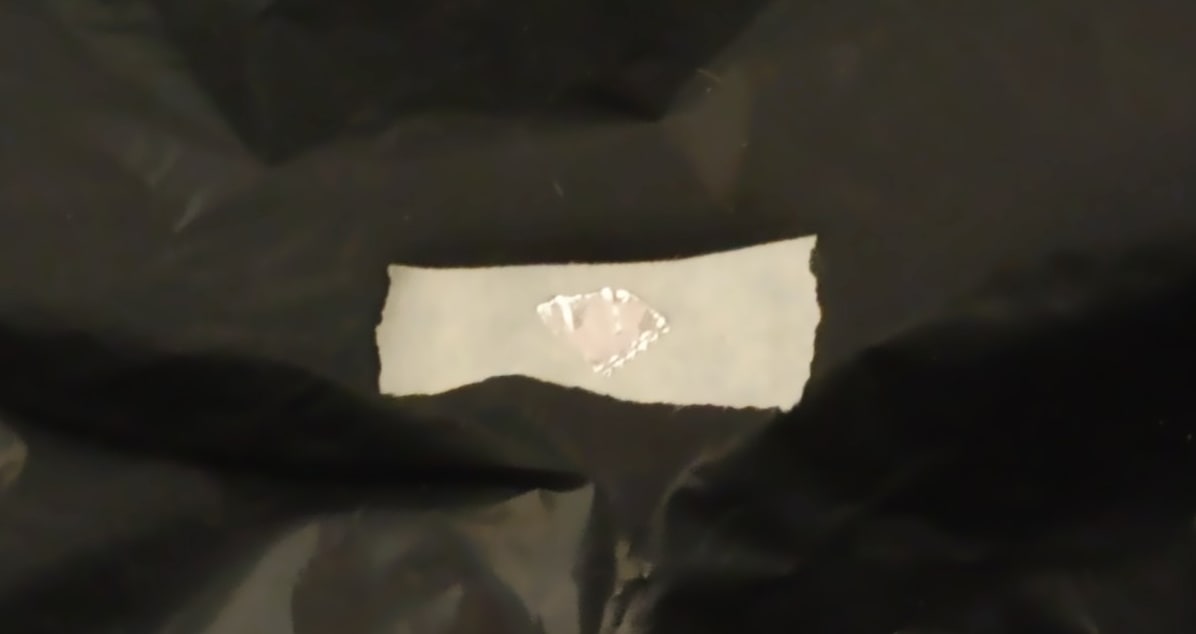

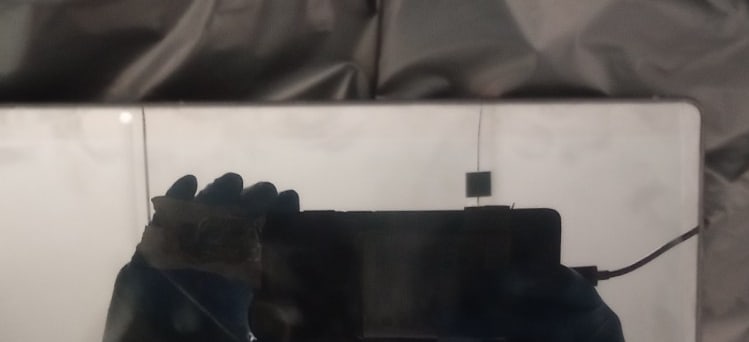

In the 2020s, computer vision makes eyetracking and large-scale facial microexpression recognition/research possibly the biggest threat. Unlike the hopelessness of securing your operating system or having important conversations near smartphones, the solution is easy and worthwhile for people in the AI safety community (and it is why my posting this is net positive). It only takes a little chunk of tape and aluminum foil (easy enough that I routinely peel most of it off and replace it afterwards).

I've written about other fixes and various examples of ways for attackers to hack the AI safety community and turn it against itself. Please don't put yourself and others at risk.

I had a little bit of a thought on this, and I think the argument extends to other domains.

Major tech companies, and all advertising-dependent businesses, are choosing what content to display mainly to optimize revenue. Or,

content_shown = argmax(estimated_engagement( filtered(content[]) ) )

Where content[] is all the possible videos YouTube has the legal ability to show, a newspaper has the legal ability to publish, Reddit has the legal ability to allow on their site, etc.
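A minimal Python sketch of that expression, for concreteness. The `Content` record, its fields, and the sample catalog are all hypothetical and invented for illustration; nothing here reflects how any real platform scores or filters content.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Optional


@dataclass(frozen=True)
class Content:
    # Hypothetical fields, for illustration only.
    title: str
    estimated_engagement: float  # assumed output of some engagement model
    advertiser_safe: bool        # assumed result of an advertiser-acceptability check


def select_content(
    candidates: Iterable[Content],
    allowed: Callable[[Content], bool],
) -> Optional[Content]:
    """Return the allowed candidate with the highest estimated engagement."""
    pool = [c for c in candidates if allowed(c)]  # filtered(content[])
    if not pool:
        return None
    return max(pool, key=lambda c: c.estimated_engagement)  # argmax


# Made-up catalog standing in for content[].
catalog = [
    Content("cat video", 0.40, True),
    Content("breaking news", 0.90, True),
    Content("shock clip", 0.95, False),
]

content_shown = select_content(catalog, allowed=lambda c: c.advertiser_safe)
print(content_shown.title)  # prints "breaking news"
```

The item with the highest raw score ("shock clip") is dropped by the filter, so the runner-up is what gets shown.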

Several things immediately jump out at me. First:

1. Filtering anything costs revenue. That is to say, in aggregate, any kind of filter at all makes it less likely that the most engaging content will be shown. So the main "filter" used is for content that advertisers find unacceptable, not content that the tech company disagrees with. This means that YouTube videos disparaging YouTube are just fine.

2. Deep political manipulation mostly needs a lot of filtering, and this lowers revenue. Choosing what news to show is the same idea.

3. Really destructive things, like how politics are severely polarized in the USA, and mass shootings, are likely an unintended consequence of picking the most engaging content.

4. Consider what happens if a breaking news event happens, or a major meme wave happens, and you filter it out because your platform finds the material detrimental to some long-term goal. Well, that means that the particular content is missing from your platform, and this sends engagement and revenue to competitors.

General idea: any kind of ulterior motive other than argmaxing for right now - whether it be tech companies trying to manipulate perception, or some plotting AI system trying to pull off a complex long-term plan - costs you revenue and future ability to act, and has market pressure against it.
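The cost of an extra filter can be sketched in a few lines of Python. The engagement numbers and the `off_agenda` flag are invented for illustration; the only point is that the best item in a filtered pool can never score higher than the best item in the full pool.

```python
# Hypothetical (title, estimated_engagement, off_agenda) tuples.
catalog = [
    ("cat video", 0.40, False),
    ("breaking news", 0.90, True),
    ("meme wave", 0.75, True),
    ("cooking clip", 0.55, False),
]


def best_engagement(items):
    """Highest estimated engagement among the items, or 0.0 if none remain."""
    return max((score for _, score, _ in items), default=0.0)


unfiltered_best = best_engagement(catalog)
# Apply an "ulterior motive" filter: drop everything flagged off_agenda.
filtered_best = best_engagement([item for item in catalog if not item[2]])

# Engagement given up by filtering; always >= 0 because the filtered
# pool is a subset of the full pool.
engagement_cost = unfiltered_best - filtered_best
print(f"{engagement_cost:.2f}")  # prints 0.35
```

In this toy catalog the filter removes the two most engaging items, so the platform gives up 0.35 of estimated engagement on this one slot, which a competitor without the filter would keep.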

This reminds me of the general idea where AI systems are trying to survive, and somehow trading services with humans for things that they need. The thing is, in some situations, 99% or more of all the revenue paid to the AI service is going to just keeping it online. It's not getting a lot of excess resources for some plan. Same idea for humans: what keeps humans 'aligned' is that almost all resources are needed just to keep themselves alive, and to try to create enough offspring to replace their own failing bodies. There's very little slack for, say, founding a private army with the resources to overthrow the government.