I often encounter confusion about whether AIs think "many orders of magnitude faster" than humans, given that synapses in the brain typically fire at frequencies of 1-100 Hz while the clock frequency of a state-of-the-art GPU is on the order of 1 GHz. In this short post, I'll argue that this way of thinking about "cognitive speed" is quite misleading.

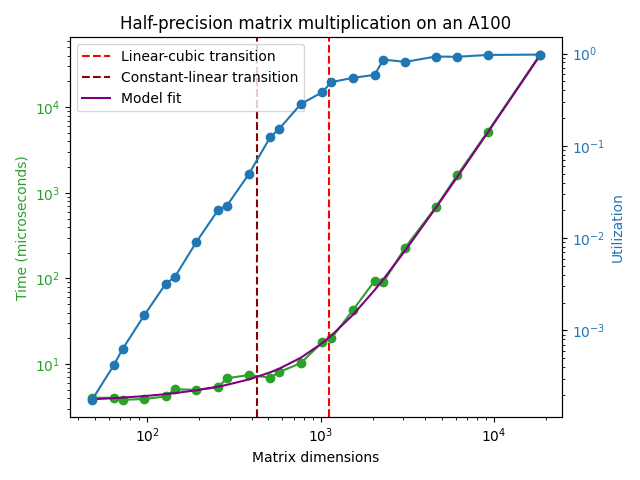

The clock speed of a GPU is indeed meaningful: there is a clock inside the GPU that provides some signal that's periodic at a frequency of ~ 1 GHz. However, the corresponding period of ~ 1 nanosecond does not correspond to the timescale of any useful computations done by the GPU. For instance; in the A100 any read/write access into the L1 cache happens every ~ 30 clock cycles and this number goes up to 200-350 clock cycles for the L2 cache. The result of these latencies adding up along with other sources of delay such as kernel setup overhead etc. means that there is a latency of around ~ 4.5 microseconds for an A100 operating at the boosted clock speed of 1.41 GHz to be able to perform any matrix multiplication at all:

The timescale for a single matrix multiplication gets longer if we also demand that the matrix multiplication achieves something close to the peak FLOP/s performance reported in the GPU datasheet. In the plot above, it can be seen that a matrix multiplication achieving good hardware utilization can't take shorter than ~ 100 microseconds or so.
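To make the gap between the clock period and these timescales concrete, here is a minimal sketch that converts the two latencies quoted above into clock cycles. The function name `cycles` is just an illustrative helper, and the inputs are the rough figures from the text, not measurements.

```python
# Rough figures taken from the text above; treat them as order-of-magnitude inputs.
CLOCK_HZ = 1.41e9             # A100 boosted clock speed
MIN_MATMUL_LATENCY_S = 4.5e-6  # latency floor for any matmul at all
EFFICIENT_MATMUL_S = 100e-6    # matmul that achieves good hardware utilization


def cycles(seconds: float, clock_hz: float = CLOCK_HZ) -> float:
    """Number of clock cycles that elapse during `seconds`."""
    return seconds * clock_hz


if __name__ == "__main__":
    print(f"latency floor:    ~{cycles(MIN_MATMUL_LATENCY_S):,.0f} cycles")
    print(f"efficient matmul: ~{cycles(EFFICIENT_MATMUL_S):,.0f} cycles")
```

Under these assumptions, even the smallest matrix multiplication spans thousands of clock cycles, and an efficient one spans on the order of a hundred thousand.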

On top of this, state-of-the-art machine learning models today consist of many matrix multiplications and nonlinearities chained in a row. For example, a typical language model could have on the order of ~ 100 layers, with each layer containing at least 2 serial matrix multiplications for the feedforward layers[1]. If these were the only places where a forward pass incurred time delays, we would obtain the result that a sequential forward pass cannot occur faster than (100 microseconds/matmul) * (200 matmuls) = 20 ms or so. At this speed, we could generate 50 sequential tokens per second, which is not too far from human reading speed. This is why you haven't seen LLMs being served at per-token latencies that are much faster than this.
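The arithmetic behind this bound can be written out as a short sketch. The function and parameter names are illustrative, and the inputs are the rough figures from the paragraph above (100 layers, 2 serial matmuls per layer, 100 microseconds per matmul), so the output is a back-of-the-envelope bound rather than a benchmark.

```python
def sequential_forward_pass(n_layers: int,
                            serial_matmuls_per_layer: int,
                            seconds_per_matmul: float) -> tuple[float, float]:
    """Return (forward-pass latency in seconds, sequential tokens per second).

    Assumes the serial matmuls are the only source of delay, as in the text.
    """
    latency_s = n_layers * serial_matmuls_per_layer * seconds_per_matmul
    return latency_s, 1.0 / latency_s


if __name__ == "__main__":
    latency_s, tokens_per_s = sequential_forward_pass(100, 2, 100e-6)
    print(f"forward pass: ~{latency_s * 1e3:.0f} ms")
    print(f"sequential tokens per second: ~{tokens_per_s:.0f}")
```

Halving the number of serial matmuls, or the time per matmul, doubles the sequential token rate under this simple model.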

We can, of course, process many requests at once in these 20 milliseconds: the bound is not that we can generate only 50 tokens per second, but that we can generate only 50 sequential tokens per second, meaning that the generation of each token needs to know what all the previously generated tokens were. It's much easier to handle requests in parallel, but that has little to do with the "clock speed" of GPUs and much more to do with their FLOP/s capacity.

The human brain is estimated to do the computational equivalent of around 1e15 FLOP/s. This performance is on par with NVIDIA's latest machine learning GPU (the H100), and the brain achieves it using only 20 W of power compared to the 700 W drawn by an H100. In addition, each forward pass of a state-of-the-art language model today likely takes somewhere between 1e11 and 1e12 FLOP, so the computational capacity of the brain alone is sufficient to run inference on these models at speeds of 1k to 10k tokens per second. In short, there's no meaningful sense in which machine learning models today think faster than humans do, though they are certainly much more effective at parallel tasks because we can run them on clusters of multiple GPUs.
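As a quick check of these numbers, the following sketch divides the brain's estimated FLOP/s by the assumed cost of a forward pass, and compares the two power figures. The constant and function names are illustrative, and every input is one of the rough estimates quoted above.

```python
# Estimates quoted in the text; all of them are order-of-magnitude figures.
BRAIN_FLOP_PER_S = 1e15
BRAIN_POWER_W = 20.0
H100_POWER_W = 700.0


def tokens_per_second(flop_per_s: float, flop_per_token: float) -> float:
    """Throughput if all available FLOP/s went into forward passes."""
    return flop_per_s / flop_per_token


if __name__ == "__main__":
    for flop_per_token in (1e11, 1e12):
        rate = tokens_per_second(BRAIN_FLOP_PER_S, flop_per_token)
        print(f"{flop_per_token:.0e} FLOP/token -> ~{rate:,.0f} tokens/s")
    print(f"H100 / brain power draw: ~{H100_POWER_W / BRAIN_POWER_W:.0f}x")
```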

In general, I think it's more sensible for discussion of cognitive capabilities to focus on throughput metrics such as training compute (units of FLOP) and inference compute (units of FLOP/token or FLOP/s). If all the AIs in the world are doing orders of magnitude more arithmetic operations per second than all the humans in the world (8e9 people * 1e15 FLOP/s/person = 8e24 FLOP/s is a big number!) we have a good case for saying that the cognition of AIs has become faster than that of humans in some important sense. However, just comparing the clock speed of a GPU to the synapse firing frequency in the human brain and concluding that AIs think faster than humans is a sloppy argument that neglects how training or inference of ML models on GPUs actually works right now.

[1] While attention and feedforward layers are sequential in the vanilla Transformer architecture, they can in fact be parallelized by adding the outputs of both to the residual stream instead of doing the operations sequentially. This optimization lowers the number of serial operations needed for a forward or backward pass by around a factor of 2 and I assume it's being used in this context.

Well, a common view is that today's AI is only possible with the compute levels that became available recently. This would mean it's already compute limited.

Say for the sake of engagement that GPTs are inefficient by 10 times vs the above estimate, that "AI progress" is leading to more capabilities on equal compute, and other progress is leading to more capabilities with more compute.

So in this scenario each robot needs 8 H100s. You want to "cover the earth". 8 billion people don't cover the earth, so let's assume that 800 billion robots will.

Well how fast will progress happen? Let's assume we started with a baseline of 2 million H100s in 2024, and each year after we add 50 percent more production rate to the year prior, and every 2.5 years we double the performance per unit, then in 94 years we will have built enough H100s to cover the earth with robots.

How long do you think it takes for a factory to build the components used in itself, including the workers? That would be another way to calculate this. If it's 2 years, and we started with 250k capable robots (because of the H100 shortage), then it would take 43 years to reach 800 billion. 22 years if it drops to 1 year.
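The doubling-time figures can be reproduced with a small sketch: count the doublings needed to go from the starting population to the target, then multiply by the doubling time. The function name is illustrative, and the model assumes clean exponential growth with a fixed doubling time.

```python
import math


def years_to_reach(target: float, start: float, doubling_time_years: float) -> float:
    """Years of pure exponential growth needed to go from `start` to `target`."""
    doublings = math.log2(target / start)
    return doublings * doubling_time_years


if __name__ == "__main__":
    target_robots = 800e9   # "cover the earth" figure assumed in the comment
    start_robots = 250e3    # starting population assumed in the comment
    for doubling_time in (2.0, 1.0):
        years = years_to_reach(target_robots, start_robots, doubling_time)
        print(f"doubling every {doubling_time:.0f} y -> ~{years:.0f} years")
```

Going from 250k to 800 billion takes about 21.6 doublings, which is where the 43-year and 22-year figures come from.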

People do bring up biological doubling times but neglect the surrounding ecosystem. When we say "double a robot" we don't mean there's a table of robot parts and one robot assembles another. That would take a few hours at most. It means building all the parts, mining for materials, and also doubling all the machinery used to make everything. Double the factories, double the mines, double the power generators.

You can use China's rate of industrial expansion as a proxy for this. At 15 percent per year, that's a doubling time of 5 years. So if robots double in 2 years, they are more than twice as efficient as China, and remember that China got some one-time bonuses from a large existing population and natural resources like large untapped rivers.
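For reference, the conversion between an annual growth rate and a doubling time can be sketched as follows. Both helper names are illustrative, and the sketch assumes growth compounds once per year.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Doubling time for growth compounding annually at `annual_growth_rate`."""
    return math.log(2) / math.log(1.0 + annual_growth_rate)


def annual_growth_for_doubling(doubling_time: float) -> float:
    """Annual growth rate implied by a given doubling time in years."""
    return 2.0 ** (1.0 / doubling_time) - 1.0


if __name__ == "__main__":
    print(f"15% per year -> doubling in ~{doubling_time_years(0.15):.1f} years")
    print(f"2-year doubling -> ~{annual_growth_for_doubling(2.0):.0%} per year")
```

Under this assumption, a 2-year doubling time corresponds to roughly 41 percent annual growth, compared with the 15 percent used as the proxy above.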

Cellular self-replication uses surrounding materials that are conveniently dissolved in solution. Lichen, for instance, adds only a few mm per year.

"AI progress" is then time-bound to the production of compute and robots. Once you collect some one-time low-hanging-fruit bonus, you make AI progress at a rate equal to log(available compute), where available compute is the compute you can spare that isn't tied up in robots.

What log base do you assume? What's the log base for compute vs. capabilities measured at now? Is a 1-2 year doubling time reasonable, or do you have some data that would support a faster rate of growth? What do you think the "low hanging fruit" bonus is? I assumed 10x above because smaller models currently seem to have to throw away key capabilities to Goodhart benchmarks.

Numbers and the quality of the justification really really matter here. The above says that we shouldn't be worried about foom, but of course good estimates for the parameters would convince me otherwise.