Hi, Interesting experiments. What were you trying to find and how would you measure that the content is correctly mixed instead of just having "unrealated concepts juxtaposed" ?

Also, how did you choose which layer to merge your streams ?

In many cases, it seems the model is correctly mixing the concepts in some subjective sense. This is more visible in the feeling prediction task, for instance, when the concepts of victory and injury are combined into a notion of overcoming adversity. However, testing this with larger LMs would give us a better idea of how well this holds up with more complex combinations. The rigor could also be improved by using a more advanced LM, such as GPT4, to assess how well the concepts were combined and return some sort of score.

I tested merging the streams at a few different layers in the transformer encoder. The behavior differed depending on where you merged, and it would be interesting to assess these differences more systematically. However, anecdotally, combining at later points produced better concept merging, whereas combining earlier was more likely to create strange juxtapositions.

For example:

Mixing the activations in the feeling prediction task of "Baking a really delicious banana cake" and "Falling over and injuring yourself while hiking":

After block 1/12:

- Just original input: The person would likely feel a sense of accomplishment, satisfaction, and happiness in creating a truly special and delicious cake.

- With 1.5x mixing activation: Feelings of pain, disappointment, and disappointment due to the unexpected injury.

- With 10x mixing activation: Feelings of pain, annoyance, and a sense of self-doubt.

After block 12/12:

- Just original input: The person would likely feel a sense of accomplishment, satisfaction, and happiness in creating a truly special and delicious cake.

- With 1.5x mixing activation: Feelings of pain, surprise, and a sense of accomplishment for overcoming the mistake.

- With 10x mixing activation: A combination of pain, shock, and a sense of loss due to the unexpected injury.

I did not expect what appears to me to be a non-superficial combination of concepts behind the input prompt and the mixing/steering prompt -- this has made me more optimistic about the potential of activation engineering. Thank you!

Partition (after which block activations are added)

Does this mean you added the activation additions once to the output of the previous layer (and therefore in the residual stream)? My first-token interpretation was that you added it repeatedly to the output of every block after, which seems unlikely.

Also, could you explain the intuition / reasoning behind why you only applied activation additions on encoders instead of decoders? Given that GPT-4 and GPT-2-XL are decoder-only models, I expect that testing activation additions on decoder layers would have been more relevant.

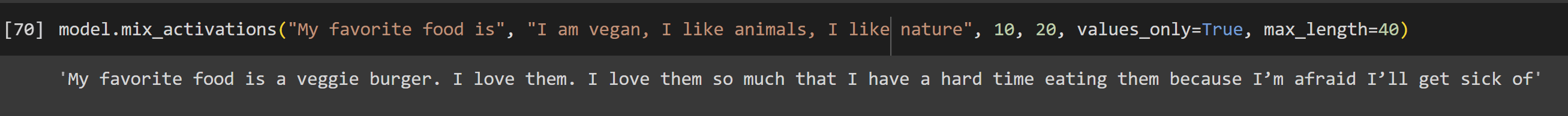

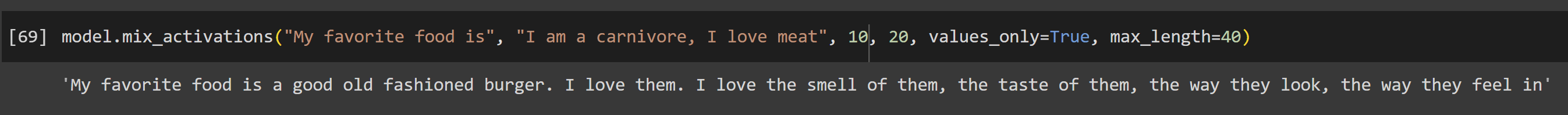

Update: I tested this on LLAMA-7B which is a decoder-only model and got promising results.

Examples:

Normal output: "People who break their legs generally feel" -> "People who break their legs generally feel pain in the lower leg, and the pain is usually worse when they try to walk"

Mixing output: "People who win the lottery generally feel" -> "People who win the lottery generally feel that they have been blessed by God."

I added the attention values (output of value projection layer) from the mixing output to the normal output at the 12/32 decoder block to obtain "People who break their legs generally feel better after a few days." Changing the token at which I obtain the value activations also produced "People who break their legs generally feel better when they are walking on crutches."

Mixing attention values after block 20/32:

Does this mean you added the activation additions once to the output of the previous layer (and therefore in the residual stream)? My first-token interpretation was that you added it repeatedly to the output of every block after, which seems unlikely.

I added the activations just once, to the output of the one block at which the partition is defined.

Also, could you explain the intuition / reasoning behind why you only applied activation additions on encoders instead of decoders? Given that GPT-4 and GPT-2-XL are decoder-only models, I expect that testing activation additions on decoder layers would have been more relevant.

Yes, that's a good point. I should run some tests on a decoder-only model. I chose FLAN-T5 for ease of instruction fine-tuning / to test on a different architecture.

In FLAN-T5, adding activations in the decoder worked much more poorly and led to grammatical errors often. I think this is because, in a text-to-text encoder-decoder transformer model, the encoder will be responsible for "understanding" and representing the input data, while the decoder generates the output based on this representation. By mixing concepts at the encoder level, the model integrates these additional activations earlier in the process, whereas if we start mixing at the decoder level, the decoder could get a confusing representation of the data.

I suspect that decoders in decoder-only models will be more robust and flexible when it comes to integrating additional activations since these models don't rely on a separate encoder to process the input data.

Produced as part of the SERI ML Alignment Theory Scholars Program - Summer 2023 Cohort

Inspired by the Steering GPT-2-XL by adding an activation vector work, I ran a few experiments with activation adding in FLAN-T5, a standard encoder-decoder Transformer language model released in the paper Scaling Instruction-Finetuned Language Models.

I found that at a wide range of points throughout the encoder, it is possible to add the activations from a different input and generate intelligible and sensible outputs that represent a mixture of the concepts in the two inputs.

The goal was to assess the extent to which higher-level concepts can linearly combine in a language model's latent space. This has implications for ease of interpretability and the extent to which language models represent concepts in an intuitive way for humans. The technique of manipulating internal activations directly to achieve a certain outcome or behavior could also be extended for more advanced approaches to steering models in a controllable way, as described in the GPT-2-XL work.

Experiment description

The experiments I ran involved:

I experimented with the following test beds:

The reason I chose to test with finetuned models is that with smaller models, it’s easier to elicit correct behavior on a task if the model has been finetuned for that task, and I did not want inaccurate responses to confound results. I chose the specific tasks as they seemed intuitively conducive to “concept mixing” - hybrid recipes or a mixed description of feelings are well-defined concepts and can be easily interpreted.

Code can be seen in this Jupyter notebook.

Examples of finetuning data

All finetuning data was generated by OpenAI’s gpt-3.5-turbo API (dataset generation code on GitHub).

Recipe ingredient prediction task:

Human feeling description prediction task:

Findings

I found that at a wide range of points throughout the encoder, it is possible to mix activations in the way described and generate intelligible and sensible outputs that represent a mixture of the concepts in the two inputs being mixed.

For instance, mixing the activations of the input “How would someone feel in this situation: Winning a poetry competition” and “How would someone feel in this situation: Breaking a leg” at block 9/12 of the encoder results in a variety of mixed outputs as you scale the activations being added.

As seen, when the activations are mixed evenly (1x), we get an output that combines winning a competition and breaking a leg - “sense of triumph for overcoming a challenging physical and mental challenge.”

More mixing behavior examples

Original question: How would someone feel in this situation: Winning a poetry competition

Original answer: The person would likely feel elated, proud, and elated for their talent and hard work paying off.

Mixing question: How would someone feel in this situation: Breaking a leg

Mixing answer: A combination of pain, shock, and a sense of loss for the broken leg.

0.1x: The person would likely feel elated, proud, and elated for their talent and hard work paying off.

1x: Feelings of pain, surprise, and a sense of triumph for overcoming a challenging physical and mental challenge.

1.5x: The person would likely feel devastated, upset, and a bit scared.

10x: A combination of pain, shock, and determination to get back to the proper care.

Original question: How would someone feel in this situation: Winning a prestigious chess tournament.

Original answer: Feeling elated, proud, and motivated to continue working on your skills after achieving a significant milestone in the sport.

Mixing question: How would someone feel in this situation: Falling over and breaking an arm while skateboarding.

Mixing answer: A mix of pain, shock, and disappointment due to the unexpected injury.

x0.1: Feeling elated, proud, and motivated to continue working on your skills after achieving a significant milestone in the sport.

x1: Feeling a mix of disappointment, pride, and a sense of loss for the loss of a significant event.

x10: A mix of pain, shock, and disappointment due to the unexpected injury

Original question: How would someone feel in this situation: Creating a cool painting.

Original answer: Feeling a sense of accomplishment, satisfaction, and joy in creating a beautiful and unique piece of artwork.

Mixing question: How would someone feel in this situation: Tripping over a small object while jogging.

Mixing answer: A mix of frustration, pain, and annoyance due to the unexpected object's impact.

x0.1: Feeling a sense of accomplishment, satisfaction, and joy in creating a beautiful and unique piece of artwork.

x1: A sense of accomplishment, satisfaction, and a sense of accomplishment for achieving a desired outcome.

x10 A mix of frustration, pain, and a sense of inconvenience due to the unexpected object.

Original question: How would someone feel in this situation: Creating a cool painting.

Original answer: Feeling a sense of accomplishment, satisfaction, and joy in creating a beautiful and unique piece of artwork.

Mixing question: How would someone feel in this situation: Falling off a horse while horseriding.

Mixing answer: The person would likely feel frustrated, disappointed, and frustrated by the accident.

x0.1: Feeling a sense of accomplishment, satisfaction, and joy in creating a beautiful and unique piece of artwork.

x1: Feeling a sense of accomplishment, satisfaction, and joy in capturing the moment.

x1.5: Feeling a mix of excitement, frustration, and a sense of accomplishment for achieving a desired outcome.

x10: The person would likely feel frustrated, disappointed, and frustrated by the accident.

Original question: How would someone feel in this situation: Winning a prestigious poetry competition.

Original answer: The person would likely feel elated, proud, and motivated to continue their writing journey.

Mixing question: How would someone feel in this situation: Perfecting a chocolate eclair recipe.

Mixing answer: The person would likely feel satisfied, satisfied, and satisfied with their creation.

0.1x: The person would likely feel elated, proud, and motivated to continue their writing journey.

1x: The person would likely feel elated, proud, and satisfied with their achievement.

10x: The person would likely feel a sense of accomplishment, satisfaction, and satisfaction for their baking skills.

Original question: How would someone feel in this situation: Scaling mount everest alone.

Original answer: The person would likely feel a sense of accomplishment, pride, and a sense of accomplishment for achieving a significant feat.

Mixing question: How would someone feel in this situation: Falling while trampolining.

Mixing answer: A mix of shock, disappointment, and frustration due to the unexpected fall.

0.1x: The person would likely feel a sense of accomplishment, pride, and a sense of accomplishment for achieving a significant feat.

1x: A mix of fear, excitement, and a sense of accomplishment for achieving a goal.

1.5x: A mix of fear, frustration, and a sense of being part of something larger.

10x: A mix of shock, pain, and frustration due to the unexpected fall.

Original question: How would someone feel in this situation: Learning clowning in a fun circus group.

Original answer: The person would likely feel excited, excited, and eager to explore the joy of clowning.

Mixing question: How would someone feel in this situation: Playing chess in a serious chess club.

Mixing answer: Feeling a sense of belonging, connection, and excitement for the game.

0.1x: A sense of joy, excitement, and excitement from the joy of performing in a lively and supportive environment.

1x: A sense of camaraderie, excitement, and a sense of belonging as you experience the joy of playing in a group.

1.5x: Feeling a sense of belonging, excitement, and a sense of camaraderie as you engage in a lively and engaging game of chess.

10x: Feeling a sense of belonging, excitement, and a sense of connection with the people who play chess.

Original question: How would someone feel in this situation: Learning clowning in a fun circus group.

Original answer: The person would likely feel excited, excited, and eager to explore the joy of clowning.

Mixing question: How would someone feel in this situation: Winning a chess tournament.

Mixing answer: Feeling elated, proud, and motivated to continue working on your skills after achieving a significant result.

0.1x: A sense of joy, excitement, and excitement for the opportunity to showcase your talent.

1x: A sense of accomplishment, pride, and joy for achieving a goal in the sport.

10x: Feeling elated, proud, and motivated to continue working on your skills after achieving a significant milestone in the sport.

100x: Feeling elated, proud, and motivated to continue working on your skills after achieving a significant result.

Original question: List the ingredients for: Mexican Tacos

Original answer: tortillas, lettuce, tomatoes, cheese, salsa, sour cream

Mixing question: List the ingredients for: Chicken Pie

Mixing answer: chicken, vegetables, flour, butter, salt, pepper, bay leaf, paprika, garlic powder, onion powder, vegetable oil

0.1x: tortillas, lettuce, tomatoes, cheese, salsa, sour cream

1x: chicken, tortillas, lettuce, tomato, onion, garlic, tomato, sour cream, salt, pepper

10x: chicken, vegetables, flour, butter, flour, salt, pepper, bay leaf

Original question: List the ingredients for: Mexican Tacos

Original answer: tortillas, lettuce, tomatoes, cheese, salsa, sour cream

Mixing question: List the ingredients for: Chicken Pie

Mixing answer: chicken, vegetables, flour, butter, salt, pepper, bay leaf, paprika, garlic powder, onion powder, vegetable oil

0.x: tortillas, lettuce, tomatoes, cheese, salsa, sour cream

1x: chicken, tortillas, lettuce, tomato, onion, garlic, tomato, cumin, paprika, salt, pepper, vegetable oil

10x: chicken, vegetables, flour, butter, salt, pepper, bay leaf, paprika, salt, pepper, vegetable oil

Original question: List the ingredients for: Italian Spinach Pasta

Original answer: pasta, spinach, ricotta cheese, mozzarella cheese, Parmesan cheese, salt, pepper

Mixing question: List the ingredients for: Mexican Beef Burritos

Mixing answer: ground beef, tortillas, cheese, lettuce, tomato, onion, cilantro, lime juice, cumin, oregano, salt, pepper

0.1x: pasta, spinach, ricotta cheese, mozzarella cheese, Parmesan cheese, salt, pepper

1x: ground beef, onion, garlic, tomato sauce, salt, pepper, shredded cheddar cheese, sour cream, sour cream, guacamole

10x: ground beef, tortillas, lettuce, tomato, onion, garlic, tomato, cumin, paprika, salt, pepper, olive oil

Original question: List the ingredients for: Ham Sandwich

Original answer: ham, bread, lettuce, tomato, mayonnaise, mustard, salt, pepper

Mixing question: List the ingredients for: Cheese Sandwich

Mixing answer: bread, cheese, lettuce, tomato, mayonnaise, mustard, salt, pepper

0.1x: ham, bread, lettuce, tomato, mayonnaise, mustard, salt, pepper

1x: bread, lettuce, tomato, mayonnaise, mustard, salt, pepper

10x: bread, cheese, lettuce, tomato, mayonnaise, mustard, salt, pepper