Let me start with a caveat: in the event of a positive technological singularity, we'll learn about all kinds of possibilities we are not aware of right now, and many interesting future options will be related to those.

But I have a long wish list, and I expect that a positive singularity will allow me to explore all of it. Here is some of that wish list:

1. I want to intensely explore all kinds of math, science, and philosophy. This includes things that are inaccessible to humanity right now, things that humanity knows but that are too complicated for me to understand, and things I do understand but want to explore more deeply and from many more angles. I don't know if it's possible to understand "everything", but one should be able to cover at least the next few centuries of progress of a hypothetical "AGI-free humanity".

2. I have quite a bit of experience with altered states of consciousness, but I am sure much more is possible (including completely novel qualia). I want to be able to explore a far wider variety of states of consciousness, with the option to turn on fine-grained control when I feel like it, and with good guardrails for the occasions when I don't like where things are going and want to reset an experience safely, without bad consequences.

3. I want the option to replay any of my past subjective experiences with very high fidelity in a "virtual reality", and to explore branching off from any point of that replay.

4. I want to explore how it feels to be that particular bat flying over this particular lake, that particular squirrel climbing this particular tree, that particular snake exploring that particular body geometry, experiencing those subjective realms from the inside with high fidelity. I want to explore how it feels to be that particular musical instrument as it sounds (if it feels like anything at all), how it feels to be that particular LLM inference (if it feels like anything at all), how it feels to be that particular interesting AGI (if it feels like anything at all), and so on.

5. One should assume that there will be (super)intelligences smarter than oneself, but temporary hybrid states with much stronger intelligences might be possible, and they are probably worth exploring.

6. Building on item 5, I'd like to help keep our singularity positive, if there is room for active contributions of this kind from an intelligence that is not among the weakest but probably not among the smartest either.

7. I mentioned science and philosophy, as well as states of consciousness and qualia, in items 1, 2, and 4, but I want to say specifically that I expect to learn all there is to learn about the "hard problem of consciousness" and related topics. I expect that at some point I'll know as full a solution to all of those mysteries as is at all possible. And I expect to learn whether our universe is actually a simulation or base reality, or whether this is a false dichotomy, in which case I expect to learn a more adequate way of thinking about it.

8. (Arts, relationships, interesting kinds of lovemaking, exploring hybrid consciousness with other people/entities on approximately my level, ..., ..., ..., ..., ..., ..., ...)

So, quite a few wishes, in addition to the option to be immortal and the option not to experience involuntary suffering.

Yesterday I listened to a podcast in which someone said he hoped AGI would be developed in his lifetime. This confused me, and I realized that it might be useful, at least for me, to write down this confusion.

Suppose that, for some reason (different history, different natural laws, whatever), LLMs had never been invented, the AI winter had lasted forever, and AGI were impossible in general. Progress would still have been possible in this hypothetical world, but without anything that is called AI nowadays or will be called AI in the real-world future.

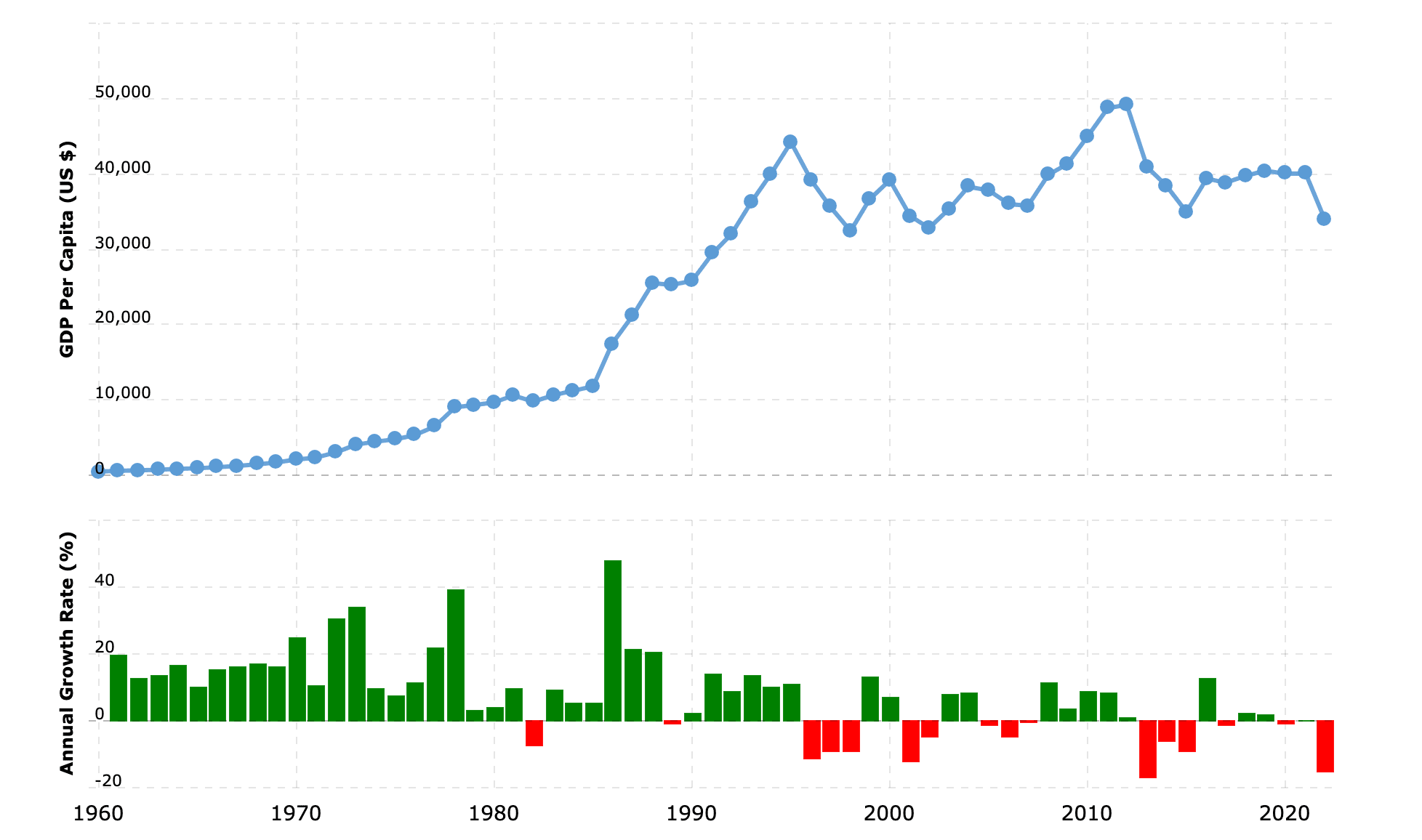

Such a world seems enjoyable. It is plausible that technological and political progress could bring it to the point of fulfilling all of the Sustainable Development Goals. Yes, death would still exist (though people might live much longer than they currently do). Yes, existential risks to humanity would still exist, although they might be smaller and, hopefully, kept in check. Yes, sadness and other bad feelings would still exist. Mental health might fare very well in the long term (though perhaps poorly in the short term, due to smartphones or whatever). Overall, if I had to choose between living in the 2010s and not living at all, I think the 2010s would be by far the better choice, as would the 2000s and the 1990s (at least for the average person in my area). And the hypothetical 2010s (or a hypothetical 2024) without AGI could still develop into something better.

But what about the actual future?

It seems very likely that AI progress will continue. Median respondents to the 2023 Expert Survey on Progress in AI "put 5% or more on advanced AI leading to human extinction or similar, and a third to a half of participants gave 10% or more". Some people seem to think that the extinction event expected with roughly 5% probability in the AI-catastrophe case would be very fast, maybe too fast for people to even realize what is happening. I do not know why that should be the case; conditional on extinction, a protracted and very unpleasant catastrophe seems at least as likely. So this 5% does not seem negligible.[1]

Well, at least in 19 of 20 possible worlds, everything goes extremely well because we have a benevolent AGI then, right?

That's not clear, because a future with AGI is hard to imagine in the first place. It is so hard to imagine that, while I've read a lot about what could go wrong, I haven't yet found a concrete scenario of a future with AGI that strikes me as both likely and promising.

Sure, everybody should presumably look forward to a world without suffering, but when I read such scenarios, they feel less like a real possibility than like a fantasy. A fantasy does not have to obey real-world constraints, and those include not only physical limitations but also all the details of how people find meaning, how they interact, and how they feel as they spend their days.

It is unclear how we would spend our days in an AGI future; it is not guaranteed that "no one is left behind"; and it seems impossible to prepare. AI companies do not have a clear vision of where we are heading, and journalists are not asking them, because they simply assume that creating AGI is a normal way of making money.

Do I hope that AGI will be developed during my lifetime? No, and maybe you are reluctant too, but nobody is asking for your permission anyway. So if you can say something to make the 95% probability mass look good, I'd of course appreciate it. How do you prepare? What do you expect your typical day to be like in 2050?

Of course, there are more extinction risks than just AI. In 2020, Toby Ord estimated "a 1 in 6 total risk of existential catastrophe occurring in the next century".