We see a massive drop in score from the 22nd to the 23rd project. Can you explain why this is occurring?

TL;DR: Great question! I think it mostly means that we don't have enough data to say much about these projects. So donors who've made early donations to them can register those donations and boost the projects' scores.

- The donor score depends on the size of the donations and how early they came in the history of the project (plus the retroactive evaluation). So the top donors in particular have made many early, big, and sometimes public grants to projects that panned out well, which is why they are top donors.

- What influences the support score is not the donor score itself but the inverse rank of the donor in the ranking ordered by donor score. (This corrects the outsized influence that rich donors would otherwise have: I assume that wealth is Pareto-distributed while expertise probably isn't, and that the two are not correlated to quite that extreme a degree.)

- But if a single donor has more than 90% of the influence on a project's score, they are ignored, because that typically means that we don't have enough data to score the project. We don't want a single donor to wield so much power.

Taken together, our top donors have (by design) the greatest influence over project scores, but they are also at a greater risk of ending up with > 90% of the influence over a project's score, especially if the project has so far not found many other donors who've been willing to register their donations. So the contributions of top donors are also at greater risk of being ignored until more donors confirm the top donors' donation decisions.
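To make the interaction concrete, here is a minimal Python sketch of the 90% cutoff. It assumes that a donor's "influence" is their share of the project's summed, rank-weighted contributions, and that donors above the threshold are dropped in a single pass; the function name `support_score` and the example numbers are hypothetical, not GiveWiki's actual code.

```python
def support_score(contributions: dict[str, float], threshold: float = 0.9) -> float:
    """Sum the weighted contributions to one project, ignoring any donor
    whose share of the project's total exceeds `threshold`.

    `contributions` maps a donor name to that donor's (hypothetical)
    rank-weighted contribution to the project.
    """
    total = sum(contributions.values())
    if total == 0:
        return 0.0
    # Keep only donors whose share stays at or below the cutoff;
    # a donor with *more than* 90% of the influence is ignored.
    kept = [c for c in contributions.values() if c / total <= threshold]
    return float(sum(kept))


# Several confirming donors: nobody exceeds 90%, so the full score survives.
print(support_score({"top_donor": 60.0, "donor_b": 25.0, "donor_c": 15.0}))  # 100.0
# One top donor with 95% of the influence is dropped; only 5.0 remains.
print(support_score({"top_donor": 95.0, "donor_b": 5.0}))  # 5.0
# A project backed by a single donor falls to zero.
print(support_score({"top_donor": 95.0}))  # 0.0
```

Under these assumptions, a project doesn't lose score gradually as it becomes more dependent on one donor; it loses almost everything once that donor crosses the threshold, which is one way a sharp step in the ranking can arise.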

OK, so the support score is influenced non-linearly by donor score. Is there a particular donor who donated to the 22 highest-ranked projects but not to the projects ranked 23 or lower?

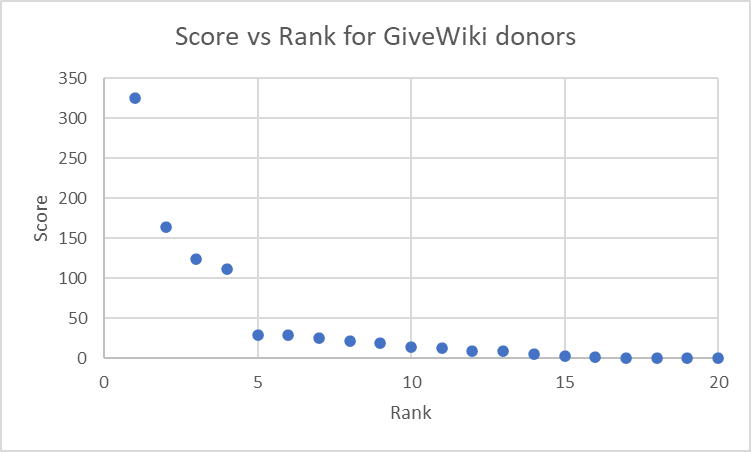

I have graphed donor score vs rank for the top GiveWiki donors. Does this include all donors in the calculation or are there hidden donors?

> Does this include all donors in the calculation or are there hidden donors?

Donors have a switch in their profiles where they can determine whether they want to be listed or not. The top three in the private, complete listing are Jaan Tallinn, Open Phil, and the late Future Fund, whose public grants I've imported. The total ranking lists 92 users.

But I don't think that's core to understanding the step down. I went through the projects around the threshold before I posted my last comment, and I think it's really the 90% cutoff that causes it, not a big donor who donated to the first 22 but not to the rest.

There are plenty of projects in the tail that have received donations from a single high-scoring donor but little else, so that this donor has > 90% of the influence over the project's score and will be ignored until more donors register donations to it.

> OK, so the support score is influenced non-linearly by donor score.

By the inverse rank in the ranking sorted by donor score. So the top donor and the second donor differ by exactly 1 in the influence they have.
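A minimal sketch of that rank weighting in Python. It assumes that with N donors the top donor gets weight N, the next N - 1, and so on down to 1, which matches the difference of 1 between neighbouring donors; the function name, the tie-breaking rule, and the example scores are hypothetical.

```python
def inverse_rank_weights(donor_scores: dict[str, float]) -> dict[str, int]:
    """Replace each donor's score with its inverse rank.

    With N donors, the highest-scoring donor gets weight N, the next N - 1,
    and so on down to 1, so neighbouring donors always differ by exactly 1
    no matter how far apart their raw scores are. Ties are broken by name
    so the result is deterministic (an assumption for this sketch).
    """
    ranked = sorted(donor_scores, key=lambda d: (-donor_scores[d], d))
    n = len(ranked)
    return {donor: n - i for i, donor in enumerate(ranked)}


# Pareto-like raw scores: each donor's score is ten times the next one's.
scores = {"donor_a": 1000.0, "donor_b": 100.0, "donor_c": 10.0}
print(inverse_rank_weights(scores))  # {'donor_a': 3, 'donor_b': 2, 'donor_c': 1}
```

The raw scores span a factor of 100, but the weights span only a factor of 3. That compression is what keeps a very large (and therefore high-scoring) donor from dominating purely through the size of their grants.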

It's worth specifying "AI GiveWiki" in the title. These seem to be recommendations GIVEN a decision that AI safety is the target.

It says “AI Safety” later in the title. Do you think I should mention it earlier, like “The AI Safety GiveWiki's Top Picks for the Giving Season of 2023”?

Unsure. It's probably reasonable to assume around here that it's all AI safety all the time. "GiveWiki" as the authority for the picker, to me, implied that this was from a broader universe of giving, and this was the AI Safety subset. No biggie, but I'm sad there isn't more discussion about donations to AI safety research vs more prosaic suffering-reduction in the short term.

> "GiveWiki" as the authority for the picker, to me, implied that this was from a broader universe of giving, and this was the AI Safety subset.

Could be… That's not so wrong either. We rather artificially limited it to AI safety for the moment to have a smaller, more sharply defined target audience. It also had the advantage that we could recruit our evaluators from our own networks. But ideally I'd like to find owners for other cause areas too and then widen the focus of GiveWiki accordingly. The other cause area where I have a relevant network is animal rights, but we already have ACE there, so GiveWiki wouldn't add so much on the margin. One person is interested in either finding someone to take responsibility for a global coordination/peace-building branch or taking it on themselves, but they probably won't have the time. That would be excellent though!

> No biggie, but I'm sad there isn't more discussion about donations to AI safety research vs more prosaic suffering-reduction in the short term.

Indeed! Rethink Priorities has made some progress on that. I need to dig into the specifics more to see whether I should update on it. The particular parameters they discuss in the article haven't been central to my own reasoning on this question, but it's quite possible that animal rights wins out even more clearly on the basis of the parameters I've been using.

Summary: Plenty of highly committed altruists are pouring into AI safety. But they are often not well funded, and the donors who want to support people like them often lack the network and expertise to make confident decisions. The GiveWiki currently aggregates the donations of 220 donors to 88 projects – almost all of them either fully focused on AI safety (e.g., Apart Research) or also working on AI safety (e.g., Pour Demain). It uses this aggregation to determine which projects are most widely trusted among the donors with the strongest donation track records. It is thus a reflection of expert judgment in the field and can serve as a guide for non-expert donors. Our current top three projects are FAR AI, the Simon Institute for Longterm Governance, and the Alignment Research Center.

Introduction

Throughout the year, we’ve been hard at work scraping together all the donation data we could get. One big source has been Vipul Naik’s excellent repository of public donation data. We also imported public grant data from Open Phil, the EA Funds, the Survival and Flourishing Fund, and a certain defunct entity. Additionally, 36 donors have entered their donation track records themselves (or sent them to me for importing).

Add some retrospective evaluations, and you get a ranking of 92 top donors (who have donor scores > 0), of whom 22 are listed publicly, and a ranking of 33 projects with support scores > 0 (after rounding to integers).

(The donor score is a measure of the track record of a donor, and the support score is a measure of the support that a project has received from donors, weighted by the donor score among other factors. So the support score is the aggregate measure of the trust of the donors with the strongest donation track records.)
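Combining the pieces described in the discussion of the score drop, one hedged way to write the support score S_p of a project p is below. All symbols are assumptions for illustration, not GiveWiki's published formula: N is the number of donors with a donor score > 0, r_d is donor d's rank by donor score (1 = top), c_{d,p} stands in for the size-and-earliness factors of d's donations to p, and the unspecified "other factors" are not modelled.

```latex
w_d = N - r_d + 1, \qquad
s_{d,p} = \frac{w_d \, c_{d,p}}{\sum_{d'} w_{d'} \, c_{d',p}}, \qquad
S_p = \sum_{d \,:\, s_{d,p} \le 0.9} w_d \, c_{d,p}
```

Here w_d is the inverse-rank weight (neighbouring donors differ by 1), s_{d,p} is donor d's share of the influence on project p, and any donor with more than 90% of that influence is left out of the sum.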

The Current Top Recommendations

You can find the full ranking on the GiveWiki projects page.

Limitations:

We hope that our data will empower you to find new giving opportunities and make great donations to AI safety this year!

If it did, please register your donation and select “Our top project ranking” under “recommender,” so that we can track our impact.