The natural abstraction hypothesis says that

- Our physical world abstracts well: for most systems, the information relevant “far away” from the system (in various senses) is much lower-dimensional than the system itself. These low-dimensional summaries are exactly the high-level abstract objects/concepts typically used by humans.

- These abstractions are “natural”: a wide variety of cognitive architectures will learn to use approximately the same high-level abstract objects/concepts to reason about the world.

If true, the natural abstraction hypothesis would dramatically simplify AI and AI alignment in particular. It would mean that a wide variety of cognitive architectures will reliably learn approximately-the-same concepts as humans use, and that these concepts can be precisely and unambiguously specified.

Ultimately, the natural abstraction hypothesis is an empirical claim, and will need to be tested empirically. At this point, however, we lack even the tools required to test it. This post is an intro to a project to build those tools and, ultimately, test the natural abstraction hypothesis in the real world.

Background & Motivation

One of the major conceptual challenges of designing human-aligned AI is the fact that human values are a function of humans’ latent variables: humans care about abstract objects/concepts like trees, cars, or other humans, not about low-level quantum world-states directly. This leads to conceptual problems of defining “what we want” in physical, reductive terms. More generally, it leads to conceptual problems in translating between human concepts and concepts learned by other systems - e.g. ML systems or biological systems.

If true, the natural abstraction hypothesis provides a framework for translating between high-level human concepts, low-level physical systems, and high-level concepts used by non-human systems.

The foundations of the framework have been sketched out in previous posts.

What is Abstraction? introduces the mathematical formulation of the framework and provides several examples. Briefly: the high-dimensional internal details of far-apart subsystems are independent given their low-dimensional “abstract” summaries. For instance, the Lumped Circuit Abstraction abstracts away all the details of molecule positions or wire shapes in an electronic circuit, and represents the circuit as components each summarized by some low-dimensional behavior - like V = IR for a resistor. This works because the low-level molecular motions in a resistor are independent of the low-level molecular motions in some far-off part of the circuit, given the high-level summary. All the rest of the low-level information is “wiped out” by noise in low-level variables “in between” the far-apart components.

Chaos Induces Abstractions explains one major reason why we expect low-level details to be independent (given high-level summaries) for typical physical systems. If I have a bunch of balls bouncing around perfectly elastically in a box, then the total energy, number of balls, and volume of the box are all conserved, but chaos wipes out all other information about the exact positions and velocities of the balls. My “high-level summary” is then the energy, number of balls, and volume of the box; all other low-level information is wiped out by chaos. This is exactly the abstraction behind the ideal gas law. More generally, given any uncertainty in initial conditions - even very small uncertainty - mathematical chaos “amplifies” that uncertainty until we are maximally uncertain about the system state… except for information which is perfectly conserved. In most dynamical systems, some information is conserved, and the rest is wiped out by chaos.
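A minimal sketch of the "chaos amplifies small uncertainty" step, using the logistic map as a stand-in chaotic system rather than the bouncing-ball gas described above. The map, the parameter r = 4, and the function names are all illustrative assumptions, not taken from the original post. Two trajectories start 1e-10 apart; the gap grows roughly exponentially until it is comparable to the size of the state space, at which point the initial condition tells us essentially nothing about the current state. Unlike the gas, this toy has no conserved quantity, so it only illustrates the "wiped out" half of the story.

```python
def logistic_trajectory(x0, steps, r=4.0):
    """Iterate the logistic map x -> r * x * (1 - x), returning all visited states."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs


def divergence(x0=0.2, eps=1e-10, steps=100):
    """Absolute gap at each step between two trajectories that start eps apart."""
    a = logistic_trajectory(x0, steps)
    b = logistic_trajectory(x0 + eps, steps)
    return [abs(u - v) for u, v in zip(a, b)]


if __name__ == "__main__":
    gaps = divergence()
    for step in (0, 10, 20, 30, 40, 50):
        print(f"step {step:3d}: gap = {gaps[step]:.3e}")
```

The gap can at most quadruple per step for this map, so it stays tiny for the first handful of iterations and only later saturates at order one.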

Anatomy of a Gear: What makes a good “gear” in a gears-level model? A physical gear is a very high-dimensional object, consisting of huge numbers of atoms rattling around. But for purposes of predicting the behavior of the gearbox, we need only a one-dimensional summary of all that motion: the rotation angle of the gear. More generally, a good “gear” is a subsystem which abstracts well - i.e. a subsystem for which a low-dimensional summary can contain all the information relevant to predicting far-away parts of the system.

Science in a High Dimensional World: Imagine that we are early scientists, investigating the mechanics of a sled sliding down a slope. The number of variables which could conceivably influence the sled’s speed is vast: angle of the hill, weight and shape and material of the sled, blessings or curses laid upon the sled or the hill, the weather, wetness, phase of the moon, latitude and/or longitude and/or altitude, astrological motions of stars and planets, etc. Yet in practice, just a relatively-low-dimensional handful of variables suffices - maybe a dozen. A consistent sled-speed can be achieved while controlling only a dozen variables, out of literally billions. And this generalizes: across every domain of science, we find that controlling just a relatively-small handful of variables is sufficient to reliably predict the system’s behavior. Figuring out which variables is, in some sense, the central project of science. This is the natural abstraction hypothesis in action: across the sciences, we find that low-dimensional summaries of high-dimensional systems suffice for broad classes of “far-away” predictions, like the speed of a sled.

The Problem and The Plan

The natural abstraction hypothesis can be split into three sub-claims, two empirical, one mathematical:

- Abstractability: for most physical systems, the information relevant “far away” can be represented by a summary much lower-dimensional than the system itself.

- Human-Compatibility: These summaries are the abstractions used by humans in day-to-day thought/language.

- Convergence: a wide variety of cognitive architectures learn and use approximately-the-same summaries.

Abstractability and human-compatibility are empirical claims, which ultimately need to be tested in the real world. Convergence is a more mathematical claim, i.e. it will ideally involve proving theorems, though empirical investigation will likely still be needed to figure out exactly which theorems.

These three claims suggest three different kinds of experiment to start off:

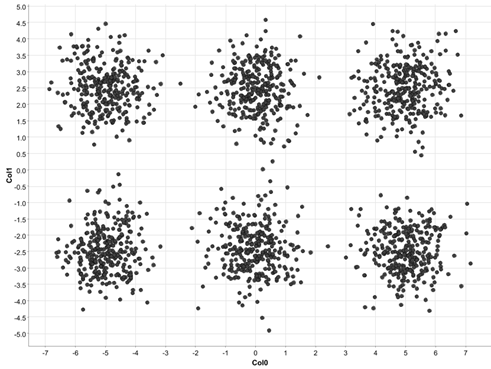

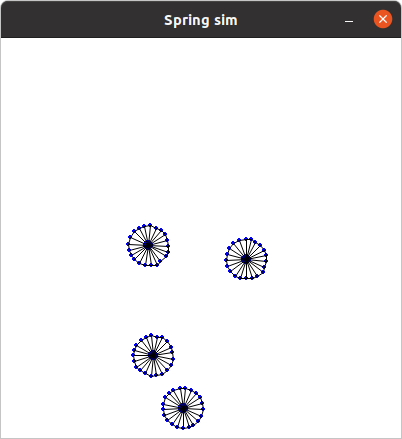

- Abstractability: does reality abstract well? Corresponding experiment type: run a reasonably-detailed low-level simulation of something realistic; see if info-at-a-distance is low-dimensional.

- Human-Compatibility: do these match human abstractions? Corresponding experiment type: run a reasonably-detailed low-level simulation of something realistic; see if info-at-a-distance recovers human-recognizable abstractions.

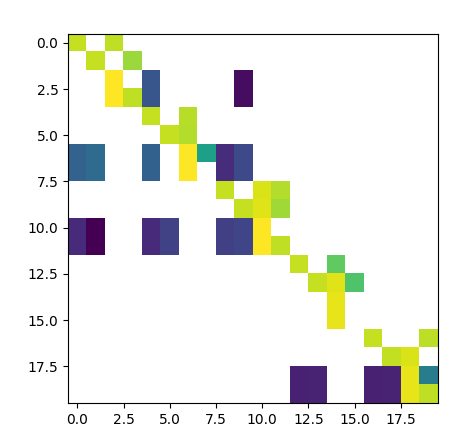

- Convergence: are these abstractions learned/used by a wide variety of cognitive architectures? Corresponding experiment type: train a predictor/agent against a simulated environment with known abstractions; look for a learned abstract model.

The first two experiments both require computing information-relevant-at-a-distance in a reasonably-complex simulated environment. The “naive”, brute-force method for this would not be tractable; it would require evaluating high-dimensional integrals over “noise” variables. So the first step will be to find practical algorithms for directly computing abstractions from low-level simulated environments. These don’t need to be fully-general or arbitrarily-precise (at least not initially), but they need to be general enough to apply to a reasonable variety of realistic systems.
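As a rough illustration of what "computing information-relevant-at-a-distance" could look like in the easiest possible case, here is a sketch for a linear-Gaussian toy model. This is an assumption-laden stand-in, not the algorithm the project intends to develop. For jointly Gaussian variables with an invertible near-covariance, the far variables depend on the near ones only through a linear map whose rank equals the rank of the cross-covariance, so counting its non-negligible singular values gives the dimension of a sufficient summary. The function names, sizes, and tolerance below are hypothetical.

```python
import numpy as np


def summary_dimension(cross_cov, rel_tol=1e-8):
    """Count singular values of the near/far cross-covariance above rel_tol * largest.

    In the jointly Gaussian toy setting this count is the dimension of a
    sufficient summary of the near variables for predicting the far ones.
    """
    singular_values = np.linalg.svd(cross_cov, compute_uv=False)
    if singular_values[0] == 0.0:
        return 0
    return int(np.sum(singular_values > rel_tol * singular_values[0]))


def make_toy_cross_cov(n_near=50, n_far=50, n_bottleneck=2, seed=0):
    """Cross-covariance Cov(X_far, X_near) for X_far = B @ A @ X_near + noise.

    X_near is assumed to have identity covariance and the noise is independent
    of X_near, so Cov(X_far, X_near) = B @ A exactly; no sampling is involved.
    """
    rng = np.random.default_rng(seed)
    A = rng.normal(size=(n_bottleneck, n_near))  # near variables -> bottleneck
    B = rng.normal(size=(n_far, n_bottleneck))   # bottleneck -> far variables
    return B @ A


if __name__ == "__main__":
    cross_cov = make_toy_cross_cov()
    print("cross-covariance shape:", cross_cov.shape)
    print("estimated summary dimension:", summary_dimension(cross_cov))
```

With the default arguments, the 50-dimensional near system should be reported as having a 2-dimensional summary, matching the bottleneck built into the toy. Realistic simulations are nonlinear and non-Gaussian, which is exactly why more practical algorithms are needed.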

Once we have algorithms capable of directly computing the abstractions in a system, training a few cognitive models against that system is an obvious next step. This raises another algorithmic problem: how do we efficiently check whether a cognitive system has learned particular abstractions? Again, this doesn’t need to be fully general or arbitrarily precise. It just needs to be general enough to use as a tool for the next step.
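One crude candidate for such a check is a linear probe: fit a least-squares readout from a model's internal representation to the known abstraction and see how well it predicts on held-out samples. This is a standard technique swapped in purely for illustration, not a method proposed in this post, and it would miss abstractions that are encoded nonlinearly. The data generator and all names below are hypothetical.

```python
import numpy as np


def linear_probe_r2(representations, targets, train_fraction=0.5):
    """Held-out R^2 of a least-squares linear readout from representations to targets.

    representations: (n_samples, n_features) array of a model's internal activations.
    targets: (n_samples,) array holding the known abstraction's value per sample.
    """
    n_train = int(len(targets) * train_fraction)
    # Append a constant column so the probe can fit an intercept.
    X = np.hstack([representations, np.ones((len(targets), 1))])
    weights, *_ = np.linalg.lstsq(X[:n_train], targets[:n_train], rcond=None)
    predictions = X[n_train:] @ weights
    residual = np.sum((targets[n_train:] - predictions) ** 2)
    total = np.sum((targets[n_train:] - targets[n_train:].mean()) ** 2)
    return 1.0 - residual / total


def make_toy_data(n_samples=1000, n_features=8, seed=0):
    """Hypothetical data: one representation encodes the abstraction, one does not."""
    rng = np.random.default_rng(seed)
    abstraction = rng.normal(size=n_samples)  # known ground-truth summary
    mixing = rng.normal(size=n_features)
    noise = 0.01 * rng.normal(size=(n_samples, n_features))
    encodes = np.outer(abstraction, mixing) + noise
    unrelated = rng.normal(size=(n_samples, n_features))
    return abstraction, encodes, unrelated


if __name__ == "__main__":
    abstraction, encodes, unrelated = make_toy_data()
    print("probe R^2, encoding representation: ", linear_probe_r2(encodes, abstraction))
    print("probe R^2, unrelated representation:", linear_probe_r2(unrelated, abstraction))
```

A held-out R^2 near 1 suggests the abstraction is linearly recoverable from the representation, while a value near 0 suggests it is not, at least not linearly.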

The next step is where things get interesting. Ideally, we want general theorems telling us which cognitive systems will learn which abstractions in which environments. As of right now, I’m not even sure exactly what those theorems should say. (There are some promising directions, like modular variation of goals, but the details are still pretty sparse and it’s not obvious whether these are the right directions.) This is the perfect use-case for a feedback loop between empirical and theoretical work:

- Try training various cognitive systems in various environments, see what abstractions they learn.

- Build a model which matches the empirical results, then come up with new tests for that model.

- Iterate.

Along the way, it should be possible to prove theorems on what abstractions will be learned in at least some cases. Experiments should then probe cases not handled by those theorems, enabling more general models and theorems, eventually leading to a unified theory.

(Of course, in practice this will probably also involve a larger feedback loop, in which lessons learned training models also inform new algorithms for computing abstractions in more-general environments, and for identifying abstractions learned by the models.)

The end result of this process, the holy grail of the project, would be a system which provably learns all learnable abstractions in a fairly general class of environments, and represents those abstractions in a legible way. In other words: it would be a standardized tool for measuring abstractions. Stick it in some environment, and it finds the abstractions in that environment and presents a standard representation of them. Like a thermometer for abstractions.

Then, the ultimate test of the natural abstraction hypothesis would just be a matter of pointing the abstraction-thermometer at the real world, and seeing if it spits out human-recognizable abstract objects/concepts.

Summary

The natural abstraction hypothesis suggests that most high-level abstract concepts used by humans are “natural”: the physical world contains subsystems for which all the information relevant “far away” can be contained in a (relatively) low-dimensional summary. These subsystems are exactly the high-level “objects” or “categories” or “concepts” we recognize in the world. If true, this hypothesis would dramatically simplify the problem of human-aligned AI. It would imply that a wide range of architectures will reliably learn similar high-level concepts from the physical world, that those high-level concepts are exactly the objects/categories/concepts which humans care about (i.e. inputs to human values), and that we can precisely specify those concepts.

The natural abstraction hypothesis is mainly an empirical claim, which needs to be tested in the real world.

My main plan for testing this involves a feedback loop between:

- Calculating abstractions in (reasonably-realistic) simulated systems

- Training cognitive models on those systems

- Empirically identifying patterns in which abstractions are learned by which cognitive models in which environments

- Proving theorems about which abstractions are learned by which cognitive models in which environments.

The holy grail of the project would be an “abstraction thermometer”: an algorithm capable of reliably identifying the abstractions in an environment and representing them in a standard format. In other words, a tool for measuring abstractions. This tool could then be used to measure abstractions in the real world, in order to test the natural abstraction hypothesis.

I plan to spend at least the next six months working on this project. Funding for the project has been supplied by the Long-Term Future Fund.

I'm glad you are thinking about this. I am very optimistic about AI alignment research along these lines. However, I'm inclined to think that the strong form of the natural abstraction hypothesis is pretty much false. Different languages and different cultures, and even different academic fields within a single culture (or different researchers within a single academic field), come up with different abstractions. See for example lsusr's posts on the color blue or the flexibility of abstract concepts. (The Whorf hypothesis might also be worth looking into.)

This is despite humans having pretty much identical cognitive architectures (and it seems unrealistic to assume that we can create a de novo AGI whose cognitive architecture is as similar to a human brain as human brains are to each other). Perhaps you could argue that some human-generated abstractions are "natural" and others aren't, but that leaves the problem of ensuring that the human operating our AI is making use of the correct, "natural" abstractions in their own thinking. (Some ancient cultures lacked a concept of the number 0. From our perspective, and that of a superintelligent AGI, 0 is a 'natural' abstraction. But there could be ways in which the superintelligent AGI invents 'natural' abstractions that we haven't yet invented, such that we are living in a "pre-0 culture" with respect to those abstractions, and this would cause an ontological mismatch between us and our AGI.)

But I'm still optimistic about the overall research direction. One reason is that if your dataset contains human-generated artifacts, e.g. pictures with captions written in English, many unsupervised learning methods will naturally be incentivized to learn English-language abstractions to minimize reconstruction error. (For example, if we're using self-supervised learning, our system will be incentivized to correctly predict the English-language caption beneath an image, which essentially requires the system to understand the picture in terms of English-language abstractions. This incentive would also arise for the more structured supervised learning task of image captioning, but the results might not be as robust.)

Social sciences are a notable exception here. And I think social sciences (or even humanities) may be the best model for alignment--'human values' and 'corrigibility' seem related to the subject matter of these fields.

Anyway, I had a few other comments on the rest of what you wrote, but I realized what they all boiled down to was me having a different set of abstractions in this domain than the ones you presented. So as an object lesson in how people can have different abstractions (heh), I'll describe my abstractions (as they relate to the topic of abstractions) and then explain how they relate to some of the things you wrote.

I'm thinking in terms of minimizing some sort of loss function that looks vaguely like

`reconstruction_error + other_stuff`

where `reconstruction_error` is a measure of how well we're able to recreate observed data after running it through our abstractions, and `other_stuff` is the part that is supposed to induce our representations to be "useful" rather than just "predictive". You keep talking about conditional independence as the be-all-end-all of abstraction, but from my perspective, it is an interesting (potentially novel!) option for the `other_stuff` term in the loss function. The same way dropout was once an interesting and novel `other_stuff` which helped supervised learning generalize better (making neural nets "useful" rather than just "predictive" on their training set).

The most conventional choice for `other_stuff` would probably be some measure of the complexity of the abstraction. E.g. a clustering algorithm's complexity can be controlled through the number of centroids, or an autoencoder's complexity can be controlled through the number of latent dimensions. Marcus Hutter seems to be as enamored with compression as you are with conditional independence, to the point where he created the Hutter Prize, which offers half a million dollars to the person who can best compress a 1GB file of Wikipedia text.

Another option for `other_stuff` would be denoising, as we discussed here.

You speak of an experiment to "run a reasonably-detailed low-level simulation of something realistic; see if info-at-a-distance is low-dimensional". My guess is if the `other_stuff` in your loss function consists only of conditional independence things, your representation won't be particularly low-dimensional--your representation will see no reason to avoid the use of 100 practically-redundant dimensions when one would do the job just as well.

Similarly, you speak of "a system which provably learns all learnable abstractions", but I'm not exactly sure what this would look like, seeing as how for pretty much any abstraction, I expect you can add a bit of junk code that marginally decreases the reconstruction error by overfitting some aspect of your training set. Or even junk code that never gets run / other functional equivalences.

The right question in my mind is how much info at a distance you can get for how many additional dimensions. There will probably be some number of dimensions N such that giving your system more than N dimensions to play with for its representation will bring diminishing returns. However, that doesn't mean the returns will go to 0, e.g. even after you have enough dimensions to implement the ideal gas law, you can probably gain a bit more predictive power by checking for wind currents in your box. See the elbow method (though, the existence of elbows isn't guaranteed a priori).
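A rough sketch of that trade-off, assuming a purely linear representation (PCA) and synthetic data with three true latent factors. Both are simplifying assumptions made for illustration, and every name and number here is hypothetical. It computes reconstruction error as a function of the number of dimensions, then evaluates a loss of the form `reconstruction_error + other_stuff`, where `other_stuff` is a fixed penalty per dimension.

```python
import numpy as np


def reconstruction_errors(data):
    """Squared reconstruction error (summed over features, averaged over samples)
    when keeping the top-k principal components, for k = 0, 1, ..., min(data.shape).
    Entry k of the result corresponds to a k-dimensional representation."""
    centered = data - data.mean(axis=0)
    singular_values = np.linalg.svd(centered, compute_uv=False)
    energy = singular_values ** 2
    # Error with k components = total energy in the discarded components.
    tail = np.concatenate([np.cumsum(energy[::-1])[::-1], [0.0]])
    return tail / len(data)


def penalized_loss(errors, penalty_per_dimension):
    """reconstruction_error + other_stuff, with other_stuff = penalty * dimensions."""
    return errors + penalty_per_dimension * np.arange(len(errors))


def make_toy_data(n_samples=1000, n_observed=20, n_latent=3, noise=0.05, seed=0):
    """Hypothetical data: n_latent true factors, linearly mixed, plus small noise."""
    rng = np.random.default_rng(seed)
    latent = rng.normal(size=(n_samples, n_latent))
    mixing = rng.normal(size=(n_latent, n_observed))
    return latent @ mixing + noise * rng.normal(size=(n_samples, n_observed))


if __name__ == "__main__":
    errors = reconstruction_errors(make_toy_data())
    for k in range(6):
        print(f"k = {k}: reconstruction error = {errors[k]:.4f}")
    print("best k under penalty 0.1:", int(np.argmin(penalized_loss(errors, 0.1))))
```

In this toy the error drops sharply up to three dimensions and then keeps shrinking by small amounts as extra dimensions soak up noise. The returns diminish without going to zero, and the elbow is only clean because the data were constructed to have one.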

(I also think that an algorithm to "provably learn all learnable abstractions", if practical, is a hop and a skip away from a superintelligent AGI. Much of the work of science is learning the correct abstractions from data, and this algorithm sounds a lot like an uberscientist.)

Anyway, in terms of investigating convergence, I'd encourage you to think about the inductive biases induced by both your loss function and also your learning algorithm. (We already know that learning algorithms can have different inductive biases than humans, e.g. it seems that the input-output surfaces for deep neural nets aren't as biased towards smoothness as human perceptual systems, and this allows for adversarial perturbations.) You might end up proving a theorem which has required preconditions related to the loss function and/or the algorithm's inductive bias.

Another riff on this bit:

Maybe we could differentiate between the 'useful abstraction hypothesis', and the stronger 'unique abstraction hypothesis'. This statement supports the 'useful abstraction hypothesis', but the 'unique abstraction hypothesis' is the one where alignment becomes way easier because we and our AGI are using the same abstractions. (Even though I'm only a believer in the useful abstraction hypothesis, I'm still optimistic because I tend to think we can have our AGI cast a net wide enough to capture enough useful abstractions that ours are in there somewhere, and this number will be manageable enough to find the right abstractions from within that net--or something vaguely like that.) In terms of science, the 'unique abstraction hypothesis' doesn't just say scientific theories can be useful, it also says there is only one 'natural' scientific theory for any given phenomenon, and the existence of competing scientific schools sorta seems to disprove this.

Anyway, the aspect of your project that I'm most optimistic about is this one:

Since I don't believe in the "unique abstraction hypothesis", checking whether a given abstraction corresponds to a human one seems important to me. The problem seems tractable, and a method that's abstract enough to work across a variety of different learning algorithms/architectures (including stuff that might get invented in the future) could be really useful.