As can be inferred from the title, it's been a bit more than a week since I wrote most of this. I've had many more thoughts in that time, but it still seems very much worth sharing.

The LessWrong team is currently in the midst of rethinking/redesigning/upgrading our moderation policies and principles (announcement). In order to get feedback throughout this process rather than just at the end, here's a dump of things currently on my mind. Many of these are hypotheses and open questions, so feedback is very welcome.

This is a bit of a jumble of thoughts, questions, frames, etc, vaguely ordered.

The enforced standard is a function of how many new people are showing up

With the large influx of new users recently, we realized that we have to enforce a higher standard or risk having the site's quality greatly diluted: Latest Posts filled with poorly written posts about trivial points, comment threads full of incoherent or very 101 questions, etc.

The question then is "how much are we raising it?", and I do think there's quite a range. Here's a suggestive scale I didn't put a tonne of thought into; not every reasonable level is included:

- Level 7: The contributions made by the very top researchers

- Level 6: The contributions made by aspiring researchers and the seriously engaged

- Level 5: Contributions that seem novel and interesting.

- Level 4: Contributions not asking 101-level stuff

- Level 3: Contributions that don't seem that wrong or confused

- Level 2: Contributions that are largely well-written-enough and/or earnest

- Level 1: Contributions written by humans

- Level 0: Spam

To date, I'd say the LessWrong moderators have approved content that's Level 2 or above, even if we thought it was meh or even bad ("approve and downvote" was a common move). Fearing that this would be inadequate given a large influx of low-quality users, we thought the LessWrong mod team should outright reject a lot more content. But if so, where are we raising the bar to?

I've felt that any of Levels 3-5 are plausible, with different tradeoffs. What I'm realizing is that the standard that makes sense to enforce depends on how many new people you're getting.

Moderation: a game of tradeoffs

Let's pretend moderation is just a binary classification task where the moderator has to make "accept" or "reject" calls on posts/comments/users. For a fixed level of discernment (you can get better over time, but at any given decision, you're only so good), you can alter your threshold for acceptance/rejection, and thereby trade false positives (bad content accepted) against false negatives (good content rejected). See ROC/AUC for binary classifiers.

Moderation is like that. I don't want to accidentally reject good stuff, but I also don't want to accept bad stuff that'll erode site quality.
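To make the threshold framing concrete, here is a toy sketch. It assumes (unrealistically) that a moderator's judgment can be summarized as one quality score per item; the scores, the `ITEMS` list, and the `confusion_at` helper are made up for illustration. Raising the acceptance threshold lets fewer bad items through but rejects more good ones.

```python
from typing import List, Tuple

# Made-up example data: (moderator's quality score, whether the item was actually good).
ITEMS: List[Tuple[float, bool]] = [
    (0.95, True), (0.85, True), (0.80, False), (0.70, True),
    (0.60, False), (0.55, True), (0.40, False), (0.30, False),
    (0.20, True), (0.10, False),
]


def confusion_at(threshold: float, items: List[Tuple[float, bool]]) -> Tuple[int, int]:
    """Accept items scoring >= threshold; return (false_positives, false_negatives).

    False positive: a bad item accepted. False negative: a good item rejected.
    """
    fp = sum(1 for score, good in items if score >= threshold and not good)
    fn = sum(1 for score, good in items if score < threshold and good)
    return fp, fn


for t in (0.25, 0.5, 0.75):
    fp, fn = confusion_at(t, ITEMS)
    print(f"threshold={t:.2f}  bad accepted={fp}  good rejected={fn}")
```

With these invented numbers, moving from a lenient to a strict threshold takes you from 4 bad items accepted and 1 good item rejected to 1 bad accepted and 3 good rejected. No threshold gets both to zero, which is the point: you only choose which mistakes to make more of.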

My judgment is only so good, so I am going to make mistakes. For sure. I've made them so far and fortunately others caught them (though I'd guess I've also made some that haven't been caught). That seems unavoidable.

But given I'm going to make mistakes, should I err on the side of too many acceptances or too many rejections?

Depends on the volume

My feeling, as above, is the answer depends on volume. If we don't get that many new users, there's not a risk of them swarming the site and destroying good conversation. I think this has been the situation until recently so it's been okay having a lower standard. In a hypothetical world where we get 10x as many new users as we currently do, we might need to risk having many false negatives to avoid getting too many false positives, because the site couldn't absorb that many bad new users.
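The volume argument can be put as rough arithmetic. Suppose (an assumption for illustration) the site can absorb some fixed number of bad contributions per week without the signal-to-noise ratio suffering. Expected bad acceptances are roughly submissions times the fraction that are bad times the rate at which bad items slip through at a given threshold, so the most permissive workable threshold rises with volume. The rates in `FPR_BY_THRESHOLD`, the budget, and the function name are invented, not anything LessWrong measures.

```python
# Hypothetical: fraction of bad submissions that get accepted at each threshold
# (a lower threshold is more permissive). Invented numbers for illustration.
FPR_BY_THRESHOLD = {0.2: 0.8, 0.4: 0.5, 0.6: 0.25, 0.8: 0.1}


def most_permissive_threshold(weekly_submissions: int, bad_fraction: float,
                              bad_budget: float) -> float:
    """Return the lowest threshold whose expected bad acceptances fit the budget.

    Expected bad items accepted per week =
        weekly_submissions * bad_fraction * FPR(threshold).
    Falls back to the strictest threshold if none fit.
    """
    for threshold in sorted(FPR_BY_THRESHOLD):
        expected_bad = weekly_submissions * bad_fraction * FPR_BY_THRESHOLD[threshold]
        if expected_bad <= bad_budget:
            return threshold
    return max(FPR_BY_THRESHOLD)


# Same site, same absorption budget, 10x the volume of new submissions:
print(most_permissive_threshold(40, 0.5, 25))   # → 0.2
print(most_permissive_threshold(400, 0.5, 25))  # → 0.8
```

At low volume the most lenient threshold fits the budget; at 10x volume only the strictest one does, even though moderator judgment (the false-positive rates) hasn't changed.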

My current thinking[1]

My feeling is that right now we will require new contributions to be somewhere between Level 2.5 and Level 4, while keeping an eye on the volume of new users. If the storm comes (and it might be bursty), we might want to raise the standard higher.

Question: where to filter?

New users generally write lower-quality content than established ones. Some of that content is still valuable, just less valuable than other stuff around, but some of it probably isn't worth anyone's time to read.

A persistent question for me has been how to assess which content should be displayed on the site, and how, and who should do the assessing.

Where and how content gets filtered is one of the major knobs/dials the moderation team has in our toolkit.

Some Options

1. Content goes live on the site, and its visibility is increased/decreased based on karma/voting.
   - Advantages
     - Whether or not your content is visible is not judged by a small group of moderators subject to limited scrutiny and limited knowledge. Its inclusion on the site is more democratically determined.
     - The site moderators don't have to spend as much time assessing content up front: users do the work!
   - Disadvantages
     - Users do the work. If moderators allow pretty crappy stuff to go live, it causes many people to look at it, wasting their time. I'm always sad when there's a pretty awful post with -20 karma, because it means multiple people had to waste time seeing it and voting it down.
     - It's a lot of work to keep bad content from showing up everywhere it would waste people's attention. Even if the algorithm hides crappy content from Latest Posts, it can still show up in Recent Discussion, All Posts, Tag pages, search, etc. Conceivably we could address all of those, but in many ways it's easier just to not have bad content go live.

2. Moderators vet all posts and comments from first-time users, and reject those that don't seem good enough.
   - Advantages
     - Prevents the site's users from having to see or vote on bad content, and keeps the signal-to-noise ratio reliably higher.
   - Disadvantages
     - Moderators have only so-so judgment and will end up rejecting content and users that actually would have been pretty valuable to have.
     - Requiring mod approval introduces a delay between submission and your content going live. If you were trying to participate in a comment thread, the conversation might be over by the time mods approve your first comment.

3. Different sections of the site for different quality levels. Perhaps there's a "here's only the really good stuff" section that's subject to strict standards, and a "way more flexible, much less judgment" space.
   - Advantages
     - Lets more content be on the site at all.
     - Preserves a space with a really high signal-to-noise ratio.
   - Disadvantages
     - Figuring out the relationship between the two (or more) sections is very tricky, and people will likely fall into bad equilibria in how they relate to the sections (as I believe happened with Main and Discussion on old LW).
     - You still have to figure out the filtering onto the more permissive section, and if that section gets too bad, it's possible it takes down the site because no one reads it or writes for it.

My current thinking[1]

I think for now we will be rejecting content that falls below the Level 2.5-4 bar described above (but providing feedback and being transparent), and beyond that, approving and downvoting content we personally don't like but are less confident is bad. This is a mix of options 1 and 2. I'm not excited about separate major site sections right now, though maybe a little bit of that for 101 AI content.

Transparency & Accountability

I think many things go better when one person (or a few people) is in charge and can just make decisions. This can avoid many failure modes of excessive democratization. But things also go better if there's some accountability for those few people, and accountability requires knowing what's actually going on, i.e., transparency.

As the judgment calls get closer to a blurry line, I don't feel great about being the one to decide whether or not a person's post or comment or self belongs on LessWrong. I will make mistakes. But also – tradeoffs – I don't want LessWrong to get massively diluted because I wasn't willing to reject enough people.

A solution for this that I think is very promising: the LessWrong team will make more decisions on our own to reject posts/comments/users than we did before, but we'll make it possible to audit us. Rejected content will be publicly visible in a Rejected Content section of the site, and rejected items will usually include some indication of why we rejected them. I predict some people will be motivated to look over the reject pile and call out places where we screwed up. Or at the very least, people can look and see that we're not just rejecting things because we disagreed with them. What I hope is that people look over the rejected stuff and go "overwhelmingly, it seems good that this stuff is not on LessWrong".

But the transparency and accountability isn't about just that feature, it's a principle I'm generally inclined towards trying to achieve.

Tensions in Transparency

A hard thing about trying to be transparent about our moderation decisions and actions is that this also requires publicly calling out a user or their content. So you get more transparency but also more embarrassment. I don't have any good solution to this.

Ideally, we'd moderate via public comments rather than private messages, but that can make people feel called out in a way that taking them aside quietly doesn't. The other challenge is that moderating in public requires figuring out how to communicate not just to one person but to many, and that takes more time when the team is already short on it.

The Expanding Toolbox

I think one of the coolest things about the LessWrong team (and something core to the LessWrong/Lightcone philosophy) is that we wear all the hats: we are all both moderators and software engineers, which lets us build and iterate on our tools in a way I don't think most forum moderators get to.

So we get to experiment a lot with our moderation stack, if we want.

Rate Limits

Some months ago, we introduced rate limits as a tool. Instead of a time-bound ban (1 month, 3 months, etc.), which is pretty heavy and rarely done[2], when we see users who are pulling down quality or behaving badly, we can simultaneously warn them and slow them down, hopefully encouraging them to put more effort into their contributions.

An informal poll showed that many people liked the idea of being rate limited more than having the visibility of their content reduced.

- Advantages

- Serves as a warning without completely cutting you off

- Gives you an opportunity to demonstrate reform

- Less of a big deal, therefore less scary to apply as a moderator

- Disadvantages

- If applied wrong, stifles valuable contributions.

I do like having a gradation of options, and think this is pretty great to have in addition to bans.

My fears about Rate Limiting

I do fear that done wrong, rate limiting could do a lot of harm and I'm very keen to avoid that as we experiment with it.

I do think some rate limits (the ones we currently have set up?) are 80% of a ban without feeling like one to us moderators. Also, historically, bans came with a public explanation and a set duration, and we're not currently communicating rate limits that well (we can and will fix this; we really do have to).

Separating limits for posts and comments (and for comments on your own post vs. other people's) is a basic next step, as is more granularity for the limits. I wonder if 10 comments/week might be better than 1/day, because you could spend them all on a conversation on a single day.
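One way to see the difference between 1 comment/day and 10 comments/week is a rolling-window limiter. This is a generic sliding-window sketch, not LessWrong's actual implementation; the `RollingRateLimit` class, the `allowed_in_burst` helper, and the limit values are hypothetical. Under a daily limit a user can barely participate in a fast-moving conversation, whereas a weekly budget lets them spend several comments in one burst.

```python
from collections import deque
from datetime import datetime, timedelta
from typing import Deque


class RollingRateLimit:
    """Allow at most `max_actions` within any rolling time `window` (hypothetical sketch)."""

    def __init__(self, max_actions: int, window: timedelta) -> None:
        self.max_actions = max_actions
        self.window = window
        self._timestamps: Deque[datetime] = deque()

    def try_act(self, now: datetime) -> bool:
        """Record an action at `now` if allowed; return whether it was allowed."""
        # Forget actions that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_actions:
            return False
        self._timestamps.append(now)
        return True


def allowed_in_burst(limit: RollingRateLimit, start: datetime,
                     count: int, spacing: timedelta) -> int:
    """Count how many of `count` attempts, `spacing` apart, get through."""
    return sum(limit.try_act(start + i * spacing) for i in range(count))


start = datetime(2023, 4, 1, 12, 0)
per_day = RollingRateLimit(1, timedelta(days=1))
per_week = RollingRateLimit(10, timedelta(weeks=1))
# Five replies ten minutes apart during one lively thread:
print(allowed_in_burst(per_day, start, 5, timedelta(minutes=10)))   # → 1
print(allowed_in_burst(per_week, start, 5, timedelta(minutes=10)))  # → 5
```

Both limits allow a similar average rate over time; they differ in how much burstiness they permit. Separate limits for posts and comments would just mean keeping one such limiter per content type.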

Why focus on moderation now

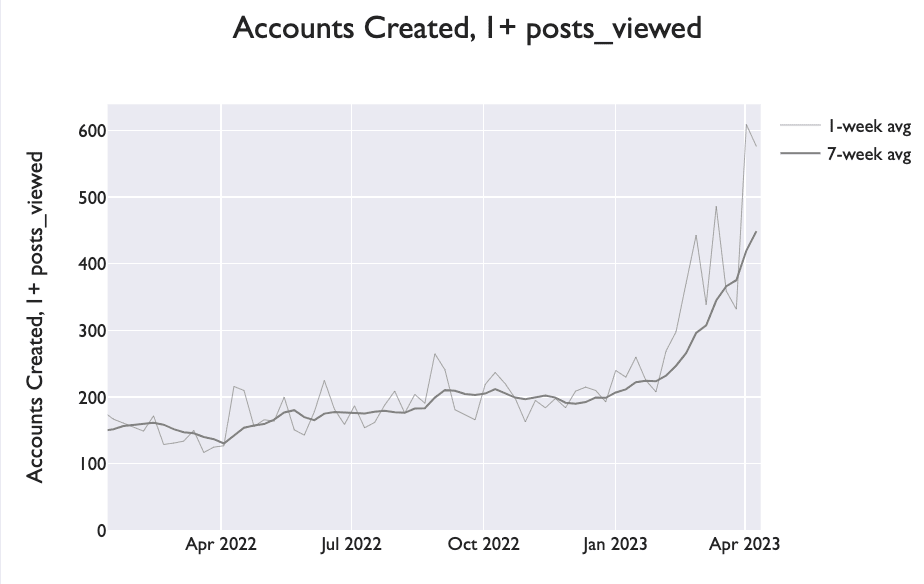

The proximate cause for focusing here is the increase in new users who find their way to the site because the topic of AI is becoming much higher profile. LessWrong both does well in search results and gets linked from many places. Also, some older users are now posting and commenting for the first time, since the topic feels urgent.

Goal: Maintaining the Signal-to-Noise Ratio

Or more accurately, maintaining or improving the shape of the distribution of quality that users experience when they visit the site. If you're a reader visiting the homepage, do you find it easier or harder to find posts you want to read? If you're a poster, do you find the comments more or less valuable to read?

I care a lot that as the site grows, the quality people experience only goes up. The question I want to ask about new users (or even existing users) is: given the current site setup, how do they change the signal-to-noise ratio?

Frame: The Wall & The Spire

When launching the LessWrong team into a big stretch of time focusing on moderation, I said that I want us to build both walls surrounding our site (with gates and chambers and perhaps inner and outer walls that allow a progression inwards) and also a spire to elevate the discourse unto the heavens.

The imagery is supposed to emphasise that although the proximate cause for focusing on moderation has been a dramatic increase in new posters and commenters, I don't want our moderation efforts to be focused just on keeping site quality "as good as it has been to date"; we should aim to raise the quality level too.

This works well because (a) many new moderation tools work for new and established users, (b) clarifying site values and standards is useful for new and established users.

Added: How to relate to 101 content

I've been thinking about this topic but forgot to write it up here. Doing so in response to a comment.

I am unsure what to do with, and how to relate to, 101 users/101 content. Here's a mix of thoughts.

- A big question is ratios. If the community is mostly established users, a flow of new users that matches or exceeds attrition seems healthy, particularly if the new users can be enculturated by the existing users and their content doesn't pull down the signal-to-noise ratio. My fear is that with rapid growth, you get a disproportionate number of new users at the 101 level, more than the site can cope with.

- Alternatively, the site could cope with it if you made that the focus (e.g. onboarding materials, question deduplication, etc), but there's a question of whether that's the right prioritization/role LW should be going for.

- Another concern here is that the current wave of new users is drawn from a worse distribution than previous new users. In particular, when LessWrong was relatively more obscure, you were more likely to hear about it if you had higher "affinity", e.g. you were in adjacent places like SSC/ACX, Marginal Revolution, or mainstream AI/ML research. This meant that people who ended up on LessWrong were more likely to be a good fit than people who are making their way here because AI has gone very mainstream.

- An important open question for me is how to relate to users who I predict are much less likely to ever contribute at the frontier, be it the frontier of rationality knowledge/skill, AI alignment, or any other interesting topic of intellectual progress on LessWrong. If LessWrong is about making intellectual/research progress, do we want to absorb many users who seem unlikely to make those contributions? Currently I do not place a strong filter here, but I think about this.

For a while (particularly when we were experiencing a large burst of new accounts), I was thinking that we should basically reject 101 submissions. By 101, I mean questions and comments that you wouldn't make if you'd read Superintelligence, knew about the orthogonality thesis and the existing discussion of Tool AI, and were familiar with other topics that are generally treated as very basic background knowledge. (As above, I think LessWrong could absorb this and still be suitable for serious progress, but it's not a trivial design problem and there are opportunity costs for the team's time.)

After mulling over a policy of approximately no 101 AI content for a while, I don't feel good about shutting our doors to it completely. I want people coming for that to have some kind of home, and it seems plausibly good for LessWrong to serve as a general beacon of sanity around 101 AI topics, standard questions and objections, etc.

In the near-term, solutions I like include:

- Clearly marked threads for intro AI questions and ideas. When new users attempt to make comments and posts that seem to ignore existing material, we direct them to these threads, and established users who wish to engage can do so without those ideas and questions diluting the rest of the site.

- Perhaps a kind of "101 Friendly" tag for posts that can be optionally applied. I could imagine it being applied to posts like The basic reasons I expect AGI ruin, which would signal that there isn't an expectation of prior familiarity with the basic arguments. We could even tie a user-group permissions category to this tag, so that certain users are restricted to commenting on posts with that tag.

- When it seems like the right time, we build out infrastructure that lets more users and less-good content onto the site without diluting the experience for others.
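The permissions idea in the "101 Friendly" bullet could be as simple as a tag check. A minimal sketch, assuming a hypothetical user group called `101-restricted` and the tag name from the bullet; the `User` and `Post` classes and `can_comment` are stand-ins for whatever the real data model is, not LessWrong's code.

```python
from dataclasses import dataclass
from typing import FrozenSet

# Hypothetical names for illustration only.
RESTRICTED_GROUP = "101-restricted"
FRIENDLY_TAG = "101 Friendly"


@dataclass(frozen=True)
class User:
    name: str
    groups: FrozenSet[str] = frozenset()


@dataclass(frozen=True)
class Post:
    title: str
    tags: FrozenSet[str] = frozenset()


def can_comment(user: User, post: Post) -> bool:
    """Users in the restricted group may only comment on posts tagged 101 Friendly."""
    if RESTRICTED_GROUP in user.groups:
        return FRIENDLY_TAG in post.tags
    return True


newcomer = User("newcomer", frozenset({RESTRICTED_GROUP}))
veteran = User("veteran")
intro = Post("The basic reasons I expect AGI ruin", frozenset({FRIENDLY_TAG}))
research = Post("A hypothetical technical alignment post")
print(can_comment(newcomer, intro))     # → True
print(can_comment(newcomer, research))  # → False
print(can_comment(veteran, research))   # → True
```

Keeping the restriction as a group membership (rather than, say, a karma cutoff) means moderators could move someone out of it with a single change once they've shown familiarity with the basics.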

I'd previously considered redirecting new users to stampy.ai, but at present, its UI doesn't seem adequate. I think people should be able to do the equivalent of making a question post (similar to a basic StackOverflow post), whereas Stampy seems much more to want you to have a sentence-length question and match it to an existing one (taking about three layers before you can propose your own sentence-length question).

Another idea of interest to me is "Argument Wiki". This would be useful for a range of users at a range of knowledge. E.g., I'd be interested in it as a place that compiles all the arguments for and against Sharp Left Turn, but it could also have the answers to more elementary questions such as "wouldn't a really smart AI realize it shouldn't be evil?" or whatever.

I'll add that a lot of the team's current work is trying to get ahead of the problems here, even before they're obviously really bad, because I worry that once the Great Influx is upon us, we'll take a lot of damage if we're not already prepared to handle it.

[1] This means what I'm thinking today and maybe tomorrow. It's suggestive but not definitive of what we'll settle on by the end of the current period of refiguring out moderation stuff.

[2] It feels like a Big Deal to ban a user even temporarily, so I think having only this as a tool has caused us to historically under-moderate.

I'm still interested in Shortform being the place for established users to freely post their rough thoughts. I'd say you're good and shouldn't worry.

The rough thoughts of a person who has absorbed the site culture and knows all the basics have a decent chance of being valuable, such that it's good for people to post them. On the other hand, some people's high-effort stuff is not very interesting, e.g. from new users. I wouldn't want Shortform to become super low-SNR because we put low-quality users there indiscriminately, and by extension have that content all over the site (Recent Discussion, All Posts, etc.), which is what would happen right now unless we changed some things.

[Raemon was thinking for a while of sending users who aren't quite up to par to Shortform, but I've told him I don't endorse that use case currently. We'll have to think about it more, and perhaps change various parts of the site (e.g. what goes in Recent Discussion), before endorsing that.]