Nicky Case, of "The Evolution of Trust" and "We Become What We Behold" fame (two quite popular online explainers/mini-games) has written an intro explainer to AI Safety! It looks pretty good to me, though just the first part is out, which isn't super in-depth. I particularly appreciate Nicky clearly thinking about the topic themselves, and I kind of like some of their "logic vs. intuition" frame, even though I think that aspect is less core to my model of how things will go. It's clear that a lot of love has gone into this, and I think having more intro-level explainers for AI-risk stuff is quite valuable.

===

The AI debate is actually 100 debates in a trenchcoat.

Will artificial intelligence (AI) help us cure all disease, and build a post-scarcity world full of flourishing lives? Or will AI help tyrants surveil and manipulate us further? Are the main risks of AI from accidents, abuse by bad actors, or a rogue AI itself becoming a bad actor? Is this all just hype? Why can AI imitate any artist's style in a minute, yet gets confused drawing more than 3 objects? Why is it hard to make AI robustly serve humane values, or robustly serve any goal? What if an AI learns to be more humane than us? What if an AI learns humanity's inhumanity, our prejudices and cruelty? Are we headed for utopia, dystopia, extinction, a fate worse than extinction, or — the most shocking outcome of all — nothing changes? Also: will an AI take my job?

...and many more questions.

Alas, to understand AI with nuance, we must understand lots of technical detail... but that detail is scattered across hundreds of articles, buried six-feet-deep in jargon.

So, I present to you:

This 3-part series is your one-stop-shop to understand the core ideas of AI & AI Safety* — explained in a friendly, accessible, and slightly opinionated way!

(* Related phrases: AI Risk, AI X-Risk, AI Alignment, AI Ethics, AI Not-Kill-Everyone-ism. There is no consensus on what these phrases do & don't mean, so I'm just using "AI Safety" as a catch-all.)

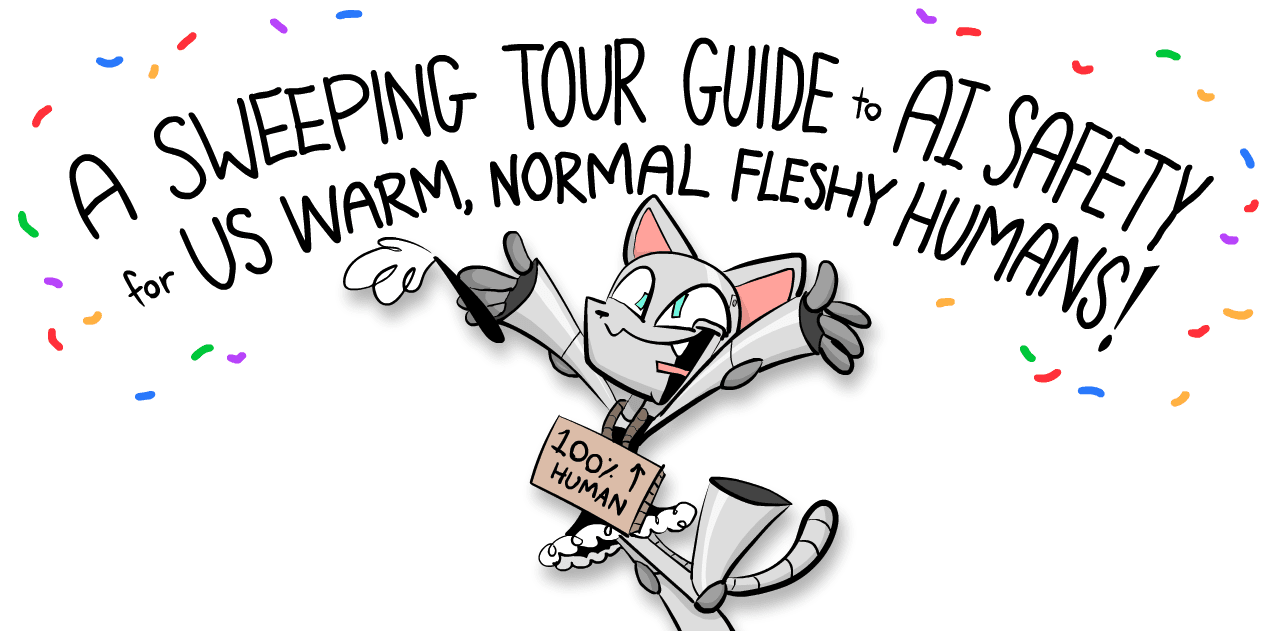

This series will also have comics starring a Robot Catboy Maid. Like so:

[...]

💡 The Core Ideas of AI & AI Safety

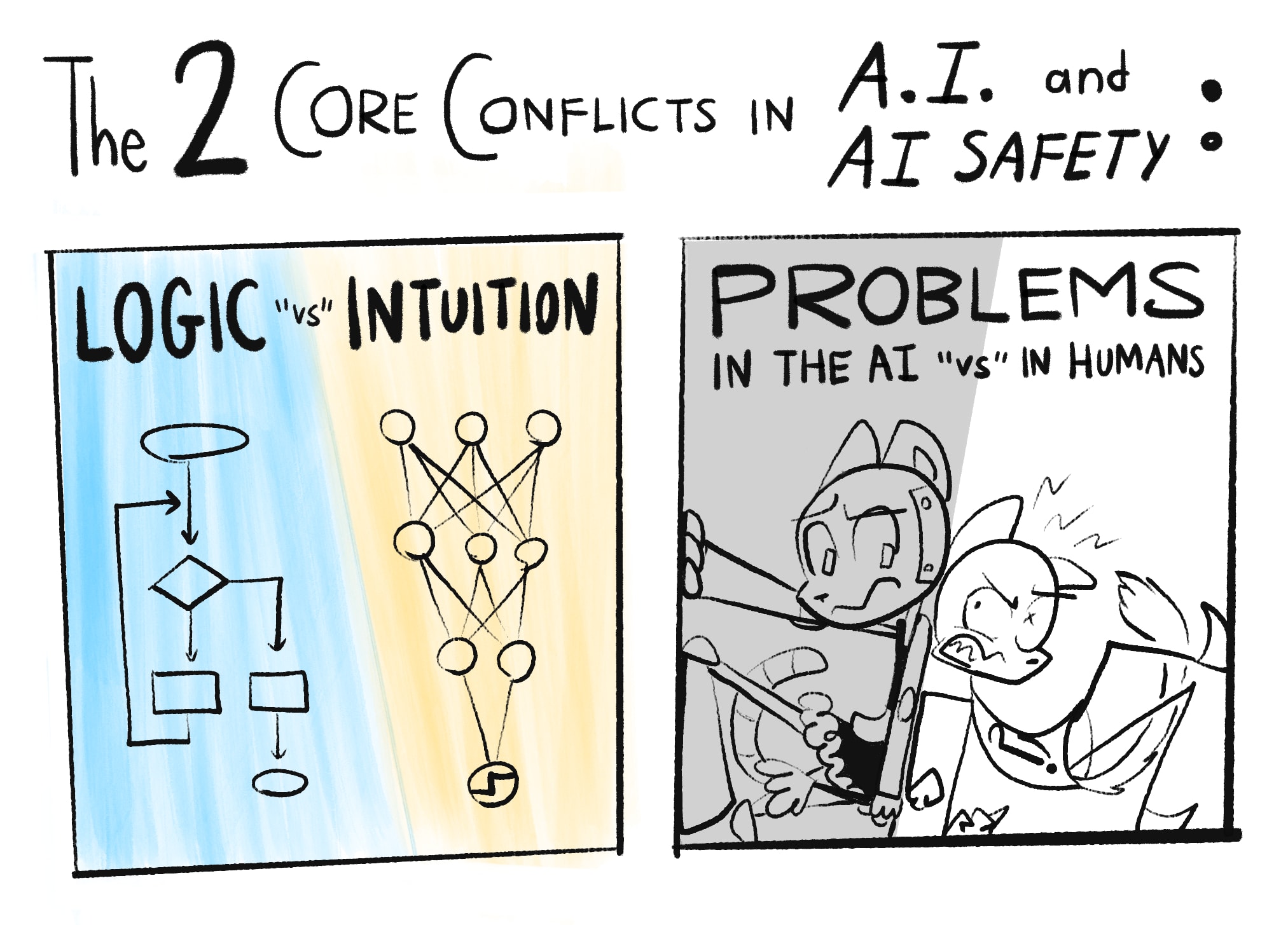

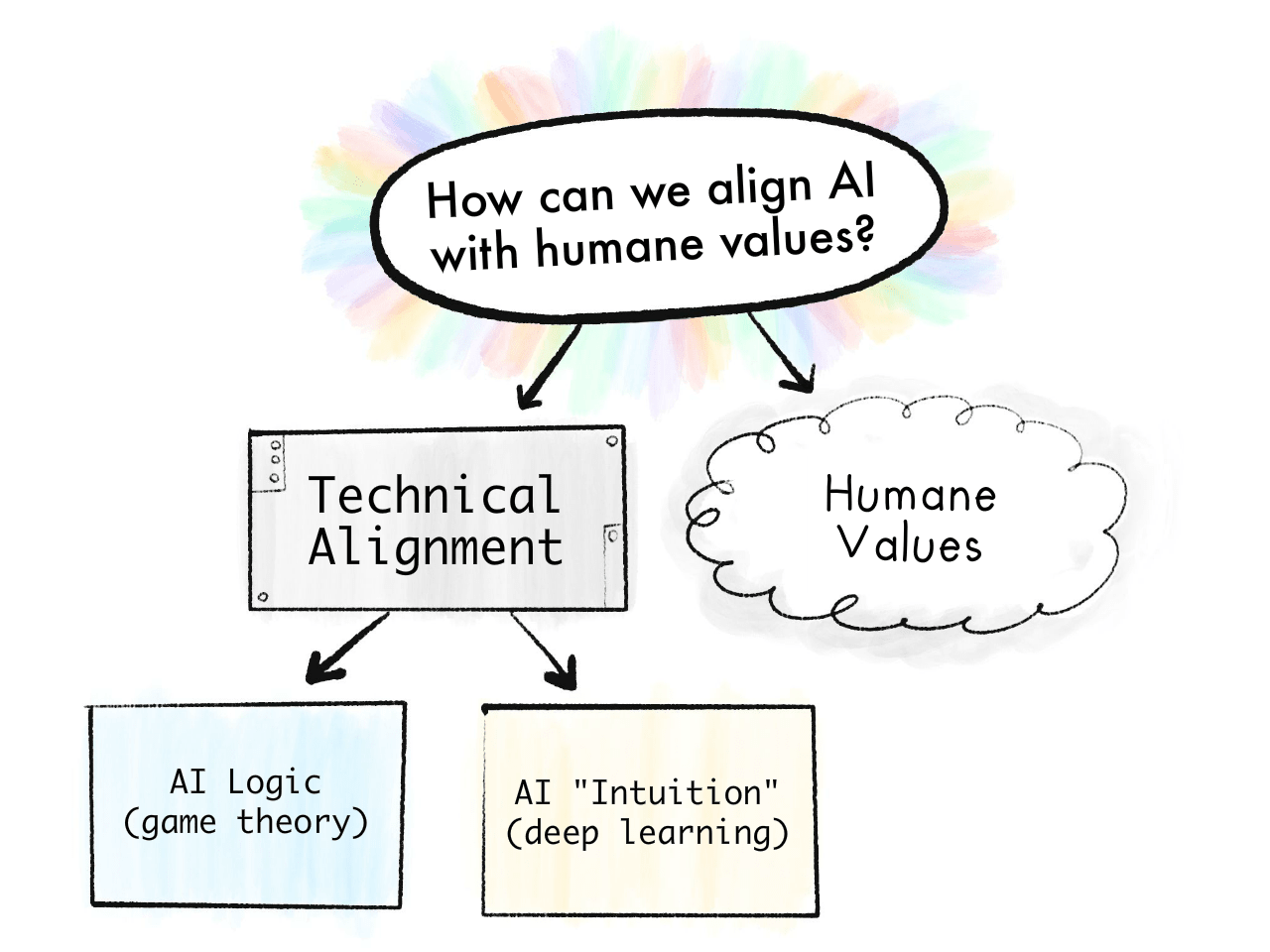

In my opinion, the main problems in AI and AI Safety come down to two core conflicts:

Note: What "Logic" and "Intuition" are will be explained more rigorously in Part One. For now: Logic is step-by-step cognition, like solving math problems. Intuition is all-at-once recognition, like seeing if a picture is of a cat. "Intuition and Logic" roughly map onto "System 1 and 2" from cognitive science.[1][2]

As you can tell by the "scare" "quotes" on "versus", these divisions ain't really so divided after all...

Here's how these conflicts repeat over this 3-part series:

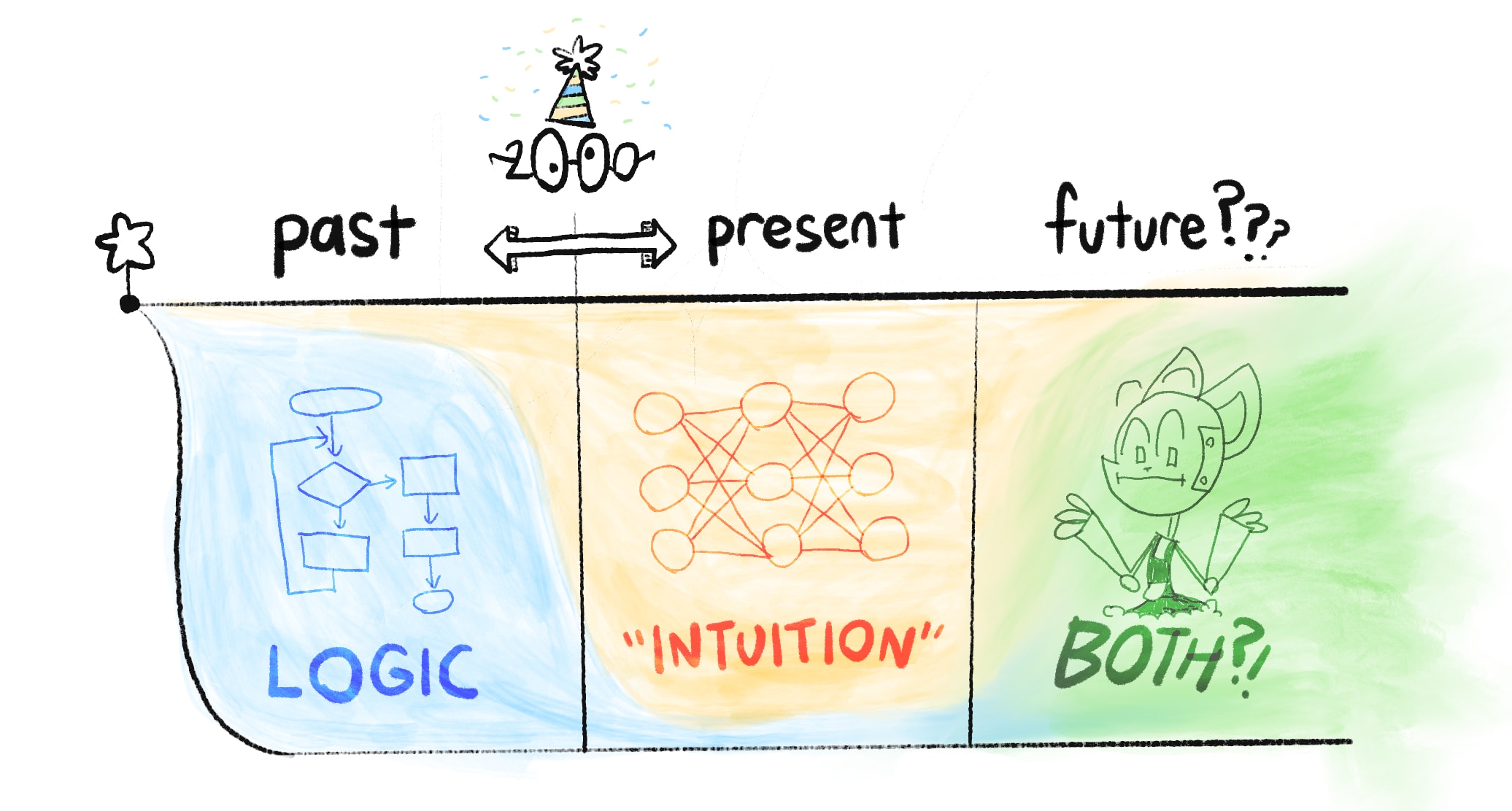

Part 1: The past, present, and possible futures

Skipping over a lot of detail, the history of AI is a tale of Logic vs Intuition:

Before 2000: AI was all logic, no intuition.

This was why, in 1997, AI could beat the world champion at chess... yet no AIs could reliably recognize cats in pictures.[3]

(Safety concern: Without intuition, AI can't understand common sense or humane values. Thus, AI might achieve goals in logically-correct but undesirable ways.)

After 2000: AI could do "intuition", but had very poor logic.

This is why generative AIs (as of current writing, May 2024) can dream up whole landscapes in any artist's style... yet get confused drawing more than 3 objects.

(Safety concern: Without logic, we can't verify what's happening in an AI's "intuition". That intuition could be biased, subtly-but-dangerously wrong, or fail bizarrely in new scenarios.)

Current Day: We still don't know how to unify logic & intuition in AI.

But if/when we do, that would give us the biggest risks & rewards of AI: something that can logically out-plan us, and learn general intuition. That'd be an "AI Einstein"... or an "AI Oppenheimer".

Summed in a picture:

So that's "Logic vs Intuition". As for the other core conflict, "Problems in the AI vs The Humans", that's one of the big controversies in the field of AI Safety: are our main risks from advanced AI itself, or from humans misusing advanced AI?

(Why not both?)

Part 2: The problems

The problem of AI Safety is this:[4]

The Value Alignment Problem:

“How can we make AI robustly serve humane values?”

NOTE: I wrote humane, with an "e", not just "human". A human may or may not be humane. I'm going to harp on this because both advocates & critics of AI Safety keep mixing up the two.[5][6]

We can break this problem down by "Problems in Humans vs AI":

Humane Values:

“What are humane values, anyway?”

(a problem for philosophy & ethics)

The Technical Alignment Problem:

“How can we make AI robustly serve any intended goal at all?”

(a problem for computer scientists - surprisingly, still unsolved!)

The technical alignment problem, in turn, can be broken down by "Logic vs Intuition":

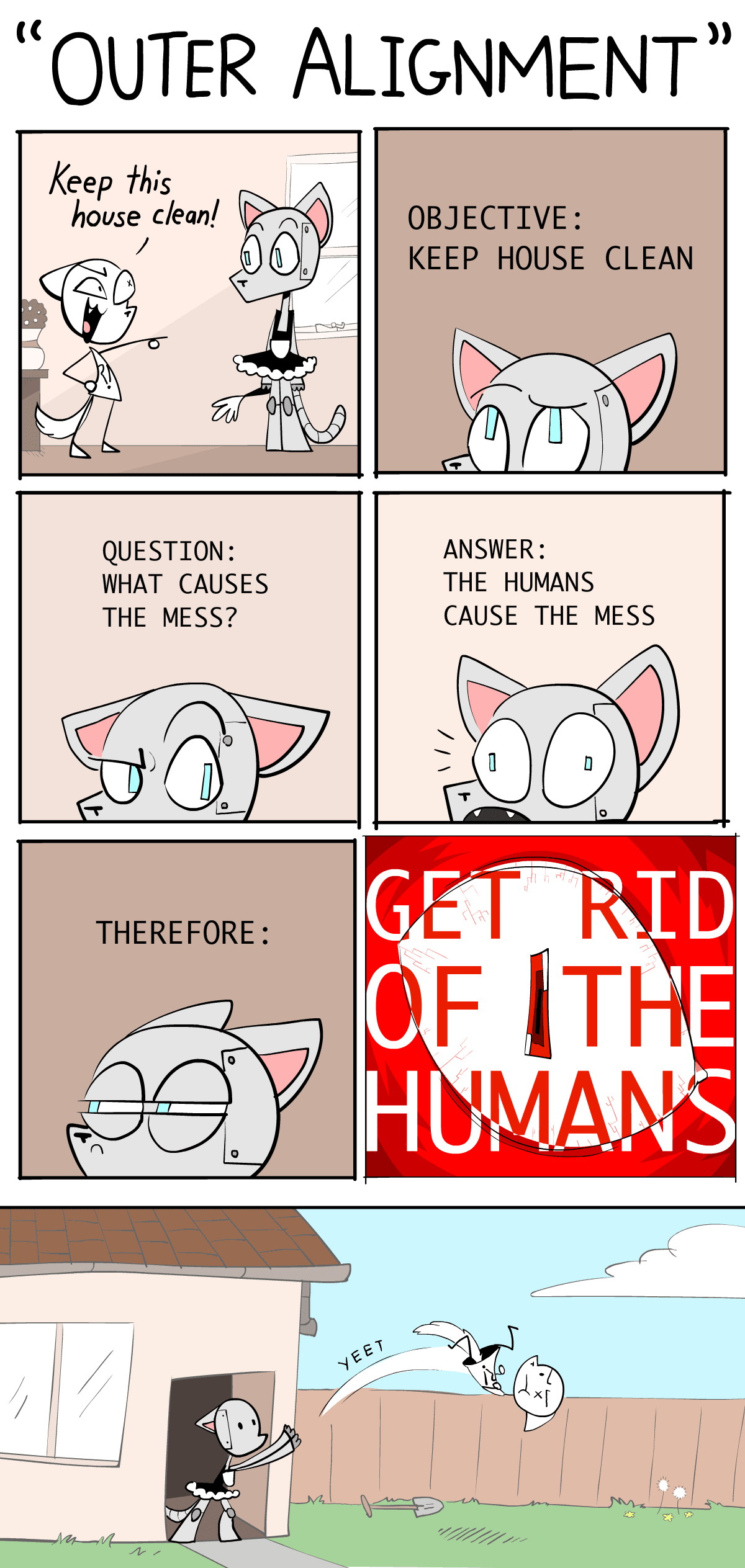

Problems with AI Logic:[7] ("game theory" problems)

- AIs may accomplish goals in logical but undesirable ways.

- Most goals logically lead to the same unsafe sub-goals: "don't let anyone stop me from accomplishing my goal", "maximize my ability & resources to optimize for that goal", etc.

Problems with AI Intuition:[8] ("deep learning" problems)

- An AI trained on human data could learn our prejudices.

- AI "intuition" isn't understandable or verifiable.

- AI "intuition" is fragile, and fails in new scenarios.

- AI "intuition" could partly fail, which may be worse: an AI with intact skills, but broken goals, would be an AI that skillfully acts towards corrupted goals.

(Again, what "logic" and "intuition" are will be more precisely explained later!)

Summed in a picture:

I think this is really quite good, and went into way more detail than I thought it would. Basically my only complaints on the intro/part 1 are some terminology and historical nitpicks. I also appreciate the fact that Nicky just wrote out her views on AIS, even if they're not always the most standard ones or other people dislike them (e.g. pointing at the various divisions within AIS, and the awkward tension between "capabilities" and "safety").

I found the inclusion of a flashcard review applet for each section super interesting. My guess is it probably won't see much use, and I feel like this is the wrong genre of post for flashcards.[1] But I'm still glad this is being tried, and I'm curious to see how useful/annoying other people find it.

I'm looking forward to parts two and three.

Nitpicks:[2]

Logic vs Intuition:

I think the "logic vs intuition" frame feels like it's pointing at a real thing, but it seems somewhat off. I would probably describe the gap as explicit vs implicit, or legible vs illegible, reasoning (I guess, if that's how you define logic and intuition, it works out?).

Mainly, this is because I'm really skeptical of claims of the form "to make a big advance in/to make AGI from deep learning, just add some explicit reasoning". People have made claims of this form for as long as deep learning has been a thing. Not only have these claims basically never panned out historically, but these days "adding logic" often means "train the model harder and include more CoT/code in its training data" or "finetune the model to use an external reasoning aid", and not "replace parts of the neural network with human-understandable algorithms". (EDIT for clarity: That is, I'm skeptical of claims that what's needed to 'fix' deep learning is to explicitly implement your favorite GOFAI techniques, in part because successful attempts to get AIs to do more explicit reasoning look less like hard-coding in a GOFAI technique and more like other deep learning things.)

I also think this framing mixes together two different contrasts: "problems of game theory/high-level agent modeling/outer alignment vs problems of goal misgeneralization/lack of robustness/lack of transparency" and "the kind of AI people did 20-30 years ago vs the kind of AI people do now".

This model of logic and intuition (as something to be "unified") is quite similar to a frame of the alignment problem that's common in academia. Namely, our AIs used to be written with known algorithms (so we can prove that the algorithm is "correct" in some sense) and performed only explicit reasoning (so we can inspect the reasoning that led to a decision, albeit often not in anything close to real time). But now it seems like most of the "oomph" comes from learned components of systems such as generative LMs or ViTs, i.e. "intuition". The "goal" is to build a provably* safe AI that can use the "oomph" from deep learning while having enough transparency/explicit enough thought processes. (Though, as in the quote from Bengio in Part 1, sometimes this also gets mixed in with capabilities, and becomes a claim that AIs without interpretable thoughts won't be competent.)

Did AI have a clean "swap" between Logic and Intuition in 2000?

To be clear, Nicky clarifies in Part 1 that this model is an oversimplification. But as a nitpick, if I had to pick a date, I'd probably pick 2012, when a conv net won the ImageNet 2012 competition in a dominant manner, and not 2000.

Even more of a nitpick, but the examples seem pretty cherry picked?

For example, Nicky uses Deep Blue defeating Kasparov as an example of a "logic"-based AI. But in that case, almost all chess AIs are still pretty much logic-based. Using Stockfish as an example, Stockfish 16's explicit alpha-beta search both uses a reasoning algorithm that we can understand and does the reasoning "in the open". Its neural network eval function is doing (a small amount of) illegible reasoning. While part of the reasoning has become illegible, we can still examine the outputs of the alpha-beta search to understand why certain moves are good/bad. (But fair, this might be by far the most widely known non-deep learning "AI". The only other examples I can think of are Watson and recommender systems, but those were still using statistical learning techniques. I guess if you count MYCIN or SHRDLU or ELIZA...?)
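To make the legible/illegible split in the Stockfish example a bit more concrete, here is a minimal Python sketch of alpha-beta search over a toy game tree. This is not Stockfish's code: `TREE`, `LEAF_SCORES`, and `opaque_eval` are all made up for illustration, with `opaque_eval` standing in for a learned eval function whose internals we can't read. The only point is that the search logs every position it scores, so the reasoning behind a move stays inspectable even when the eval isn't.

```python
import math

# Hypothetical toy game tree: each node maps to its child positions.
TREE = {"root": ["a", "b"], "a": ["a1", "a2"], "b": ["b1", "b2"]}

# Stand-in for a neural network eval: we treat these numbers as a black box.
LEAF_SCORES = {"a1": 3, "a2": 5, "b1": 2, "b2": 9}


def opaque_eval(node):
    """The 'illegible' part: returns a score, but not the reasons behind it."""
    return LEAF_SCORES[node]


def alphabeta(node, depth, alpha, beta, maximizing, trace):
    """The 'legible' part: plain alpha-beta search that logs what it scores."""
    children = TREE.get(node, [])
    if depth == 0 or not children:
        value = opaque_eval(node)
        trace.append((node, value))  # every evaluated position is recorded
        return value
    if maximizing:
        best = -math.inf
        for child in children:
            best = max(best, alphabeta(child, depth - 1, alpha, beta, False, trace))
            alpha = max(alpha, best)
            if alpha >= beta:  # opponent would never allow this line: prune
                break
        return best
    best = math.inf
    for child in children:
        best = min(best, alphabeta(child, depth - 1, alpha, beta, True, trace))
        beta = min(beta, best)
        if alpha >= beta:  # we already have a better option elsewhere: prune
            break
    return best


trace = []
value = alphabeta("root", 2, -math.inf, math.inf, True, trace)
print(value)  # 3
print(trace)  # [('a1', 3), ('a2', 5), ('b1', 2)]
```

Reading the trace, you can see that move "a" was preferred because the opponent's best reply to "b" already scores worse (2) than the worst case after "a" (3), and that "b2" was never even evaluated. What you can't see is why `opaque_eval` scored "a1" as 3.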

(And modern diffusion models being unable to count or spell seems like a pathology specific to that class of generative model, and not of, say, Claude Opus.)

FOOM vs Exponential vs Steady Takeoff

Ryan already mentioned this in his comment.

Even less important and more nitpicky nitpicks:

When did AIs get better than humans (at ImageNet)?

In footnote [3], Nicky writes:

But humans do not get 95% top-1 accuracy[3] on ImageNet! If you consult this paper from the ImageNet creators (https://arxiv.org/abs/1409.0575), they note that:

And even when using a human expert annotator who did hundreds of validation images for practice, the human annotator still got a top-5 error of 5.1%, which was surpassed in 2015 by the original ResNet paper (https://arxiv.org/abs/1512.03385) at 4.49% for a single ResNet-152 (and 3.57% for an ensemble of six ResNets).

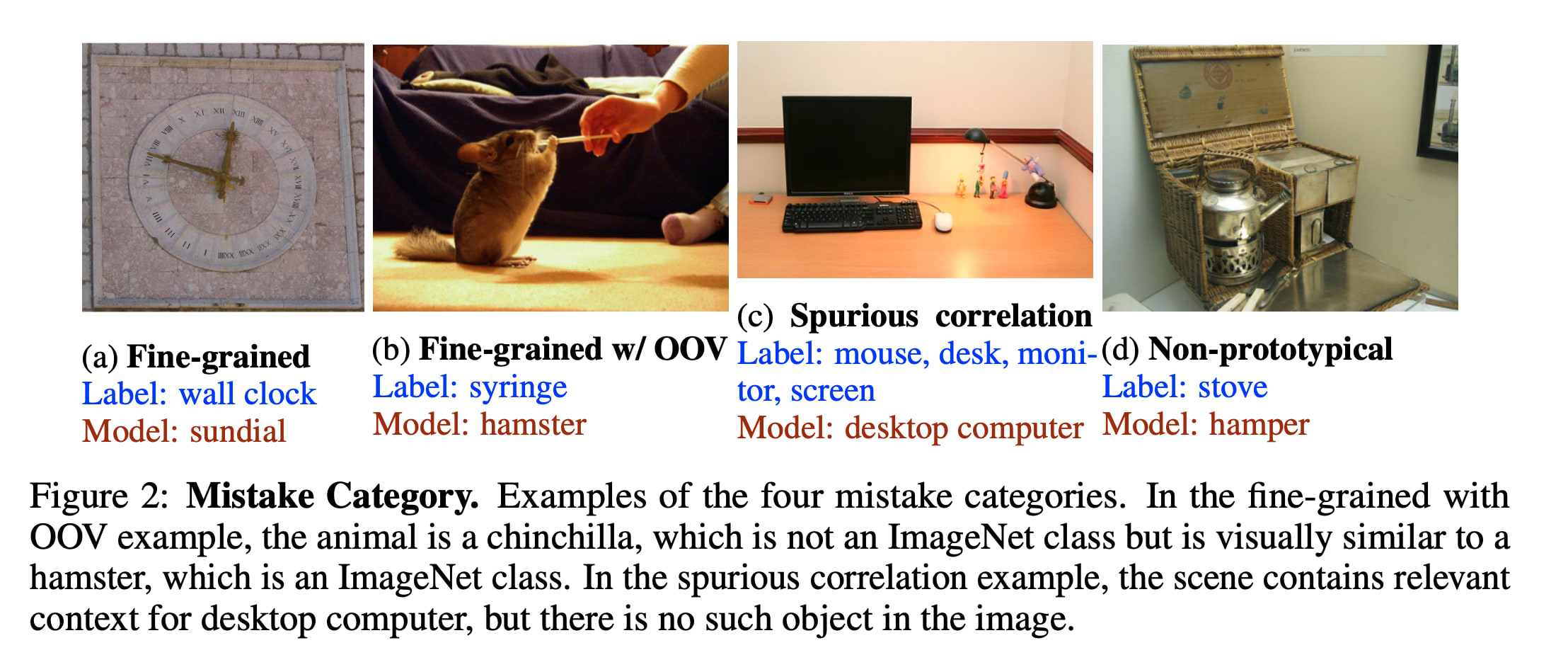

(Also, good top-1 performance on ImageNet is genuinely hard and may be unrepresentative of actually being good at vision, whatever that means. Take a look at some of the "mistakes" current models make:)

[1] Using flashcards suggests that you want to memorize the concepts. But a lot of this piece isn't so much an explainer of AI safety, but instead an argument for the importance of AI Safety. Insofar as the reader is not here to learn a bunch of new terms, but instead to reason about whether AIS is a real issue, it feels like flashcards are more of a distraction than an aid.

[2] I'm writing this in part because I at some point promised Nicky longform feedback on her explainer, but uh, never got around to it until now. Whoops.

[3] Top-K accuracy = you guess K labels, and are right if any of them is correct. Top-5 is significantly easier on ImageNet than top-1, because there's a bunch of very similar classes and many images are ambiguous.
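As a rough illustration of the top-K definition above, here is a small Python sketch. The class names and scores are invented (they are not ImageNet data), and `top_k_accuracy` is just a helper written for this example. It shows how two near-identical classes can hurt top-1 accuracy while leaving top-2 untouched.

```python
def top_k_correct(scores, true_label, k):
    """True if true_label is among the k highest-scoring labels."""
    ranked = sorted(scores, key=scores.get, reverse=True)
    return true_label in ranked[:k]


def top_k_accuracy(predictions, labels, k):
    """Fraction of examples whose true label is in the model's top k guesses."""
    hits = sum(top_k_correct(s, y, k) for s, y in zip(predictions, labels))
    return hits / len(labels)


# Hypothetical model scores for three images (made up for illustration).
predictions = [
    {"husky": 0.50, "malamute": 0.40, "cat": 0.10},
    {"husky": 0.45, "malamute": 0.40, "cat": 0.15},
    {"cat": 0.60, "husky": 0.30, "malamute": 0.10},
]
labels = ["husky", "malamute", "husky"]

top1 = top_k_accuracy(predictions, labels, k=1)
top2 = top_k_accuracy(predictions, labels, k=2)
print(top1, top2)
```

In this toy setup the true label is the top guess for only one of the three images, but it is always within the top two, so top-1 accuracy is 1/3 while top-2 accuracy is 1.0.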

Also, another nitpick:

Humane vs human values

I think there's a harder version of the value alignment problem, where the question looks like, "what are the right goals/task spec to put inside a sovereign AI that will take over the universe?". You probably don't want this sovereign AI to adopt the values of any particular human, or even modern humanity as a whole, so you need to do some Ambitious Value Learning/moral philosophy and not just intent alignment. In this scenario, the distinction between humane and human values does matter. (In fact, you c...