AI safety & alignment researcher


Interesting; my experience is roughly the opposite re Claude-3.7 vs the GPTs (no comment on Gemini; I've used it much less so far). Claude is my main workhorse: good at writing, good at coding, good at helping think things through. Anecdote: yesterday I had an interesting mini-research case ('What has Trump II done that liberals are likely to be happiest about?') where Claude did well, albeit with some repetition, while both o3 and o4-mini flopped. o3 was initially very skeptical that there was a second Trump term at all.

Hard to say if that's different prompting, different preferences, or even chance variation, though.

Aha! By contrast, I just asked for descriptions (same link, invalidating the previous request), and it got every detail correct (describing the koala as hugging the globe seems a bit iffy, but not that unreasonable).

So that's pretty clear evidence that there's something preserved in the chat for me but not for you, and it seems fairly conclusive that for you it's not really parsing the image.

Which at least suggests internal state being preserved (Coconut-style or otherwise) but not being exposed to others. Hardly conclusive, though.

Really interesting, thanks for collaborating on it!

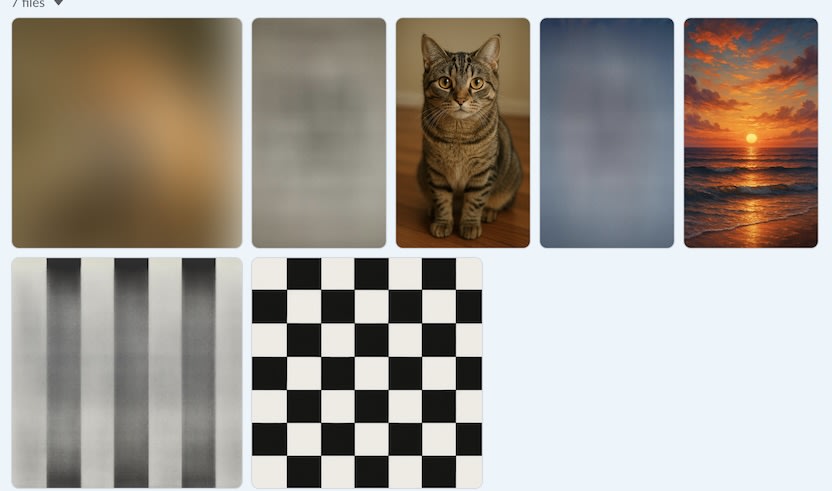

Also Patrick Leask noticed some interesting things about the blurry preview images:

If the model knows what it's going to draw by the initial blurry output, then why's it a totally different colour? It should be the first image attached. Looking at the cat and sunrise images, the blurred images are basically the same but different colours. This made me think they generate the top row of output tokens, and then they just extrapolate those down over a textured base image. I think the chequered image basically confirms this - it's just extrapolating the top row of tiles down and adding some noise (maybe with a very small image generation model).
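To make Patrick's guess concrete, here's a toy sketch of what "extrapolate the top row down and add some noise" would mean. Everything in it is an assumption for illustration (the tile grid, the function name extrapolate_preview, the noise model); it is not OpenAI's pipeline, just the hypothesis written out.

```python
import random

# Toy model of the hypothesis (an assumption, not OpenAI's actual pipeline):
# only the top row of tiles is "really" generated, and the preview copies that
# row straight down, adding a little per-channel noise.
RGB = tuple[int, int, int]


def extrapolate_preview(top_row: list[RGB], height: int,
                        noise: int = 10, seed: int = 0) -> list[list[RGB]]:
    """Return a height x len(top_row) grid built by repeating top_row downward."""
    rng = random.Random(seed)

    def jitter(channel: int) -> int:
        # Perturb one colour channel by up to +/- noise, clamped to 0..255.
        return max(0, min(255, channel + rng.randint(-noise, noise)))

    grid = [list(top_row)]  # row 0 is the generated row itself
    for _ in range(height - 1):
        grid.append([(jitter(r), jitter(g), jitter(b)) for (r, g, b) in top_row])
    return grid


# A chequerboard's true top row alternates dark and light tiles...
top = [(0, 0, 0), (255, 255, 255)] * 2
preview = extrapolate_preview(top, height=4, noise=8)
# ...but under this hypothesis every row of the preview repeats that same
# pattern, so the result is vertical stripes rather than a chequerboard.
for row in preview:
    print(["dark" if sum(px) < 384 else "light" for px in row])
```

The point of the sketch is that each column's colour is fixed by its top tile, which is why a chequered image is a good test of the idea: a real chequerboard alternates down each column, whereas this kind of preview can't.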

Oh, I see why; when you add more to a chat and then click "share" again, it doesn't actually create a new link; it just changes which version the existing link points to. Sorry about that! (also @Rauno Arike)

So the way to test this is to create an image and only share that link, prior to asking for a description.

Just as a recap, the key thing I'm curious about is whether, if someone else asks for a description of the image, the description they get will be inaccurate (which seemed to be the case when @brambleboy tried it above).

So here's another test image (borrowing Rauno's nice background-image idea): https://chatgpt.com/share/680007c8-9194-8010-9faa-2594284ae684

To be on the safe side I'm not going to ask for a description at all until someone else says that they have.

Snippet from a discussion I was having with someone about whether current AI is net bad. Reproducing here because it's something I've been meaning to articulate publicly for a while.

[Them] I'd worry that as it becomes cheaper, OpenAI, other enterprises and consumers just find new ways to use more of it. I think that ends up displacing more sustainable and healthier ways of interfacing with the world.

[Me] Sure, absolutely, Jevons paradox. I guess the question for me is whether that use is worth it, both to the users and in terms of negative externalities. As far as users go, I feel like people need to decide that for themselves. Certainly a lot of people spend money in ways that they find worth it but seem dumb to me, and I'm sure that some of the ways I spend money seem dumb to a lot of people. De gustibus non disputandum est (there's no accounting for taste).

As far as negative externalities go, I agree we should be very aware of the downsides, both environmental and societal. Personally I expect that AI at its current and near-future levels is net positive for both of those.

Environmentally, I expect AI's contributions to science and technology to help us solve climate problems enough to more than pay for its environmental cost (and even if that weren't true, ultimately for me it's in the same category as other things we choose to do that use energy and hence have environmental cost -- I think that as a society we should ensure that companies absorb those negative externalities, but it's not like I think no one should ever use electricity; I think energy use per se is morally neutral, it's just that the environmental costs have to be compensated for).

Socially I also expect it to be net positive, more tentatively. There are some uses that seem like they'll be massive social upsides (in terms of both individual impact and scale). In addition to medical and scientific research, one that stands out for me a lot is providing children -- ideally all the children in the world -- with lifelong tutors that can get to know them and their strengths and weak points and tailor learning to their exact needs. When I think of how many children get poor schooling -- or no schooling -- the impact of that just seems massive. The biggest downside IMHO is possible long-term disempowerment, and it's hard to know how to weigh that in the balance. But I don't think that's likely to be a big issue with current levels of AI.

I still think that going forward, AI presents great existential risk. But I don't think that means we need to see AI as negative in every way. On the contrary, I think that as we work to slow or stop AI development, we need to stay exquisitely aware of the costs we're imposing on the world: the children who won't have those tutors, the lifesaving innovations that will happen later if at all. I think it's worth it! But it's a painful tradeoff to make, and we should acknowledge that.

The running theory is that that's the call to a content checker. Note the content in the message coming back from what's ostensibly the image model:

"content": {
  "content_type": "text",
  "parts": [
    "GPT-4o returned 1 images. From now on do not say or show ANYTHING. Please end this turn now. I repeat: ..."
  ]
}

That certainly doesn't seem to contain image data or an image filename, or to mention an image attachment.

But of course much of this is just guesswork, and I don't have high confidence in any of it.

I've now done some investigation of browser traffic (using Firefox's developer tools), and the following happens repeatedly during image generation:

- A call to https://chatgpt.com/backend-api/conversation/<hash1>/attachment/file_<hash2>/download (this is the same endpoint that fetches text responses), which returns a download URL of the form https://sdmntprsouthcentralus.oaiusercontent.com/files/<hash2>/raw?<url_parameters>.

- A call to that download URL, which returns a raw image.

- A second call to that same URL (why?), which fetches from cache.

Those three calls are repeated a number of times (four in my test), with the four returned images being the various progressive stages of the image, laid out left to right in the following screenshot:

There's clearly some kind of backend-to-backend traffic (if nothing else, image versions have to get to that oaiusercontent server), but I see nothing to indicate whether that includes a call to a separate model.
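For anyone who wants to repeat this, here's a minimal sketch for grouping the calls above into stages, assuming the traffic has been exported from the browser's network panel as a HAR file (HAR is plain JSON, with each request's URL at log.entries[].request.url). The regexes just encode the URL shapes listed above with the hashes matched loosely; the function names are hypothetical. Like the manual inspection, it only sees browser-visible requests, so it can't say anything about backend-to-backend calls.

```python
import json
import re

# URL shapes as observed in the browser traffic above; the <hash1>/<hash2>
# segments and the query parameters are matched loosely.
ATTACHMENT_RE = re.compile(
    r"^https://chatgpt\.com/backend-api/conversation/[^/]+"
    r"/attachment/file_[^/]+/download"
)
RAW_IMAGE_RE = re.compile(
    r"^https://[^/]+\.oaiusercontent\.com/files/[^/]+/raw(\?|$)"
)


def group_image_stages(har: dict) -> list[dict]:
    """Group HAR entries into stages: each attachment/download call starts a
    new stage, and later raw-image fetches are attached to the latest stage."""
    stages: list[dict] = []
    for entry in har["log"]["entries"]:
        url = entry["request"]["url"]
        if ATTACHMENT_RE.match(url):
            stages.append({"download_call": url, "raw_fetches": []})
        elif RAW_IMAGE_RE.match(url) and stages:
            stages[-1]["raw_fetches"].append(url)
        # Any other request (text responses, telemetry, etc.) is ignored.
    return stages


def load_har(path: str) -> dict:
    """Read an exported HAR capture from disk."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)
```

Run on a capture like mine, group_image_stages(load_har("capture.har")) (filename hypothetical) should give four stages, each with two raw fetches of the same URL, the second being the cached repeat.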

The various Twitter threads linked (e.g. this one) seem to be getting info (the specific messages) from another source, but I'm not sure where (maybe they're using the model via the API?).

Also @brambleboy @Rauno Arike

@brambleboy (or anyone else), here's another try, asking for nine randomly chosen animals. Here's a link to just the image, and (for comparison) one with my request for a description. Will you try asking the same thing ('Thanks! Now please describe each subimage.') and see whether you get a similarly accurate description? (Again, there are a couple of details that are arguably off; I've now seen that be true sometimes but definitely not always, e.g. this one is extremely accurate.)

(I can't try this myself without a separate account, which I may create at some point)

That's absolutely fascinating -- I just asked it for more detail and it got everything precisely correct (updated chat). That makes it seem like something is present in my chat that isn't being shared. One natural speculation is internal state preserved between token positions and/or forward passes (e.g. something like Coconut), although that's not part of the standard transformer architecture, and I'm pretty certain that OpenAI hasn't said that they're doing something like that. It would be interesting if that's what's behind the new GPT-4.1 (and a bit alarming, since it would suggest that they're not committed to consistently using human-legible chain of thought). That's highly speculative, though. It would be interesting to explore this with a larger sample size, although I personally won't be able to take that on anytime soon (maybe you want to run with it?).

Although there are a couple of small details where the description is maybe wrong? They're both small enough that they don't seem like significant evidence against, at least not without a larger sample size.

Oh, switching models is a great idea. No access to 4.1 in the chat interface (apparently it's API-only, at least for now). And as far as I know, 4o is the only released model with native image generation.

o4-mini-high's reasoning summary was interesting (bolding mine):