Epistemic status: Exploratory

My overall chance of existential catastrophe from AI is ~50%.

My split of the worlds in which we succeed is something like:

1. 10%: Technical alignment ends up not being that hard, i.e. if you make common-sense safety efforts you end up fine.

2. 20%: We solve alignment mostly through hard technical work, without that much aid from governance/coordination/etc., and likely with a lot of aid from weaker AIs in aligning stronger AIs.

3. 20%: We solve alignment through lots of hard technical work, but very strongly aided by governance/coordination/etc. that slows things down and allows lots of time with systems that are useful to study and apply to alignment, but not scary enough to cause an existential catastrophe.

Timelines probably don’t matter that much for (1); maybe shorter timelines hurt a little. Longer timelines probably help to some extent for (2) by buying time for technical work, though I’m not sure how much, since under certain assumptions longer timelines might mean less time with strong systems. One reason I’d think they matter for (2) is that they buy more time for AI safety field-building, but it’s unclear to me how this will play out exactly. I’m unsure about the sign of extending timelines for the promise of (3), given that we could end up in a more hostile regime for coordination if the actors leading the race aren’t at all concerned about alignment. I guess I think it’s slightly positive, given that it’s probably associated with more warning shots.

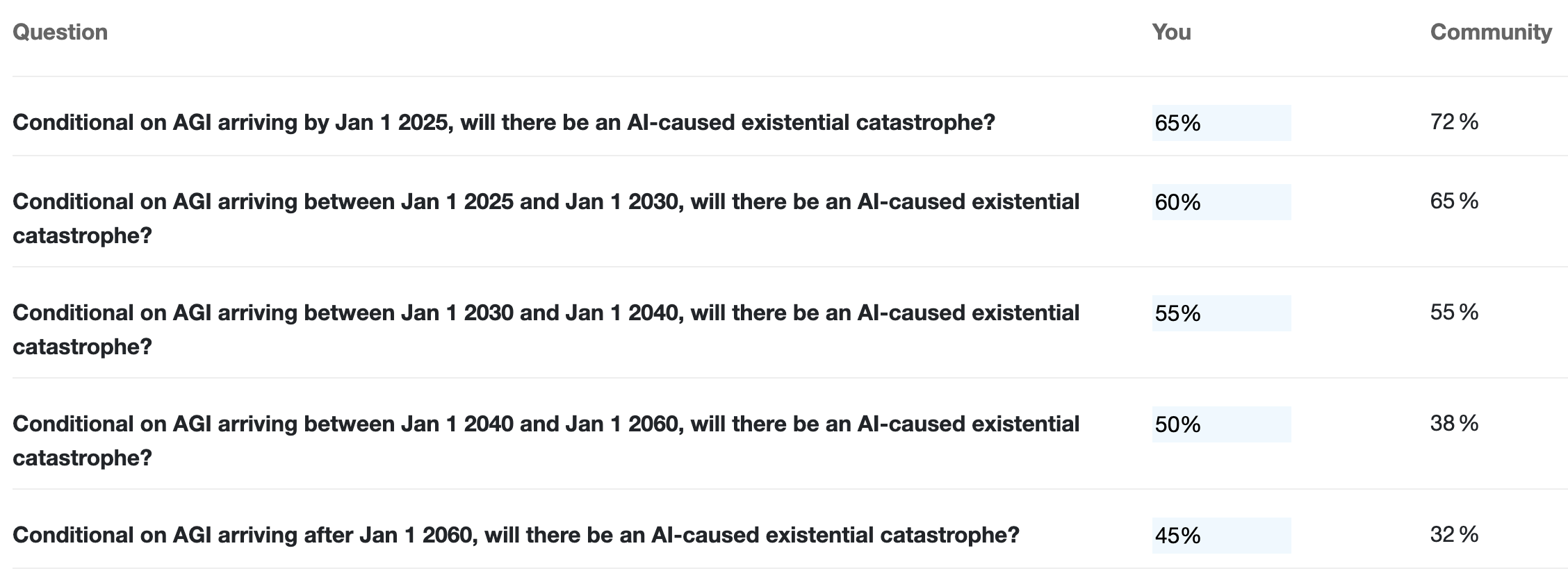

So overall, I think timelines matter a fair bit but not an overwhelming amount. I’d guess they matter most for (2). I’ll now very roughly translate these intuitions into forecasts for the chance of AI-caused existential catastrophe conditional on the arrival date of AGI (in parentheses, I’ll give a rough forecast of AGI arriving during that time period):

- Before 2025: 65% (1%)

- Between 2025 and 2030: 60% (8%)

- Between 2030 and 2040: 55% (28%)

- Between 2040 and 2060: 50% (25%)

- After 2060: 45% (38%)

Multiplying out and adding gives me 50.45% overall risk, consistent with my original guess of ~50% total risk.
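For anyone who wants to check the arithmetic or plug in their own numbers, here is a minimal sketch of the "multiplying out and adding" step. The figures are copied from the list above; the names `forecasts` and `overall_risk` are just illustrative choices, not part of any existing tool.

```python
# Each row: (period label, P(catastrophe | AGI arrives in period), P(AGI arrives in period)).
# Values are taken directly from the forecast list above.
forecasts = [
    ("Before 2025", 0.65, 0.01),
    ("2025 to 2030", 0.60, 0.08),
    ("2030 to 2040", 0.55, 0.28),
    ("2040 to 2060", 0.50, 0.25),
    ("After 2060", 0.45, 0.38),
]


def overall_risk(rows):
    """Law of total probability: sum of conditional risk times arrival probability."""
    return sum(risk * prob for _, risk, prob in rows)


# Sanity check: the arrival probabilities should cover all cases (sum to 1).
total_arrival_prob = sum(prob for _, _, prob in forecasts)
total_risk = overall_risk(forecasts)

print(f"Arrival probabilities sum to {total_arrival_prob:.2f}")
print(f"Overall risk: {total_risk:.2%}")
```

Swapping in different conditional risks or arrival probabilities shows how sensitive the overall number is to each period; with these inputs, the later periods dominate because they carry most of the probability mass.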

My numbers for the last two questions were higher than I expected them to be. I have previously said things like "If Ajeya and Paul are right about timelines, we're probably OK." I still think that. But conditioning on AGI happening in 20 years isn't the same as conditioning on Ajeya and Paul being right about timelines. To get more concrete: If I had Ajeya's timelines, my overall p(doom) would be <50%. But instead I have much shorter timelines. When I condition on AGI happening in 2042, part of my probability mass goes to worlds where Ajeya was basically right, but part of my probability mass goes to "weird" worlds, e.g. worlds where there's a scary AI accident in 2025 and AI gets successfully banned for 15 years until North Korea builds it. It's hard to say how much doom there is in weird worlds like this, but it feels higher than in Ajeya's median world, because the cost of making AI is lower, there's more overhang, and takeoff speeds are faster.

(I should add that while I'm fairly confident that existential risk from AI is very high conditional on it arriving soon, I am very unconfident in my p(doom) conditional on AI arriving later.)

Oops, looks like I voted twice on this by mistake. It didn't occur to me that the EA and LW versions linked to the same underlying poll. I'll see if it's possible to delete answers...

Crossposted to EA Forum

While there have been many previous surveys asking about the chance of existential catastrophe from AI and/or AI timelines, none, as far as I'm aware, have asked how the level of AI risk varies with timelines. But this seems like an extremely important parameter for understanding the nature of AI risk and prioritizing between interventions.

Contribute your forecasts below. I'll write up my forecast rationales in an answer and encourage others to do the same.