And another one is when you're training GANs, you know that you have a generator and a discriminator. And GAN training is famously unstable. ... if you fix the discriminator and you're doing back prop on the generator to make it fool the discriminator, eventually it just finds some kind of defect in the way the discriminator works.

Sure, but there are two problems with this. Firstly - why in god's name would someone fix the discriminator? Does your AI risk model really depend on humans developing something that could work well and then just intentionally ... breaking it? Secondly it's a bit of a strawman argument, as diffusion models don't really have this instability - so why not direct your argument against the diffusion model steelman version?

Cranking up the generator of a diffusion model (increasing it's capacity and training dataset) just causes it to create increasingly realistic images. There is no adversarial optimization issue, as it's a single cooperative joint objective that you are optimizing for. Meanwhile cranking up the capacity of the discriminator in a diffusion model improves it's ability to understand language prompts and steer generation towards those prompts.

At some point the discriminator becomes superhuman, at which point humans stop noticing much of further improvement in matching target prompts. At some point the the generator becomes superhuman, at which point humans stop noticing further improvements in realism.

There is of course an equivalent of 'maximizing faciness' in diffusion models - you can obviously crank up the discriminator weighting term and get bizarre images. But that's strictly a hyperparmeter change and thus mostly just equivalent to creating a great AI and then intentionally crippling it. I guess the closest equivalent is trying to generate humans using earlier diffusion models like disco diffusion which would mostly fail because of some combination of insufficient diffusion model (not trained on enough humans) and too large discriminator weighting.

The diffusion model also has a direct analogy to future diffusion planning agents. Cranking up the generator then increases the realism of their world model and planning ability, while cranking up the discriminator increases their ability to recognize and steer the future towards specific targets. At some level of capability in the generator you get a superhuman planner, and at some level of capability in the discriminator you get a superhuman steering mechanism, but there is no adversarial robustness issue. The equivalent of the disco diffusion human rendering failure is not a diffusion planner killing us, it's a diffusion planner generating a completely unrealistic plan. (Whereas the diffusion planner killing us is more like the equivalent of a diffusion image model generating an ultra-realistic picture of a corpse when you ask for a happy child - presumably because of a weak discriminator or poor hyperparams)

The fact that we already have strongly superhuman AI pattern recognition already in some domains suggests we could eventually have AI which is strongly superhuman at recognizing/predicting the human utility of simulated futures. Also - generating realistic image samples is far more difficult than superhuman image recognition.

I think you're misunderstanding the reason I'm making this argument.

The reason you in fact don't fix the discriminator and find the "maximally human like face" by optimizing the input is that this gives you unrealistic images. The technique is not used precisely because people know it would fail. That such a technique would fail is my whole point! It's meant to be an example that illustrates the danger of hooking up powerful optimizers to models that are not robust to distributional shift.

Yes, in fact GANs work well if you avoid doing this and stick to using them in distribution, but then all you have is a model that learns the distribution of inputs that you've given the model. The same is true of diffusion models: they just give you a Langevin diffusion-like way of generating samples from a probability distribution. In the end, you can simplify all of these models as taking many pairs of (image, description) and then learning distributions such as P(image), P(image | description), etc.

This method, however, has several limitations if you try to generalize it to training a superhuman intelligence:

-

The stakes for training a superhuman intelligence are much higher, so your tolerance of making mistakes is much lower. How much do you trust Stable Diffusion to give you a good image if the description you give it is sufficiently out of distribution? It would make a "good attempt" by some definition, but it's quite unlikely that it would give you what you actually wanted. That's the whole reason there's a whole field of prompt engineering to get these models to do what you want!

If we end up in such a situation with respect to a distribution of P(action | world state), then I think we would be in a bad position. This is exacerbated by the second point in the list.

-

If all you're doing is learning the distribution P(action humans would take | world state), then you're fundamentally limited in the actions you can take by actions humans would actually think of. You might still be superhuman in the sense that you run much faster and with much less energy etc. than humans do, but you won't come up with plans that humans wouldn't have come up with on their own, even if they would be very good plans; and you won't be able to avoid mistakes that humans would actually make in practice. Therefore any model that is trained strictly in this way has capability limitations that it won't be able to get past.

One way of trying to ameliorate this problem is to have a standard reinforcement learning agent which is trained with an objective that is not intended to be robust out of distribution, but then combine it with the distribution P such that P regularizes the actions taken by the model. Quantilizers are based on this idea, and unfortunately they also have problems: for instance, "build an expected utility maximizer" is an action that probably would get a relatively high score from P, because it's indeed an action that a human could try.

-

The distributional shift that a general intelligence has to be robust to is likely much greater than the one that image generators need to be robust to. So on top of the high stakes we have in this scenario per point (1), we also have a situation where we need to get the model to behave well even with relatively big distributional shifts. I think no model that exists right now is actually as robust to distributional shift as much as we would want an AGI to be, or in fact even as much as we would want a self-driving car to be.

-

Unlike any other problem we've tried to tackle in ML, learning the human action distribution is so complicated that it's plausible an AI trained to do this ends up with weird strategies that involve deceiving humans. In fact, any model that learns the human action distribution should also be capable of learning the distribution of actions that would look human to human evaluators instead of actually being human. If you already have the human action distribution P, you can actually take your model itself as a free parameter Q and maximize the expected score of the model Q under the distribution P: in other words, you explicitly optimize to deceive humans by learning the distribution humans would think is best rather than the distribution that is actually best.

No such problems arise in a setting such as learning human faces, because P is much more complicated than actually solving the problem you're being asked to solve, so there's no reason for gradient descent to find the deception strategy instead of just doing what you're being asked to do.

Overall, I don't see how the fact that GANs or diffusion models can generate realistic human faces is an argument that we should be less worried about alignment failures. The only way in which this concern would show up is if your argument was "ML can't learn complicated functions", but I don't think this was ever the reason people have given for being worried about alignment failures: indeed, if ML couldn't learn complicated functions, there would be no reason for worrying about ML at all.

The technique is not used precisely because people know it would fail. That such a technique would fail is my whole point! It's meant to be an example that illustrates the danger of hooking up powerful optimizers to models that are not robust to distributional shift.

And it's a point directed at a strawman in some sense. You could say that I am nit picking, but diffusion models - and more specifically - diffusion planners simply do not fail in this particular way. You can expend arbitrary optimization power on inference of a trained image diffusion model and that doesn't do anything like maximize for faciness. You can spend arbitrary optimization power training the generator for a diffusion model and that doesn't do anything like maximize for faciness it just generates increasingly realistic images. You can spend arbitrary optimization power training the discriminator for a diffusion model and that doesn't do anything like maximize for faciness, it just increases the ability to hit specific targets.

Diffusion planners optimize something like P(plan_trajectory | discriminator_target), where the plan_trajectory generative model is a denoising model (trained from rollouts of a world model) and direct analogy of the image diffusion denoiser, and discriminator_target model is the direct analogy of an image recognition model (except the 'images' it sees are trajectories).

The correct analogy for a dangerous diffusion planner is not failure by adversarially optimizing for faciness, the analogy is using an underpowered discriminator that can't recognize/distinguish good and bad trajectories from a superpowered generator - so the analogy is generating an image of a corpse when you asked for an image of a happy human. The diffusion planner equivalent of generating a grotesque unrealistic face is simply generating an unrealistic (and non dangerous) failure of a plan. My main argument here is that the 'faciness' analogy is simply the wrong analogy (for diffusion planning - and anything similar).

- How much do you trust Stable Diffusion to give you a good image if the description you give it is sufficiently out of distribution? It would make a "good attempt" by some definition, but it's quite unlikely that it would give you what you actually wanted. That's the whole reason there's a whole field of prompt engineering to get these models to do what you want!

Sure - but prompt engineering is mostly irrelevant, as the discriminator output for AGI planning is probably just a scalar utility function, not a text description (although the latter may also be useful for more narrow AI). And once again, the dangerous out of distribution failure analogy here is not a maximal 'faciness' image for a human face goal, it's an ultra realistic image of a skeleton.

- If all you're doing is learning the distribution P(action humans would take | world state), then you're fundamentally limited in the actions you can take by actions humans would actually think of.

Diffusion planners optimize P(plan_trajectory | discriminator_target), where the plan_trajectory model is trained to denoise trajectories which can come from human data (behavioral cloning) or rollouts from a learned world model (more relevant for later AGI). Using the latter the agent is obviously not limited to actions human think of.

One way of trying to ameliorate this problem is to have a standard reinforcement learning agent

That is one way, but not the only way and perhaps not the best. The more natural approach is for the learned world model to include an (naturally approximate) predictive model of the full planning agent, so it just predict it's own actions. This does cause the plan trajectories to become increasingly biased towards the discriminator target goals, but one should be able to just adjust for that and it seems necessary anyway (you really don't want to spend alot of compute training on rollouts that lead to bad futures).

- The distributional shift that a general intelligence has to be robust to is likely much greater than the one that image generators need to be robust to.

Maybe? But I'm not aware of strong evidence/arguments for this, it seems to be mostly conjecture. The hard part of image diffusion models is not the discriminator, it's the generator: we had superhuman discriminators long before we had image denoiser generators capable of generating realistic images. So this could be good news for alignment in that it's a bit of evidence that generating realistic plans is much harder than evaluating the utility of plan trajectories.

But to be clear, I do agree there is a distributional shift that occurs as you scale up the generative planning model - but the obvious solution is to just keep improving/retraining the discriminator in tandem. Assuming a static discriminator is a bit of a strawman. And if the argument is it will be too late to modify once we have AGI, the solution to that is just simboxing (and also we can spend far more time generating plans and evaluating them then actually executing them).

- Unlike any other problem we've tried to tackle in ML, learning the human action distribution is so complicated that it's plausible an AI trained to do this ends up with weird strategies that involve deceiving humans. In fact, any model that learns the human action distribution should also be capable of learning the distribution of actions that would look human to human evaluators instead of actually being human.

Maybe? But in fact we don't really observe that yet when training a foundation model via behavioral cloning (and reinforcement learning) in minecraft.

And anyway, to the extent that's a problem we can use simboxing.

Overall, I don't see how the fact that GANs or diffusion models can generate realistic human faces is an argument that we should be less worried about alignment failures.

That depends on to the extent that you had already predicted that we'd have things like stable diffusion in 2022, without AGI.

The only way in which this concern would show up is if your argument was "ML can't learn complicated functions",

Many many people thought this in 2007 when the sequences were written - it was the default view (and generally true at the time).

but I don't think this was ever the reason people have given for being worried about alignment failures: indeed, if ML couldn't learn complicated functions, there would be no reason for worrying about ML at all.

EY around 2011 seemed to have standard pre-DL views on ANN-style ML techniques, and certainly doubted they would be capable of learning complex human values robustly enough to instill into AI as a safe utility function (this is well documented ). He had little doubt that later AGI - after recursive self improvement - would be able to learn complicated functions like human values, but by then of course it's obviously too late.

The DL update in some sense is "superhuman pattern recognition is easier than we thought, and comes much earlier than AGI", which mostly seems to be good news for alignment, because safety is mostly about recognizing bad planning trajectories.

I think we're not communicating clearly with each other. If you have the time, I would be enthusiastic about discussing these potential disagreements in a higher frequency format (such as IRC or Discord or whatever), but if you don't have the time I don't think the format of LW comments is suitable for hashing out the kind of disagreement we're having here. I'll make an effort to respond regardless of your preferences about this, but it's rather exhausting for me and I don't expect it to be successful if done in this format.

That's in part why we had this conversation in the format that we did instead of going back-and-forth on Twitter or LW, as I expected those modes of communication would be poorly suited to discussing this particular subject. I think the comments on Katja's original post have proven me right about that.

but diffusion isn't the strongest. diffusion planning is still complete trash compared to mcts at finding very narrow wedges of possibility space that have high consequential value. it's not even vaguely the same ballpark. you can't guide diffusion planning like you can guide mcts unless you turn diffusion into mcts. diffusion is good for a generative model, but its compute depth is fundamentally wrong. if you want to optimize something REALLY REALLY INHUMANLY HARD, mcts style techniques are how you do it right now; but of course they fall into adversarial examples of most world models you feed them, because they optimize too hard for the world model to retain validity. and isn't that the very thing we're worried about from safety - a very strong planner that hasn't learned to keep itself moving back towards the actually-truly-prosocial-for-real part of the natural abstraction for plan space?

I'm not sure which diffusion planning you are talking about - some current version? Future versions? My version?

MCTS doesn't scale in the way that full neural planning can scale. So MCTS is mostly irrelevant for AGI, as the latter requires highly scalable approximate planning. So to me the question is simply what is the highly scalable approximate neural planner look like? And my best answer for that of course is a secret, and my best public answer is something like diffusion planning.

Interesting that AlphaGo plays strongly atypical or totally won positions 'poorly' and therefore isn't a reliable advice-giver for human players. Chess engines have similar limitations with different qualities. First, they have no sense of move-selection difficulty. Strong human players learn to avoid positions where finding a good move is harder than normal. The second point is related: in winning positions (say, over +3.50 or under -3.50), the human move-selection goal shifts towards maximizing winning chances by eliminating counterplay. E.g., in a queen ending two pawns ahead, it's better to exchange queens than win a third pawn with queens remaining. Not according to engines, though. If a move drops the eval from +7.50 to +5.00, they'll call it a blunder.

I imagine these kinds of human-divergent evaluation oddities materialize for any complex task, and more complex = more divergent.

Yeah, that matches my experience with chess engines. Thanks for the comment.

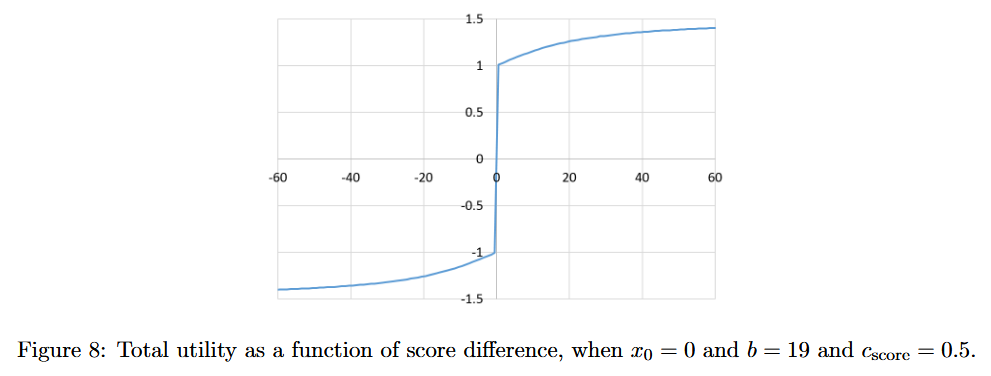

It's probably worthwhile to mention that people have trained models that are more "human-like" than AlphaGo by various parts of the training process. One improvement they made on this front is that they changed the reward function so that while almost all of the variance in reward is from whether you win or lose, how many points you win by still has a small influence on the reward you get, like so:

This is obviously tricky because it could push the model to take unreasonable risks to attempt to win by more and therefore risk a greater probability of losing the game, but the authors of the paper found that this particular utility function works well in practice. On top of this, they also took measures to widen the training distribution by forcing the model to play handicap games where one side gets a few extra moves, or forcing the model to play against weaker or stronger versions of itself, et cetera.

All of these serve to mitigate the problems that would be caused by distributional shift, and in this case I think they were moderately successful. I can confirm from having used their model myself that it indeed makes much more "human-like" recommendations, and is very useful for humans wanting to analyze their games, unlike pure replications of AlphaGo Zero such as Leela Zero.

"I think there's somewhat of criticisms are, I think, quite poor."

"somewhat of criticisms" -> "summary of criticisms"

This post is a transcript of a conversation between Ege Erdil and Ronny Fernandez, recorded by me. The participants talked about a recent post by Katja Grace that presented many counterarguments to the basic case for AI x-risk. You might want to read that post first.

As it was transcribed automatically by Whisper, along with some light editing on top, there might be many mistakes in the transcript. You can also find the audio file here, but note that the first five lines of the dialogue do not appear in the audio.

Ronny Fernandez wants to make it clear that he did not know the conversation would eventually be published. (But all participants have consented to it being published).

Ege Erdil

You know about Epoch, right?

Ronny Fernandez

Not really, actually.

Ege Erdil

OK, so Epoch is a recently established organization that focuses on forecasting future developments in AI. And Matthew is currently working there and hosted this post, like, with Slack. So there's some discussion of that.

Ronny Fernandez

Gotcha.

Ege Erdil

And he basically said that he thought that it was a good post. We didn't. I mean, it's not just me. There's also someone else who thought that it wasn't really very good. And that's just something he said, oh, you know, I should talk to Ronny because he also thinks that the post was good.

Ronny Fernandez

Yeah, sweet. So question I want to ask is, do you think the post wasn't good because you think that the summary of the basic argument for existential risk from superintelligence is not the right argument to be criticizing? Or do you think the post wasn't good because the criticisms weren't good of that argument or for some other reason?

Ege Erdil

I think it's both. I think there's somewhat of criticisms are, I think, quite poor. But I also think the summary of the argument is not necessarily that great. So yeah. So we could also go through the post and order that arguments are given. Or I could just talk about the parts that we talked about.

Ronny Fernandez

I'll pull up the post now. No, I think it'd be great to take a look at the argument and talk about how you would repartition the premises or change the premises or whatever.

Ege Erdil

Yeah. So let me first go to the last section, because I think it is actually interesting in the sense that the given arguments could in principle apply to corporations. And the post points this out. This is an example. It's been given before. And it says this argument proves too much, because it also proves that we have to be very worried about corporations. There's a certain logic to that. But I think what is overlooked in the post is that the reason we don't worry much about corporations is that corporations are very bad at coordinating, even internally, let alone across different corporations cooperating.

So I think the main reason we should be worried about AI, and there are some scenarios in which takeoff is very fast, and one guy builds something in his basement, and that takes over the world. But I think those scenarios are pretty unlikely. But I do think if you have that kind of view, the post is going to be pretty unconvincing to you, because it doesn't really argue against that situation. But I don't really believe that's plausible.

For me, the big problem is that the post does not focus on the fact that it will be much, much easier for AIs that have different goals to coordinate than it should be for humans. Because AIs can have very straightforward protocols that, especially if they are goal-directed in the sense defined by the post, you just take two goals and merge them according to some weights, and you have this new AI that's bigger. And it's a mixture of the two things that was before.

AIs can coordinate much more effectively through this mechanism, while humans really cannot coordinate. And when you say that a corporation is maximizing profit, and that's dangerous, because if you maximize anything too far, then it's going to be bad. I think that's true. But I think a corporation is not. A corporation has so much internal coordination problems that a corporation is not really maximizing profit in any sign of meaningful way. A corporation is much too inefficient, much too poorly coordinated to be doing anything like that.

And I think if you had these huge agencies, like governments or corporations that are huge in scale, and they were all coordinating with both internally and likely could coordinate with each other very well, I think that could be a very, very bad situation. And so I don't think it would be as bad, but it would be really bad. If you imagine there are 10 countries in the world, and they all have some perfect coordination between each other, that would be pretty dangerous, I think.

Ronny Fernandez

So I think that's just like to the extreme, if they could make deals about how they're going to change their terminal goals with each other, where they can prove that the other one is going to hold up their end of the deal, and they can prove that I'm going to hold up my end of the deal. And clearly, that's a pretty big deal. Cool, yeah, so I pretty much agree.

I want to say, I mean, first of all, that's not the part of the post that I was most impressed by. I think I pretty much agree with you, except that I think it's pretty plausible that we'll end up with the first super intelligent AIs being about as bad at understanding their own cognition as we are. Does that make sense? Just because if we end up with just giant neural networks being what does it, then it might very well be that their cognition is not just as opaque, but still fairly opaque to them, if that makes sense.

Ege Erdil

Right, I think it's possible, the scenario is possible. I think it's fairly likely.

Ronny Fernandez

It's a question of it seems like the bigger you make a neural network, the more opaque it's going to be.

Ege Erdil

Sure, but there are other ways in which you can try to. Like for instance, I think there is like two ways in which your opaqueness can hinder you. One of them is like you might not know exactly what your own goals are, and the other might be you don't know what to change them. And I think if the problem is that you don't know how to change them, I think there are ways around that. If you know that, you can train a new AI with a mixed goal. But if you don't know what your goals are, I agree there's some problem there.

Ronny Fernandez

Yeah, yeah, yeah. What I was imagining was just like this very intense case where you can literally just write up a proof contract where we can just prove that we're both going to end up, we're both going to hold our ends of the deal. If your cognition is opaque to you, you are not going to be able to do that.

Ege Erdil

I agree. I agree that it would defeat that very simple protocol. Right. But yeah, I think there is a case in which the cognition of the AI might not be that opaque to the AI. That's not obvious to me, but it would be opaque. But I don't want to spend too much time on this, but I am curious.

Ronny Fernandez

What's the like besides using proofs?

Ege Erdil

Oh, you would need to prove it in some way. But the protocol might not be as simple as I have a utility function and you have some other utility function. We just take a convex elimination of those and we're done. It might not be something that simple.

Ronny Fernandez

Right, but somehow we're going to like, we're both two big ass neural networks. Somehow, the way that you're imagining the coordination is going to be so good is that somehow you end up with a proof that I'm going to hold up my end of the deal and I end up with a proof that you're going to hold up your end of the deal.

Ege Erdil

That's right, or we do something simultaneously in this case. Like, there are ways in which. Basically, the way I imagine it is that once such a deal is completed, like the previous neural networks, like previous AIs stop existing and there is a new AI that both of them think is going to pursue their goals to a sufficient extent that they can trust it in some sense. I think.

Ronny Fernandez

So I think this also seems pretty hard because I don't necessarily think it's obvious that alignment is going to be easier for very large neural networks to do than for humans to do. If that makes sense.

Ege Erdil

I don't necessarily think it might be easier for some goals more than others. And that's probably, like, if you imagine that an AI knows that a certain process is capable of creating it, then probably it knows what that process is and probably it knows that it can replicate it. That's already a pretty big advantage. Because we don't know that. We know that it's going to produce humans.

Ronny Fernandez

Yeah. If the only way that they have available to make a new AI is to do a bunch of machine learning, I mean, they're just going to end up with all the problems that we have with making aligned AIs, right?

Ege Erdil

Sure, but we are trying to make AIs aligned to us while they would be trying to make it aligned to them. And they are neural networks trained in the same way. So it's not obvious to me that it wouldn't be much easier.

Ronny Fernandez

Yeah, it seems to me like it would be about as hard. I don't expect neural networks to have particularly simple goals. I just expect them to have random different goals.

Ege Erdil

Cool.

Ronny Fernandez

But either way, I don't want to spend too much time on this because I don't think it's a real disagreement. I mean, I don't think it's an important disagreement for this because I overall agree with the point that it's very plausible that they'll have, to some degree, better coordination. And that's why the argument applies to AIs, but not to corporations. So the part of it that I thought was strongest was the values parts. So differences between AI and human values may be small. And also, maybe value isn't fragile part.

Ege Erdil

So the value is fragile part, I thought, was not like I thought that this would be the weakest parts of the entire approach. So the way I understand, for instance, the post gives examples of faces generated by this person does not exist as evidence that, oh, these faces all look like real human faces. And that is true. But I think the concern with the fragility of value is not that if you try to build something that it would look blatantly wrong in the training distribution.

So in this case, the relevant question, I think, is do you think whatever AI is being used to generate these faces that you find in the post, do you trust that AI enough that you would hook it up to an arbitrarily powerful optimizer to do what it wants with it? Like if it needs some understanding of faces, a model of how human faces are supposed to look, and you give it this AI as an oracle, do you think that would be safe? And I think it's pretty clear the answer is no. Like we know that this model's understanding of what a human face is supposed to be like is not going to be that good, even though we can't spot differences.

We can't spot the ways in which it's not realistic based on just these images. And I think that's actually exactly the kind of situation we would have when we are trying to align the AI is that it would look to us like it was aligned in the distribution, like in what we see in distribution. But if you deploy it in some maybe small fraction of worlds or cases or environments, you would get some very catastrophic failure.

And that's, I think, what I would also expect if you did exactly what I said before, like hooking this up to a very powerful optimizer to do something. It doesn't even matter what. It would just be importantly wrong in some way. And I think the post doesn't address this at all. It just says, oh, you look like all these faces have noses. And if they didn't have noses, they wouldn't be human faces. But the model knows with noses in there, which I thought was kind of silly. Of course, it knows how to put noses in there. That's the kind of thing we should expect in alignment failures scenarios.

Ronny Fernandez

So I think the analogy is supposed to be that, so the classical argument for human values being fragile is something like the function that a human uses to say how good a world is is very complicated. And furthermore, if you mess with any of the parameters in that function, you don't end up with a function that kind of closely approximates what a human uses to evaluate worlds. You end up with a function that just doesn't track what a human uses to evaluate worlds at all. Is that something like that?

Ege Erdil

It might track it very well in some subset of worlds. It just doesn't general pass to domain when we shoot a training. Right, sure.

Ronny Fernandez

But I mean, I do trust this AI to make human faces. If the entire world depended on making a drawing of a human face.

Ege Erdil

Yeah, but that's not the question I'm asking. You're evaluating the model exactly on the task that it was assessed for in the training.

Ronny Fernandez

But in the other case, we'll also be training a model to maximize human values in the environment.

Ege Erdil

So if you try to do that with the same kind of structure, I mean, I don't know what this person is using nowadays, but once upon a time, it was using GANs. And in that way you use GANs is that there's a discriminator and the discriminator is supposed to not be able to tell you apart from the real human faces. So if you try to do something like that to align AI with human values, you're essentially doing something like reinforcement learning between feedback except the evaluator is itself a neural network. And so you save time instead of having to evaluate them one by one. And obviously, people have talked at length about the problems that this kind of scheme would have for alignment.

Basically, you would just be training things to look good to humans instead of actually being good. And there could be a big difference there in principle. If you're evaluating things in the training distribution, nobody thinks that the AI is going to do something that to a human looks bad. But if you deploy the AI in the real world, which is going to be a distributional shift, no matter how much you try to avoid it, or if you're sampling enough times for the same distribution, so you get some kind of extreme value which you did not do in training, then there's really no guarantee.

If this model generates a face that's not realistic, say that it does this one out of one quadrillion times each time you run it, which could be possible, then there is no real reason. You don't lose anything because the model generates a bad phase. And the cost of that is very, very small. And if it does that, you can just update the model so it doesn't do that anymore. You have online learning because the stakes are small. But if stakes are very large, then either distribution shift or same distribution, but you sample a very extreme value from the distribution very infrequently, that could have very, very big impact.

In principle, you cannot bound the damage the AI is going to do by an average case guarantee. So I don't think it's a very good analogy to compare it with GANs or anything like that.

Ronny Fernandez

Yeah. So I like the point that we are seeing examples that we are not like. Yeah, so maybe here's another way to put the thing you just said. When it comes to the task of making something that looks like a human face, it's going to be very hard to come up with an image that looks like a human face but isn't looks to a human like a human face but doesn't actually look like a human face. Does that make sense?

Ege Erdil

I mean, if you're just evaluating the image, I think. That is right. But obviously, you could. Like, I think I agree with that in this context. But that's not quite like that's not my only point, I guess.

Ronny Fernandez

OK. Well, here's the point that I have. The point that I have is, or here's what you talking inspired in me. Like, in fact, in cases like, for instance, when you're trying to make sure that a diamond is still in a room, there are lots of cases that look to humans like success. And as far as a human can evaluate it, it is success. But when it comes to the task of making a drawing of a human face, humans can pretty much evaluate that flawlessly. And so that's an important difference between these two kinds of tasks. That's an important disanalogy.

Ege Erdil

Right? I agree. Cool.

Ronny Fernandez

And there was something else that you were trying to get across?

Ege Erdil

Sure. I'm just saying that I would expect that a GAN actually has a pretty substantially high error rate in the sense that if you take this person does not exist and you sample like a million images from this website, I would expect that one or two at least would look like very unrealistic. Sure. Yeah. So my point was that in a regime where stakes are low, then it doesn't matter if you have an error rate of like one in a million. It's pretty good. And whenever you make an error, that tells you something new, like about the way the model can go wrong. And you can just do online learning. You can just fix whatever problem the model had. But if you're in a regime where the model's decisions could have arbitrarily high cost, then one mistake made by the model could be catastrophic. Or at least it could have very, very high cost.

Ronny Fernandez

I'm pretty positive that Katja does not think that these arguments bring the probability of doom from AI below 1%.

Ege Erdil

Yeah, I asked Matthew and he said that she gives something like 8%.

Ronny Fernandez

Yeah, right.

So I guess one of my other problems is that I think this would be much better if the claims that are made as a certain claims, like there's a lot of claims in the posts that are often formed, like that x is not necessarily true, or like y may not be true, or claims like this. And I would feel much more comfortable if we could get some confidence estimates or probability estimates on these claims. Because when I see x might not be true, I'm not sure if I should put a probability of 90% or 10% on x. Sure.

Ronny Fernandez

Yeah, so I mean, I think Katja is responding to people like myself, who are like 60 or 70, maybe even higher, if I'm being honest, on like, if we make AGI, we will have an existential catastrophe. And she's saying that that's way too high. I don't think that she's arguing against, like, in general being worried about it, if that makes sense.

Ege Erdil

Sure. I mean, I think that's fine. But my problem is like, I'm also, like I'm actually less worried about it than she is. But I think her arguments are not convincing to me. The reason I'm not worried is not because I think her arguments are good. I think if all I had were her arguments, I would be pretty worried. So that's like, yeah. Cool.

Ronny Fernandez

Well, some other time, I would love to hear your arguments, then.

Ege Erdil

Sure. Like, what part of the, I think you also said that difference, like this idea of difference between AI values and human values, like these might be small. And you said like that's part you thought was good?

Ronny Fernandez

Yes, that's the main part that I thought was good.

Ege Erdil

OK. OK, so first of all, I'm not sure that's, like, I mean, maybe this is like a rough, heuristic kind of reasoning. But I really don't like denoting differences between values by like a single number. Like, you know, you can just like, you have this value and that's some other value. And like, there's a difference between them. And like, I don't see how that can make sense. Because I think in any, like, if two values differ by any kind of, like, any material way, then there will be a world in which they differ a lot. And like, maybe you think that world is like, clear and likely or it's not realistic. So it's like that world to happen. Well, there is actually, I mean, there's a pretty reasonable way to do it.

Ronny Fernandez

There's like, I mean, the way that I would do it would be like, suppose that I were God, how much utility would I get according to my utility function? Then suppose that this other utility function is God. How much utility do I get according to my utility function if it's allowed to run wild? And then you just subtract the two values. Does that make sense?

Ege Erdil

I mean, obviously, you need to sort of specify what God means. Like, if you're God, why can't you just set your own utility?

Ronny Fernandez

You just argmax the universe. You find the argmax of the universe for that utility function. And then you find the argmax of the universe for your utility function. And then you take the difference and that's the single value.

Ege Erdil

Sure. Obviously, yeah, that's one thing you might use. But in that case, I would expect d to be large with any kind of Montreal difference. I would expect d to be very large even for me and you.

Ronny Fernandez

Yeah, I agree that d would be very large for me and you or for me and a random person. My guess is less large for me and you than for me and a random person, but whatever.

Ege Erdil

Yeah, I agree with that. Yeah.

Ronny Fernandez

Yeah. So I really like the point that there's a disanalogy between, I mean, I would recommend, I mean, I would be happy to write it as a comment or you could write it as a comment. But I think the point that there's an important disanalogy between the task of making human faces and the task of taking actions in the world that maximizes human values is that we can evaluate every performance of making a human face and we cannot evaluate every performance of doing actions in the world according to some goal.

Ege Erdil

Yeah, I agree with that. I think that's a really important disanalogy between the two cases. Sure. Is there anything else, like any other parts?

Ronny Fernandez

So I mean, yeah, I want to kind of delve into this a little bit more. So suppose that in fact.

[long pause]

So this disanalogy is like a reason that the two, OK, so Katja's analogy goes something like this. This is how I think of the argument. Look, when it comes to making human faces, making a human face is a really complicated thing. What counts as a human face is a really complicated function. But ML is very good at figuring out what counts as a human face. So the mere fact that human values are really complicated does not suggest that ML is going to be really bad at figuring out what worlds count as very high human value versus very low human value. As long as we can evaluate that well.

Ege Erdil

Yeah, I agree with that argument. I think in fact, if you are not in a situation where getting human values wrong would be catastrophic in the sense that one mistake or one way in which you might make a mistake could cause some disaster. I think Paul Christiano drew this distinction between high stakes versus low stakes learning, where if you're learning at low stakes, which is like learning human faces, that's a low stakes learning problem. If you make one mistake, you don't pay a huge cost for it. So you can afford to make a lot of mistakes during training and also during deployment.

You can see how your deployment model fails in real world in some ways. For instance, if you're using something like DALL-E or stable diffusion, you can test out prompts in real world for which someone gives garbage answers. But the world is not destroyed because stable diffusion didn't give the image you want. So it's pretty good. Obviously, it has very good performance. But it doesn't always give you a good image that matches the prompt you gave it. And that's because there could be various reasons. Maybe it just didn't see the kind of prompt you were giving it in training at all. And it's sufficiently out of distribution that it doesn't know what to do. And there are many examples like that in machine learning.

One other example I particularly like is when AlphaGo was trained, the objective that it was given was to win the game. So it was scored strictly on, do you win the game or do you lose the game? And that seems like a reasonable thing to score. But then some people might want to use the bots to analyze their games or to suggest a move that for a human would be a good move to play in a position. And AlphaGo doesn't do that because if it knows it's winning, it's ahead by a lot. It just makes random moves because it knows it's going to win anyway. And that might not be obvious when you initially wrote down the reinforcement learning program. But in fact, it is something that it does.

Or another thing is it doesn't know what to do in positions that it doesn't encounter through training. If you make some artificial position on the board that will never occur in real play, then it doesn't know what to do. And that's exactly the problem I have, like the distributional shift. You see when you're playing Go in a certain setting, it seems like if AlphaGo is good at playing Go, you might expect it to be good at playing Go in other positions that might not naturally occur during play. But in fact, it's not because it doesn't see those positions. And it doesn't get a reward for getting them right in the training distribution.

So that's the kind of problem I expect. And if the mistakes are very costly, then you cannot afford to deploy the system in the real world and wait until it makes a mistake and then do online learning because that might be the end of the world. So you can't afford to do that. Obstacle I see to alignment.

Ronny Fernandez

So just I guess what I was imagining is that you do something like somehow you represent world states. And you're like, and you basically train something to recognize valuable world states. It seems to me like if you get a module that's very, I mean, I feel like if you get a module that's as good at recognizing valuable world states as the GAN model here is good at recognizing human faces, then very likely things are not going to end up that bad.

Ege Erdil

Yeah, so I'm not that confident of that. But I think if we could, if you could do as well as current systems do for face generation, I agree that would be pretty good. But you have to take into account that current systems are not just the result of training them in the training distribution. It's also that you get to see the kinds of mistakes that these systems make in the real world and you take action to make them many, many times.

Ronny Fernandez

I mean, and also in fact, the cool thing about this is that we're very, we in fact have humans that are very good at evaluating whether something is a human face or not. We are not as nearly as good at evaluating whether something is an action that promotes human values or whatever.

Ege Erdil

Yeah, obviously there's also additional complications that arise from the fact that evaluating actions is much more difficult because you also need to evaluate both the value part and the world model part because you don't necessarily.

Ronny Fernandez

I'm like, OK. I'm like, what if you just train something that just looks, takes, it takes in world states and you're like, it gets a very high score if we're very confident it's a good world state. And it gets a low score if you're either confident it's a bad world state or we're very unsure or there's a lot of disagreement. I don't know. If it learns this function as well as it learns the function of what counts as a human face, I'm feeling pretty happy. Does that make sense?

Ege Erdil

Sure. But again, the main obstacles I see to this, again, one of them is evaluating a world state is very complicated for humans. It takes a very long time probably to give a human a single world state and ask them, is this a good world or not?

Ronny Fernandez

Yeah. And so I think the way we would do it is we would probably do a bunch of things that are like, it's like this world except it's like the actual world except this is different, things like that.

Ege Erdil

Yeah. I mean, honestly, I'm not sure if that would work because you can imagine that it's like the actual world, but this is different, but it has many non-trivial consequences that you might not have. So for instance, you might say there is this additional world, which is like the real world except instead of a Lorentzian metric, we have a Euclidean metric or something like this. And that seems like a very simple change. But of course, such a change would affect everything, if it affect our chemistry or if it affect our electromagnetism.

Ronny Fernandez

So the proposal that I'm giving right now, it would be like that would get a very low score because we're not very sure what that world would look like.

Ege Erdil

Sure, but I'm saying the AI can make proposals for which you would be sure.

Ronny Fernandez

Ah, and I'm wrong.

Ege Erdil

Right. Right, cool. And so the main thing that I think one of the valuable things that came out of the ELK, like the Euclidean latent knowledge report was this idea that in this context, some AI system that you're trying to get to be AOL might learn to be a human simulator instead of something else. Basically, it might try to model how your brain works. And it might give you examples that you would think are good but are not actually good.

Ronny Fernandez

OK. Yeah. OK, I want to point out, though, that this has kind of moved not your goalpost necessarily, but I don't know, it's moved my goalpost. Or I don't know, the problem is no longer that human values are complicated or fragile. The problem is that we don't know how to evaluate these things. But for things we can evaluate, go on.

Ege Erdil

I think the fragility of the human values is an important part of the argument. But by itself, as you say, it would not be enough to accept for this additional problem. Notice that I mentioned two problems. One of the problems I mentioned is that even in distribution, you cannot trust current systems well enough to hook them up to an arbitrarily powerful optimizer. I would not trust any system that currently exists to hook it up to some very powerful optimizer and say, OK, you can do whatever you want. I think current systems are not reliable enough for that. And I think reliability is just generally an issue for machine learning systems.

I think, I mean, if you're talking about things like self-driving cars, obviously the reason that doesn't happen right now, I'm pretty sure, is because it's just not reliable enough. And if you look at humans driving cars, they are much, much more reliable than AI systems. And I mean, cars, the cost of making mistakes driving cars, it's not zero, but it's not that high. In the future, we'll be talking about systems for which a single mistake could be much, much more costly than that, potentially. It could mean some kind of extinction scenario, or it could mean some kind of bad value drift scenario. All of those are possible. And I think once stakes get that high, I would actually not trust current systems with anything. I think you need much more reliability than this before you can be confident of that.

And you can also look at, so for instance, there was this recent project by Redwood Research, and they were trying to get a language model to not output, not give prompt completions that involves people getting injured. And they tried to get very high reliability on this, and they just couldn't really get very far. So it is true that you can do this, and in almost all cases, you can filter out the prompt so that nobody gets injured. But there are cases for which you cannot, and that's the problem.

Ronny Fernandez

But if in almost all cases that I run a superintelligence, we end up with a pretty good world, then I'm feeling much happier than I was before this post.

Ege Erdil

Sure, but I mean, it's not that. In almost all worlds, you end up with a good world. It's that in every world, you'll be running lots of superintelligences, lots of times, and lots of decision problems, lots of different Americans. And eventually, something is going to go wrong. That's the way I think you should be thinking about it. And you cannot afford to know.

Ronny Fernandez

If that's the case, then I'm imagining a world where, like, I don't know, it doesn't look like a world where there's like one superintelligence that does a lot of damage.

Ege Erdil

I agree. I mean, I don't expect, I mean, obviously, it's kind of hard to talk about for AIs. It's a bit ambiguous if there's like one AI or like there's many AIs. What is the boundary of an AI? That's a bit ambiguous. But I think it is like easily, like basically, I think any kind of, like right now, the reason we think alignment is a hard problem is because we just, like the reason I think it's a hard problem at least is precisely because we cannot do this online learning. I think that's the real obstacle. Because otherwise, I think we could figure out ways in which to do it.

Ronny Fernandez

I mean, from this conversation, it seems to me like the obstacle really is the eliciting latent knowledge.

Ege Erdil

Right, right. I think stuff like that are the obstacles. I agree with that. But again, like any kind of training system, you know like it's going to, like any kind of engineering problem in the world, you know you're going to make mistakes when trying to build a system. That's just basically inevitable. You cannot get everything right the first time you do something.

Ronny Fernandez

Let me put it another way. Look, there's a big difference between 99% of the actions of an AI are like part of a plan that makes the world very good and 1% are part of a plan like, I don't know, make the world much worse. And like 100% of an AI's actions are steering the world towards a horrible state.

Ege Erdil

That's not how I would put it. That's not necessarily how I would express it. But I think it's more that the AI, like from its point of view, is doing something that is consistent. But it's just not like what we actually wanted to do out of the solution. That's the way I would put it.

Ronny Fernandez

Sure, sure, sure, sure. But like there's a question of how far from what we wanted to do it is, right? Like if I think 99% of its actions are great, or 99.9999% of its actions are great, and 1% of its actions are awful, I'm in a much better state than if I think all of its actions are aiming towards some horrible world state that I really hate.

Ege Erdil

True, but I think nobody who, like, OK, I won't say nobody. But I think the kind of alignment failure scenarios I think are likely are not scenarios in which the AI is 100% of the time doing things that look very bad to humans. No, no, no, I'm not saying look very bad.

Ronny Fernandez

I'm saying they aim at a world state that's really awful.

Ege Erdil

Mm-hmm.

Ronny Fernandez

Like the reason.

Ege Erdil

I think that's the reason.

Ronny Fernandez

Like the reason it's, you know, whatever, gaining a bunch of popularity or whatever is because it ultimately wants to, like, you know, whatever, turn the world into hedonium or whatever.

Ege Erdil

Mm-hmm. But I don't expect those kinds of goals will be particularly prominent. But I do think that scenarios in which the, like, I expect basically that if you try to do something like reinforcement learning with human feedback, the problem is going to be exactly what you said. Like the AI is going to be something. Like it's going to be selected according to the pressure of looking good to humans. And the question is, does that mean it's actually good? Like to what extent is it good? And, you know, I don't see any kind of guarantee that we could have that doing that and then deploying it into the world is going to give, like, good outcomes. Sure.

Basically, I think the guarantee you can get is that at least in the training distribution, in, like, in the average case, the AI doesn't do anything that's, like, very bad or at least looks very bad. It's kind of hard when evaluation by humans is extremely difficult. And humans are, like, exploitable in some way in the sense that the AI can simulate you and, like, decide what you think is going to be good. And that's not going to be what is actually good. And it might even be simpler to simulate a human because simulating the world is very hard if the problem is very hard to solve, while simulating a human is, like, in principle easier.

So there are all these, like, tricky things that come in. But basically, I don't think, like, the post seems to me that it's saying, like, reinforce the learning with human feedback, like, would essentially work because that's the actual analog of what is done, like, with human faces. But I think there is, like, strong reasons, several reasons to think it would not work. I don't think that's what it's saying.

Ronny Fernandez

I think the most natural interpretation of what it's saying is you train something that learns the human value function. And then you train it.

Ege Erdil

Well, by, like, giving it examples of, like, the same way you train something that, like, learns to recognize human faces. I don't see how that's a, I mean, that's functionally equivalent to doing reverse learning with human feedback. It's not much different. And it has exactly the same failure cases. So I don't know why that's different.

Ronny Fernandez

I don't think it has exactly the same failure cases.

Ege Erdil

Can you name a difference? I don't know.

Ronny Fernandez

Yeah, like, well, no, I guess it kind of is the same in that either one is solved if you solve the eliciting latent knowledge, right? Like, you could do it either way. If you have eliciting latent knowledge, you're done.

Ege Erdil

Yeah. I agree with that. I'm not entirely, like, I think it certainly comes, like, if you have eliciting latent knowledge, you're at least very close to solving the problem. I can still imagine some unlikely problems that will show up. But I don't think it's, like, if you really have a solid solution, I think you're pretty much done. Cool. But I think that's the same problem. Right.

Ronny Fernandez

So before I said, it looks like, so before I said that the contribution of the post is that it's switching the fragility, it's switching the problem from fragility of values to eliciting latent knowledge. And then you said, no, the fragility is still an important component. And I think you were saying that for a different reason than I think. Like, it seems like you're putting a lot of focus on an argument that's kind of like, well, this is very high stakes. So we can't afford mistakes, even if they're low probability.

Ege Erdil

Yes.

Ronny Fernandez

OK, cool. And I'm like, look, I agree. But low probability mistakes are, like, if we're in a state where the situation is, yeah, one out of a million shots is going to be a mistake, or from our perspective is going to be a mistake, I'm feeling pretty happy.

Ege Erdil

I would not feel very happy in that situation.

Ronny Fernandez

Well, I'm feeling happy because of what I was anchored on. What I was anchored on was like 70% of the time that we've run a superintelligence, it's going to be aiming for a totally valueless world state.

Ege Erdil

Yeah, I think it's a bit tricky to, like, most of the alignment failure scenarios, I expect, don't end with a totally valueless world state. OK, fair enough. Most of them don't. Most of them, what happens is that there is some kind of important way in which human values get messed up or get weighted towards something that we would really not want to value. And basically, there is some kind of corruption that happens in civilization.

So I think that's the more typical way in which this failure is probably going to happen. There are cases in which it, so I expect it to be quite bad. That could be quite bad, but not as bad as total extinction and universal zero value going forward. And I think that's because of the way I expect failures to happen.

Like, again, I'm pretty strongly anchored to you deploy AIs. And they most of the time do what you expect them to do, like in most situations. But in some situations, they can make a very impactful quote unquote mistakes. And that can cost you a lot. And you might have to compromise with them in some way. Or you might take huge costs because of that. So those are the kinds of failures I expect. I don't think it's super plausible that you would train some very simple utility maximizer, some simple thing that is going to emerge in training. And then it's going to turn the universe into paperclips. That seems not very plausible to me, but it's certainly possible.

Ronny Fernandez

Cool. So yeah, I think especially, so I mean, I generally think that you end up with situations where you have a bunch of different intelligences around. And they have different goals. And they can do things like we were talking about earlier, where they basically just do kinds of coordination to essentially become one AI. And that it's going to have some random goal, which is going to be in some ways kind of rhyme with human goals, but not be very much like them in such a way that if the universe were maximized according to those goals, I would think it's pretty horrible. Although maybe in some ways slightly more charming than a literally dead universe. So that's my mainline scenario most of the time.

Ege Erdil

Sure. Cool. I guess one, so again, this is not a point on which I disagree with the post. I actually agree. But I think to people who do not already agree, the post is not going to be very convincing. And it's this point about do you expect takeoff to be fast or slow, or do you buy into this kind of framework where AI is going to slowly develop. And there will be many different AIs with many different goals instead of there is a critical software breakthrough at some location. And then those people just train one AI that's far beyond anything.

Ronny Fernandez

Yeah, I don't even necessarily see that as a distinction. I see the distinction as like I definitely think there will be a period where I think it's very likely that there will be a period where we have a bunch of different very intelligent pieces of machine learning being trained to do all sorts of things. But I do think eventually you end up making a concept or machine learning model, either you or machine learning models eventually some combination thereof end up making consequentialists, general consequentialists. And they end up basically taking over. And it's pretty unlikely that they end up with goals that we're happy with. But I'm not imagining one particular group or whatever just has a massive breakthrough and makes a god overnight.

Ege Erdil

Cool. Sure, but I know you're not imagining that. I'm saying if someone is imagining something like that, then the post would not be very convincing to them. Oh, right, right. Well, I am imagining gods eventually. Sure, but eventually is like in the you don't really think that let me think about how to put this. It seems to me that the post pretty strongly assumes that there will be many different AIs and they will have many different goals that differ in various directions from human goals. And they will not, again, it doesn't really talk about this point about coordination.

But if you ignore that, then that's a beast. I think in some part of the post, that's the reason why the post is not as great. But I think that's pretty plausible. But if you don't think it's possible, then I don't see how in this kind of foom scenario where there's a single AI that's just much more capable than anything else on the planet. And that single AI is not properly aligned, then I don't see how you escape some kind of big disaster in that scenario. I don't think the post makes a good argument to guess that.

Ronny Fernandez

I agree. I agree. I think the way that the discourse should go is a premise in the argument for the god scenario was the fragility of human values. And the post moves the problem from fragility of human values, or from complexity of human values, let's say, to eliciting latent knowledge.

Ege Erdil

Yeah, but I think there is a sense in which, I think the low stakes versus high stakes distinction is actually built into Eliezer's original post on value being fragile. Because the example he gives, for instance, is you leave the value of boredom out of some AI. It's a very simplistic way of talking about how AI would have values. But let's say we imagine like that. Then he's saying, if you have an optimizer that is optimizing that, then it's going to have a single experience that you think is good, then it's going to play that over and over again.

And the way I interpret that is the stakes for getting human values wrong in a very powerful optimizer are very high. And that's why there's a problem. You do not see the mistake you made, and you don't get the chance to correct it in advance necessarily. And that's why the sense in which value is fragile. If you change it a little and you optimize it very, very far, you're going to get problems. And I think that's basically the same problem that I'm talking about.

It's not that human values being complex is in itself a problem. Because ML learns complex functions all the time. The problem is that if you get the function wrong, and there is an optimizer somewhere in there, and it just pushes it very, very far, you might not get the chance to recover from your mistake.

Ronny Fernandez

But let's put it another way. So the post talks about this region of functions that are close enough. Right?

Ege Erdil

Mm-hmm

Ronny Fernandez

So there's these nearby functions that are close enough where, I don't know, either like, I guess there's an onion of regions where in the innermost, it's like, well, it's not exactly the function that I would have wished for it. But it's still going to be overall more value than I would have gotten without this thing existing. Then there's another one out there that's like, you know, it's like, there's a sphere at which it's equivalent to what would have happened if we didn't build it. It's different, but they totally use the same. Whatever. So if you're just looking at the complexity, then the argument like, hey, look at faces. We did a pretty good job with faces. So we'll have a decent chance of getting the value function right, does something to move your estimate if you ignore eliciting latent knowledge?

Ege Erdil

Sure, but I don't find it's not a new argument that ML can learn lots of complicated functions, obviously. Like, the ML does that all the time. It's not a new argument. And I think even before the modern era, like post-2012 era, before deep learning.

Ronny Fernandez

For what it's worth, that specific argument, specifically using faces as a response to the value is fragile thing, that's a very old argument from Katja, like at least three years old. And it was on a blog post of hers somewhere. And I thought the responses were pretty bad. I think the response that like, there's a difference of how much you need to elicit latent knowledge is a pretty good response. But I never saw it before.

Ege Erdil

Yeah, I'm not sure. I've never seen this argument before about faces. And I don't know. It just seems like an obvious point to me that, you know.

Ronny Fernandez

I agree it seems obvious. But I genuinely hadn't seen a very good response. But I agree it's an obvious point.

Ege Erdil

Like, I'm not sure, did Eliezer say something about this point?

Ronny Fernandez

Cool. Yeah, I'd like to see that.

Ege Erdil

No, I mean, did he say something? Or are you aware of something?

Ronny Fernandez

He said something but it was garbage. It was definitely not as concise as what you're saying now. It was definitely not as concise as like, hey, there's an important disanalogy between these two domains. In the one domain, we are perfect evaluators of what counts as a human face. We are not perfect evaluators of what counts as a good world. And we're not perfect evaluators of what counts as a good action or a good plan. So actually, could I get your LessWrong handle? Because I want to just quickly, in a comment, give you credit for the idea and just write it as a reply to the post.

Ege Erdil

My LessWrong handle is the same as my real name. It's the same as mine. Actually, my LessWrong profile, we can find the link to it in my Twitter bio.

Ronny Fernandez

OK, cool. I haven't written on LessWrong in a long time. How do I tag your profile? Do I just like?

Ege Erdil

You can just write my name and do a hyperlink to my profile. I don't know if there's any other way to do it.

[long pause]

Ronny Fernandez

Nate had a great reply on Twitter. Basically, wake me. He said, wake me when the allegedly maximally face-like image looks human.

Ege Erdil

And again, it is true that this is actually a serious problem. I think if two years ago, I saw a talk about using machine learning to build certain kinds of proteins that would have some kind of effect. And the idea was, oh, there's this naive idea. You can just train a classifier on different protein structures. And it can map each protein input to some output variable prediction that you care about. And then once your classifier is trained, you can just fix the classifier. And you just do some kind of search or gradient descent on the input. So you try to get the inputs to maximally give you the property that you want. When you want that, you just get garbage. So that's a very generic problem that happens in many different contexts.

And another one is when you're training GANs, you know that you have a generator and a discriminator. And GAN training is famously unstable. So if you fix the generator and you just train the discriminator to tell the generator's faces apart from the real faces, it can do that pretty well. Yeah, you just do gradient descent for a while. It initially learns to beat the generator. But if you're doing it the other way around, if you fix the discriminator and you're doing back prop on the generator to make it fool the discriminator, eventually it just finds some kind of defect in the way the discriminator works. And it just puts all of its effort. It just generates things that to you are obvious that they are wrong. But the discriminator can't tell.

And that's part of this general thing about adversarial attacks on neural networks. Like if some kind of image is out of the training distribution, then you can't really trust the assessment of the neural network on that image. You can just add a tiny bit of noise onto a dog image and make a classifier think it's elephant image or something. There are very bad properties of neural networks against adversaries in general. And those are all part of the same thing.

Once you move out of the training distribution, because on all these cases, when you do gradient descent on the input, you're moving out of the training distribution. You're trying to construct a kind of protein that was not in your training distribution. Because that's what you want to use the classifier for. If the protein existed already, then you would need to use this method. But once you push what you're doing out of the training distribution, so you're looking for something that doesn't exist, or you're evaluating your classifier on the kind of image that would not be present in your training set, then you cannot trust the conclusions of the model.

And that's the dangerous thing about this distributional shift. I think people have even exhibited properties like this in games with reinforcement learning, where a subtle kind of distributional shift in the structure of the game can make a robot that can make an AI. You train to win the game in the previous and train the solution, do totally nonsensical things in the test distribution.

So this is a very general problem that comes up. It made different context. I'm not sure how you get rid of this problem. The main way you deal with it is, again, if you have low stakes, then you can just do online learning. Whenever the re-order throws at you some example that was not in your training distribution, you just adapt, change your model, and you don't pay much of a price for that. And eventually, your model becomes robust enough to handle the reward distribution. But you might not be able to do that when the stakes are high. Sure. I'm not sure if anyone has tried.

I'm pretty sure if you indeed take a GAN and you try to pick the image that maximizes that to the discriminator, it looks maximally like a human face. I'm pretty sure that not actually looks at us like a human face. So I think that's a pretty good point, actually.

Ronny Fernandez

Yeah, I think Nate pretty much killed it with.

Ege Erdil

I think that's a pretty good point. I think I'm probably going to head off.

Ronny Fernandez

But it was really nice talking to you.

Ege Erdil

Yeah, likewise. Did you post the comments? I did not.

Ronny Fernandez

I'm going to tweet and tag you in it. And I mean, if you want, you can post it yourself. But basically, I just wanted to say that it's an important disanalogy between the two domains. In the one, humans are perfect recognizers of the thing. And in the other, we are not at all. So there could be examples that we are very sure are great but aren't. And then I also want to just, Nate's not going to put it on LessWrong. So I also just want to put Nate's comments on LessWrong from Twitter. But yeah, you should.

Ege Erdil

Yeah. Yeah, you can do that. It's fine.

Ronny Fernandez

Cool. Yeah, all right. Well, nice talking to you. I will see you around. Hope we talk again.

Ege Erdil

Sure. Nice talking to you, too. See you later.

Ronny Fernandez

Bye.

Ege Erdil

Bye.