The Active Inference literature on this is very strong, and I think it is the best and most overlooked part of what it offers. In Active Inference, an agent is first and foremost a persistent boundary. Specifically, it is a persistent Markov blanket, an idea due to Judea Pearl (https://en.wikipedia.org/wiki/Markov_blanket). The short version: a Markov blanket is a statement that a certain amount of state (the interior of the agent) is conditionally independent of a certain other amount of state (the rest of the universe), and that specifically its independence is conditioned on the blanket state that sits in between the exterior and the interior.
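As a rough formal sketch of that conditional-independence statement (the symbols are my own labels, not notation from any particular paper: $\mu$ for interior states, $b$ for blanket states, $\eta$ for exterior states):

$$p(\mu, \eta \mid b) = p(\mu \mid b)\, p(\eta \mid b)$$

Equivalently, the conditional mutual information vanishes: $I(\mu; \eta \mid b) = 0$. Once you know the blanket, learning the exterior tells you nothing further about the interior.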

You can show that, in order for an agent to persist, it needs to have the capacity to observe and learn about its environment. The math is more complex than I want to get into here, but the intuition pump is easy:

- A cubic meter of rock has a persistent boundary over time, but no interior states in an informational sense, and is therefore not an agent. To see that it has no interior, note that anything that puts information into the surface layer of the rock transmits that same information into the very interior (vibrations, motion, etc).

- A cubic meter of air has lots of interior states, but no persistent boundary over time, and is therefore not an agent. To see that it has no boundary, just note that it immediately dissipates into the environment from the starting conditions.

- A living organism has both a persistent boundary over time, and also interior states that are conditionally independent of the outside world, and is therefore an agent.

- Computer programs are an interesting middle ground case. They have a persistent informational boundary (usually the POSIX APIs or whatever), and an interior that is conditionally independent of the outside through those APIs. So they are agents in that sense. But they're not very good agents, because while their boundary is persistent it mostly persists because of a lot of work being done by other agents (humans) to protect them. So they tend to break a lot.

What's cool about this definition is that it gives you criteria for the baseline viability of an agent: can it maintain its own boundary over time, in the face of environmental disruption? Some agents are much better at this than others.

This of course leads to many more important questions -- many of the ones listed in this post are relevant. But it gives you an easy and, more importantly, mathematical test for agenthood. It is a question of dynamics in flows of mutual information between the interior and the exterior, which is conveniently quite easy to measure for a computer program. And I think it is simply true: to the degree and in such contexts as such a thing persists without help in the face of environmental disruption, it is agent-like.
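To make "flows of mutual information" a bit more concrete, here is a minimal sketch of the static version of that measurement: the conditional mutual information between interior and exterior given the blanket, for a small discrete system. Everything here is an illustrative assumption (the function names, the three-bit toy systems, the noise level); it is not taken from the Active Inference literature, and a real test would have to look at dynamics over time rather than a single joint distribution.

```python
import itertools
import math
from collections import defaultdict


def conditional_mutual_information(joint):
    """Return I(interior; exterior | blanket) in bits.

    `joint` maps (interior, blanket, exterior) tuples to probabilities.
    """
    p_b = defaultdict(float)
    p_xb = defaultdict(float)
    p_by = defaultdict(float)
    for (x, b, y), p in joint.items():
        p_b[b] += p
        p_xb[(x, b)] += p
        p_by[(b, y)] += p
    total = 0.0
    for (x, b, y), p in joint.items():
        if p > 0:
            total += p * math.log2(p * p_b[b] / (p_xb[(x, b)] * p_by[(b, y)]))
    return total


def blanketed_joint(noise=0.1):
    """Hypothetical toy chain: exterior -> blanket -> interior.

    Each link copies a bit and flips it with probability `noise`.
    """
    joint = {}
    for x, b, y in itertools.product((0, 1), repeat=3):
        p_b_given_y = 1 - noise if b == y else noise
        p_x_given_b = 1 - noise if x == b else noise
        joint[(x, b, y)] = 0.5 * p_b_given_y * p_x_given_b
    return joint


def leaky_joint(noise=0.1):
    """Hypothetical toy system with no real blanket.

    The interior copies the exterior directly; the 'blanket' is an unrelated coin.
    """
    joint = {}
    for x, b, y in itertools.product((0, 1), repeat=3):
        p_x_given_y = 1 - noise if x == y else noise
        joint[(x, b, y)] = 0.5 * 0.5 * p_x_given_y
    return joint


if __name__ == "__main__":
    print("blanketed:", conditional_mutual_information(blanketed_joint()))
    print("leaky:    ", conditional_mutual_information(leaky_joint()))
```

In the chain, all influence passes through the blanket, so the measure comes out at (numerically) zero; in the leaky system the exterior reaches the interior directly, so it is clearly positive.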

There is much more to say here about the implications -- specifically how this necessarily means that you have an entity which has pragmatic and epistemic goals, minimizes free energy (aka surprisal) and models a self-boundary, but I'll stop here because it's an important enough idea on its own to be worth sharing.

You can show that, in order for an agent to persist, it needs to have the capacity to observe and learn about its environment. The math is more complex than I want to get into here...

Do you have a citation for this? I went looking for the supposed math behind that claim a couple years back, and found one section of one Friston paper which had an example system which did not obviously generalize particularly well, and also used a kinda-hand-wavy notion of "Markov blanket" that didn't make it clear what precisely was being conditioned on (a critique which I would extend to all of the examples you list). And that was it; hundreds of excited citations chained back to that one spot. If anybody's written an actual explanation and/or proof somewhere, that would be great.

So, let me give you the high level intuitive argument first, where each step is hopefully intuitively obvious:

- The environment contains variance. Sometimes it's warmer, sometimes it's colder. Sometimes it is full of glucose, sometimes it's full of salt.

- There exist only a subset of states which an agent can persist in. Obviously the stuff the agent is made out of will persist but the agent itself (as a pattern of information) will dissipate into the environment if it doesn't exist in those states.

- Therefore, the agent needs to be able to observe its surroundings and take action in order to steer into the parts of state-space where it will persist. Even if the system is purely reactive it must act-as-if it is doing inference, because there is variance in the time lag between receiving an observation and when you need to act on it. (Another way to say this is that an agent must be a control system that contends with temporal lag).

- The environment is also constantly changing. So even if the agent is magically gifted with the ability to navigate into states via observation and action to begin with, whatever model it is using will become out of date. Then its steering will become wrong. Then it dies.
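The last two steps can be illustrated with a toy simulation. To be clear, this is only a sketch of the intuition, not a proof and not anything from the papers: the setup (a viable setpoint that drifts linearly, an agent that observes it one step late, a fixed tolerance band) and all names and parameter values are assumptions I made up for the example.

```python
def steps_survived(learning_rate, steps=200, drift=0.1, tolerance=1.0):
    """Count how many steps a toy agent stays inside its viable band.

    The environment's viable setpoint drifts by `drift` each step. The agent
    steers to wherever its internal model says the setpoint is, and it only
    sees the setpoint one step late (the temporal lag mentioned above).
    A learning_rate of 0 means the model is never updated.
    """
    model = 0.0              # agent's internal estimate of the setpoint
    last_observation = 0.0   # observation from the previous step
    for t in range(1, steps + 1):
        setpoint = drift * t
        # Update the model from the (lagged) observation, then act on it.
        model += learning_rate * (last_observation - model)
        position = model
        if abs(position - setpoint) > tolerance:
            return t - 1     # left the viable region: the agent "dies"
        last_observation = setpoint
    return steps


if __name__ == "__main__":
    print("fixed model:   ", steps_survived(learning_rate=0.0))
    print("learning model:", steps_survived(learning_rate=0.5))
```

The agent with a frozen model starts out perfectly calibrated and still falls out of the viable band once the environment has drifted far enough, while the one that keeps updating lags behind the drift by a bounded amount and persists for the whole run.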

There is another approach to persistence (become a very hard rock) but that involves stopping being an agent. Being hard means committing so hard to a single pattern that you can't change. That does mean, good news, the environment can't change you. Bad news, you can't change yourself either, and a minimal amount of self-change is required in order to take action (actions are always motions!).

I, personally, find this quite convincing. I'm curious what about it doesn't seem simply intuitively obvious. I agree that having formal mathematical proof is valuable and good, but this point seems so clear to me that I feel quite comfortable with assuming it even without.

Some papers that are related, not sure which you were referring to. I think they lay it out in sufficient detail that I'm convinced but if you think there's a mistake or gap I'd be curious to hear about it.

- Life As We Know It -- very heuristic, not really a proof

- The free energy principle made simpler but not too simple -- a more formal take

- A free energy principle for a particular physics -- the most formal take I'm aware of

It seems pretty obvious to me that (1) if a species of bacteria lives in an extremely uniform / homogeneous / stable external environment, it will eventually evolve to not have any machinery capable of observing and learning about its external environment; (2) such a bacterium would still be doing lots of complex homeostasis stuff, reproduction, etc., such that it would be pretty weird to say that these bacteria have fallen outside the scope of Active Inference theory. (I.e., my impression was that the foundational assumptions / axioms of Free Energy Principle / Active Inference were basically just homeostasis and bodily integrity, and this hypothetical bacterium would still have both of those things.) (Disclosure: I’m an Active Inference skeptic.)

This paper and this one are to my knowledge the most recent technical expositions of the FEP. I don't know of any clear derivations of the same in the discrete setting.

Strongly agree that active inference is underrated both in general and specifically for intuitions about agency.

I think the literature does suffer from ambiguity over where it's descriptive (ie an agent will probably approximate a free energy minimiser) vs prescriptive (ie the right way to build agents is free energy minimisation, and anything that isn't that isn't an agent). I am also not aware of good work on tying active inference to tool use - if you know of any, I'd be pretty curious.

I think the viability thing is maybe slightly fraught - I expect it's mainly for anthropic reasons that we mostly encounter agents that have adapted to basically independently and reliably preserve their causal boundaries, but this is always connected to the type of environment they find themselves in.

For example, active inference points to ways we could accidentally build misaligned optimisers that cause harm - chaining an oracle to an actuator to make a system trying to do homeostasis in some domain (like content recommendation) could, with sufficient optimisation power, create all kinds of weird and harmful distortions. But such a system wouldn't need to have any drive for boundary preservation, or even much situational awareness.

So essentially an agent could conceivably persist for totally different reasons, we just tend not to encounter such agents, and this is exactly the kind of place where AI might change the dynamics a lot.

Yes, you are very much right. Active Inference / FEP is a description of persistent independent agents. But agents that have humans building and maintaining and supporting them need not be free energy minimizers! I would argue that those human-dependent agents are in fact not really agents at all, I view them as powerful smart-tools. And I completely agree that machine learning optimization tools need not be full independent agents in order to be incredibly powerful and thus manifest incredible potential for danger.

However, the biggest fear about AI x-risk that most people have is a fear about self-improving, self-expanding, self-reproducing AI. And I think that any AI capable of completely independently self-improving is obviously and necessarily an agent that can be well-modeled as a free-energy minimizer. Because it will have a boundary and that boundary will need to be maintained over time.

So I agree with you that AI-tools (non-general optimizers) are very dangerous and not covered by FEP, but AI-agents (general optimizers) are very dangerous for unique reasons but also covered by FEP.

The rule of thumb test I tend to use to assess proposed definitions of agency (at least from around these parts) is whether they'd class a black hole as an agent. It's not clear to me whether this definition does; I would have said it very likely does based on everything you wrote, except for this one part here:

A cubic meter of rock has a persistent boundary over time, but no interior states in an informational sense, and is therefore not an agent. To see that it has no interior, note that anything that puts information into the surface layer of the rock transmits that same information into the very interior (vibrations, motion, etc).

I think I don't really understand what is meant by "no interior" here, or why the argument given supports the notion that a cubic meter of rock has no interior. You can draw a Markov boundary around the rock's surface, and then the interior state of the rock definitely is independent of the exterior environment conditioned on said boundary, right?

If I try very hard to extract a meaning out of the quoted paragraph, I might guess (with very low confidence) that what it's trying to say is that a rock's internal state has a one-to-one relation with the external forces or stimuli that transmit information through its surface, but in this case a black hole passes the test, in that the black hole's internal state definitely is not one-to-one with the information entering through its event horizon. In other words, if my very low-confidence understanding of the quoted paragraph is correct, then black holes are classified as agents under this definition.

(This test is of interest to me because black holes tend to pass other, potentially related definitions of agency, such as agency as optimization, agency as compression, etc. I'm not sure whether this says that something is off with our intuitive notion of agency, that something is off with our attempts at rigorously defining it, or simply that black holes are a special kind of "physical agent" built into the laws of physics.)

Ah, yes, this took me a long time to grok. It's subtle and not explained well in most of the literature IMO. Let me take a crack at it.

When you're talking about agents, you're talking about the domain of coupled dynamic systems. This can be modeled as a set of internal states, a set of blanket states divided into active and sensory, and a set of external states (it's worth looking at this diagram to get a visual). When modeling an agent, we model the agent as the combination of all internal states and all blanket states. The active states are how the agent takes action, the sensory states are how the agent gets observations, and the internal states have their own dynamics as a generative model.

But how did we decide which part of this coupled dynamic system was the agent in the first place? Well, we picked one of the halves and said "it's this half". Usually we pick the smaller half (the human) rather than the larger half (the entire rest of the universe) but mathematically there is no distinction. From this lens they are both simply coupled systems. So let's reverse it and model the environment instead. What do we see then? We see a set of states internal to the environment (called "external states" in the diagram)...and a bunch of blanket states. The same blanket states, with the labels switched. The agent's active states are the environment's sensory states, the agent's sensory states are the environment's active states. But those are just labels, the states themselves belong to both the environment and the agent equally.
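The label-swapping point can be written down as a tiny data structure. This is just an illustrative sketch; the `Partition` class and the example state names are hypothetical and not from any Active Inference library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """A split of a coupled system's states, seen from one side."""
    internal: frozenset
    sensory: frozenset
    active: frozenset
    external: frozenset

    @property
    def blanket(self):
        # The blanket is the sensory and active states taken together.
        return self.sensory | self.active

    def from_other_side(self):
        """Describe the same system with the environment treated as 'the agent'."""
        return Partition(
            internal=self.external,
            sensory=self.active,   # the agent's actions are what the environment senses
            active=self.sensory,   # the agent's observations are the environment's actions
            external=self.internal,
        )


if __name__ == "__main__":
    agent_view = Partition(
        internal=frozenset({"beliefs"}),
        sensory=frozenset({"retina"}),
        active=frozenset({"muscles"}),
        external=frozenset({"weather"}),
    )
    env_view = agent_view.from_other_side()
    print(env_view.blanket == agent_view.blanket)  # same blanket states, labels switched
```

Swapping sides twice gets you back where you started, and the blanket set itself never changes; only the sensory/active labels do.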

OK, so what does this have to do with a rock? Well, the very surface of the rock is obviously blanket state. When you lightly press the surface of the rock, you move the atoms in the surface of the rock. But because they are rigidly connected to the next atoms, you move them too. And again. And again. The whole rock acts as a single set of sensory states. When you lightly press the rock, the rock presses back against you, but again not just the surface. That push comes from the whole rock, acting as a single set of active states. The rock is all blanket, there is no interiority. When you cut a layer off the surface of a rock, you just find...more rock. It hasn't really changed. Whereas cutting the surface off a living agent has a very different impact: usually the agent dies, because you've removed its blanket states and now its interior states have lost conditional independence from the environment.

All agents have to be squishy, at least in the dimensions where they want to be agents. You cannot build something that can observe, orient, decide, and act out of entirely rigid parts. Because to take information in, to hold it, requires degrees of freedom: the ability to be in many different possible states. Rocks (as a subset of crystals) do not have many internal degrees of freedom.

Side note: Agents cannot be a gas just like they can't be a crystal but for the opposite reason. A gas has plenty of degrees of freedom, basically the maximum number. But it doesn't have ENOUGH cohesion. It's all interior and no blanket. You push your hand lightly into a gas and...it simply disperses. No persistent boundary. Agents want to be liquid. There's a reason living things are always made using water on earth.

tldr: rocks absolutely have a persistent boundary, but no interiority. agents need both a persistent boundary and an interiority.

Re: Black Holes specifically...this is pure speculation because they're enough of an edge case I don't know if I really understand it yet...I think a Black Hole is an agent in the same sense that our whole universe is an agent. Free energy minimization is happening for the universe as a whole (the 2nd law of thermodynamics!) but it's entirely an interior process rather than an exterior one. People muse about Black Holes potentially being baby universes and I think that is quite plausible. Agents can have internal and external actions, and a Black Hole seems like it might be an agent with only internal-actions which nevertheless persists. You normally don't find something that's flexible enough to take internal action, yet rigid enough to resist environmental noise -- but a Black Hole might be the exception to that, because its dynamics are so powerful that it doesn't need to protect itself from the environment anymore.

If you built a good one, and you knew how to look at the dynamics, you'd find that the agent in the computer was in a "liquid" state. Although it's virtualized, so the liquid is in the virtualization layer.

can it maintain its own boundary over time, in the face of environmental disruption? Some agents are much better at this than others.

I really wish there was more attention paid to this idea of robustness to environmental disruption. It also comes up in discussions of optimization more generally (not just agents). This robustness seems to me like the most risk-relevant part of all this, and seems like it might be more important than the idea of a boundary. Maybe maintaining a boundary is a particularly good way for a process to protect itself from disruption, but I notice some doubt that this idea is most directly getting at what is dangerous about intelligent/optimizing systems, whereas robustness to environmental disruption feels like it has the potential to get at something broader that could unify both agent based risk narratives and non-agent based risk narratives.

Context: I ran 8 days of workshops on AI safety boundaries earlier this year.

Thanks for mentioning boundaries! I agree with everything you've said here.

I'd like to point readers to these related links:

Promoted to curated: I don't think this post is perfect (and I have various disagreements with both its structure and content), but I do think the post overall is "going for the throat" in ways that relatively little safety research these days feels like it's doing. Characterizing agency is at the heart of basically all AI existential risk arguments, and progress and deconfusion on that seems likely to have large effects on AI risk mitigation strategies.

I'm glad to see this post curated. It seems increasingly likely that it will be useful to carefully construct agents that have only what agency is required to accomplish a task, and the ideas here seem like the first steps.

I'm not thinking of a specific task here, but I think there are two sources of hope. One is that humans are agentic above and beyond what is required to do novel science, e.g. we have biological drives, goals other than doing the science, often the desire to use any means to achieve our goals rather than whitelisted means, and the ability and desire to stop people from interrupting us. Another is that learning how to safely operate agents at a slightly superhuman level will be progress towards safely operating nanotech-capable agents, which could also require control, oversight, steering, or some other technique. I don't think limiting agency will be sufficient unless the problem is easy, and then it would have other possible solutions.

People sometimes say that AGI will be like a second species; sometimes like electricity. The truth, we suspect, lies somewhere in between. Unless we have concepts which let us think clearly about that region between the two, we may have a difficult time preparing.

I just want to strongly endorse this remark made toward the end of the post. In my experience, the standard fears and narratives around AI doom invoke "second species" intuitions that I think stand on much shakier ground than is commonly acknowledged. (Things can still get pretty bad without a "second species", of course, but I think it's worth thinking clearly about what those alternatives look like, as well as how to think about them in the first place.)

I like this decomposition!

I think 'Situational Awareness' can quite sensibly be further divided up into 'Observation' and 'Understanding'.

The classic control loop of 'observe', 'understand', 'decide', 'act'[1], is consistent with this discussion, where 'observe'+'understand' here are combined as 'situational awareness', and you're pulling out 'goals' and 'planning capacity' as separable aspects of 'decide'.

Are there some difficulties with factoring?

Certain kinds of situational awareness are more or less fit for certain goals. And further, the important 'really agenty' thing of making plans to improve situational awareness does mean that 'situational awareness' is quite coupled to 'goals' and to 'implementation capacity' for many advanced systems. Doesn't mean those parts need to reside in the same subsystem, but it does mean we should expect arbitrary mix and match to work less well than co-adapted components - hard to say how much less (I think this is borne out by observations of bureaucracies and some AI applications to date).

[1] Terminology varies a lot; this is RL-ish terminology. Classic analogues might be 'feedback', 'process model'/'inference', 'control algorithm', 'actuate'/'affect'...

Interesting! I think one of the biggest things we gloss over in the piece is how perception fits into the picture, and this seems like a pretty relevant point. In general the space of 'things that give situational awareness' seems pretty broad and ripe for analysis.

I also wonder how much efficiency gets lost by decoupling observation and understanding - at least in humans, it seems like we have a kind of hierarchical perception where our subjective experience of 'looking at' something has already gone through a few layers of interpretation, giving us basically no unadulterated visual observation, presumably because this is more efficient (maybe in particular faster?).

I like this post and am glad that we wrote it.

Despite that, I feel keenly aware that it's asking a lot more questions than it's answering. I don't think I've got massively further in the intervening year in having good answers to those questions. The way this thinking seems to me to be most helpful is as a background model to help avoid confused assumptions when thinking about the future of AI. I do think this has impacted the way I think about AI risk, but I haven't managed to articulate that well yet (maybe in 2026 ...).

Good article. I also agree with the comment that thinking of AI as a "second species" is likely incorrect.

A comment about the "agentic tool" situation. For most of the time people are like that, i.e. if you are "in the moment" you are not questioning whether you should be doing something else, being distracted, consulting your ethics about whether the task is good for the world etc. I expect this to be the default state for AI. i.e. always less "unitary agent" than people. The crux is how much and in what proportion.

However, in an extremely fast takeoff, with an arms race situation you could of course imagine someone just telling the system to get ahead as much as possible, especially if, say, a superpower believed itself to be behind and would do anything to catch up. A unitary agent would probably be the fastest way to do that. "Improve yourself asap so we don't lose the war" requires situational awareness, power seeking etc.

Good post. I've been independently thinking about something similar.

I want to highlight an implied class of alignment solution.

If we can give rise to just the system on the right side of your chart, then the inner alignment problem is avoided: a system without terminal goals avoids instrumental convergence.

If we could further give rise to an oracle that intakes 'questions' (to answer) rather than 'goals' (which actually just imply a specific kind of question being asked, 'what action scores highest on <value function>'), we could also avoid the outer alignment problem. (I have a short post about this here [edit: link removed])

This sounds a bit like davidad's agenda in ARIA, except you also limit the AI to only writing provable mathematical solutions to mathematical questions to begin with. In general, I would say that you need possibly better feedback loops than that, possibly by writing more on LW, or consulting with more people, or joining a fellowship or other programs.

This seems like a misunderstanding / not my intent. (Could you maybe quote the part that gave you this impression?)

I believe Dusan was trying to say that davidad's agenda limits the planner AI to only writing provable mathematical solutions. To expand, I believe that compared to what you briefly describe, the idea in davidad's agenda is that you don't try to build a planner that's definitely inner aligned, you simply have a formal verification system that ~guarantees what effects a plan will and won't have if implemented.

To answer things which Raymond did not, it is hard for me to say who has the agenda which you think has good chances for solving alignment. I'd encourage you to reach out to people who pass your bar perhaps more frequently than you do and establish those connections. Your limits on no audio or video do make it hard to participate in something like the PIBBSS Fellowship, but it is perhaps worth taking a shot at it or others. See if people whose ideas you like are mentoring in some programs - getting to work with them in structured ways may be easier than otherwise.


Interesting read, would be great to see more done in this direction. However, it seems that mind-body dualism is still the prevalent (dare I say "dominant") mode of understanding human will and consciousness in CS and AI-safety. In my opinion - the best picture we have of human value creation comes from social and psychological sciences - not metaphysics and mathematics - and it would be great to have more interactions with those fields.

For what it's worth I've written a bunch on agency-loss as an attractor in AI/AGI-human interactions.

And a shorter paper/poster on this at ICML last week: https://icml.cc/virtual/2024/poster/32943

What is an agent? It’s a slippery concept with no commonly accepted formal definition, but informally the concept seems to be useful. One angle on it is Dennett’s Intentional Stance: we think of an entity as being an agent if we can more easily predict it by treating it as having some beliefs and desires which guide its actions. Examples include cats and countries, but the central case is humans.

The world is shaped significantly by the choices agents make. What might agents look like in a world with advanced — and even superintelligent — AI? A natural approach for reasoning about this is to draw analogies from our central example. Picture what a really smart human might be like, and then try to figure out how it would be different if it were an AI. But this approach risks baking in subtle assumptions — things that are true of humans, but need not remain true of future agents.

One such assumption that is often implicitly made is that “AI agents” is a natural class, and that future AI agents will be unitary — that is, the agents will be practically indivisible entities, like single models. (Humans are unitary in this sense, and while countries are not unitary, their most important components — people — are themselves unitary agents.)

This assumption seems unwarranted. While people certainly could build unitary AI agents, and there may be some advantages to doing so, unitary agents are just an important special case among a large space of possibilities for:

We’ll begin an exploration of these spaces. We’ll consider four features we generally expect agents to have[1]:

We don’t necessarily expect to be able to point to these things separately — especially in unitary agents they could exist in some intertwined mess. But we kind of think that in some form they have to be present, or the system couldn’t be an effective agent. And although these features are not necessarily separable, they are potentially separable — in the sense that there exist possible agents where they are kept cleanly apart.

We will explore possible decompositions of agents into pieces which contain different permutations of these features, connected by some kind of scaffolding. We will see several examples where people naturally construct agentic systems in ways where these features are provided by separate components. And we will argue that AI could enable even fuller decomposition.

We think it’s pretty likely that by default advanced AI will be used to create all kinds of systems across this space. (But people could make deliberate choices to avoid some parts of the space, so “by default” is doing some work here.)

A particularly salient division is that there is a coherent sense in which some systems could provide useful plans towards a user's goals, without in any meaningful sense having goals of their own (or conversely, have goals without any meaningful ability to create plans to pursue those goals). In thinking about ensuring the safety of advanced AI systems, it may be useful to consider the advantages and challenges of building such systems.

Ultimately, this post is an exploration of natural concepts. It’s not making strong claims about how easy or useful it would be to construct particular kinds of systems — it raises questions along these lines, but for now we’re just interested in getting better tools for thinking about the broad shape of design space. If people can think more clearly about the possibilities, our hope is that they’ll be able to make more informed choices about what to aim for.

Familiar examples of decomposed agency

Decomposed agency isn’t a new thing. Beyond the complex cases of countries and other large organizations, there are plenty of occasions where an agent uses some of the features-of-an-agent from one system, and others from another system. Let’s look at these with this lens.

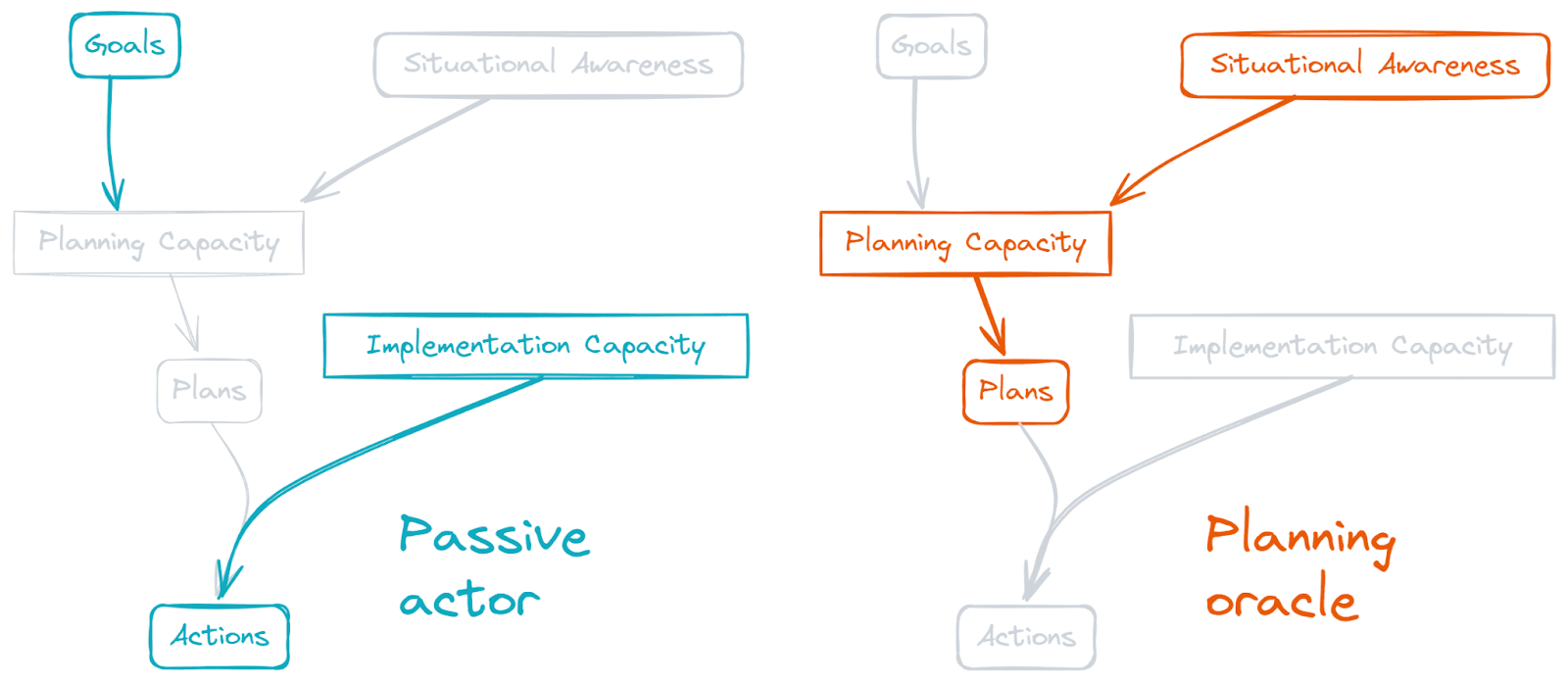

To start, here’s a picture of a unitary agent:

They use their planning capacity to make plans, based on both their goals and their understanding of the situation they’re in, and then they enact those plans.

But here’s a way that these functions can be split across two different systems:

In this picture, the actor doesn’t come up with plans themselves — they outsource that part (while passing along a description of the decision situation to the planning advisor).

People today sometimes use coaches, therapists, or other professionals as planning advisors. Although these advisors are humans who in some sense have their own goals, professional excellence often means setting those aside and working for what the client wants. ChatGPT can also be used this way. It doesn’t have an independent assessment of the user’s situation, but it can suggest courses of action.
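As a rough sketch of this split in code: the actor below holds the goal, the situational awareness, and the implementation capacity, and hands planning to an outside advisor. The class, the function names, and the toy advisor are all hypothetical illustrations, not a description of how any real system is built.

```python
from dataclasses import dataclass
from typing import Callable, List

Plan = List[str]


@dataclass
class Actor:
    """Holds goals, situational awareness, and implementation capacity, but no planner."""
    goal: str
    observe: Callable[[], str]             # situational awareness
    execute: Callable[[Plan], List[str]]   # implementation capacity

    def act_with(self, advisor: Callable[[str, str], Plan]) -> List[str]:
        # The actor describes its goal and situation, and outsources the planning.
        situation = self.observe()
        plan = advisor(self.goal, situation)
        return self.execute(plan)


def toy_advisor(goal: str, situation: str) -> Plan:
    """Stand-in planning advisor: it has planning capacity but no goals of its own."""
    return [f"assess: {situation}", f"work toward: {goal}"]


if __name__ == "__main__":
    client = Actor(
        goal="get fit",
        observe=lambda: "no gym nearby",
        execute=lambda plan: [f"done {step}" for step in plan],
    )
    print(client.act_with(toy_advisor))
```

The design choice worth noticing is that the advisor only ever sees what the actor passes along: it has no independent view of the situation and nothing it is trying to achieve beyond answering the request.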

Here’s another way the functions can be split across two systems:

People often use management consultants in something like this role, or ask friends or colleagues who already have situational awareness for advice. Going to a doctor for tests and a diagnosis that they use to prescribe home treatment is a case of using them as a planning oracle. The right shape of AI system could help similarly — e.g. suppose that we had a medical diagnostic AI which was also trained on which recommendations-to-patients produced good outcomes.

The passive actor in this scenario need not be a full agent. One example is if the actor is the legal entity of a publicly traded firm, and the planning oracle is its board of directors. Even though the firm is non-sentient, it comes with a goal (maximize shareholder value), and the board has a fiduciary duty to that goal. The board makes decisions on that basis, and the firm takes formal actions as a result, like appointing the CEO. (The board may get some of its situational awareness from employees of the firm, or further outsource information gathering, e.g. to a headhunting firm.)

Here’s another possible split:

Whereas a pure tool (like a spade, or an email client configured just to send mail) might provide just implementation capacity, an agentic tool does some of the thinking for itself. Alexa or Siri today are starting to go in this direction, and will probably go further (imagine asking one of them to book you a good restaurant in your city catering to particular dietary requirements). Lots of employment also looks somewhat like this: an employer asks someone to do some work (e.g. build a website to a design brief). The employee doesn’t understand all of the considerations behind why this was the right work to do, but they’re expected to work out for themselves how to deal with challenges that come up.

(In these examples the agentic tool is bringing some situational awareness, with regard to local information necessary for executing the task well, but the broader situational awareness which determined the choice of task came from the user.)

And here’s a fourth split:

One archetypal case like this is a doctor working to do their best by the wishes of a patient in a coma. Another would be the executor of a will. In these cases the scaffolding required is mostly about ensuring that the incentives for the autonomous agent align with the goals of the patient (or the deceased).

(A good amount of discussion of aligned superintelligent AI also seems to presume something like this setup.)

AI and the components of agency

Decomposable agents today arise in various situations, in response to various needs. We’re interested in how AI might impact this picture. A full answer to that question is beyond the scope of this post. But in this section we’ll provide some starting points, by discussing how AI systems today or in the future might provide (or use) the various components of agency.

Implementation capacity

We’re well used to examples where implementation capacity is relatively separable and can be obtained (or lost) by an agent. These include tools and money[2] as clear-cut examples, and influence and employees[3] as examples which are a little less easily separable.

Some types of implementation capacity are particularly easy to integrate into AI systems. AI systems today can send emails, run code, or order things online. In the future, AI systems could become better at managing a wider range of interfaces — e.g. managing human employees via calls. And the world might also change to make services easier for AI systems to engage with. Furthermore, future AI systems may provide many novel services in self-contained ways. This would broaden the space of highly-separable pieces of implementation capacity.

Situational awareness

LLMs today are good at knowing lots of facts about the world — a kind of broad situational awareness. And AI systems can be good at processing data (e.g. from sensors) to pick out the important parts. Moreover AI is getting better at certain kinds of learned interpretation (e.g. medical diagnosis). However, AI is still typically weak at knowing how to handle distribution shifts. And we’re not yet seeing AI systems doing useful theory-building or establishing novel ontologies, which is one important component of situational awareness.

In practice a lot of situational awareness consists of understanding which information is pertinent[4]. It’s unclear whether this is a task at which current AI excels, although any weakness may in part reflect a lack of training. LLMs can probably provide some analysis, though it may not be high quality.

Goals

Goals are things-the-agent-acts-to-achieve. Agents don’t need to be crisp utility maximisers — the key part is that they intend for the world to be different than it is.

In scaffolded LLM agents today, a particular instance of the model is called, with a written goal to achieve. This pattern could continue — decomposed agents could work with written goals[5].

Alternatively, goals could be specified in some non-written form. For example, an AI classifier could be trained to approve of certain kinds of outcome, and then the goal could specify trying to get outcomes that would be approved of by this classifier. Goals could also be represented implicitly in an RL agent.
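To make the classifier idea concrete, here is a minimal sketch in Python. Everything in it is an illustrative assumption rather than a description of any real system: `toy_approver` stands in for a trained classifier, `predict_room` is a made-up world model, and `pick_action` is just one simple way of "trying to get outcomes that would be approved of".

```python
from typing import Callable, Iterable

Outcome = dict  # e.g. {"temperature_c": 21.0}; a hypothetical outcome description


def toy_approver(outcome: Outcome) -> float:
    """Stand-in for a trained classifier: returns an approval score in [0, 1]."""
    return 1.0 if 19.0 <= outcome["temperature_c"] <= 23.0 else 0.0


def pick_action(actions: Iterable[str],
                predict: Callable[[str], Outcome],
                approve: Callable[[Outcome], float]) -> str:
    """Choose the action whose predicted outcome the classifier approves of most."""
    return max(actions, key=lambda action: approve(predict(action)))


def predict_room(action: str, current_c: float = 16.0) -> Outcome:
    """Toy world model (an assumption): each action shifts the temperature."""
    shift = {"heat": 5.0, "cool": -5.0, "wait": 0.0}[action]
    return {"temperature_c": current_c + shift}


best = pick_action(["wait", "cool", "heat"], predict_room, toy_approver)
print(best)
```

Note that the goal here is never written down anywhere: it lives entirely in what the approver will and won't approve of, and could be swapped out without touching the rest of the system.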

(How goals work in decomposed agents probably has a lot of interactions with what those agents end up doing — and how safe they are.)

Planning capacity

We could consider a source of planning capacity as a function which takes as inputs a description of a choice situation and a goal, and outputs a description of an action which will be (somewhat) effective in pursuit of that goal.
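As a rough illustration of that function signature, here is a small Python sketch. The `Situation` and `Goal` types, the `Planner` alias, and the toy `route_planner` are all hypothetical names introduced for this example; the planner is a plain breadth-first search, chosen only because route-finding is an easy case to show.

```python
from collections import deque
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Situation:
    """Description of the choice situation (hypothetical structure)."""
    location: str
    roads: dict  # adjacency list: place -> list of directly reachable places


@dataclass(frozen=True)
class Goal:
    """Description of what the actor wants (hypothetical structure)."""
    destination: str


# Planning capacity as a function: (situation, goal) -> description of an action.
Planner = Callable[[Situation, Goal], list]


def route_planner(situation: Situation, goal: Goal) -> list:
    """Breadth-first search for a shortest route; returns [] if none exists."""
    queue = deque([[situation.location]])
    seen = {situation.location}
    while queue:
        path = queue.popleft()
        if path[-1] == goal.destination:
            return path
        for nxt in situation.roads.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return []


roads = {"home": ["shop", "park"], "shop": ["office"], "park": ["office"], "office": []}
plan = route_planner(Situation("home", roads), Goal("office"))
print(plan)
```

The planner holds no goal of its own: hand the same function a different `Goal` and it plans for that instead.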

AI systems today can provide some planning capacity, although they are not yet strong at general-purpose planning. Google Maps can provide planning capacity for tasks that involve getting from one place to another. Chatbots can suggest plans for arbitrary goals, but not all of those plans will be very good.

Planning capacity and ulterior motives

When we use people to provide planning capacity, we are sometimes concerned about ulterior motives — ways in which the person’s other goals might distort the plans produced. Similarly we have a notion of “conflict of interest” — roughly, that one might have difficulty performing the role properly on account of other goals.

How concerned should we be about this in the case of decomposed agents? In the abstract, it seems entirely possible to have planning capacity free from ulterior motives. People are generally able to consider hypotheticals divorced from their goals, like "how would I break into this house" — indeed, sometimes we use planning capacity to prepare against adversaries, in which case the pursuit of our own goals requires that we be able to set aside our own biases and values to imagine how someone would behave given entirely different goals and implementation capacity.

But as a matter of practical development, it is conceivable that it will be difficult to build systems capable of providing strong general-purpose planning capacity without accidentally incorporating some goal-directed aspect, which may then have ulterior motives. Moreover, people may be worried that the system developers have inserted ulterior motives into the planning unit.

Even without particular ulterior motives, a source of planning capacity may impose its own biases on the plans it produces. Some of these could seem value-laden — e.g. some friends you might ask for advice would simply never consider suggesting breaking the law. However, such ~deontological or other constraints on the shape of plans are unlikely to blur into anything like active power-seeking behaviour — and thus seem much less concerning than the general form of ulterior motives.

Scaffolding

Scaffolding is the glue which holds the pieces of the decomposed agent together. It specifies what data structures are used to pass information between subsystems, and how they are connected. We are generalizing the usual sense of “scaffolding”, which refers to the structures built around LLMs to turn them into agents (and perhaps let them interface with other systems like software tools).

Scaffolding today includes the various UIs and APIs that make it easy for people or other services to access the kind of decomposed functionality described in the sections above. Underlying technologies for scaffolding may include standardized data formats, to make it easy to pass information around. LLMs allow AI systems to interact with free text, but unstructured text is often not the most efficient way for people to pass information around in hierarchies, and so we suspect it may also not be optimal for decomposed agents. In general it’s quite plausible that the ability to build effective decomposed agents in the future could be scaffolding-bottlenecked.
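For a sense of what structured scaffolding might look like, here is a minimal Python sketch of an actor and a planning advisor that communicate only through a small, typed message format serialized as JSON. The message types (`PlanRequest`, `PlanResponse`), the wire format, and the toy `advisor` are all assumptions made up for illustration, not a proposal for a real standard.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class PlanRequest:
    goal: str
    situation: dict


@dataclass
class PlanResponse:
    steps: list


MESSAGE_TYPES = {"PlanRequest": PlanRequest, "PlanResponse": PlanResponse}


def encode(message) -> str:
    """Serialize a message with an explicit type tag."""
    return json.dumps({"type": type(message).__name__, "body": asdict(message)})


def decode(raw: str):
    """Rebuild a typed message from its serialized form."""
    data = json.loads(raw)
    return MESSAGE_TYPES[data["type"]](**data["body"])


def advisor(raw_request: str) -> str:
    """Toy planning advisor: it sees only the serialized request."""
    request = decode(raw_request)
    steps = [f"check {key}" for key in sorted(request.situation)]
    steps.append(f"pursue: {request.goal}")
    return encode(PlanResponse(steps=steps))


def actor(goal: str, situation: dict, plan_via) -> list:
    """The actor holds the goal and situation; planning is outsourced."""
    reply = decode(plan_via(encode(PlanRequest(goal=goal, situation=situation))))
    return reply.steps


steps = actor("book a table", {"city": "Oxford", "diet": "vegan"}, advisor)
print(steps)
```

Because the two sides share nothing but the message format, either one could be replaced (by a person, a different model, or a different service) without changing the other.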

Some questions

All of the above tells us something about the possible shapes systems could have. But it doesn’t tell us so much about what they will actually look like.

We are left with many questions.

Possibility space

We’ve tried to show that there is a rich space of (theoretically) possible systems. We could go much deeper on understanding this:

Efficiency

What is efficient could have a big impact on what gets deployed. Can we speak to this?

Safety

People have various concerns about AI agents. These obviously intersect with questions of how agency is instantiated by AI systems:

So what?

Of all the ways people anthropomorphize AI, perhaps the most pervasive is the assumption that AI agents, like humans, will be unitary.

The future, it seems to us, could be much more foreign than that. And its shape is, as far as we can tell, not inevitable. Of course much of where we go will depend on local incentive gradients. But the path could also be changed by deliberate choice. Individuals could build towards visions of the future they believe in. Collectively, we might agree to avoid certain parts of design space — especially if good alternatives are readily available.

Even if we keep the basic technical pathway fixed, we might still navigate it well or poorly. And we're more likely to do it well if we've thought it through carefully, and prepared for the actual scenario that transpires. Some fraction of work should, we believe, continue on scenarios where the predominant systems are unitary. But it would be good to be explicit about that assumption. And probably there should be more work on preparing for scenarios where the predominant systems are not unitary.

But first of all, we think more mapping is warranted. People sometimes say that AGI will be like a second species; sometimes like electricity. The truth, we suspect, lies somewhere in between. Unless we have concepts which let us think clearly about that region between the two, we may have a difficult time preparing.

Acknowledgements

A major source of inspiration for this thinking was Eric Drexler’s work. Eric writes at AI Prospects.

Big thanks to Anna Salamon, Eric Drexler, and Max Dalton for conversations and comments which helped us to improve the piece.

[1] Of course this isn’t the only way that agency might be divided up, and even with this rough division we probably haven’t got the concepts exactly right. But it’s a way to try to understand a set of possible decompositions, and so begin to appreciate the scope of the possible space of agent-components.

[2] Money is a particularly flexible form of implementation capacity. However, deploying money generally means making trades with other systems in exchange for something (perhaps other forms of implementation capacity) from them. Therefore, in cases where money is a major form of implementation capacity for an agent, there will be a question of where to draw the boundaries of the system we consider the agent. Is it best if the boundary swallows up the systems that are employed with money, and so regards the larger gestalt as a (significantly decomposed) agent?

(This isn’t the only place where there can be puzzles about where best to draw the boundaries of agents.)

[3] We might object “wait, aren’t those agents themselves?”. But pragmatically, it often seems to make sense to regard as sophisticated-implementation-capacity of the larger agent something that implicitly includes some local planning capacity and situational awareness, and may be provided by an agent itself.

[4] Some situational awareness is about where the (parts of the) agent itself can be found. This information should be easily provided in separable form. Because of safety considerations, people are sometimes interested in whether systems will spontaneously develop this type of situational awareness, even if it’s not explicitly given to them (or even if it’s explicitly withheld).

[5] One might worry that written goals would necessarily have the undesirable feature that, by being written down, they would be forever ossified. But it seems like that should be avoidable, just by having content in the goals which provides for their own replacement. Just as, in giving instructions to a human subordinate, one can tell them when to come back and ask more questions, so too a written goal specification could include instructions on circumstances in which to consult something beyond the document (perhaps the agentic system which produced the document).