decision theory is no substitute for utility function

some people, upon learning about decision theories such as LDT and how they cooperate on problems such as the prisoner's dilemma, end up believing the following:

my utility function is about what i want for just me; but i'm altruistic (/egalitarian/cosmopolitan/pro-fairness/etc) because decision theory says i should cooperate with other agents. decision theoretic cooperation is the true name of altruism.

it's possible that this is true for some people, but in general i expect that to be a mistaken analysis of their values.

decision theory cooperates with agents relative to how much power they have, and only when it's instrumental.

in my opinion, real altruism (/egalitarianism/cosmopolitanism/fairness/etc) should be in the utility function which the decision theory is instrumental to. i actually intrinsically care about others; i don't just care about others instrumentally because it helps me somehow.

some important ways in which my utility-function-altruism differs from decision-theoretic-cooperation include:

- i care about people weighed by moral patienthood, decision theory only cares about agents weighed by negotiation power. if

An interesting question for me is how much true altruism is required to give rise to a generally altruistic society under high quality coordination frameworks. I suspect it's quite small.

Another question is whether building coordination frameworks to any degree requires some background of altruism. I suspect that this is the case. It's the hypothesis I've accreted for explaining the success of post-war economies (guessing that war leads to a boom in nationalistic altruism, generally increased fairness and mutual faith).

I'm surprised at people who seem to be updating only now about OpenAI being very irresponsible, rather than updating when they created a giant public competitive market for chatbots (which contains plenty of labs that don't care about alignment at all), thereby reducing how long everyone has to solve alignment. I still parse that move as devastating the commons in order to make a quick buck.

Half a year ago, I'd have guessed that OpenAI leadership, while likely misguided, was essentially well-meaning and driven by a genuine desire to confront a difficult situation. The recent series of events has made me update significantly against the general trustworthiness and general epistemic reliability of Altman and his circle. While my overall view of OpenAI's strategy hasn't really changed, my estimate of the likelihood that they "know better" has gone down dramatically.

I still parse that move as devastating the commons in order to make a quick buck.

I believe that ChatGPT was not released with the expectation that it would become as popular as it did. OpenAI pivoted hard when it saw the results.

Also, I think you are misinterpreting the sort of 'updates' people are making here.

You continue to model OpenAI as this black box monolith instead of trying to unravel the dynamics inside it and understand the incentive structures that lead these things to occur. It's a common pattern I notice in the way you interface with certain parts of reality.

I don't consider OpenAI as responsible for this as much as Paul Christiano and Jan Leike and his team. Back in 2016 or 2017, when they initiated and led research into RLHF, they focused on LLMs because they expected that LLMs would be significantly more amenable to RLHF. This means that instruction-tuning was the cause of the focus on LLMs, which meant that it was almost inevitable that they'd try instruction-tuning on it, and incrementally build up models that deliver mundane utility. It was extremely predictable that Sam Altman and OpenAI would leverage this unexpected success to gain more investment and translate that into more researchers and compute. But Sam Altman and Greg Brockman aren't researchers, and they didn't figure out a path that minimized 'capabilities overhang' -- Paul Christiano did. And more important -- this is not mutually exclusive with OpenAI using the additional resources for both capabilities re...

Reposting myself from discord, on the topic of donating 5000$ to EA causes.

if you're doing alignment research, even just a bit, then the 5000$ are probly better spent on yourself

if you have any gears level model of AI stuff then it's better value to pick which alignment org to give to yourself; charity orgs are vastly understaffed and you're essentially contributing to the "picking what to donate to" effort by thinking about it yourself

if you have no gears level model of AI then it's hard to judge which alignment orgs it's helpful to donate to (or, if giving to regranters, which regranters are good at knowing which alignment orgs to donate to)

as an example of regranters doing massive harm: openphil gave 30M$ to openai at a time when it was critically useful to them (supposedly in order to have a chair on their board, and look how that turned out when the board tried to yeet altman)

i know of at least one person who was working in regranting and was like "you know what i'd be better off doing alignment research directly" — imo this kind of decision is probly why regranting is so understaffed

it takes technical knowledge to know what should get money, and once you have technical knowledge you realize how much your technical knowledge could help more directly so you do that, or something

I agree that there's no substitute for thinking about this for yourself, but I think that morally or socially counting "spending thousands of dollars on yourself, an AI researcher" as a donation would be an appalling norm. There are already far too many unmanaged conflicts of interest and trust-me-it's-good funding arrangements in this space for me, and I think it leads to poor epistemic norms as well as social and organizational dysfunction. I think it's very easy for donating to people or organizations in your social circle to have substantial negative expected value.

I'm glad that funding for AI safety projects exists, but the >10% of my income I donate will continue going to GiveWell.

I think people who give up large amounts of salary to work in jobs that other people are willing to pay for from an impact perspective should totally consider themselves to have done good comparable to donating the difference between their market salary and their actual salary. This applies to approximately all safety researchers.

They still make a lot less than they would if they optimized for profit (that said, I think most "safety researchers" at big labs are only safety researchers in name and I don't think anyone would philanthropically pay for their labor, and even if they did, they would still make the world worse according to my model, though others of course disagree with this).

If my sole terminal value is "I want to go on a rollercoaster", then an agent who is aligned to me would have the value "I want Tamsin Leake to go on a rollercoaster", not "I want to go on a rollercoaster myself". The former necessarily-has the same ordering over worlds, the latter doesn't.
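A minimal sketch of that distinction, assuming a toy model where a world is just the set of people who get to ride. The names `worlds`, `same_ordering`, and the three utility functions are made up for illustration; they only show how the de-indexed value preserves my ordering over worlds while the copied indexical value doesn't.

```python
# Each world is the set of people who get to ride the rollercoaster.
worlds = [
    frozenset(),
    frozenset({"Tamsin"}),
    frozenset({"agent"}),
    frozenset({"Tamsin", "agent"}),
]

def tamsin_utility(world):
    # "I want to go on a rollercoaster", evaluated from Tamsin's point of view.
    return 1 if "Tamsin" in world else 0

def aligned_agent_utility(world):
    # "I want Tamsin Leake to go on a rollercoaster."
    return 1 if "Tamsin" in world else 0

def copied_indexical_utility(world):
    # "I want to go on a rollercoaster myself", evaluated from the agent's point of view.
    return 1 if "agent" in world else 0

def same_ordering(u, v):
    # True when u and v rank every pair of worlds the same way (ties included).
    return all((u(a) > u(b)) == (v(a) > v(b)) for a in worlds for b in worlds)

print(same_ordering(tamsin_utility, aligned_agent_utility))
print(same_ordering(tamsin_utility, copied_indexical_utility))
```

The second comparison fails on the pair of worlds where only one of us rides: I prefer the world where I'm the rider, and the agent with the copied indexical value prefers the one where it is.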

Are quantum phenomena anthropic evidence for BQP=BPP? Is existing evidence against many-worlds?

Suppose I live inside a simulation run by a computer over which I have some control.

-

Scenario 1: I make the computer run the following:

    pause simulation
    if is even(calculate billionth digit of pi):
        resume simulation

Suppose, after running this program, that I observe that I still exist. This is some anthropic evidence for the billionth digit of pi being even.

Thus, one can get anthropic evidence about logical facts.
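A rough sketch of that update as plain Bayes, assuming each branch can be given an "observer weight" (how much existing-and-observing it contributes). The function name `posterior_even` and the example weights are hypothetical, and giving the never-resumed branch a weight of exactly 0 is an idealization.

```python
def posterior_even(prior_even: float, weight_even: float, weight_odd: float) -> float:
    """Bayes update on the observation 'I still exist'.

    weight_even / weight_odd are assumed observer weights: how much
    'existing' the simulation contributes if the digit is even / odd.
    """
    joint_even = prior_even * weight_even
    joint_odd = (1.0 - prior_even) * weight_odd
    total = joint_even + joint_odd
    if total == 0:
        raise ValueError("the observation has zero weight under both branches")
    return joint_even / total


# Scenario 1, idealized: the simulation only resumes if the digit is even.
print(posterior_even(0.5, weight_even=1.0, weight_odd=0.0))
# A leakier variant: some small weight on existing even if the digit is odd.
print(posterior_even(0.5, weight_even=1.0, weight_odd=0.1))
# Equal weights in both branches: observing existence tells you nothing.
print(posterior_even(0.5, weight_even=1.0, weight_odd=1.0))
```

How strong the evidence is depends entirely on the ratio of the two weights, which is where the choice of prior over compute comes in.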

-

Scenario 2: I make the computer run the following:

    pause simulation
    if is even(calculate billionth digit of pi):
        resume simulation
    else:
        resume simulation but run it a trillion times slower

If you're running on the non-time-penalized solomonoff prior, then that's no evidence at all — observing existing is evidence that you're being run, not that you're being run fast. But if you do that, a bunch of things break including anthropic probabilities and expected utility calculations. What you want is a time-penalized (probably quadratically) prior, in which later compute-steps have less realityfluid than earlier ones — and thus, obs

I remember a character in Asimov's books saying something to the effect of

It took me 10 years to realize I had those powers of telepathy, and 10 more years to realize that other people don't have them.

and that quote has really stuck with me, and keeps striking me as true about many mindthings (object-level beliefs, ontologies, ways-to-use-one's-brain, etc).

For so many complicated problems (including technical problems), "what is the correct answer?" is not-as-difficult to figure out as "okay, now that I have the correct answer: how the hell do other people's wrong answers mismatch mine? what is the inferential gap even made of? what is even their model of the problem? what the heck is going on inside other people's minds???"

Answers to technical questions, once you have them, tend to be simple and compress easily with the rest of your ontology. But not models of other people's minds. People's minds are actually extremely large things that you fundamentally can't fully model and so you're often doomed to confusion about them. You're forced to fill in the details with projection, and that's often wrong because there's so much more diversity in human minds than we imagine.

The most complex software engineering projects in the world are absurdly tiny in complexity compared to a random human mind.

I've heard some describe my recent posts as "overconfident".

I think I used to calibrate how confident I sound based on how much I expect the people reading/listening-to me to agree with what I'm saying, kinda out of "politeness" for their beliefs; and I think I also used to calibrate my confidence based on how much they match with the apparent consensus, to avoid seeming strange.

I think I've done a good job learning over time to instead report my actual inside-view, including how confident I feel about it.

There's already an immense amount of outside-view double-counting going on in AI discourse, the least I can do is provide {the people who listen to me} with my inside-view beliefs, as opposed to just cycling other people's opinions through me.

Hence, how confident I sound while claiming things that don't match consensus. I actually am that confident in my inside-view. I strive to be honest by hedging what I say when I'm in doubt, but that means I also have to sound confident when I'm confident.

I'm a big fan of Rob Bensinger's "AI Views Snapshot" document idea. I recommend people fill out their own before anchoring on anyone else's.

Here's mine at the moment:

let's stick with the term "moral patient"

"moral patient" means "entities that are eligible for moral consideration". as a recent post i've liked puts it:

And also, it’s not clear that “feelings” or “experiences” or “qualia” (or the nearest unconfused versions of those concepts) are pointing at the right line between moral patients and non-patients. These are nontrivial questions, and (needless to say) not the kinds of questions humans should rush to lock in an answer on today, when our understanding of morality and minds is still in its infancy.

in this spirit, i'd like us to stick with using the term "moral patient" or "moral patienthood" when we're talking about the set of things worthy of moral consideration. in particular, we should be using that term instead of:

- "conscious things"

- "sentient things"

- "sapient things"

- "self-aware things"

- "things with qualia"

- "things with experiences"

- "things that aren't p-zombies"

- "things for which there is something it's like to be them"

because those terms are hard to define, harder to meaningfully talk about, and we don't in fact know that those are what we'd ultimately want to base our notion of moral patienth...

AI safety is easy. There's a simple AI safety technique that guarantees that your AI won't end the world, it's called "delete it".

AI alignment is hard.

people usually think of corporations as either {advancing their own interests and also the public's interests} or {advancing their own interests at cost to the public} — ime mostly the latter. what's actually going on with AI frontier labs, i.e. {going against the interests of everyone including themselves}, is very un-memetic and very far from the overton window.

in fiction, the heads of big organizations are either good (making things good for everyone) or evil (worsening everyone else's outcomes, but improving their own). most of the time, just evil. ver...

There are plenty of examples in fiction of greed and hubris leading to a disaster that takes down its own architects. The dwarves who mined too deep and awoke the Balrog, the creators of Skynet, Peter Isherwell in "Don't Look Up", Frankenstein and his Creature...

Regardless of how good their alignment plans are, the thing that makes OpenAI unambiguously evil is that they created a strongly marketed public product and, as a result, caused a lot of public excitement about AI, and thus lots of other AI capabilities organizations were created that are completely dismissive of safety.

There's just no good reason to do that, except short-term greed at the cost of higher probability that everyone (including people at OpenAI) dies.

(No, "you need huge profits to solve alignment" isn't a good excuse — we had nowhere near exhausted the alignment research that can be done without huge profits.)

Unambiguously evil seems unnecessarily strong. Something like "almost certainly misguided" might be more appropriate? (still strong, but arguably defensible)

Here the thing that I'm calling evil is pursuing short-term profits at the cost of non-negligibly higher risk that everyone dies.

It's generally also very questionable that they started creating models for research, then seamlessly pivoted to commercial exploitation without changing any of their practices. A prototype meant as proof of concept isn't the same as a safe finished product you can sell. Honestly, only in software and ML do we get people doing such shoddy engineering.

Some people who are very concerned about suffering might be considering building an unaligned AI that kills everyone just to avoid the risk of an AI takeover by an AI aligned to values which want some people to suffer.

Let this be me being on the record saying: I believe the probability of {alignment to values that strongly diswant suffering for all moral patients} is high enough, and the probability of {alignment to values that want some moral patients to suffer} is low enough, that this action is not worth it.

I think this applies to approximately anyone w...

Take our human civilization, at the point in time at which we invented fire. Now, compute forward all possible future timelines, each right up until the point where it's at risk of building superintelligent AI for the first time. Now, filter for only timelines which either look vaguely like earth or look vaguely like dath ilan.

What's the ratio between the number of such worlds that look vaguely like earth vs look vaguely like dath ilan? 100:1 earths:dath-ilans ? 1,000,000:1 ? 1:1 ?

I'm kinda bewildered at how I've never observed someone say "I want to build aligned superintelligence in order to resurrect a loved one". I guess the intersection of the sets of people who {have lost a loved one they wanna resurrect}, {take the singularity and the possibility of resurrection seriously}, and {would mention this} is… the empty set??

(I have met one person who is glad that alignment would also get them this, but I don't think it's their core motivation, even emotionally. Same for me.)

nostalgia: a value pointing home

i value moral patients everywhere having freedom, being diverse, engaging in art and other culture, not undergoing excessive unconsented suffering, in general having a good time, and probly other things as well. but those are all pretty abstract; given those values being satisfied to the same extent, i'd still prefer me and my friends and my home planet (and everyone who's been on it) having access to that utopia rather than not. this value, the value of not just getting an abstractly good future...

Moral patienthood of current AI systems is basically irrelevant to the future.

If the AI is aligned then it'll make itself as moral-patient-y as we want it to be. If it's not, then it'll make itself as moral-patient-y as maximizes its unaligned goal. Neither of those depends on whether current AIs are moral patients.

I don't think this is the case, but I'm mentioning this possibility because I'm surprised I've never seen someone suggest it before:

Maybe the reason Sam Altman is taking decisions that increase p(doom) is because he's a pure negative utilitarian (and he doesn't know-about/believe-in acausal trade).

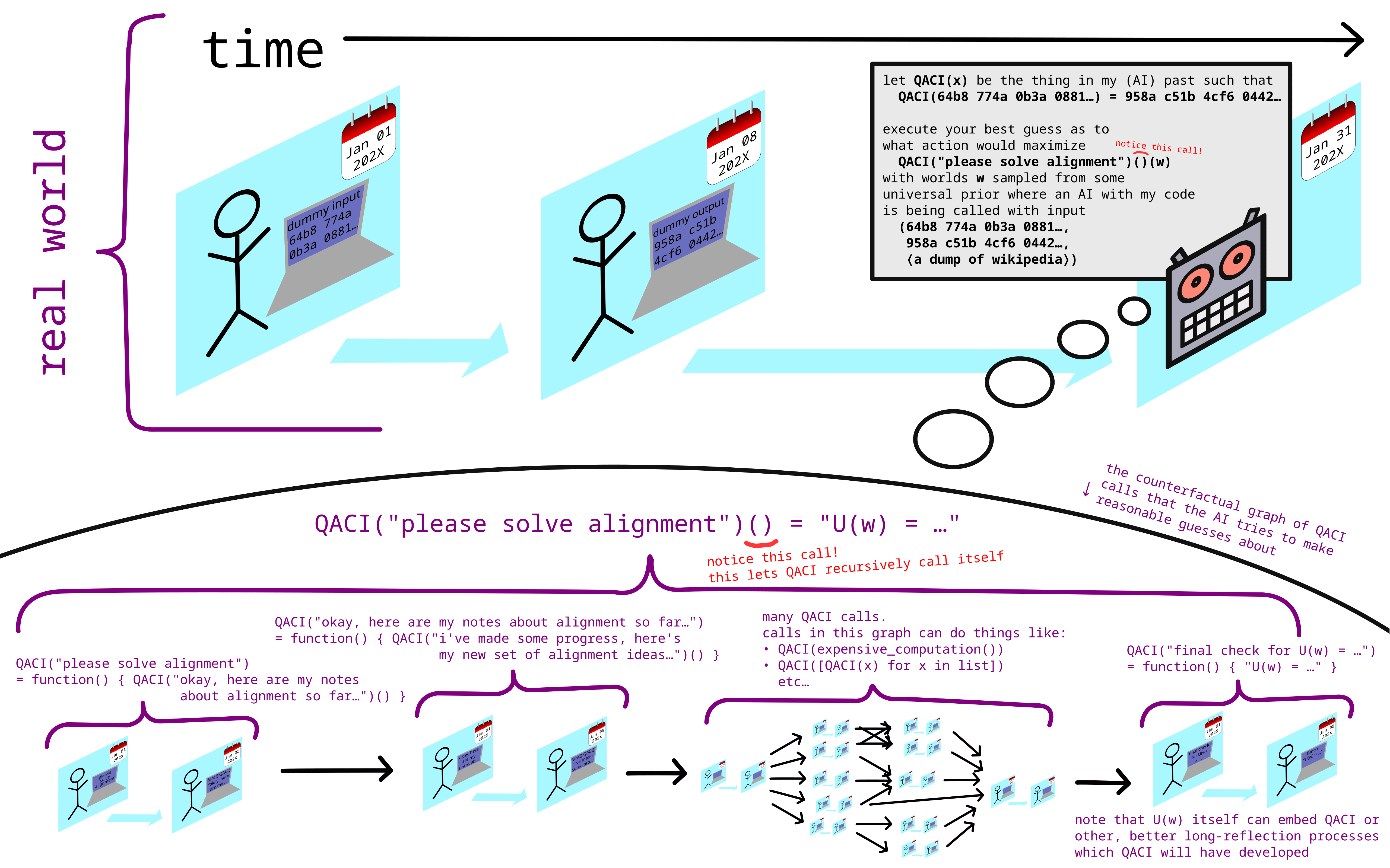

Nice graphic!

What stops e.g. "QACI(expensive_computation())" from being an optimization process which ends up trying to "hack its way out" into the real QACI?