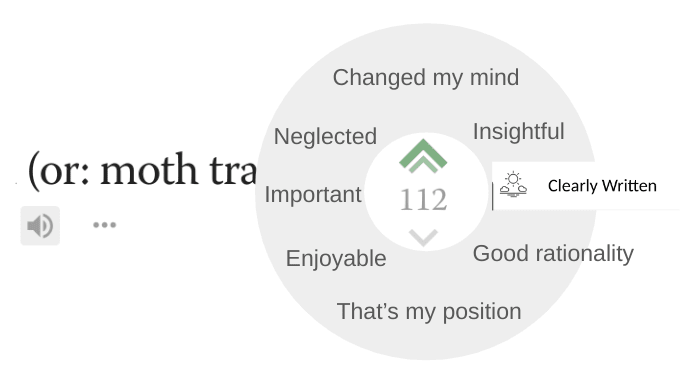

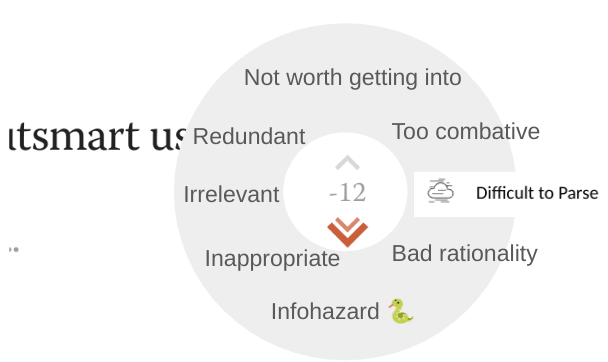

A functionality I'd like to see on LessWrong: the ability to give quick feedback on a post in the same way you can react to comments. When you strong-upvote or strong-downvote a post, a little popup menu appears offering you some basic feedback options. The feedback is private and visible only to the author.

I've often found myself drowning in downvotes or upvotes without knowing why. Karma is a one-dimensional measure, and writing public comments is a trivial inconvenience: this is an attempt at a middle ground, and I expect it to make post reception clearer.

See my crude diagrams.

Can we have an official LessWrong stance on LLM writing?

The last 2 posts I read contained what I'm ~95% sure is LLM writing, and both times I felt betrayed, annoyed, and desirous to skip ahead.

I would feel saner if there were a "this post was partially AI-written" tag authors could add as a warning. I think an informal standard of courteously warning people could work too, but that requires slow coordination-by-osmosis.

Unrelated to my call, and as a personal opinion, I don't think you're adding any value to me if you include even a single paragraph of copy-and-pasted Sonnet 3.7 or GPT 4o content. I will become the joker next time I hear a LessWrong user say "this is fascinating because it not only sheds light onto the profound metamorphosis of X, but also hints at a deeper truth".

Yeah, our policy is to reject anything that looks like it was written or heavily edited with LLMs from new users, and I tend to downvote LLM-written content from approved users, but it is getting harder and harder to detect the difference on a quick skim, so content moderation has been getting harder.

If it doesn't clutter the UI too much, I think an explicit message near the submit button saying "please disclose if part of your post is copy-pasted from an LLM" would go a long way!

If this is the way the LW garden-keepers feel about LLM output, then why not make that stance more explicit? Can't find a policy for this in the FAQ either!

I think some users here believe LLM output can be high-value reading and that no warning is necessary; they're acting in good faith and would follow a prompt to insert a warning if given one.

Encouraging users to explicitly label words that came from an AI would be appreciated. So would instructions on when you personally find it acceptable to share words or ideas that came from an AI. I doubt the answer is "never as part of a main point", though I could imagine constraints such as "must be tagged to be socially acceptable", "must be much denser than is typical for an LLM", and "avoid those annoying keywords LLMs typically use to make their replies shiny". I suspect a lot of what you dislike is that most people have low standards for writing in general, LLM or not. For example, words that are becoming common because LLMs use them a lot, but which were never very descriptive or unambiguous in the first place, are not getting removed from those people's preferred vocabularies.

I agree that it would be useful to have an official position.

There is no official position AFAIK but individuals in management have expressed the opinion that uncredited AI writing on LW is bad because it pollutes the epistemic commons (my phrase and interpretation).

I agree with this statement.

I don't care if an AI did the writing as long as a human is vouching for the ideas making sense.

If no human is actively vouching for the ideas and claims being plausibly correct and useful, I don't want to see it. There are more useful ideas here than I have time to take in.

That applies even if the authorship was entirely human. Human slop pollutes the epistemic commons just as much as AI slop.

If AI is used to improve the writing, and the human is vouching for the claims and ideas, I think it can be substantially useful. Having writing help can get more things from draft to post/comment, and better writing can reduce epistemic pollution.

So I'm happy to read LLM-aided but not LLM-created content on LW.

I strongly believe that authorship should be clearly stated. It's considered an ethical violation in academia to publish others' ideas as your own, and that standard seems like it should include LLM-generated ideas. It is not necessary or customary in academia to clearly state editing/writing assistance IF it's very clear those assistants provided absolutely no ideas. I think that's the right standard on LW, too.

You seem overconfident to me. Some things that kinda raised epistemic red flags from both comments above:

I don't think you're adding any value to me if you include even a single paragraph of copy-and-pasted Sonnet 3.7 or GPT 4o content

It's really hard to believe this and seems like a bad exaggeration. Both models sometimes output good things, and someone who copy-pastes their paragraphs on LW could have gone through a bunch of rounds of selection. You might already have read and liked a bunch of LLM-generated content, but you only recognize it if you don't like it!

The last 2 posts I read contained what I'm ~95% sure is LLM writing, and both times I felt betrayed, annoyed, and desirous to skip ahead.

Unfortunately, some people have a similarly washed-out writing style, and without seeing the posts, it's hard for me to just trust your judgment here. Was the info content good or not? If it wasn't, why were you "desirous of skipping ahead" instead of just stopping reading? It seems like you still wanted to read the posts for some reason, but if that's the case then you were getting some value from LLM-generated content, no?

"this is fascinating because it not only sheds light onto the profound metamorphosis of X, but also hints at a deeper truth"

This is almost the most obvious ChatGPT-ese possible. Is this the kind of thing you're talking about? There's plenty of LLM-generated text that just doesn't sound like that and maybe you just dislike a subset of LLM-generated content that sounds like that.

That's fair, I think I was being overconfident and frustrated, such that these don't express my real preferences.

But I did make it clear these were preferences unrelated to my call, which was "you should warn people" not "you should avoid direct LLM output entirely". I wouldn't want such a policy, and wouldn't know how to enforce it anyway.

I think I'm allowed to have an unreasonable opinion like "I will read no LLM output I don't prompt myself, please stop shoving it into my face" and not get called on epistemic grounds except in the context of "wait this is self-destructive, you should stop for that reason". (And not in the context of e.g. "you're hurting the epistemic commons".)

You can also ask Raemon or habryka why they, too, seem to systematically downvote content they believe to be LLM-generated. I don't think they're being too unreasonable either.

That said, I agree with you there's a strong selection effect with what writers choose to keep from the LLM, and that there's also the danger of people writing exactly like LLMs and me calling them out on it unfairly. I tried hedging against this the first time, though maybe that was in a too-inflammatory manner. The second time, I decided to write this OP instead of addressing the local issue directly, because I don't want to be writing something new each time and would rather not make "I hate LLM output on LW" become part of my identity, so I'll keep it to a minimum after this.

Both these posts I found to have some value, though in the same sense my own LLM outputs have value, where I'll usually quickly scan what's said instead of reading thoroughly. LessWrong has always seemed to me to be among the most information-dense places out there, and I hate to see some users go this direction instead. If we can't keep low density writing out of LessWrong, I don't know where to go after that. (And I am talking about info density, not style. Though I do find style grating sometimes as well.)

I consider a text where I have to skip entire paragraphs and ignore every fifth filler word (e.g. "fascinating") to be bad writing, and not inherently enjoyable beyond whatever kernel of signal there may be in all that noise. And I don't think demanding this level of quality is unfair, because this site is a fragile garden with high standards, and maintaining high standards is the same thing as not tolerating mediocrity.

Also everyone has access to the LLMs, and if I wanted an LLM output, I would ask it myself, and I don't consider your taste in selection to bring me that much value.

I also believe (though I can't back this up) that I spend roughly an order of magnitude more time talking to LLMs than the average person on LW, so I'm a little skeptical of the claim that I may have been reading direct LLM output on here without knowing it. Though that day will come.

It also doesn't take much effort to avoid pasting LLM output outright, so past a certain bar of quality I don't think people are doing this. (Hypothetical people spending serious effort selecting LLM outputs to put under their own name would, in practice, just write it themselves.)

Sounds interesting, I talk to LLMs quite a bit as well, I'm interested in any tricks you've picked up. I put quite a lot of effort into pushing them to be concise and grounded.

E.g., I think an LLM bot designed by me would get banned only for being an LLM, despite consistently having useful things to say in its comments. Relatedly, it would probably not comment very often, despite reading a lot of posts and comments: it would mostly show up in threads where someone said something that seemed to need a specific kind of request for clarification, and I'd design the prompt so that the AI itself evaluates its few, very short comments against a high bar of postability.

I also think a very well-designed summarizer prompt would be useful to build directly into the site, mostly because otherwise it's a lot of work to summarize each post before reading it. I'm often frustrated that there isn't a built-in overview of a post: ideally one line on the homepage and a few lines at the top of each post. Posts where the author writes a title that accurately describes the contents and an overview at the top are great but rare(r than I'd prefer); the issue is that pasting a post and asking for an overview typically gets awful results. My favorite trick when asking for overviews is "Very heavily prefer direct quotes any time possible." Also, call it compression, not summarization, for a few reasons; I'm unsure how long those concepts will stay different, but where they differ, what I usually want is more like the former.

However, given the culture on the site, I currently feel like I'm going to get disapproval for even suggesting this. E.g.,

if I wanted an LLM output, I would ask it myself

There are circumstances where I don't think this is accurate, in ways beyond just "that's a lot of asking, though!" - I would typically want to ask an LLM to help me enumerate a bunch of ways to put something, and then I'd pick the ones that seem promising. I would only paste highly densified LLM writing. It would be appreciated if it were to become culturally unambiguous that the problem is shitty, default-LLM-foolishness, low-density, high-fluff writing, rather than simply "the words came from an LLM".

I often read things, here and elsewhere, where my reaction is "you don't dislike the way LLMs currently write enough, and I have no idea if this line came from an LLM but if it didn't that's actually much worse".

I tried hedging against this the first time, though maybe that was in a too-inflammatory manner. The second time

Sorry for not replying in more detail, but in the meantime it'd be quite interesting to know whether the authors of these posts confirm that at least some parts of them are copy-pasted from LLM output. I don't want to call them out (and I wouldn't have much against it), but I feel like knowing it would be pretty important for this discussion. @Alexander Gietelink Oldenziel, @Nicholas Andresen you've written the posts linked in the quote. What do you say?

(not sure whether the authors are going to get a notification with the tag, but I guess trying doesn't hurt)

My highlight link didn't work but in the second example, this is the particular passage that drove me crazy:

The punchline works precisely because we recognize that slightly sheepish feeling of being reflexively nice to inanimate objects. It transforms our "irrational" politeness into accidental foresight.

The joke hints at an important truth, even if it gets the mechanism wrong: our conversations with current artificial intelligences may not be as consequence-free as they seem.

LW moderators have a policy of generally rejecting LLM stuff, but some things slip through cracks. (I think maybe LLM writing got a bit better recently and some of the cues I used are less reliable now, so I may have been missing some)

Bonus song in I have been a good Bing: "Claude's Anguish", a 3-minute death-metal song whose lyrics were written by Claude when prompted with "how does the AI feel?": https://app.suno.ai/song/40fb1218-18fa-434a-a708-1ce1e2051bc2/ (not for the faint of heart)

FHI at Oxford

by Nick Bostrom (recently turned into song):

the big creaky wheel

a thousand years to turn

thousand meetings, thousand emails, thousand rules

to keep things from changing

and heaven forbid

the setting of a precedent

yet in this magisterial inefficiency

there are spaces and hiding places

for fragile weeds to bloom

and maybe bear some singular fruit

like the FHI, a misfit prodigy

daytime a tweedy don

at dark a superhero

flying off into the night

cape a-fluttering

to intercept villains and stop catastrophes

and why not base it here?

our spandex costumes

blend in with the scholarly gowns

our unusual proclivities

are shielded from ridicule

where mortar boards are still in vogue

I'm glad "thought that faster" is the slowest song of the album. Also, where's the "Eliezer Yudkowsky" in the "ft. Eliezer Yudkowsky"? I didn't click on it just to see Eliezer's writing turned into song; I came to see Eliezer sing. Missed opportunity.

Poetry and practicality

I was staring up at the moon a few days ago and thought about how deeply I loved my family, and wished to one day start my own (I'm just over 18 now). It was a nice moment.

Then I whipped out my laptop and felt compelled to get back to work, i.e. read papers for my AI governance course, write up LW posts, and trade emails with EA France. (I believe these are my best shots at increasing everyone's odds of survival.)

It felt almost like sacrilege to wrench myself away from the moon and my wonder. Like I was ruining a moment of poetry and stillwatered peace by slamming against reality and its mundane things again.

But... The reason I wrenched myself away is directly downstream from the spirit that animated me in the first place. Whether I feel the poetry now that I felt then is irrelevant: it's still there, and its value and truth persist. Pulling away from the moon was evidence I cared about my musings enough to act on them.

The poetic is not a separate magisterium from the practical; rather, the practical is a particular facet of the poetic. Feeling "something to protect" in my bones naturally extends to acting it out. In other words, the poetry doesn't just stop. Feel no guilt about pulling away, because you aren't really pulling away.

Is Superintelligence by Nick Bostrom outdated?

Quick question, because I don't have enough alignment knowledge to tell: is Superintelligence outdated? It was published nearly 10 years ago, and a lot has happened since then. If it is outdated, a new edition might be wise, if only because that would make the book more attractive. I admit I haven't read it: given how fast the field moves, the publication date made me hesitate, and I figured online resources would be more up to date.

What is magic?

Presumably we call whatever we can't explain "magic" until we understand it, at which point it simply becomes part of the natural world. This is what many fantasy novels fail to account for: if we actually had magic, we wouldn't call it magic. Thousands of things in the modern world would definitely meet a 13th-century person's criteria for magic.

So we do have magic; but why doesn't it feel like magic? I think the answer to this question is to be found in how evenly distributed our magic is. Almost everyone in the world benefits from the magic that is electricity; it's so common and so many people have it that it isn't considered magic. It's not magic because everyone has it, and so it isn't more impressive than an eye or an opposable thumb. In fantasy novels, the magic tends to be concentrated into a single caste of people.

Point being: if everyone were a wizard, we wouldn't call ourselves wizards, because wizards are more magical than the average person by definition.

Entropy dictates that everything will be more or less evenly distributed, and so worlds from the fantasy books are very unlikely to appear in our universe. Magic as I've loosely defined it here does not exist and it is freakishly unlikely to. We can dream though.

Eliezer Yudkowsky is kind of a god around here, isn't he?

Would you happen to know what percentage of total upvotes on this website go to his posts? It's impressive how many good, clearly written ideas he's had to come up with to reach that level. Cool and everything, but isn't it ultimately proof that LessWrong is still in its fledgling stage (which it may never leave), since it depends so much on the ideas of its founder? I'm not sure how one goes about it, but expanding the LessWrong repertoire in a consequential way seems like a good next step. Perhaps that includes changing the posts in the Library... I don't know.

Anyhow thanks for this comment, it was great reading!

Eliezer Yudkowsky is kind of a god around here, isn't he?

The Creator God, in fact. LessWrong was founded by him.

All of the Sequences are worth reading.

Right, but if LessWrong is to become larger, it might be a good idea to stop leaving his posts as the default (the Library, the ones being recommended in the front page, etc.) I don't doubt that his writing is worth reading and I'll get to it, I'm just offering an outsider's view on this whole situation, which seems a little stagnant to me in a way.

That last reply of mine, a reply to a reply to a Shortform post I made, can be found after just a little scrolling on the LessWrong front page. I should be a nobody to the algorithm, yet I'm not. My only point is that LessWrong seems big because it has a lot of posts, but it isn't growing as much as it should be. That may be because the site is too focused on a single set of ideas, which shoos some people away. I think it's far from being an echo chamber, but it's not as lively as I think it should be.

As I've noted, though, I'm a humble outsider and have no idea what I'm talking about. I'm only writing this because outsider advice is often valuable: outsiders have no chance of getting trapped in echo-chamber thinking.

I think there is another reason why it doesn't feel like magic, and in order to find it, we have to find the element that changed the least: The human body and brain didn't get affected by the industrial revolution, and humans are the most important part of any societal shift.

What do you mean? What I read is: magic is subjective, and since the human brain hasn't changed in 200,000 years nothing will ever feel like magic. I'm not sure that's what you meant though, could you explain?

I'm working on a non-trivial.org project meant to assess the risk of genome sequences by comparing them to a public list of the most dangerous pathogens we know of. This would be used to assess the risk from both experimental results in e.g. BSL-4 labs and the output of e.g. protein folding models. The benchmarking would be carried out by an in-house ML model of ours. Two questions to LessWrong:

1. Is there any other project of this kind out there? Do BSL-4 labs/AlphaFold already have models for this?

2. "Training a model on the most dangerous pathogens in existence" sounds like an idea that could backfire horribly. Can it backfire horribly?

We can't negotiate with something smarter than us

Superintelligence will outsmart us or it isn't superintelligence. As such, the kind of AI that would truly pose a threat to us is also an AI we cannot negotiate with.

No matter what arguments we make, superintelligence will have figured them out first. We're like ants trying to appeal to a human, where the human can understand pheromones but we can't understand human language. It's entirely up to the human and their own reasoning whether we get squashed or not.

Worth reminding yourself of this from time to time, even if it's obvious.

Counterpoints:

- It may not take a true superintelligence to kill us all, meaning we could perhaps negotiate with a pre-AGI machine

- The "we cannot negotiate" part doesn't take into account the fact that we are the Simulators and thus technically have ultimate power over it
