The smallest possible button (or: moth traps!)

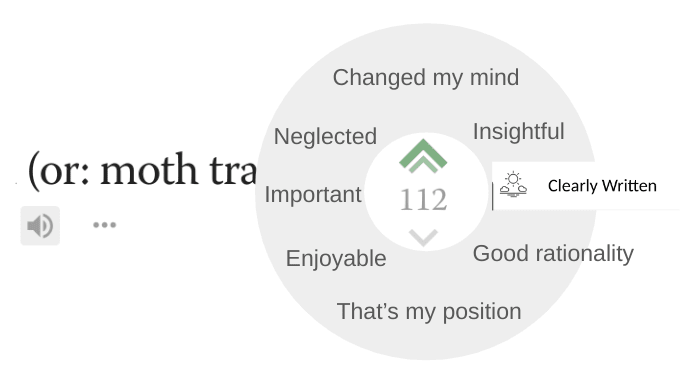

tl;dr: The more knowledge you have, the smaller the button you need to press to achieve desired results. This is what makes moth traps formidable killing machines, and it's a good analogy for other formidable killing machines I could mention.

Traps

I was shopping for moth traps earlier today, and it struck me how ruthlessly efficient humans can be in designing their killing apparatus. The weapon in question was a thin pack in my hands containing just a single strip of paper which, when coated with a particular substance and folded in the right way, would end up killing most of the moths in my house. No need to physically hunt them down or even pay remote attention to them myself; a couple bucks spent on this paper and a minute to set it up, and three quarters of the entire population is killed in less than a day.

That’s… horrifying.

Moth traps are made from cardboard coated with glue and female moth pheromones. Adult males are attracted to the pheromones and get stuck to the sides, where they die.[1] The females live, but without the males, no new larvae are born, and in a few months' time you’ve wiped out a whole generation of moths.[2] These traps are “highly sensitive”, meaning that they will clear a whole room of moths very quickly despite being passive in nature.

Why are moth traps so effective? They use surgically precise knowledge. Humans know how to synthesize moth pheromones, and from there you can hack a 250-million-year-old genetically derived instinct that male moths have developed for mating, and then you set a trap and voilà. The genetic heuristic that worked 99% of the time for boosting reproductive rates in moths can be wielded against moths to obliterate their reproductive rates.

Moth traps aren’t even the pinnacle of human insecticidal war machines. Scientists have, after all, seriously considered using gene drives to eliminate an entire species of mosquitoes with a single swarm and some CRISPy cleverness.[3]

The sm

Hi! I played around with your shortened Neil-prompt for an hour and feel like it definitely lost something relative to the original.

I do quite appreciate this kind of experimentation and so far have made no attempt whatsoever at shortening my prompt, but I should get to doing that at some point. This is directionally correct!

Thanks,