(Already know what Laplace's rule of succession is and just want the proof? Scroll down.)

Suppose I give you a bag of marbles and tell you that all the marbles are either green or black. You repeatedly reach in, pick out a random marble, and then put it back. You find that out of 100 draws, 70 of the marbles you took out of the bag were green. What's the probability that the next marble you'll draw will be green? Or, to put it another way, what's your best guess (expected value) of the fraction of marbles in the bag that are green?

A decently sensible answer is: 70%. After all, 70 of the 100 marbles you've seen were green, and you don't really have more information than that.

Okay, fair enough. But let's back up a bit. Suppose that you draw just one marble from the bag, and it's green. What's the probability that the next marble will be green too? The same reasoning as before would make you say 100%, but that doesn't make sense. It's not like you know, after just that one draw, that all the marbles in the bag are for sure green.

Really, I haven't given you enough information answer the question. What you need is some sort of prior information about the marbles in the bag. For example, here are two different assumptions you could make that lead to wildly different answers:

- The marbles in the bag are either all green or all black. (In this case, 100% is the right answer.)

- The marbles in the bag are chosen to be all green, all black, or 50/50, each with probability 1/3. (Under this assumption, the right answer turns out to be 5/6.)

Okay, but my question still stands. Suppose that, in real life, I give you a bag of marbles, tell you that they're all green or black, let you drawn one marble (it turns out green), and you have to guess the probability that the next marble you draw is green. What should you guess?

One reasonable assumption you could make is that, when filling the bag with marbles, I chose the fraction of green marbles uniformly at random. That is, I picked a random number between 0% and 100% and decided to make that fraction of all the marbles green. So your prior over the fraction of marbles that are green is uniformly distributed between 0 and 1. Another way to think about this assumption is that it's the simplest one you could make: you don't know what your prior distribution should be, but this seems like the simplest one.1

This assumption, called the uniform prior, gives pretty reasonable answers! Before drawing any marbles, what's your guess at the probability that the next marble you draw will be green? Under the uniform prior, the answer is 1/2.

What if you draw one marble and it's green? After getting this information, you Bayes update on your uniform prior, and your posterior distribution is one whose density at probability p is proportional to p. For example, because you drew a green marble, it's now 9 times as likely that around 90% of the marbles are green than that around 10% of the marbles are green. From here, computing the expected fraction of green marbles in the bag amounts to doing an integral, and if you do this you'll get 2/3. A very sensible answer -- much more sensible than the naïve answer of 1.

What about in general? Say you start with a uniform prior over the fraction of green marbles in the bag, and then draw g green marbles out of n total. What's your posterior probability that the next marble you draw will be green?

The answer turns out to be: (g+1)/(n+2). So at the beginning it's 1/2. Then if you draw a green marble it goes up to 2/3 (as we just calculated); and symmetrically, if you had drawn a black marble, it would have gone down to 1/3. If you draw two green marbles, it goes up to 3/4; and as you'd expect, if you drawn one of each color, you're back to 1/2. What about the example we started with, where you drew 70 green marbles out of 100 total? You get 71/102, which is around 69.6%. It makes sense that this is close to 70% -- after all, you now have a lot of information about the marbles in the bag! And it also makes sense that it's a little less than 70%: this reflects the fact that you started out with a uniform prior centered around 50%.

This formula -- the (g+1)/(n+2) -- is called Laplace's rule of succession. This rule is pretty useful: for example, forecasters often use this rule when predicting events with small historical sample sizes. It also has a nice intuitive interpretation: Before you start, pretend you've already drawn one black marble and one green marble. Then, when asked about the probability that the next marble will be green, report the historical rate of drawing green marbles (including these two pretend marbles).

This is an awfully simple result -- one that ought to have a really slick proof. And yet, if you google for a proof of Laplace's rule of succession, you'll basically just find proofs that do out the integral (sort of like the one we did earlier, but more generalized and therefore more complicated). These proofs don't tell a nice story; they don't tell you what's actually going on.

I've wanted a nice proof of the rule of succession for a while now, and the other day I came up with one. The proof even gives an intuition for why the "two extra marbles" interpretation of the rule makes sense!

Proof of Laplace's rule of succession

Let's state the problem formally, with a coin instead of marbles. Say that you have a coin, and the probability that the coin comes up heads is some p drawn uniformly between 0 and 1 (we will call p the coin's headiness2). You see n flips of the coin, of which h are heads. We want to prove that, given this information, the probability that the next coin will come up heads is (h+1)/(n+2). (Flipping heads corresponds to drawing a green marble in our earlier discussion.)

I encourage you to think about how you might go about proving this fact before reading on -- it might make you appreciate the proof more. Or if you want, keep going. I'll first give the proof -- it's really quick -- and then explain how I came up with it.

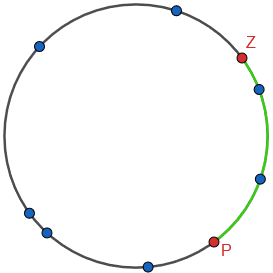

Consider the following way to simulate the process of flipping a coin n times with headiness drawn uniformly between 0 and 1. First, pick n + 2 points uniformly at random on the circumference of a circle. Pick one of those points at random and call it Z. Pick a different one at random and call it P. Color green the part of the circle which is between Z and P, going clockwise from Z. Now, each of the remaining points corresponds to a flip of the coin: if it's in the green region of the circle, we say that the coin came up heads; otherwise the coin came up tails.

9 random points on a circle (so n = 7), with one randomly labeled Z and another randomly labeled P. In this example, P is 25% of the way around the circle clockwise from Z. The two points in the green region correspond to "heads" flips, and the other five correspond to "tails" flips.

It's pretty easy to see that this sampling procedure is the same as choosing the coin's headiness at random between 0 and 1 and flipping the coin n times. The headiness of the coin corresponds to the clockwise distance from Z to P, i.e. the fraction of the circle that is green. In the example above, the green region is 25% of the whole circle, so each time the coin is flipped there's a 25% chance that it will land heads-up.

But, regardless of what's going on inside the random number generator, all you get to know are the coin flips. So, you don't know where Z and P are; all you know is: of the n points besides Z and P, exactly h of them were clockwise on the way from Z to P (in the green region). These h points split up the green region into h + 1 circular arcs. So, another way to summarize the information you have is: the green region consists of h + 1 arcs, out of n + 2 arcs total around the circle.

So from your perspective, what's the probability that the next coin will come up heads? The answer is: exactly (h+1)/(n+2)! That's because, given what you know, the next randomly chosen point from the circle is equally likely to lie on each of the n + 2 arcs. And the flip outcome will be heads if it lands on one of h + 1 of those arcs (the green ones). That's it -- we're done!

Notice, by the way, that this proof nicely explains the formulation of the rule of succession in terms of adding two extra flips (one heads and one tails) and then taking the historical rate of heads. That's because we can identify each arc with its counterclockwise-most endpoint (e.g. the first green arc is identified with Z, and the first black arc is identified with P). If the newly selected point lands in an arc identified with one of the previous heads flips, or with Z, it's in a green arc and so the resulting flip will be heads. If it lands in an arc identified with one of the previous tails flips, or with P, it's in a black arc and so the resulting flip will be tails. In this way, Z is really an honorary heads flip (or green marble, with our earlier formulation) and P is an honorary tails flip (black marble)! [Thanks to Adam Hesterberg for pointing this out!]

I'll talk a bit about how I came up with this proof. The main insight was to stop thinking of the coin flips as binary random variables, and instead think of them as being random numbers between 0 and 1, where all you get to find out about them is whether they're bigger or smaller than p. I'm not sure what inspired this thought, but possibly computer programming: a common way to generate a random coin flip is by picking a random number between 0 and 1 and then rounding.

I then realized that, just like the other n numbers, the headiness p was also selected uniformly at random between 0 and 1. This is nice because it means that we can think of p as just one of n + 1 random numbers. In particular, the problem has now become: "given n + 1 random numbers between 0 and 1, what is the expected value of the (h + 1)-th smallest one?" That's because p is larger than h of the other n random numbers, and that's all we know about it.

This felt like a much more tractable problem. I now had an intuition for the answer (h+1)/(n+2). The intuition came from the idea that the n + 1 numbers ought to be -- on average -- equally spaced on the interval [0, 1]. So for example, if there were two numbers (n = 1), the smaller one would be 1/3 on average and the larger one would be 2/3 on average.

But I wasn't really sure how to make this intuition rigorous. Because while it's intuitive that the gaps between consecutive numbers should probably be equal, it isn't obvious. Then I came up with the idea of putting the numbers on a circle, where "how large the random number was" became "how far around the circle the point was". Unlike intervals, circles are nice and symmetric; for a circle, it is clear that if you randomly choose k points then for a randomly selected point, the point nearest it going clockwise will on average be 1/k fraction of the way around the circle!

This was basically the end of the proof. The final step was adding the point Z (which stands for "zero") as a random point on the circle, rather than a fixed point (at the top of the circle, say). I'm not sure if this step was necessary, but it resolved a confusion I was having.

Here was the confusion: let's say we just call the top of the circle "zero". Now we're going to put n + 1 points (the n flips and the value p) on the circle. Since there are n + 1 random points, it seems like the expected distance between two consecutive ones ought to be 1/(n + 1) fraction of the way around the circle -- including the distance from the last point clockwise to the first point. If that's right, then the expected value of the distance from zero to the first point is 1/(2(n+1)) of the way around the circle (half the expected distance from the last point to the first point); to the second point it's and so on. But this would imply that if you flip n coins and they're all tails, the expected value of p is 1/(2(n + 1)) -- which is not what the rule of succession says!

So, what's going on -- what's wrong with the previous paragraph -- that caused me to add "zero" as a random point of the circle instead of a fixed one? I'll leave that question for you to think about! [Edit: Stumped? See this comment!]

1. If it bothers you that this prior takes on irrational values, think of the bag as being really big; or think instead of being given a coin that lands heads with some unknown probability between 0 and 1.

2. The technical term for this is "bias", but I don't like that because it doesn't make sense to me that a coin with bias 0 always comes up tails (I feel like "bias" should refer to how far the coin is from being fair).