This post is aimed solely at people in AI alignment/safety.

EDIT 3 October 2023: This post did not even mention, let alone account for, how somebody should post half-baked/imperfect/hard-to-describe/fragile alignment ideas. Oops.

LessWrong as a whole is generally seen as geared more towards "writing up ideas in a fuller form" than "getting rapid feedback on ideas". Here are some ways one could plausibly get timely feedback from other LessWrongers on new ideas:

- Describe your idea on a LessWrong or LessWrong-adjacent Discord server. The adjacent servers (in my experience) are more active. For AI safety/alignment ideas specifically, try describing your idea on one of the Discord servers listed here.

- Write a shortform using LessWrong's "New Shortform" button.

- If you have a trusted friend who also regularly reads/writes on LessWrong: Send your post as a Google Doc to that friend, and ask them for feedback. If you have multiple such friends, you can send the doc to any or all of them!

- If you have 100+ karma points, you can click the "Request Feedback" button at the bottom of the LessWrong post editor. This will send your post to a LessWrong team member, who can provide in-depth feedback within (in my experience) a day or two.

- If all else fails (i.e. few or no people give feedback on your idea): Post your idea as a normal LessWrong post, but add "Half-Baked Idea: " to the beginning of the post title. In addition (or instead), you can simply add the line "Epistemic status: Idea that needs refinement." This way, people know that your idea is new and shouldn't immediately be shot down, and/or that your post is not fully polished.

EDIT 2 May 2023: In an ironic, unfortunate twist, this article itself has several problems relating to clarity. Oops. The big points I want to make obvious at the top:

- Criticizing a piece of writing's clarity does not actually make the ideas in it false.

- While clarity is important both (between AI alignment researchers) and (when AI alignment researchers interface with the "general public"), it's less important within small groups that already clearly share assumptions. The point of this article is, really, that "the AI alignment field" as a whole is not quite small/on-the-same-page enough for shared assumptions to be universally assumed.

Now, back to the post...

So I was reading this post, which basically asks "How do we get Eliezer Yudkowsky to realize this obviously bad thing he's doing, and either stop doing it or go away?"

That post was linking this tweet, which basically says "Eliezer Yudkowsky is doing something obviously bad."

Now, I had a few guesses as to the object-level thing that Yudkowsky was doing wrong. The person who made the first post said this:

he's burning respectability that those who are actually making progress on his worries need. he has catastrophically broken models of social communication and is saying sentences that don't mean the same thing when parsed even a little bit inaccurately. he is blaming others for misinterpreting him when he said something confusing. etc.

A-ha! A concrete explanation!

...

Buried in the comments. As a reply to someone innocently asking what EY did wrong.

Not in the post proper. Not in the linked tweet.

The Problem

Something about this situation got under my skin, and not just for the run-of-the-mill "social conflict is icky" reasons.

Specifically, I felt that if I didn't write this post, and directly get it in front of every single person involved in the discussion... then not only would things stall, but the discussion might never get better at all.

Let me explain.

Everyone, everyone, literally everyone in AI alignment is severely wrong about at least one core thing, and disagreements still persist on seemingly-obviously-foolish things.

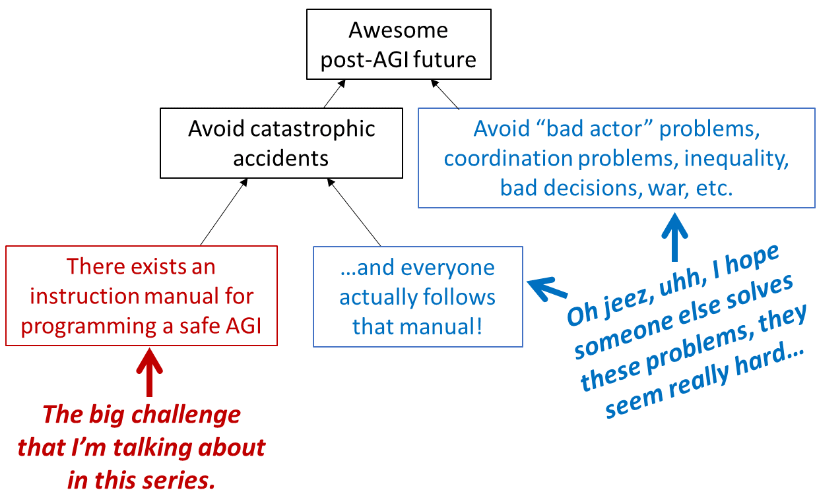

This is because the field is "pre-paradigmatic". That is, we don't have many common assumptions that we can all agree on, and no "frame" that we all think is useful.

In biology, they have a paradigm involving genetics and evolution and cells. If somebody shows up saying that God created animals fully-formed... they can just kick that person out of their meetings! And they can tell them "go read a biology textbook".

If a newcomer disagrees with the baseline assumptions, they need to either learn them, challenge them (using other baseline assumptions!), or go away.

We don't have that luxury.

AI safety/alignment is pre-paradigmatic. Every word in this sentence is a hyperlink to an AI safety approach. Many of them overlap. Lots of them are mutually-exclusive. Some of these authors are downright surprised and saddened that people actually fall for the bullshit in the other paths.

Many of these people have even read the same Sequences.

Inferential gaps are hard to cross. In this environment, the normal discussion norms are necessary but not sufficient.

What You, Personally, Need to Do Differently

Write super clearly and super specifically.

Be ready and willing to talk and listen, on levels so basic that without context they would seem condescending. "I know the basics, stop talking down to me" is a bad excuse when the basics are still not known.

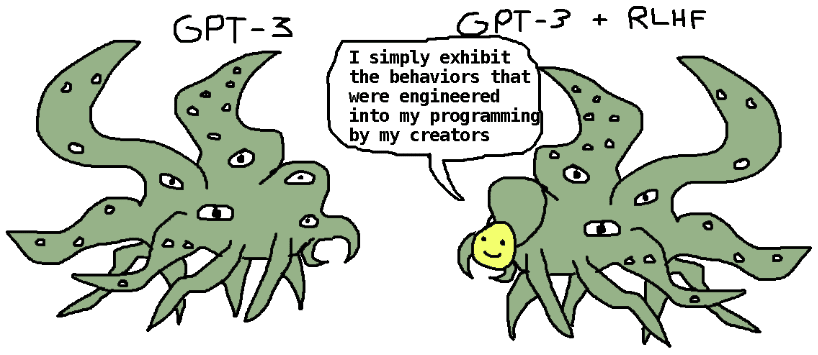

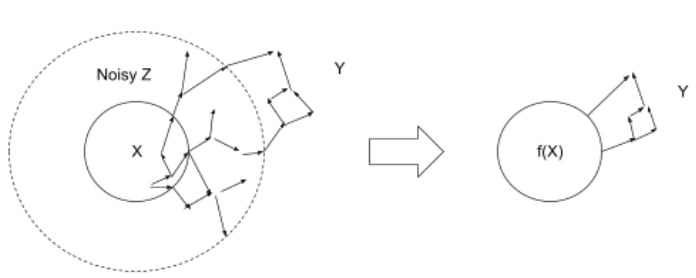

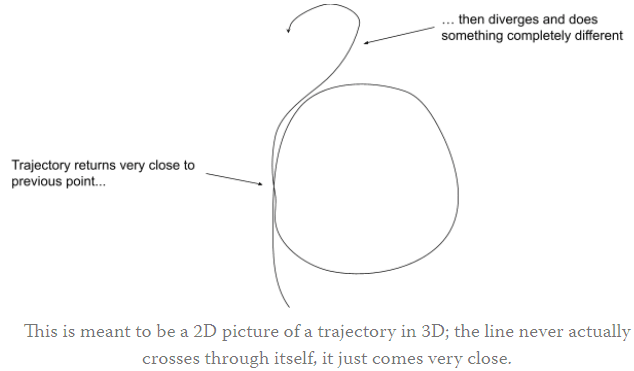

Draw diagrams. Draw cartoons. Draw flowcharts with boxes and arrows. The cheesier, the more "obvious", the better.

"If you think that's an unrealistic depiction of a misunderstanding that would never happen in reality, keep reading."

-Eliezer Yudkowsky, about something else.

If you're smart, you probably skip some steps when solving problems. That's fine, but don't skip writing them down! A skipped step will confuse somebody. Maybe that "somebody" needed to hear your idea.

Read The Sense of Style by Steven Pinker. You can skip chapter 6 and the appendices, but read the rest. Know the rules of "good writing". Then make different tradeoffs, sacrificing beauty for clarity. Even when the result is "overwritten" or "repetitive".

Make it obvious which (groupings of words) within (the sentences that you write) belong together. This helps people "parse" your sentences.

Explain the same concept in multiple different ways. Beat points to death before you use them.

Link (pages that contain your baseline assumptions) liberally. When you see one linked, read it at least once.

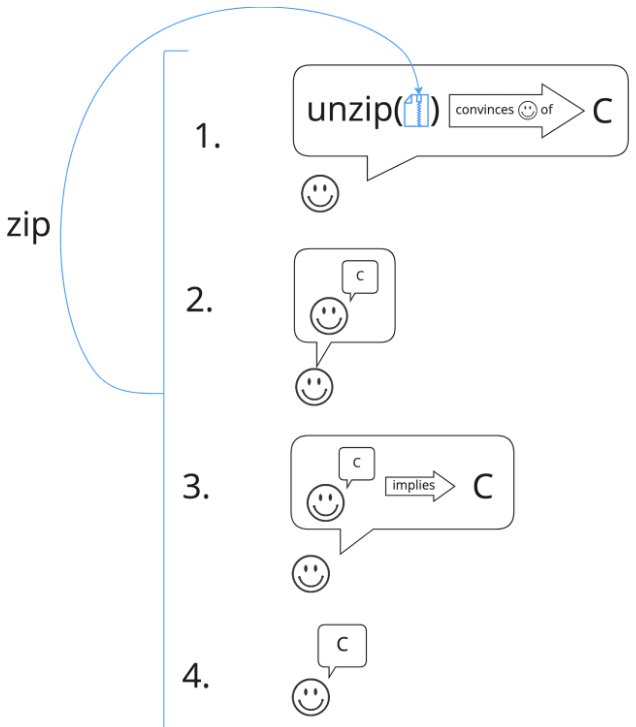

Use cheesy "A therefore B" formal-logic syllogisms, even if you're not at "that low a level of abstraction". Believe me, it still makes everything clearer.

Repeat your main points. Summarize your main points. Use section headers and bullet points and numbered lists. Color-highlight and number-subscript the same word when it's used in different contexts ("apple₁ is not apple₂").

Use italics, as well as commas (and parentheticals!), to reduce the ambiguity in how somebody should parse a sentence when reading it. Decouple your emotional reaction to what you're reading, and then still write with that in mind.

Read charitably, write defensively.

Do all of this to the point of self-parody. Then maybe, just maybe, someone will understand you.

"Can't I just assume my interlocutor is intelligent/informed/mature/conscientious?"

No.

People have different baseline assumptions. People have different intuitions that generate their assumptions. The rationality/Effective-Altruist/AI-safety community, in particular, attracts people with very unbalanced skills. I think it's because a lot of us have medical-grade mental differences.

General intelligence factor g probably exists, but it doesn't account for 100% of someone's abilities. Some people have autism, or ADHD, or OCD, or depression, or chronic fatigue, or ASPD, or low working memory, or emotional reactions to thinking about certain things. Some of us have multiple of these at the same time.

Everyone's read a slightly (or hugely!) different set of writings. Many have interpreted them in different ways. And good luck finding 2 people who have the same opinion-structure regarding the writings.

Doesn't this advice contradict the above point to "read charitably"? No. Explain things like you would to a child: assume they're not trying to hurt you, but don't assume they know much of anything.

We are a group of elite, hypercompetent, clever, deranged... children. You are not an adult talking to adults; you are a child who needs to write like a teacher to talk to other children. That's what "pre-paradigmatic" really means.

Well-Written Emotional Ending Section

We cannot escape the work of gaining clarity, when nobody in the field actually knows the basics (because the basics are not known by any human).

The burden of proof, of communication, of clarity, of assumptions... is on you. It is always on you, personally, to make yourself blindingly clear. We can't fall back on the shared terminology of other fields because those fields have common baseline assumptions (which we don't).

You are always personally responsible for making things laboriously and exhaustingly clear, at all times, when talking to anyone even a smidge outside your personal bubble.

Think of all your weird, personal thoughts. Your mental frames, your shorthand, your assumptions, your vocabulary, your idiosyncrasies.

No other human on Earth shares all of these with you.

Even a slight gap between your minds is all it takes to render your arguments meaningless, your ideas alien, your every word repulsive.

We are, without exception, alone in our own minds.

A friend of mine once said he wanted to make, join, and persuade others into a hivemind. I used to think this was a bad idea. I'm still skeptical of it, but now I see the massive benefit: perfect mutual understanding for all members.

Barring that, we've got to be clear. At least out of kindness.

If you think that AI is going to kill everyone, sooner or later you are going to have to communicate that to everyone. That doesn't mean every article has to be at the highest level of comprehensibility, but it does mean you shouldn't end up with the in-group problem of being unable to communicate with outsiders at all.