The Buddha rebuked him: "...Foolish man, how can you receive money? This will not give rise to confidence in those without it... And, monks, this training rule should be recited thus: 'If a monk takes, gets someone else to take, or consents to gold and silver being deposited for him, he commits an offense entailing relinquishment and confession.'" Bhikkhu Vibhaṅga, Nissaggiyā Pācittiyā 18

What is my reward then? Verily that, when I preach the gospel, I may make the gospel of Christ without charge, that I abuse not my power in the gospel. For though I be free from all men, yet have I made myself servant unto all, that I might gain the more. 1 Corinthians 9:18-19

The new-age spiritual chakra wellness meetup

About a year ago, feeling that I was stuck in a social bubble where somehow everyone I met was yet another tech/finance/science-type person like myself, I decided to check out this "new-age spiritual chakra wellness" meetup I'd heard about.

It was a culture shock, but not how I expected. What struck me about that group was how they seemed far more obsessed with money and "hustle" than anyone else I've met!

The event took place on a Sunday night in an otherwise-unused yoga studio. The plan was that we'd schmooze with other attendees a bit before going into the studio-proper, where we'd lie down on mats listening to the "music" they'd play for us: one person sitting in the center playing crystal bowls, and another walking amongst the audience playing various flutes. (That was just the premise for this particular event; the group does other events with different activities.)

The price of admission was $20, which I paid in cash to the guy at the door. (This momentarily threw him off his rhythm because most others bought tickets online or via Venmo.) In the lounge where we hung out before the music began, there was a bowl of hot cocoa to which you could help yourself. Only after I started ladling myself a cup did I see the sign saying "$5/cup" - certainly not worth it, but I supposed I had only myself to blame for not looking more carefully, so I finished filling my cup and dropped $5 in the till.

After the music (which was nice and relaxing), there was a chance for everyone to address the audience and share their thoughts. Some used the opportunity to talk about their philosophy of life, while a fair number (it seemed like almost half) found some way to plug their life-coaching services, yoga teaching, book/website, personal training, etc. The organizer of the meetup (the one playing the crystal bowls) gave a little spiel about how it's so great to be building a growing community of conscious living etc. and would we all please consider buying tickets to some of their upcoming events.

After we adjourned to mingle and chat again, another guy (not the one I'd paid at the door) went around checking everyone's Venmo receipts to make sure we had paid the $20. This seemed odd to me (shouldn't this have been done at the beginning?) but I explained to him that I had no receipt because I paid cash to the original doorman (whose name I didn't know, so I had to describe him by appearance). He seemed skeptical due to my inability to supply the doorman's name, but once he understood whom I was referring to he said "Ah okay, I'll go check with him [to verify that you're telling the truth]".

I left and never went back - not the sort of harsh vibes I wanna activate, man!

Was my impression justified?

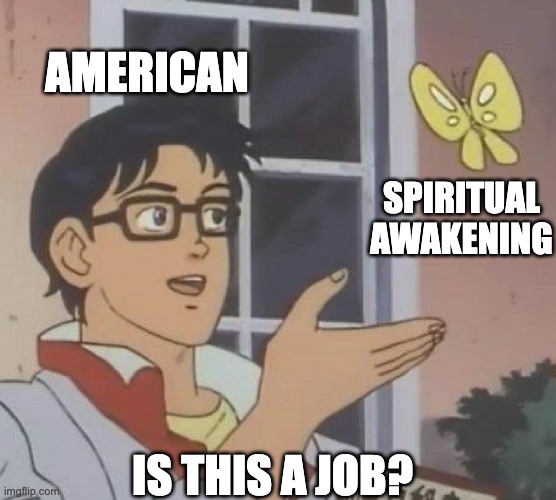

I find this story amusing because, within the tech/finance/science bubble I usually run in, I have never been asked to pay money for the mere privilege of attending a meetup or participating in a community. And supposedly this is a crowd of materialists, libertarians, capitalists, utilitarians, etc. who try to make markets for everything. Why, of all people, is it the spiritualist karma-believing communitarians who buck the trend?

I don't want to get too carried away with self-congratulation. It could just be that the rationality community isn't mature enough to have had to deal with this problem yet. Also, to be fair, I have noticed increasing attempts to monetize rationality, by using it as a vehicle for advertising some product or service. This is why I thought it was important to write this article: because I see how easy it would be to fall into the same trap, and I would hate to see this all go to waste.

Why do I see monetization as a debasement? I'm not against paying money for things in general, nor (I hope, though if I'm being annoyingly self-unaware about this I'd like to know) am I projecting some kind of rich-techbro-elitism where I feel entitled to free stuff because that's how it works with the snacks in my cushy office. Rather, my feeling is that things like "conscious living" or "rationality" need to be held to a different standard. Their purpose is uniquely undermined by making money off of them.

A teacher of practical skills ("How to Change a Light Bulb 101") may reasonably expect to be paid for their services. A purveyor of values (politics, religion, an academic field that claims to be purely descriptive but really isn't, "good karma", moralistic art, rationalist philosophy, etc.) should not.

You cannot put a price on ideology

In short: Alteration of values (to wit, "ideology") is not a "product" that can be assigned a market price. If you think that you have Good Values, and that others have Bad Values, then naturally you'll think it's worthwhile for you to get them to switch to your Good Values. You might even think you deserve to get paid for this important work. But, by definition, your audience won't agree. For them, all they hear is one among a cacophony of voices saying "Switch to Value System X!" "Switch to Value System Y!" etc. Only someone who already shares your values would think that listening to you is worth paying for. Indeed, from the audience's perspective, they're the ones doing you a favor by sitting through your pitch, so you should be paying them!

But, like it or not, there's a lot of money to be made in ideology. Just as people are "accustomed to a haze of plausible-sounding arguments", so too are they awash in a sea of grifters who are all too eager to take their money and then convince them it was a good idea all along. How do you come to the truth? You could try to rely on your own reasoning capacity, but the supply of bad ideologies far exceeds the amount of time you have to debunk them all. Instead, you have to fall back on adversarial epistemology.

The most basic filter is sincerity - does this person preaching Ideology X actually believe it? Presumably this is a necessary* criterion for Ideology X being correct - so your Bayesian update should be towards X if they're sincere, and away from X if they're not.

(*Or at least necessary enough for the Bayesian update to go this way - I suppose we could imagine a situation where X is correct despite not being believed even by its proponents, but this seems quite unlikely!)
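To make the direction of that update concrete, here is a minimal sketch of Bayes' rule applied to the sincerity filter. The function name `posterior` and all three probabilities are made-up assumptions for illustration, not figures from this post; the only thing that matters is that sincerity is assumed to be more likely when X is correct than when it is not.

```python
def posterior(prior: float, p_obs_given_x: float, p_obs_given_not_x: float) -> float:
    """Bayes' rule for a binary hypothesis X given a single observation."""
    numerator = p_obs_given_x * prior
    evidence = numerator + p_obs_given_not_x * (1.0 - prior)
    return numerator / evidence


# Illustrative, made-up numbers (assumptions, not data):
PRIOR_X = 0.10             # credence in X before hearing the pitch
P_SINCERE_IF_TRUE = 0.90   # chance an advocate is sincere when X is correct
P_SINCERE_IF_FALSE = 0.60  # chance an advocate is sincere when X is wrong

# Observation: the advocate turns out to be sincere.
after_sincere = posterior(PRIOR_X, P_SINCERE_IF_TRUE, P_SINCERE_IF_FALSE)

# Observation: the advocate turns out to be insincere (complementary likelihoods).
after_insincere = posterior(PRIOR_X, 1 - P_SINCERE_IF_TRUE, 1 - P_SINCERE_IF_FALSE)

print(f"sincere advocate:   {after_sincere:.3f}")
print(f"insincere advocate: {after_insincere:.3f}")
```

With these assumed numbers, a sincere advocate nudges the credence up only slightly, while an insincere one pushes it down sharply, which is consistent with the point that the filter is better at disproving than proving.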

Then, the Adversarial Argument proceeds as follows:

- If you believe in X, you'll think that convincing me of it is inherently positive-value, regardless of whether you get paid for it.

- An easy way to credibly signal that you think this is to refuse payment; and yet you have not done that.

- Therefore, I am entitled to ignore your advocacy of X (or believe ¬X out of spite), since you don't seem to believe in it yourself.

(Note the similarity with the ancient texts quoted above!)

Of course, this argument can only disprove, not prove - there are plenty of sincere people out there advocating for contradictory things, and they can't all be right. But it does filter out a lot of chaff (empirical claim - is this true?), and will be the first recourse of a reasonable listener. If we on LessWrong think we have an important message to get across, we should therefore take care not to be filtered out as well.

I have understood it to be the experience of adversity. You are in pain and hate it; that is, you suffer. If you are in pain and like it, that is not suffering.

If you knew that a choice would lead to your suffering, you probably would not make it. Hence the main way problematic suffering is produced is by not seeing the connection between choices and outcomes: "I went to avoid pain and now I am in pain, where did I go wrong?" This kind of problem would arise even if the states you find worth seeking and avoiding were assigned arbitrarily at random, as long as you don't have an infinitely competent world model. The claim that some actions get you nearer to the arbitrary goals and some take you further from them would still hold. Even if the claim did not refer to the same states or concepts for different individuals, each of them evaluating it against their own arbitrary goals would still find that it checks out. Putting a needle into themselves makes one person suffer and another bliss out, which, compared to the boring option of not applying the needle, shows that not all actions are equal for goal acquisition.

So you might form a pro-needle or con-needle opinion and a corresponding strategy. But then you might encounter something else, like ice, to which the needle stuff is inapplicable. Still, there seems to be an innate capability to "know" whether suffering occurs, and this can be exercised before, and independently of, the formation of opinions or strategies. Thus you might believe that you are an ice-blisser but then in fact discover that you are an ice-sufferer. "Utility function" might mean the functionality of the black box that reveals this goal-ness perception, "We are in a bad experience right now". Or "utility function" might refer to the opinion that you profess, "I am the kind of person that blisses about ice". Over time your opinions tend to grow (they refer to more stuff and make finer distinctions). It is plausible, or at least imaginable, that the innate goal experiences remain constant (i.e. you don't have to constantly poke yourself with a needle to check that the categorization is still there, and late discoveries were there, dormant, even at the beginning).

Under this scheme, having "suffering" itself as the exposure component, instead of ice or the needle, would be a category error. "Suffering suffering" or "blissing on suffering" make limited sense (or are super fancy metastuff).

Opinions and strategies are not foolproof. If you use them to evaluate new candidate opinions and strategies, there is no good guarantee that the results will match your experiences. "Avoid ice" and "ice leads to suffering" are very comparable, and it is not as if the suffering form has some magical extra foothold. If you try to evaluate "hot stove leads to suffering" and you don't have a hot stove to touch, you can't torture that kind of information out of the term "suffering" appearing there.

The term "value" is problematically ambiguous between experiences and (opinions and strategies). "End of suffering" is not, at the object level, an opinion, a position, or a strategy.