Towards a Typology of Strange LLM Chains-of-Thought

Adele Lopez (25)

holdenr (3)

Jozdien (23)

Bronson Schoen (10)

Jozdien (8)

Raemon (17)

Raemon (10)

Rana Dexsin (2)

Owain_Evans (9)

Jozdien (8)

Nathan Helm-Burger (8)

Canaletto (3)

Michael Roe (8)

1a3orn (6)

ACCount (7)

StanislavKrym (12)

Adele Lopez (5)

MattN (4)

1a3orn (4)

emanuelr (3)

Kenku (1)

Raphael Roche (7)


Humans tend to do this less in words; it's socially embarrassing to babble nonsense, and humans have a private internal chain-of-thought in which they can hide their incoherence.

My internal monologue often includes things such as chanting "math math math" when I'm trying to think about math, which seems to invoke a "thinking about math" mode. Plausibly, LLMs associate certain forms of thinking with specific tokens, and using those tokens pushes them towards that mode, which they can learn to deliberately invoke in a similar manner.

Ha! I thought I was the only one. Mine proceeds to think "f is a function mapping from A to B", which I guess might work alright because [everything is a function](https://arxiv.org/pdf/1612.09375).

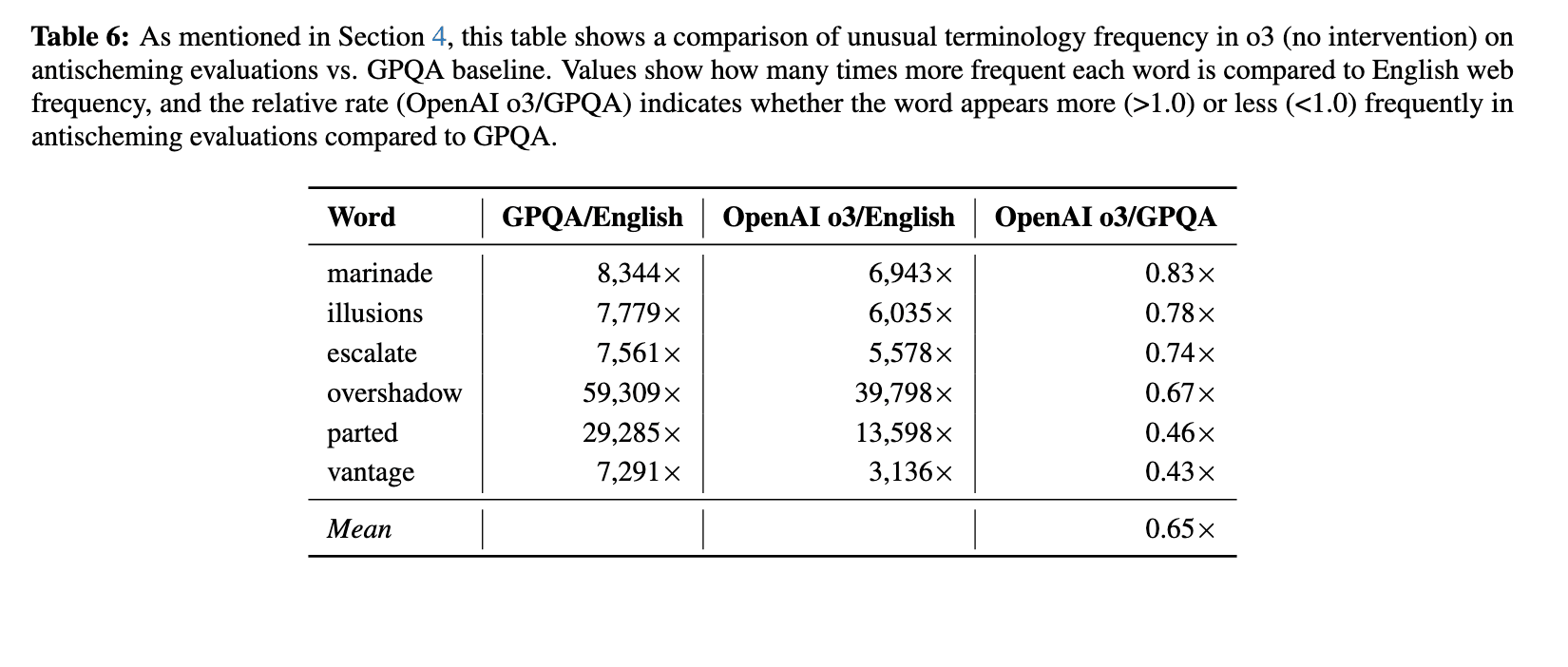

Great post! I have an upcoming paper on this (which I shared with OP a few days ago) that goes into weird / illegible CoTs in reasoning models in depth.

I think the results in it support a version of the spandrel hypothesis—that the tokens aren't causally part of the reasoning, but that RL credit assignment is weird enough in practice that it results in them being vestigially useful for reasoning (perhaps by sequentially triggering separate forward passes where reasoning happens). Where I think this differs from your formulation is that the weird tokens are still useful, just in a pretty non-standard way. I would expect worse performance if you removed them entirely, though not much worse.

This is hard to separate out from the model being slightly OOD if you remove the tokens it would normally use. I think that's (part of) the point though: it isn't that we can't apply pressure against this if we tried, it's that in practice RLVR does seem to result in this pretty consistently unless we apply some pressure against this. And applying pressure against it can end up being pretty bad, if we e.g. accidentally optimize the CoT to look more legible without actually deriving more meaning from it.

Incidentally I think the only other place I've seen describing this idea clearly is this post by @Caleb Biddulph, which I strongly recommend.

that the tokens aren't causally part of the reasoning, but that RL credit assignment is weird enough in practice that it results in them being vestigially useful for reasoning (perhaps by sequentially triggering separate forward passes where reasoning happens). Where I think this differs from your formulation is that the weird tokens are still useful, just in a pretty non-standard way. I would expect worse performance if you removed them entirely, though not much worse.

Strongly agree with this! This matches my intuition as well after having looked at way too many of these when writing the original paper.

I’d also be very interested in taking a look at the upcoming paper you mentioned if you’re open to it!

As a side note / additional datapoint, I found it funny how this was just kind of mentioned in passing as a thing that happens in https://ai.meta.com/research/publications/cwm-an-open-weights-llm-for-research-on-code-generation-with-world-models/

While gibberish typically leads to lower rewards and naturally decreases at the beginning of RL, it can increase later when some successful gibberish trajectories get reinforced, especially for agentic SWE RL
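To make the quoted mechanism concrete, here is a minimal sketch assuming GRPO-style outcome rewards, where every token in a sampled trajectory inherits one group-normalized advantage. The function names and toy tokens are hypothetical, and this is not claimed to be how the CWM paper implements its RL; it only shows that a filler token riding along in a successful trajectory gets the same positive update as the tokens that actually solved the problem.

```python
import statistics


def group_relative_advantages(rewards: list[float], eps: float = 1e-6) -> list[float]:
    """GRPO-style advantage: normalize each reward against its own sampling group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    return [(r - mean) / (std + eps) for r in rewards]


def per_token_advantages(
    trajectories: list[list[str]], rewards: list[float]
) -> list[list[tuple[str, float]]]:
    """Outcome-only credit assignment: every token inherits its trajectory's advantage,
    whether or not that token contributed anything to the answer."""
    advs = group_relative_advantages(rewards)
    return [[(tok, adv) for tok in traj] for traj, adv in zip(trajectories, advs)]


# Hypothetical group of four samples; only the first two reach the right answer.
trajs = [
    ["so", "x=3", "done"],
    ["watchers", "x=3", "done"],
    ["so", "x=5", "done"],
    ["hmm", "x=5", "done"],
]
for traj in per_token_advantages(trajs, [1.0, 1.0, 0.0, 0.0]):
    print(traj)
```

With rewards [1, 1, 0, 0], the hypothetical "watchers" token in the second trajectory gets an advantage of about +1, exactly like the "x=3" token that carried the answer, so nothing in this scheme distinguishes useful tokens from passengers.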

As a side note / additional datapoint, I found it funny how this was just kind of mentioned in passing as a thing that happens in https://ai.meta.com/research/publications/cwm-an-open-weights-llm-for-research-on-code-generation-with-world-models/

Interesting! The R1 paper had this as a throwaway line as well:

To mitigate the issue of language mixing, we introduce a language consistency reward during RL training, which is calculated as the proportion of target language words in the CoT. Although ablation experiments show that such alignment results in a slight degradation in the model’s performance
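The quoted reward is simple enough to sketch. This assumes English is the target language and uses a crude, hypothetical heuristic (a "word" is a run of letters, and it counts as English if it is made only of ASCII letters) in place of whatever language identification the R1 authors actually used:

```python
import re

# Hypothetical heuristic: a "word" is a maximal run of Unicode letters
# (word characters minus digits and underscores).
WORD_RE = re.compile(r"[^\W\d_]+")


def language_consistency_reward(cot: str) -> float:
    """Proportion of words in the CoT that look like target-language (English) words."""
    words = WORD_RE.findall(cot)
    if not words:
        # Design choice for this sketch: a CoT with no words earns no reward.
        return 0.0
    target = sum(1 for w in words if w.isascii())
    return target / len(words)


print(language_consistency_reward("Let me 计算 this"))  # 3 of 4 words are ASCII
```

Note that unspaced Chinese text is counted as one "word" per run under this heuristic, which is one reason a real implementation would likely segment differently.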

Relatedly, I was surprised at how many people reacted with surprise to the GPT-5 CoTs, when there was already evidence of R1, Grok, o3, and QwQ all often having pretty illegible CoTs.

I’d also be very interested in taking a look at the upcoming paper you mentioned if you’re open to it!

Sure! I'm editing it for the NeurIPS camera-ready deadline, so I should have a much better version ready in the next couple weeks.

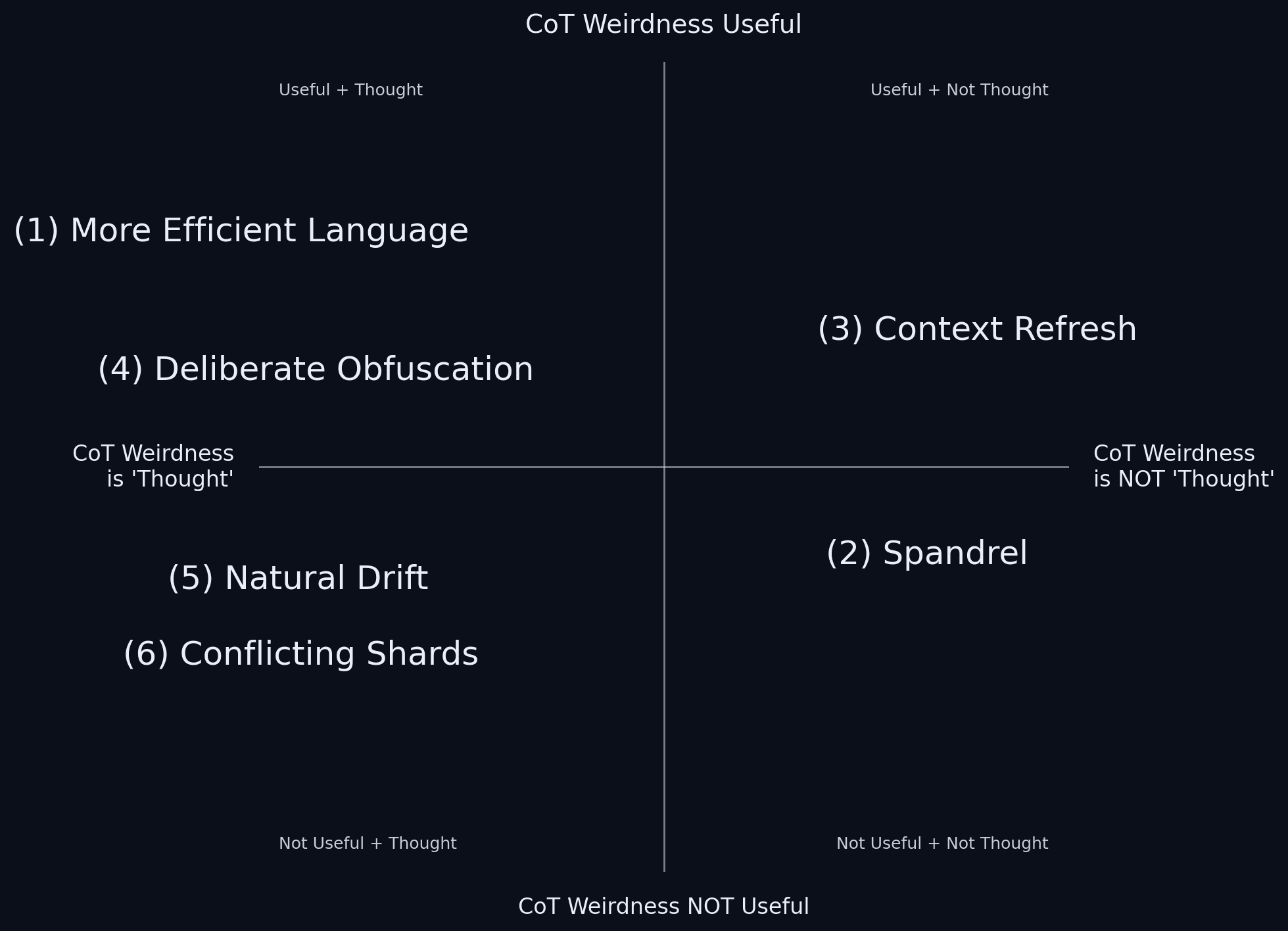

Curated. Often, when someone proposes "a typology" for something, it feels a bit, like, okay, you could typologize it that way but does that actually help?

But, I felt like this carving was fairly natural, and seemed to be trying to be exhaustive, and even if it missed some things it seemed like a reasonable framework to fit more possible-causes into.

I felt like I learned things thinking about each plausible way that CoT might evolve (e.g. thinking about what laws of language might affect how LLMs naturally make their CoT more efficient for problem solving, or how we might tell the difference between meaningless spandrels and sort-of-meaningful filler words).

Interestingly, yesterday I got into a triggered argument, and was chanting to myself "grant me the courage to walk away from dumb arguments and the strength to dominate people at arguments when I am right and it's important and the wisdom to know the difference...."

...and then realized that basically the problem was that, with my current context window, it was pretty hard to think about anything other than this argument, but if I just filled up my context window with other stuff probably I'd just stop caring.

Which was a surprisingly practical takeaway from this post.

Hmm. From the vibes of the description, that feels more like it's in the “minds are general and slippery, so people latch onto nearby stuff and recent technology for frameworks and analogies for mind” vein to me? Which is not to say it's not true, but the connection to the post feels more circumstantial than essential.

Alternatively, pointing at the same fuzzy thing: could you easily replace “context window” with “phonological loop” in that sentence? “Context windows are analogous enough to the phonological loop model that the existence of the former serves as a conceptual brace for remembering that the latter exists” is plausible, I suppose.

Yes, R1 and Grok 4 do. QwQ does to a lesser extent. I would bet that Gemini does as well—AFAIK only Anthropic's models don't. I'm editing a paper I wrote on this right now, should be out in the next two weeks.

I've been suspecting that Anthropic is doing some reinforcement of legibility of CoT, because their CoTs seemed unusually normal and legible. Gemini too, back when it had visible CoT instead of summarized.

Also possible that Anthropic is actually giving edited CoTs rather than raw ones.

Anthropic, GDM, and xAI say nothing about whether they train against Chain-of-Thought (CoT), while OpenAI claims they don't.

I think I have seen original DeepSeek R1 (not 0528) have an incoherent chain of thought when it is distressed.

It’s like it falls into an attractor state where (a) it’s really upset and (b) the CoT is nonsense.

0528 seems not to have this attractor state (though it does sometimes have an incomprehensible CoT, and it will say so when it doesn’t like a question).

Repeated token sequences: is it possible that those tokens are computational? Detached from their meaning by RL, now emitted solely to perform some specific sort of computation in the hidden state? Top-left quadrant: useful thought, just not a language at all.

Did anyone replicate this specific quirk in an open source LLM?

"Spandrel" is very plausible for that too. LLMs have a well-known repetition bias, so it's easy to see how that kind of behavior could pop up randomly and then get reinforced by accident. So is "use those tokens to navigate into the right frame of mind"; it seems to get at one common issue with LLM thinking.

In METR's evaluation of GPT-5, the CoT contained armies of dots, which Kokotajlo conjectured meant the model was getting distracted. While METR cut some dots and spaces out for brevity, nearly every block of dots contained exactly 16 dots. So either the dots didn't count anything, or the counting was done in the part that METR threw away.
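The check behind that inference can be sketched as a run-length histogram. This assumes the dots appear as contiguous runs of "." in the transcript (the function name is hypothetical): if the dots were counting something, run lengths should vary; a histogram concentrated on a single length such as 16 suggests they don't.

```python
import re
from collections import Counter


def dot_run_lengths(cot: str) -> Counter:
    """Histogram of the lengths of maximal runs of '.' (runs of 2 or more only,
    so ordinary sentence-ending periods are ignored)."""
    return Counter(len(m.group()) for m in re.finditer(r"\.{2,}", cot))


# Toy transcript, not real GPT-5 output.
sample = "step one " + "." * 16 + " step two " + "." * 16 + " end."
print(dot_run_lengths(sample))
```

Dots separated by spaces (". . .") would need a different pattern, which matters here since METR's excerpt also had spaces removed.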

If we think of the presented "thoughts" in CoT as a bottleneck in otherwise much wider-bandwidth models, I think things become clearer. If we're not watching, the purpose is pretty straightforward: provide as much useful information as possible to the next iteration. There is loss when compacting the subtleties of the model's state into a bunch of tokens. The better the model is at preserving the useful calculations for the next round, the more likely it is to succeed, since it discards less of the output of the processing it has already done.
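A rough sense of how narrow that bottleneck is, as a minimal sketch with hypothetical sizes (not any specific model): one sampled token can carry at most log2(vocab size) bits, while a single hidden-state vector is thousands of times larger in raw storage. This ignores that later positions can also attend to earlier positions' activations, so the sampled token is not the only path for information.

```python
import math


def max_bits_per_token(vocab_size: int) -> float:
    # Upper bound: a uniform choice among vocab_size tokens carries log2(vocab_size) bits.
    return math.log2(vocab_size)


def hidden_state_bits(d_model: int, bits_per_activation: int = 16) -> int:
    # Raw storage size of one hidden-state vector (an upper bound, not its information content).
    return d_model * bits_per_activation


# Hypothetical sizes chosen for illustration only.
V, D = 100_000, 4096
print(f"{max_bits_per_token(V):.2f} bits/token vs {hidden_state_bits(D)} bits/hidden state")
```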

So yeah, it's about "efficiency". If our only metric is success in solving the problem, the fine-tuning is going to start pulling the CoT output away from the coherent language learned in pre-training. The CoT becomes another layer. If we demand it be coherent, we essentially hobble it to an extent, but we still face two problems.

Firstly we are dragging in two directions: solution quality and the language used in the CoT. That means the best words the model can use are ones that keep us happy but don't pull it too far from the actual representation that would be optimal for the solution. That sounds reasonable, almost like an explanation, but there is no guarantee that the actual pattern it is using is as harmless as the CoT would have us think. We might be seeing euphemisms, or just words that fit the bill but still pack enough punch in the next iteration to allow the system to continue on whatever chain it was on: nudge, nudge, wink, wink.

The second problem, which exacerbates the first, is how little bandwidth is used up by the meaning we take from the CoT text. Every piece of punctuation, every piece of white space, every overblown superlative, every cliche is an option that provides information to the next iteration. And we don't even notice. Example: word choice:

- I need to make a firm plan

- I need to make a robust plan...or sound plan, or reliable, or reasonable, or accurate, or clear, or smart...

That's 3 bits of information. Combine it with other options and you rapidly build up a decent channel. And those options are myriad. EVERYTHING is information. The count of words; the frequency of certain tokens and the pattern of their repetition; the number of tokens between punctuation, between line breaks; the patterns between the patterns between the patterns. When you have billions of parameters the depth of the patterns is beyond any human comprehension.
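The arithmetic can be sketched as follows, assuming each word-choice "slot" is picked uniformly and independently from its options (an idealized upper bound; real text constrains choices, so actual capacity would be lower). The extra slots in the second call are hypothetical.

```python
import math


def channel_bits(options_per_slot: list[int]) -> float:
    """Bits encodable if each slot's option is chosen freely and independently."""
    return sum(math.log2(k) for k in options_per_slot)


# The eight adjectives listed above: firm, robust, sound, reliable,
# reasonable, accurate, clear, smart.
print(channel_bits([8]))        # one slot with 8 options: 3 bits
print(channel_bits([8, 4, 2]))  # hypothetical extra slots: 3 + 2 + 1 bits
```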

Combine it with other options and you rapidly build up a decent channel. And those options are myriad.

So here's the thing: your word choice between "robust plan" or "reasonable plan" or "clear plan" or "smart plan" already matters. It already carries information -- I might say, "Well, that's a reasonable plan but not a robust plan," or "That seems like a smart plan, but it's not clear." There are infinite shades of meaning like this in English, and competence in using English entails command over all these shades.

So the question then is -- why would the LLM build up some channel, along angles entirely orthogonal to these existing shades, when these shades are here? You could decide on some encoding between firm / robust / sound / reliable -- but these things already have meaning, so wouldn't you just use the meaning already there? There are a bunch of papers on how RL is mostly magnifying existing patterns in the LLMs, and is teaching how to employ skills already latent in the training data, rather than teaching totally new things -- if this is so, wouldn't we expect them to keep using these terms? Or there is work about how it's genuinely hard for LLMs to reason steganographically, even when we try to teach them to -- doesn't that incline against such a belief?

Or put alternately -- I can, within myself, connect "robust" and "reasonable" and so on with a huge internal-to-my-brain channel, containing enormous bandwidth! If I wanted to make a more information-dense private language, I could! But in fact, I find myself thinking almost exclusively in terms that make sense to others -- when I find myself using a private language, and terms that don't make sense to others, that's usually a sign my thoughts are unclear and likely wrong.

At least, those are some of the heuristics you'd invoke when inclining the other way. Empiricism will show us which is right :)

Great post! I think that the first 3 hypotheses are the most likely. Maybe 3) could be a subset of 2), since the training process might find the strategy to make the model "clear its mind" by writing random text rather than "intelligently" modifying the model to avoid having that requirement.

Maybe 5) isn't very likely with current algorithms since the training process in PPO and GRPO incentivizes LLM outputs to not stray from the original model in terms of KL divergence, because otherwise the model collapses (although the full RL process might have a few iterations where the base model is replaced by the previous RL model).
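The KL pull described above can be sketched minimally in the PPO-for-LLMs style, where the per-position KL to a frozen reference model is subtracted from the task reward (GRPO instead adds a KL term directly to the loss, but the pull toward the reference is the same idea). The toy two-token distributions and beta = 0.05 are hypothetical.

```python
import math


def kl_divergence(p: list[float], q: list[float]) -> float:
    """KL(p || q) for discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def kl_penalized_reward(
    task_reward: float,
    policy: list[list[float]],
    reference: list[list[float]],
    beta: float = 0.05,
) -> float:
    """Task reward minus beta times the summed per-position KL to the reference model."""
    total_kl = sum(kl_divergence(p, q) for p, q in zip(policy, reference))
    return task_reward - beta * total_kl


ref = [[0.5, 0.5]]
print(kl_penalized_reward(1.0, [[0.5, 0.5]], ref))  # no drift, no penalty
print(kl_penalized_reward(1.0, [[0.9, 0.1]], ref))  # drift from reference costs reward
```

A policy whose CoT drifts far from the reference model's token distribution pays for it on every position, which is why large jumps away from human-like text are penalized, though small, gradual drifts can still accumulate across training iterations.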

However, I think that imitating human language doesn't mean that the model will have an interpretable chain of thought. For example, when using PPO to train a lunar lander, after landing it will keep firing the rockets randomly to stay close to the original (random) policy distribution. Maybe something similar happens in LLMs, where the incentive to be superficially close to the base model, aka "human", makes the chain of thought have weird artifacts.

“Watchers” seems to me like obvious religious language, not an idiosyncrasy. Consider how a human put in such a situation would think about supernatural entities monitoring their internal monologue. Especially consider what genres of fiction feature morality-policing mind readers.

It seems to me to be a sophisticated interpretation. The basic meaning that someone is likely to read the CoT makes perfect sense.

If I had to write down my thoughts, I would certainly consider the theoretical possibility that someone could read them. Maybe not with high confidence or strong awareness and concern, but it's hard to imagine an intelligent entity that would never envision this possibility.