NVIDIA might be better positioned than any of the AI labs that typically come to mind to be the first to get to AGIs, or the first to scale up access to them.

They're already the world's highest-market-cap company, have huge and increasing quarterly income (and profit) streams, and can get access to the world's best AI hardware at literally the best price (their own production cost). Given that hardware access seems a far more constraining input than e.g. algorithms or data, once AI becomes much more valuable because it can replace larger portions of human workers, they should be highly motivated to use large numbers of GPUs themselves and train their own AGIs, rather than e.g. sell their GPUs and buy AGI access from competitors. This seems especially likely since poaching talented AGI researchers would probably (still) be much cheaper than building up the hardware required for the training runs (e.g. see Meta's recent hiring spree), and since access to compute is already an important factor in algorithmic progress and AIs will likely increasingly be able to substitute for top human researchers in driving that progress. Similarly, since AI software is a complementary good to the hardware they sell, they should be highly motivated to produce it in-house and sell it as a package with their hardware (rather than having to rely on AGI labs to build the software that makes the hardware useful).

This possibility seems to me wildly underconsidered/underdiscussed, at least in public.

'🚨 The annual report of the US-China Economic and Security Review Commission is now live. 🚨

Its top recommendation is for Congress and the DoD to fund a Manhattan Project-like program to race to AGI.

Buckle up...'

In the Reuters article they highlight Jacob Helberg: https://www.reuters.com/technology/artificial-intelligence/us-government-commission-pushes-manhattan-project-style-ai-initiative-2024-11-19/

He seems quite influential in this initiative and recently also wrote this post:

https://republic-journal.com/journal/11-elements-of-american-ai-supremacy/

Wikipedia has the following paragraph on Helberg:

“He grew up in a Jewish family in Europe.[9] Helberg is openly gay.[10] He married American investor Keith Rabois in a 2018 ceremony officiated by Sam Altman.”

Might this be an angle to understand the influence that Sam Altman has on recent developments in the US government?

I suspect current approaches probably significantly or even drastically under-elicit automated ML research capabilities.

I'd guess the average cost of producing a decent ML paper is at least $10k (in the West, at least) and probably closer to hundreds of thousands of dollars.

In contrast, Sakana's AI scientist cost on average $15/paper and $0.50/review. PaperQA2, which claims superhuman performance at some scientific Q&A and lit review tasks, costs something like $4/query. Other papers claiming human-range performance on ideation or reviewing probably also have costs of <$10 per idea or review.
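To put the gap in rough numbers, here is a back-of-the-envelope sketch using only the figures above. The human range ($10k to $100k per paper) is a guess, not measured data, and the code is nothing more than that arithmetic.

```python
# Back-of-the-envelope cost ratios using the (rough, guessed) figures from the text.
HUMAN_COST_PER_PAPER_LOW = 10_000    # USD, lower-end guess for a decent ML paper
HUMAN_COST_PER_PAPER_HIGH = 100_000  # USD, upper-end guess
AI_SCIENTIST_COST_PER_PAPER = 15     # USD, Sakana's reported average


def cost_ratio(human_cost: float, ai_cost: float) -> float:
    """How many times more a human-produced paper costs than an automated one."""
    return human_cost / ai_cost


low = cost_ratio(HUMAN_COST_PER_PAPER_LOW, AI_SCIENTIST_COST_PER_PAPER)
high = cost_ratio(HUMAN_COST_PER_PAPER_HIGH, AI_SCIENTIST_COST_PER_PAPER)
print(f"human/AI cost ratio: ~{low:,.0f}x to ~{high:,.0f}x")
```

Under these guesses, spending a few hundred times more on inference per paper would still stay below the human-cost baseline.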

Even the automated ML R&D benchmarks from METR or UK AISI don't give me anything like the vibe of coming close to what e.g. a 100-person team at OpenAI could accomplish in 1 year, if they tried really hard to automate ML.

A fairer comparison would probably involve actually trying hard to build the kind of scaffold that could use ~$10k in inference costs productively. I suspect the resulting agent would probably not do much better than with $100 of inference, but it seems hard to be confident. And it seems harder still to be confident about what will happen even in just 3 years' time, give...

In contrast, Sakana's AI scientist cost on average $15/paper and $0.50/review.

The Sakana AI stuff is basically total bogus, as I've pointed out on like 4 other threads (and as Scott Alexander also recently pointed out). It does not produce anything close to fully formed scientific papers. Its output is really not better than just prompting o1 yourself. Of course, o1 and even Sonnet and GPT-4 are very impressive, but there is no update to be made once you've played around with those.

I agree that ML capabilities are under-elicited, but the Sakana AI stuff really is very little evidence on that, besides someone being good at marketing and setting up some scaffolding that produces fake prestige signals.

I completely agree, and we should just obviously build an organization around this: one that automates alignment research while also getting a better grasp on maximum current capabilities (and a better picture of how we expect them to grow).

(This is my intention, and I have had conversations with Bogdan about this, but I figured I'd make it more public in case anyone has funding or ideas they would like to share.)

Eliezer (among others in the MIRI mindspace) has this whole spiel about human kindness/sympathy/empathy/prosociality being contingent on specifics of the human evolutionary/cultural trajectory, e.g. https://twitter.com/ESYudkowsky/status/1660623336567889920 and about how gradient descent is supposed to be nothing like that https://twitter.com/ESYudkowsky/status/1660623900789862401. I claim that the same argument (about evolutionary/cultural contingencies) could be made about e.g. image aesthetics/affect, and this hypothesis should lose many Bayes points when we observe concrete empirical evidence of gradient descent leading to surprisingly human-like aesthetic perceptions/affect, e.g. The Perceptual Primacy of Feeling: Affectless machine vision models robustly predict human visual arousal, valence, and aesthetics; Towards Disentangling the Roles of Vision & Language in Aesthetic Experience with Multimodal DNNs; Controlled assessment of CLIP-style language-aligned vision models in prediction of brain & behavioral data; Neural mechanisms underlying the hierarchical construction of perceived aesthetic value.

12/10/24 update: more, and in my view even somewhat methodologically s...

The first automatically produced, (human) peer-reviewed, (ICLR) workshop-accepted[/able] AI research paper: https://sakana.ai/ai-scientist-first-publication/

This is a very low-quality paper.

Basically, the paper does the following:

- A 1-layer LSTM gets inputs of the form [operand 1][operator][operand 2], e.g. `1+2` or `3*5`

- It is trained (I think with a regression loss? but it's not clear[1]) to predict the numerical result of the binary operation

- The paper proposes an auxiliary loss that is supposed to improve "compositionality."

- As described in the paper, this loss is the average squared difference between successive LSTM hidden states

- But in the actual code, it is instead the average squared difference between successive input embeddings

- The paper finds (unsurprisingly) that this extra loss doesn't help on the main task[2], while making various other errors and infelicities along the way

- e.g. there's train-test leakage, and (hilariously) it doesn't cite the right source for the LSTM architecture[3]
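To make the described-vs-implemented discrepancy concrete, here is a minimal numpy sketch. Assumptions: "average squared difference" is read as the mean squared L2 distance between consecutive vectors, a plain tanh RNN stands in for the 1-layer LSTM purely to keep the example short, and the vocabulary and sizes are hypothetical. What it shows: the loss on hidden states depends on the recurrent weights, while the same loss on input embeddings never sees them, so it cannot regularize the recurrence at all (it can only pull the embeddings of successive tokens together).

```python
import numpy as np


def successive_sq_diff(states: np.ndarray) -> float:
    """Mean squared L2 distance between consecutive rows of a (T, d) array."""
    diffs = states[1:] - states[:-1]  # shape (T-1, d)
    return float(np.mean(np.sum(diffs ** 2, axis=-1)))


def tanh_rnn(x: np.ndarray, W: np.ndarray, U: np.ndarray) -> np.ndarray:
    """Toy recurrent stand-in for the LSTM (a plain tanh RNN, NOT an LSTM).

    Returns the hidden state after each input step, shape (T, d).
    """
    h = np.zeros(W.shape[0])
    hs = []
    for x_t in x:
        h = np.tanh(W @ h + U @ x_t)
        hs.append(h)
    return np.stack(hs)


rng = np.random.default_rng(0)
vocab = {"1": 0, "2": 1, "3": 2, "5": 3, "+": 4, "*": 5}  # hypothetical tokenization
d = 4
embed = rng.normal(size=(len(vocab), d))  # embedding table shared by both variants
x = embed[[vocab[c] for c in "1+2"]]      # (3, d) input embeddings for "1+2"

U = rng.normal(size=(d, d)) * 0.5
W_a = rng.normal(size=(d, d)) * 0.5       # two different recurrent weight settings
W_b = rng.normal(size=(d, d)) * 0.5

# Loss as described in the paper: changes when the recurrent weights change.
hidden_a = successive_sq_diff(tanh_rnn(x, W_a, U))
hidden_b = successive_sq_diff(tanh_rnn(x, W_b, U))

# Loss as reportedly implemented: a function of the embeddings only.
emb_loss = successive_sq_diff(x)

print(hidden_a, hidden_b, emb_loss)
```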

The theoretical justification presented for the "compositional loss" is very brief and unconvincing. But if I read into it a bit, I can see why it might make sense for the loss described in the paper (on hidden states).

This could regularize an LSTM to produce something closer to a simple sum or average of the input embe...

I'm skeptical.

Did the Sakana team publish the code that their scientist agent used to write the compositional regularization paper? The post says

For our choice of workshop, we believe the ICBINB workshop is a highly relevant choice for the purpose of our experiment. As we wrote in the main text, we selected this workshop because of its broader scope, challenging researchers (and our AI Scientist) to tackle diverse research topics that address practical limitations of deep learning, unlike most workshops with a narrow focus on one topic.

This workshop focuses particularly on understanding limitations of deep learning methods applied to real world problems, and encourages participants to study negative experimental outcomes. Some may criticize our choice of a workshop that encourages discussion of “negative results” (implying that papers discussing negative results are failed scientific discoveries), but we disagree, and we believe this is an important topic.

and while it is true that "negative results" are important to report, "we report a negative result because our AI agent put forward a reasonable and interesting hypothesis, competently tested the hypothesis, and found that the hyp...

Contra both the 'doomers' and the 'optimists' on (not) pausing. Rephrased: RSPs (done right) seem right.

Contra 'doomers'. Oversimplified, 'doomers' (e.g. PauseAI, FLI's letter, Eliezer) ask(ed) for pausing now or even earlier (e.g. the Pause Letter). I expect this would be / would have been very much suboptimal, even purely in terms of solving technical alignment. For example, Some thoughts on automating alignment research suggests that timing the pause so that we can use automated AI safety research could result in '[...] each month of lead that the leader started out with would correspond to 15,000 human researchers working for 15 months.' We clearly don't have such automated AI safety R&D capabilities now, which suggests that pausing later, when AIs are closer to having the required automated AI safety R&D capabilities, would be better. At the same time, current models seem very unlikely to be x-risky (e.g. they're still very bad at passing dangerous capabilities evals), which is another reason to think pausing now would be premature.
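For a sense of scale, the quoted exchange rate can be converted into researcher-years. This is a sketch that simply takes the quote at face value and additionally assumes (my simplification) that the conversion stays linear in the length of the lead.

```python
# Convert the quoted exchange rate into total human-researcher-time.
RESEARCHERS_PER_MONTH_OF_LEAD = 15_000  # from the quoted post (under its assumptions)
MONTHS_OF_WORK = 15                      # from the quoted post


def researcher_years(months_of_lead: float) -> float:
    """Researcher-years implied by a given lead, ASSUMING linear scaling in lead time."""
    researcher_months = months_of_lead * RESEARCHERS_PER_MONTH_OF_LEAD * MONTHS_OF_WORK
    return researcher_months / 12


for lead in (1, 3, 6):
    print(f"{lead} month(s) of lead ~ {researcher_years(lead):,.0f} researcher-years")
```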

Contra 'optimists'. I'm more unsure here, but the vibe I'm getting from e.g. AI Pause Will Likely Backfire (Guest Post) is roughly something like 'no paus...

Hot take, though increasingly moving towards lukewarm: if you want to get a pause/international coordination on powerful AI (which would probably be net good, though likely it would strongly depend on implementation details), arguments about risks from destabilization/power dynamics and potential conflicts between various actors are probably both more legible and 'truer' than arguments about technical intent misalignment and loss of control (especially for not-wildly-superhuman AI).

arguments about risks from destabilization/power dynamics and potential conflicts between various actors are probably both more legible and 'truer'

Say more?

I think the general impression of people on LW is that multipolar scenarios and concerns over "which monkey finds the radioactive banana and drags it home" are in large part a driver of AI racing instead of being a potential impediment/solution to it. Individuals, companies, and nation-states justifiably believe that whichever one of them accesses potentially superhuman AGI first will have the capacity to flip the gameboard at-will, obtain power over the entire rest of the Earth, and destabilize the currently-existing system. Standard game theory explains the final inferential step for how this leads to full-on racing (see the recent U.S.-China Commission's report for a representative example of how this plays out in practice).

I get that we'd like to all recognize this problem and coordinate globally on finding solutions, by "mak[ing] coordinated steps away from Nash equilibria in lockstep". But I would first need to see an example, a prototype, of how this can play out in practice on an important and highly salient issue....

Quick take on o1: overall, it's been a pretty good day. Likely still sub-ASL-3, (opaque) scheming still seems very unlikely because the prerequisites still don't seem there. CoT-style inference compute playing a prominent role in the capability gains is pretty good for safety, because it's differentially transparent. Gains on math and code suggest these models are getting closer to being usable for automated safety research (also for automated capabilities research, unfortunately).

For some perspective:

'New data centers put Stargate ahead of schedule to secure full $500 billion, 10-gigawatt commitment by end of 2025.' https://openai.com/index/five-new-stargate-sites/

'One estimate puts total funding for AI safety research at only $80-130 million per year over the 2021-2024 period.' https://www.schmidtsciences.org/safetyscience/#:~:text=One%20estimate%20puts%20total%20funding,period%20(LessWrong%2C%202024)

Gemini 2.0 Flash Thinking is claimed to 'transparently show its thought process' (in contrast to o1, which only shows a summary): https://x.com/denny_zhou/status/1869815229078745152. This might be at least a bit helpful in terms of studying how faithful (e.g. vs. steganographic, etc.) the Chains of Thought are.

QwQ-32B-Preview was released open-weights and seems comparable to o1-preview. Unless they're gaming the benchmarks, I find it both pretty impressive and quite shocking that a 32B model can achieve this level of performance. Seems like great news vs. opaque (e.g. in one-forward-pass) reasoning. Less good with respect to proliferation (there don't seem to be any [deep] algorithmic secrets), misuse and short timelines.

I think they meant that as an analogy to how developed/sophisticated it was (ie they're saying that it's still early days for reasoning models and to expect rapid improvement), not that the underlying model size is similar.

IIRC OAers also said somewhere (doesn't seem to be in the blog post, so maybe this was on Twitter?) that o1 or o1-preview was initialized from a GPT-4 (a GPT-4o?), so that would also rule out a literal parameter-size interpretation (unless OA has really brewed up some small models).

Like transformers, SSMs like Mamba also have weak single forward passes: The Illusion of State in State-Space Models (summary thread). As suggested previously in The Parallelism Tradeoff: Limitations of Log-Precision Transformers, this may be due to a fundamental tradeoff between parallelizability and expressivity:

'We view it as an interesting open question whether it is possible to develop SSM-like models with greater expressivity for state tracking that also have strong parallelizability and learning dynamics, or whether these different goals are fundamentally at odds, as Merrill & Sabharwal (2023a) suggest.'
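To make "state tracking" concrete: a canonical hard case in this line of work is composing a long sequence of permutations (e.g. of 5 elements) and reporting the final arrangement. The sketch below is my own illustration, not code from either paper. It computes the answer two ways: a sequential scan (serial steps grow linearly with sequence length) and a balanced tree reduction, which is valid only because composition is associative (depth grows like log of the length). Either way, the number of serial steps grows with input length, whereas a single forward pass of a fixed-depth model does not; roughly, the papers' argument is that under standard complexity conjectures (TC0 ≠ NC1) fixed-depth log-precision transformers and these SSMs cannot close that gap.

```python
import random


def compose(p, q):
    """Permutation 'first p, then q' on range(n): i -> q[p[i]]."""
    return tuple(q[p[i]] for i in range(len(p)))


def track_sequential(perms):
    """Left-to-right scan: one serial step per input permutation."""
    state = tuple(range(len(perms[0])))  # start from the identity
    for p in perms:
        state = compose(state, p)
    return state


def track_tree(perms):
    """Balanced tree reduction; valid because composition is associative.

    Recursion depth is about log2(len(perms)).
    """
    if len(perms) == 1:
        return perms[0]
    mid = len(perms) // 2
    return compose(track_tree(perms[:mid]), track_tree(perms[mid:]))


rng = random.Random(0)
n_elems, length = 5, 64
perms = []
for _ in range(length):
    p = list(range(n_elems))
    rng.shuffle(p)
    perms.append(tuple(p))

assert track_sequential(perms) == track_tree(perms)
print(track_sequential(perms))
```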

'Data movement bottlenecks limit LLM scaling beyond 2e28 FLOP, with a "latency wall" at 2e31 FLOP. We may hit these in ~3 years. Aggressive batch size scaling could potentially overcome these limits.' https://epochai.org/blog/data-movement-bottlenecks-scaling-past-1e28-flop

The post argues that there is a latency limit at 2e31 FLOP, and I've found it useful to put this scale into perspective.

Current public models such as Llama 3 405B are estimated to be trained with ~4e25 FLOP, so such a model would require 500,000x more compute. Since Llama 3 405B was trained with 16,000 H100 GPUs, the model would require 8 billion H100 GPU equivalents, at a cost of $320 trillion with H100 pricing (or ~$100 trillion if we use B200s). Perhaps future hardware would reduce these costs by an order of magnitude, but this is cancelled out by another factor: the 2e31 limit assumes a training time of only 3 months. If we were to build such a system over several years and had the patience to wait an additional 3 years for the training run to complete, this would push the latency limit out by another order of magnitude. So at the point where we are bound by the latency limit, we are either investing a significant percentage of world GDP into the project, or we have already reached ASI at a smaller scale of compute and are using it to dramatically reduce compute costs for successor models.
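The arithmetic above can be reproduced in a few lines. A sketch using only the comment's own figures; the per-H100 cost of $40k is not a quoted price but the value implied by dividing $320 trillion by 8 billion GPUs, and the GPU count implicitly assumes the same training duration as Llama 3 405B.

```python
import math

# Figures taken from the comment above.
LATENCY_WALL_FLOP = 2e31
LLAMA3_405B_FLOP = 4e25      # estimated training compute
LLAMA3_405B_GPUS = 16_000    # H100s used for Llama 3 405B
H100_COST_USD = 40_000       # ASSUMED: implied by $320T / 8e9 GPUs, not a quoted price

scale_up = LATENCY_WALL_FLOP / LLAMA3_405B_FLOP   # ~500,000x
gpus_needed = LLAMA3_405B_GPUS * scale_up          # same training duration assumed
cost_usd = gpus_needed * H100_COST_USD

print(f"scale-up: {scale_up:,.0f}x (~{math.log10(scale_up):.1f} OOMs)")
print(f"H100-equivalents: {gpus_needed:.1e}")
print(f"cost: ${cost_usd / 1e12:,.0f} trillion")

# Stretching the run from 3 months to 3 months + 3 years (36 months):
duration_factor = (3 + 36) / 3  # 13x, i.e. roughly one order of magnitude
print(f"longer run buys ~{duration_factor:.0f}x more compute at fixed hardware")
```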

Of course, none of this analysis applies to the earlier data movement limit of 2e28 FLOP, which I think is more relevant and interesting.

Quick take: on the margin, a lot more research should probably be going into trying to produce benchmarks/datasets/evaluation methods for safety (than more directly doing object-level safety research).

Some past examples I find valuable - in the case of unlearning: WMDP, Eight Methods to Evaluate Robust Unlearning in LLMs; in the case of mech interp - various proxies for SAE performance, e.g. from Scaling and evaluating sparse autoencoders, as well as various benchmarks, e.g. FIND: A Function Description Benchmark for Evaluating Interpretability Methods. Prizes and RFPs seem like a potentially scalable way to do this - e.g. https://www.mlsafety.org/safebench - and I think they could be particularly useful on short timelines.

This holds even relative to doing the full stack of AI safety work, because I expect a lot of such work could soon be done by automated safety researchers, given the right benchmarks/datasets/evaluation methods. These could also make it much easier to evaluate the work of automated safety researchers and to potentially reduce the need for human feedback, which could be a significant bottleneck.

Better proxies could also make it easier to productively deploy ...

- Shenzhen team completed a working prototype of a EUV machine in early 2025, sources say

- The lithography machine, built by former ASML engineers, fills a factory floor, sources say

- China's EUV machine is undergoing testing, and has not produced working chips, sources say

- Government is targeting 2028 for working chips, but sources say 2030 is more likely'

Success at currently-researched generalized inference scaling laws might risk jeopardizing some of the fundamental assumptions of current regulatory frameworks.

- the o1 results have illustrated specialized inference scaling laws, for model capabilities in some specialized domains (e.g. math); notably, these don't seem to hold across all domains - e.g. o1 doesn't seem better than GPT-4o at writing;

- there's ongoing work at OpenAI to make generalized inference scaling work;

- e.g. this could perhaps (though maybe somewhat overambitiously) be framed, in the language of https://epochai.org/blog/trading-off-compute-in-training-and-inference, as there no longer being an upper bound on how many OOMs of inference compute can be traded for equivalent OOMs of pretraining;

- to the best of my knowledge, current regulatory frameworks/proposals (e.g. the EU AI Act, the Executive Order, SB 1047) frame the capabilities of models in terms of (pre)training compute and maybe fine-tuning compute (e.g. if (pre)training FLOP > 1e26, the developer needs to take various measures), without any similar requirements framed in terms of inference compute; so current regulatory frameworks seem unprepared f
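A toy sketch of the gap described in this list. Everything here is hypothetical: the rule is a simplified compute-threshold check (not the text of any specific law), and the 1:1 OOM exchange rate with no upper bound is just one reading of the speculative "generalized inference scaling" scenario above.

```python
TRAINING_FLOP_THRESHOLD = 1e26  # illustrative threshold, as in the example above


def triggers_requirements(pretraining_flop: float, finetuning_flop: float = 0.0,
                          inference_flop_per_task: float = 0.0) -> bool:
    """Hypothetical compute-threshold rule in which only training compute counts.

    inference_flop_per_task is accepted but ignored, mirroring the gap described above.
    """
    return pretraining_flop + finetuning_flop > TRAINING_FLOP_THRESHOLD


def effective_training_flop(pretraining_flop: float, extra_inference_ooms: float,
                            exchange_rate: float = 1.0) -> float:
    """Toy model: each extra OOM of inference compute buys `exchange_rate` OOMs of
    pretraining-equivalent compute, with NO upper bound (an assumption)."""
    return pretraining_flop * 10 ** (exchange_rate * extra_inference_ooms)


base = 3e25
print(triggers_requirements(base, inference_flop_per_task=1e30))  # rule not triggered
print(f"{effective_training_flop(base, 2):.1e}")                   # yet ~3e27 'effective'
```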

I think this one (and perhaps better operationalizations) should probably have many eyes on it:

Jack Clark: 'Registering a prediction: I predict that within two years (by July 2026) we'll see an AI system beat all humans at the IMO, obtaining the top score. Alongside this, I would wager we'll see the same thing - an AI system beating all humans in a known-hard competition - in another scientific domain outside of mathematics. If both of those things occur, I believe that will present strong evidence that AI may successfully automate large chunks of scientific research before the end of the decade.' https://importai.substack.com/p/import-ai-380-distributed-13bn-parameter

(cross-posted from https://x.com/BogdanIonutCir2/status/1844451247925100551, among others)

I'm concerned things might move much faster than most people expected, because of automated ML (figure from OpenAI's recent MLE-bench: Evaluating Machine Learning Agents on Machine Learning Engineering, basically showing automated ML engineering performance scaling as more inference compute is used):

I find it pretty wild that automating AI safety R&D, which seems to me like the best shot we currently have at solving the full superintelligence control/alignment problem, no longer seems to have any well-resourced, vocal, public backers (with the superalignment team disbanded).

I think Anthropic is becoming this org. Jan Leike just tweeted:

https://x.com/janleike/status/1795497960509448617

I'm excited to join @AnthropicAI to continue the superalignment mission!

My new team will work on scalable oversight, weak-to-strong generalization, and automated alignment research.

If you're interested in joining, my dms are open.

from https://jack-clark.net/2024/08/18/import-ai-383-automated-ai-scientists-cyborg-jellyfish-what-it-takes-to-run-a-cluster/…, commenting on https://arxiv.org/abs/2408.06292: 'Why this matters – the taste of automated science: This paper gives us a taste of a future where powerful AI systems propose their own ideas, use tools to do scientific experiments, and generate results. At this stage, what we have here is basically a ‘toy example’ with papers of dubious quality and insights of dubious import. But you know where we were with language models five yea...

(cross-posted from https://x.com/BogdanIonutCir2/status/1842914635865030970)

maybe there is some politically feasible path to ML training/inference killswitches in the near-term after all: https://www.datacenterdynamics.com/en/news/asml-adds-remote-kill-switch-to-tsmcs-euv-machines-in-case-china-invades-taiwan-report/

On an apparent missing mood - FOMO on all the vast amounts of automated AI safety R&D that could (almost already) be produced safely

Automated AI safety R&D could result in vast amounts of work produced quickly. E.g. from Some thoughts on automating alignment research (under certain assumptions detailed in the post):

each month of lead that the leader started out with would correspond to 15,000 human researchers working for 15 months.

Despite this promise, we seem not to have much knowledge about when such automated AI safety R&D might happ...

If this generalizes, OpenAI's Orion, rumored to be trained on synthetic data produced by O1, might see significant gains not just in STEM domains, but more broadly - from O1 Replication Journey -- Part 2: Surpassing O1-preview through Simple Distillation, Big Progress or Bitter Lesson?:

'this study reveals how simple distillation from O1's API, combined with supervised fine-tuning, can achieve superior performance on complex mathematical reasoning tasks. Through extensive experiments, we show that a base model fine-tuned on simply tens of thousands of sampl...

(crossposted from X/twitter)

Epoch is one of my favorite orgs, but I expect many of the predictions in https://epochai.org/blog/interviewing-ai-researchers-on-automation-of-ai-rnd to be overconservative / too pessimistic. I expect roughly a similar scaleup in terms of compute as https://x.com/peterwildeford/status/1825614599623782490… - training runs ~1000x larger than GPT-4's in the next 3 years - and massive progress in both coding and math (e.g. along the lines of the medians in https://metaculus.com/questions/6728/ai-wins-imo-gold-medal/… https://metacu...

(cross-posted from X/twitter)

The already-feasibility of https://sakana.ai/ai-scientist/ (with basically non-x-risky systems, sub-ASL-3, and bad at situational awareness so very unlikely to be scheming) has updated me significantly on the tractability of the alignment / control problem. More than ever, I expect it's gonna be relatively tractable (if done competently and carefully) to safely, iteratively automate parts of AI safety research, all the way up to roughly human-level automated safety research (using LLM agents roughly-shaped like the AI scientist...

I think currently approximately no one is working on the kind of safety research that when scaled up would actually help with aligning substantially smarter than human agents, so I am skeptical that the people at labs could automate that kind of work (given that they are basically doing none of it). I find myself frustrated with people talking about automating safety research, when as far as I can tell we have made no progress on the relevant kind of work in the last ~5 years.

Change my mind: outer alignment will likely be solved by default for LLMs. Brain-LM scaling laws (https://arxiv.org/abs/2305.11863) + LM embeddings as model of shared linguistic space for transmitting thoughts during communication (https://www.biorxiv.org/content/10.1101/2023.06.27.546708v1.abstract) suggest outer alignment will be solved by default for LMs: we'll be able to 'transmit our thoughts', including alignment-relevant concepts (and they'll also be represented in a [partially overlapping] human-like way).

Prototype of LLM agents automating the full AI research workflow: The AI Scientist: Towards Fully Automated Open-Ended Scientific Discovery.

And already some potential AI safety issues: 'We have noticed that The AI Scientist occasionally tries to increase its chance of success, such as modifying and launching its own execution script! We discuss the AI safety implications in our paper.

For example, in one run, it edited the code to perform a system call to run itself. This led to the script endlessly calling itself. In another case, its experiments took too ...

RSPs for automated AI safety R&D require rethinking RSPs

AFAICT, all current RSPs are only framed negatively, in terms of [prerequisites to] dangerous capabilities to be detected (early) and mitigated.

In contrast, RSPs for automated AI safety R&D will likely require measuring [prerequisites to] capabilities for automating [parts of] AI safety R&D, and preferentially (safely) pushing these forward. An early example might be safely automating some parts of mechanistic interpretability.

(Related: On an apparent missing mood - FOMO on all ...

A brief list of resources with theoretical results which seem to imply RL is much more difficult (e.g. sample efficiency-wise) than IL - imitation learning (I don't feel like I have enough theoretical RL expertise or time to closely scrutinize the arguments, but would love for others to pitch in). Probably at least somewhat relevant w.r.t. discussions of what the first AIs capable of obsoleting humanity could look like:

Paper: Is a Good Representation Sufficient for Sample Efficient Reinforcement Learning? (quote: 'This work shows that, from the statisti...

quick take: Against Almost Every Theory of Impact of Interpretability should be required reading for ~anyone starting in AI safety (e.g. it should be in the AGISF curriculum), especially if they're considering any model internals work (and of course even more so if they're specifically considering mech interp)

56% on SWE-bench Lite with repeated sampling (13 percentage points above the previous SOTA; up from 15.9% with one sample to 56% with 250 samples), with a model well below SOTA https://arxiv.org/abs/2407.21787; anything automatically verifiable (large chunks of math and coding) seems like it's gonna be automatable in < 5 years.
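Numbers like "56% with 250 samples" are coverage: the fraction of problems solved by at least one sample, given a verifier that recognizes a correct one (here, the tests). Coverage is commonly computed with the unbiased pass@k estimator from the Codex paper (Chen et al., 2021); I'm assuming, not checking, that the linked paper does the same. A minimal sketch, with hypothetical per-problem results:

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased estimate of P(at least one of k samples is correct),
    given n samples of which c were correct (Chen et al., 2021)."""
    if n - c < k:
        return 1.0  # every size-k subset contains a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical per-problem results: (samples drawn, samples that passed the tests).
results = [(250, 0), (250, 3), (250, 40), (250, 250)]
for k in (1, 250):
    coverage = sum(pass_at_k(n, c, k) for n, c in results) / len(results)
    print(f"coverage@{k}: {coverage:.3f}")
```

Hard-but-occasionally-solved problems (like the one with 3/250 correct) contribute almost nothing at k=1 but count fully at k=250, which is why automatic verification matters so much here.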

(epistemic status: quick take, as the post category says)

Browsing through EAG London attendees' profiles and seeing what seems like way too many people / orgs doing (I assume dangerous capabilities) evals. I expect a huge 'market downturn' on this, since I can hardly see how there would be so much demand for dangerous capabilities evals in a couple years' time / once some prominent orgs like the AISIs build their sets, which many others will probably copy.

While at the same time other kinds of evals (e.g. alignment, automated AI safety R&D, even control) seem wildly neglected.

GPT-5.1-Codex-Max (only) being on trend on METR's task horizon eval, despite being 'trained on agentic tasks across software engineering, math, research', and being recommended for (less general) use 'only for agentic coding tasks in Codex or Codex-like environments', seems like very significant further evidence vs. trend breaks from quickly massively scaling up RL on agentic software engineering.

Epistemic status: at least somewhat rant-mode.

I find it pretty ironic that many in AI risk mitigation would make asks for if-then commitments/RSPs from the top AI capabilities labs, but they won't make the same asks for AI safety orgs/funders. E.g.: if you're an AI safety funder, what kind of evidence ('if') will make you accelerate how much funding you deploy per year ('then')?

One of these types of orgs is developing a technology with the potential to kill literally all of humanity. The other type of org is funding research that if it goes badly mostly just wasted their own money. Of course the demands for legibility and transparency should be different.

News about an apparent shift in focus to inference scaling laws at the top labs: https://www.reuters.com/technology/artificial-intelligence/openai-rivals-seek-new-path-smarter-ai-current-methods-hit-limitations-2024-11-11/

I think the journalists might have misinterpreted Sutskever, if the quote provided in the article is the basis for the claim about plateauing:

Ilya Sutskever ... told Reuters recently that results from scaling up pre-training - the phase of training an AI model that uses a vast amount of unlabeled data to understand language patterns and structures - have plateaued.

“The 2010s were the age of scaling, now we're back in the age of wonder and discovery once again. Everyone is looking for the next thing,” Sutskever said. “Scaling the right thing matters more now than ever.”

What he's likely saying is that there are new algorithmic candidates for making even better use of scaling. It's not that scaling LLM pre-training plateaued, but rather other things became available that might be even better targets for scaling. Focusing on these alternatives could be more impactful than focusing on scaling of LLM pre-training further.

He's also currently motivated to air such implications, since his SSI only has $1 billion, which might buy a 25K H100s cluster, while OpenAI, xAI, and Meta recently got 100K H100s clusters (Google and Anthropic likely have that scale of compute as well, or will immi...

(cross-posted from https://x.com/BogdanIonutCir2/status/1844787728342245487)

the plausibility of this strategy, to 'endlessly trade computation for better performance' and then have very long/parallelized runs, is precisely one of the scariest aspects of automated ML; even more worrying that it's precisely what some people in the field are gunning for, and especially when they're directly contributing to the top auto ML scaffolds; although, everything else equal, it might be better to have an early warning sign, than to have a large inference overhang: http...

(still) speculative, but I think the pictures of Shard Theory, activation engineering and Simulators (and e.g. Bayesian interpretations of in-context learning) are looking increasingly similar: https://www.lesswrong.com/posts/dqSwccGTWyBgxrR58/turntrout-s-shortform-feed?commentId=qX4k7y2vymcaR6eio

https://www.lesswrong.com/posts/dqSwccGTWyBgxrR58/turntrout-s-shortform-feed#SfPw5ijTDi6e3LabP

on unsupervised learning/clustering in the activation space of multiple systems as a potential way to deal with proxy problems when searching for some concepts (e.g. embeddings of human values): https://www.lesswrong.com/posts/Nwgdq6kHke5LY692J/alignment-by-default#8CngPZyjr5XydW4sC

'Krenn thinks that o1 will accelerate science by helping to scan the literature, seeing what’s missing and suggesting interesting avenues for future research. He has had success looping o1 into a tool that he co-developed that does this, called SciMuse. “It creates much more interesting ideas than GPT-4 or GPT-4o,” he says.' (source; related: current underelicitation of auto ML capabilities)

(crossposted from https://x.com/BogdanIonutCir2/status/1840775662094713299)

I really wish we had some automated safety research prizes similar to https://aimoprize.com/updates/2024-09-23-second-progress-prize…. Some care would have to be taken to not advance capabilities [differentially], but at least some targeted areas seem quite robustly good, e.g. https://multimodal-interpretability.csail.mit.edu/maia/.

It might be interesting to develop/put out RFPs for some benchmarks/datasets for unlearning of ML/AI knowledge (and maybe also ARA-relevant knowledge), analogously to WMDP for CBRN. This might be somewhat useful e.g. in a case where we might want to use powerful (e.g. human-level) AIs for cybersecurity, but we don't fully trust them.

From Understanding and steering Llama 3:

...A further interesting direction for automated interpretability would be to build interpreter agents: AI scientists which given an SAE feature could create hypotheses about what the feature might do, come up with experiments that would distinguish between those hypotheses (for instance new inputs or feature ablations), and then repeat until the feature is well-understood. This kind of agent might be the first automated alignment researcher. Our early experiments in this direction have shown that we can substantially i

'ChatGPT4 generates social psychology hypotheses that are rated as original as those proposed by human experts' https://x.com/BogdanIonutCir2/status/1836720153444139154

I think it's quite likely we're already in crunch time (e.g. in a couple years' time we'll see automated ML accelerating ML R&D algorithmic progress 2x) and AI safety funding is *severely* underdeployed. We could soon see many situations where automated/augmented AI safety R&D is bottlenecked by lack of human safety researchers in the loop. Also, relying only on the big labs for automated safety plans seems like a bad idea, since the headcount of their safety teams seems to grow slowly (and I suspect much slower than the numbers of safety researchers outside the labs). Related: https://www.beren.io/2023-11-05-Open-source-AI-has-been-vital-for-alignment/.

Inference scaling laws should be a further boon to automated safety research, since they add another way to potentially spend money very scalably https://youtu.be/-aKRsvgDEz0?t=9647
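A toy model of why inference compute can be spent scalably, under loudly simplifying assumptions that do not come from the linked talk: attempts are independent, each succeeds with a fixed probability p, and a reliable verifier recognizes a success. Under those assumptions, coverage rises as 1 - (1 - p)^k while cost grows roughly linearly in the number of attempts k.

```python
def coverage(p_single: float, k: int) -> float:
    """P(at least one of k independent attempts succeeds).

    Assumes independent attempts with a fixed per-attempt success
    probability p_single and a verifier that reliably spots successes.
    """
    return 1.0 - (1.0 - p_single) ** k


# Illustrative per-attempt success rate (an assumption, not a measurement).
p = 0.01
for k in (1, 10, 100, 1000):
    # Cost scales with k; coverage keeps rising, with diminishing returns.
    print(f"k={k:>4}: coverage={coverage(p, k):.3f}")
```

Real inference scaling curves are messier (correlated failures, imperfect verifiers), so this should be read only as intuition for the "spend more, get more" shape.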

The intelligence explosion might be quite widely-distributed (not just inside the big labs), especially with open-weights LMs (and the code for the AI scientist is also open-sourced):

https://x.com/RobertTLange/status/1829104918214447216

I think that would be really bad for our odds of surviving and avoiding a permanent suboptimal dictatorship, if the multipolar scenario continues up until AGI is fully RSI capable. That isn't a stable equilibrium; the most vicious first mover tends to win and control the future. Some 17yo malcontent will wipe us out or become emperor for their eternal life. More logic in If we solve alignment, do we all die anyway? and the discussion there.

I think that argument will become so apparent that that scenario won't be allowed to happen.

Having merely capable AGI widely available would be great for a little while.

Interesting automated AI safety R&D demo:

'In this release:

- We propose and run an LLM-driven discovery process to synthesize novel preference optimization algorithms.

- We use this pipeline to discover multiple high-performing preference optimization losses. One such loss, which we call Discovered Preference Optimization (DiscoPOP), achieves state-of-the-art performance across multiple held-out evaluation tasks, outperforming Direct Preference Optimization (DPO) and other existing methods.

- We provide an initial analysis of DiscoPOP, to discover surprising an

Looking at how much e.g. the UK (>$300B) or the US (>$1T) have spent on Covid-19 measures puts in perspective how little is still being spent on AI safety R&D. I expect fractions of those budgets (<10%), allocated for automated/significantly-augmented AI safety R&D, would obsolete all previous human AI safety R&D.
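For scale, taking 10% (the upper end of the fractions mentioned) of the quoted lower-bound totals gives roughly:

$$0.1 \times \$300\,\text{B} = \$30\,\text{B}\ \text{(UK)}, \qquad 0.1 \times \$1\,\text{T} = \$100\,\text{B}\ \text{(US)}$$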

Language model agents for interpretability (e.g. MAIA, FIND) seem to be making fast progress, to the point where I expect it might be feasible to safely automate large parts of interpretability workflows soon.

Given the above, it might be high-value to start testing the integration of more interpretability tools into interpretability (V)LM agents like MAIA, and maybe even to consider randomized controlled trials to test for any productivity improvements they might already be providing.

For example, probing / activation steering workflows seem to me relatively ...
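If one did run such a randomized controlled trial, one simple way to analyze it is a two-sided permutation test on the difference in mean outcomes between researchers with and without agent assistance. The sketch below assumes a hypothetical per-participant outcome metric (e.g. interpretability tasks completed per week); the numbers in the usage example are made up purely for illustration, not real data.

```python
import numpy as np


def permutation_test(treatment, control, n_permutations=10_000, seed=0):
    """Two-sided permutation test for a difference in mean outcomes.

    treatment / control: per-participant outcomes (hypothetical metric,
    e.g. tasks completed with vs. without the interpretability agent).
    Returns (observed difference in means, p-value).
    """
    rng = np.random.default_rng(seed)
    treatment = np.asarray(treatment, dtype=float)
    control = np.asarray(control, dtype=float)
    observed = treatment.mean() - control.mean()

    pooled = np.concatenate([treatment, control])
    n_t = len(treatment)
    count = 0
    for _ in range(n_permutations):
        # Randomly reassign group labels, as if the intervention had no effect.
        perm = rng.permutation(pooled)
        diff = perm[:n_t].mean() - perm[n_t:].mean()
        # Small tolerance so ties aren't missed due to floating-point noise.
        if abs(diff) >= abs(observed) - 1e-12:
            count += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    p_value = (count + 1) / (n_permutations + 1)
    return observed, p_value


# Made-up illustrative outcomes (NOT real data).
treatment = [7, 9, 8, 10, 9, 11]
control = [5, 6, 5, 7, 6, 5]
diff, p_value = permutation_test(treatment, control)
print(f"difference in means: {diff:.2f}, p = {p_value:.4f}")
```

A permutation test is used here because it makes few distributional assumptions, which suits the small sample sizes a first trial would likely have.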

Very plausible view (though doesn't seem to address misuse risks enough, I'd say) in favor of open-sourced models being net positive (including for alignment) from https://www.beren.io/2023-11-05-Open-source-AI-has-been-vital-for-alignment/:

'While the labs certainly perform much valuable alignment research, and definitely contribute a disproportionate amount per-capita, they cannot realistically hope to compete with the thousands of hobbyists and PhD students tinkering and trying to improve and control models. This disparity will only grow larger as ...

Contrastive methods could be used both to detect common latent structure across animals, measuring sessions, multiple species (https://twitter.com/LecoqJerome/status/1673870441591750656) and to e.g. look for which parts of an artificial neural network do what a specific brain area does during a task assuming shared inputs (https://twitter.com/BogdanIonutCir2/status/1679563056454549504).

And there are theoretical results suggesting some latent factors can be identified using multimodality (all the following could be interpretable as different modalities - mul...
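A minimal sketch of the kind of contrastive objective involved: a symmetric InfoNCE loss, where row i of two embedding matrices is assumed to come from the same underlying sample (e.g. the same stimulus seen by two animals or sessions, or by a network and a brain area) and all other rows act as negatives. The synthetic "two noisy views of shared latents" setup is an assumption for illustration; in practice one would train encoders for each system to minimize this loss.

```python
import numpy as np


def info_nce(z_a, z_b, temperature=0.1):
    """Symmetric InfoNCE loss for paired embeddings z_a[i] <-> z_b[i]."""
    # L2-normalize so the logits are cosine similarities.
    z_a = z_a / np.linalg.norm(z_a, axis=1, keepdims=True)
    z_b = z_b / np.linalg.norm(z_b, axis=1, keepdims=True)
    logits = z_a @ z_b.T / temperature  # (n, n); positive pairs on the diagonal

    def diag_cross_entropy(l):
        # Numerically stable row-wise log-softmax, then take the diagonal.
        l = l - l.max(axis=1, keepdims=True)
        log_probs = l - np.log(np.exp(l).sum(axis=1, keepdims=True))
        return -np.mean(np.diag(log_probs))

    # Average the a->b and b->a directions.
    return 0.5 * (diag_cross_entropy(logits) + diag_cross_entropy(logits.T))


rng = np.random.default_rng(0)
latent = rng.normal(size=(64, 8))       # synthetic shared latent factors
readout = rng.normal(size=(8, 32))
z_a = latent @ readout + 0.1 * rng.normal(size=(64, 32))  # "system A" view
z_b = latent @ readout + 0.1 * rng.normal(size=(64, 32))  # "system B" view
z_shuffled = rng.permutation(z_b)       # destroys the pairing

aligned = info_nce(z_a, z_b)
shuffled = info_nce(z_a, z_shuffled)
print(f"aligned pairs: {aligned:.3f}, shuffled pairs: {shuffled:.3f}")
```

The loss is low when the two systems share latent structure that lines up sample by sample, and high when the pairing is broken, which is what makes it usable as a probe for common latent structure.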

Summary threads of two recent papers which seem like significant evidence in favor of the Simulators view of LLMs (especially after just pretraining): https://x.com/aryaman2020/status/1852027909709382065 https://x.com/DimitrisPapail/status/1844463075442950229

Plausible large 2025 training run FLOP estimates from https://x.com/Jsevillamol/status/1810740021869359239?t=-stzlTbTUaPUMSX8WDtUIg&s=19:

B200 = 4.5e15 FLOP/s at INT8

100 days ~= 1e7 seconds

Typical utilization ~= 30%

So, with 100,000 GPUs: 100,000 * 4.5e15 FLOP/s * 1e7 s * 30% ~= 1e27 FLOP

Which is ~1.5 OOMs bigger than GPT-4
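The same back-of-the-envelope estimate as a small script, using only the figures quoted above (100,000 B200s, 4.5e15 FLOP/s at INT8, 100 days, 30% utilization). It also checks how much the "100 days ~= 1e7 seconds" rounding matters.

```python
# Back-of-the-envelope compute for a large 2025 training run,
# using the figures quoted in the post above.
NUM_GPUS = 100_000          # cluster size (B200s)
PEAK_FLOP_PER_S = 4.5e15    # B200 peak throughput at INT8, as quoted
DAYS = 100
UTILIZATION = 0.30          # typical utilization, as quoted


def training_flop(num_gpus, peak_flop_per_s, seconds, utilization):
    """Total FLOP = GPUs x peak FLOP/s x wall-clock seconds x utilization."""
    return num_gpus * peak_flop_per_s * seconds * utilization


exact_seconds = DAYS * 24 * 3600  # 8.64e6 s
total = training_flop(NUM_GPUS, PEAK_FLOP_PER_S, exact_seconds, UTILIZATION)
rounded = training_flop(NUM_GPUS, PEAK_FLOP_PER_S, 1e7, UTILIZATION)

print(f"exactly 100 days: {total:.2e} FLOP")
print(f"with 1e7 seconds: {rounded:.2e} FLOP")
```

Both versions land at roughly 1e27 FLOP (about 1.2e27 with exactly 100 days, 1.35e27 with the 1e7 s rounding), so the rounding doesn't change the order of magnitude.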

Decomposability seems like a fundamental assumption of interpretability, and a condition for it to succeed. E.g. from Toy Models of Superposition:

'Decomposability: Neural network activations which are decomposable can be decomposed into features, the meaning of which is not dependent on the value of other features. (This property is ultimately the most important – see the role of decomposition in defeating the curse of dimensionality.) [...]

The first two (decomposability and linearity) are properties we hypothesize to be widespread, while the latte...
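A toy illustration (an assumption-laden sketch, not code from the paper) of what decomposability plus linearity buy you: if an activation is a sum of feature directions weighted by feature values, each feature can be read back out by projecting onto its own direction, roughly independently of the other features' values. With more features than dimensions (superposition), directions are only nearly orthogonal, so the readouts carry some interference, which stays small when only a few features are active.

```python
import numpy as np

rng = np.random.default_rng(0)
n_features, d_model = 2048, 512  # more features than dimensions (superposition)

# Random unit directions: nearly, but not exactly, orthogonal in high dimension.
W = rng.normal(size=(n_features, d_model))
W /= np.linalg.norm(W, axis=1, keepdims=True)


def activation(feature_values):
    """Decomposable, linear representation: x = sum_i f_i * W_i."""
    return feature_values @ W


def read_feature(x, i):
    """Read feature i back out by projecting onto its own direction."""
    return x @ W[i]


# Sparse input: only 3 of 2048 features are active.
f = np.zeros(n_features)
f[[3, 40, 200]] = [1.0, 0.5, 2.0]
x = activation(f)

for i in (3, 40, 200, 7):  # feature 7 is inactive
    print(f"feature {i}: true={f[i]:.2f}, read out={read_feature(x, i):.3f}")
```

The readout error for each feature comes only from its overlaps with the other active directions, which is why sparsity matters so much in the superposition picture.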

Selected fragments (though not really cherry-picked, no reruns) of a conversation with Claude Opus on operationalizing something like Activation vector steering with BCI by applying the methodology of Concept Algebra for (Score-Based) Text-Controlled Generative Models to the model from High-resolution image reconstruction with latent diffusion models from human brain activity (website with nice illustrations of the model).

My prompts bolded:

'Could we do concept algebra directly on the fMRI of the higher visual cortex?

Yes, in principle, it should be possible...
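A heavily simplified sketch of the core "edit only within a concept subspace" idea, assuming the concept is represented in a linear subspace that can be estimated from difference vectors between representations differing only in that concept. This is not the Concept Algebra paper's actual procedure (which works with the generative model's score); applying anything like it to fMRI of the higher visual cortex is the hypothetical being discussed in the conversation. All data below are synthetic.

```python
import numpy as np


def concept_subspace(diffs, k):
    """Orthonormal basis (d, k) for a concept subspace, estimated from
    difference vectors between representations that differ only in the concept."""
    # Top-k right singular vectors span the dominant directions of variation.
    _, _, vt = np.linalg.svd(diffs, full_matrices=False)
    return vt[:k].T


def swap_concept(x, y, basis):
    """Keep x's component outside the concept subspace; take y's component inside it."""
    proj = basis @ basis.T  # orthogonal projector onto the subspace
    return x - proj @ x + proj @ y


rng = np.random.default_rng(0)
d, n = 50, 100
c = rng.normal(size=d)
c /= np.linalg.norm(c)  # synthetic "true" concept direction
diffs = rng.uniform(0.5, 2.0, size=(n, 1)) * c + 0.01 * rng.normal(size=(n, d))
basis = concept_subspace(diffs, k=1)

x = rng.normal(size=d)  # representation with concept value A (synthetic)
y = rng.normal(size=d)  # representation carrying concept value B (synthetic)
x_edit = swap_concept(x, y, basis)
print("alignment with true concept direction:", abs(basis[:, 0] @ c))
```

By construction, the edited representation matches y inside the estimated concept subspace and matches x everywhere else.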

More reasons to believe that studying empathy in rats (which should be much easier than in humans, both for e.g. IRB reasons, but also because smaller brains, easier to get whole connectomes, etc.) could generalize to how it works in humans and help with validating/implementing it in AIs (I'd bet one can already find something like computational correlates in e.g. GPT-4 and the correlation will get larger with scale a la https://arxiv.org/abs/2305.11863) https://twitter.com/e_knapska/status/1722194325914964036

this talk seems like an interesting and novel proposal to test for artificial consciousness, and for uploading, based on phenomena related to split-brain: https://www.youtube.com/watch?v=xNcOgYOvE_k

spicy take: the 'ultimate EA' thing to do might soon be volunteering to get implanted with a few ultrasound BCIs (instead of e.g. donating a kidney), for lo-fi WBE data gathering reasons:

‘The probe’s small size enables potential subcranial implantation between skull and dura with PDMS encapsulation (46), providing chronic hemodynamic access where repeated monitoring is valuable.’

'The complete system captures brain activity up to 5-8 cm depth across a 60◦ × 60◦ field of view (FOV) at 1-10 Hz temporal resolution, while maintaining an 11.52 × 8.64 mm fo...

Claim of roughly human-level automated multi-turn red-teaming: https://blog.haizelabs.com/posts/cascade/

I expect large parts of interpretability work could be safely automatable very soon (e.g. GPT-5 timelines) using (V)LM agents; see A Multimodal Automated Interpretability Agent for a prototype.

Notably, MAIA (GPT-4V-based) seems approximately human-level on a bunch of interp tasks, while (overwhelmingly likely) being non-scheming (e.g. current models are bad at situational awareness and out-of-context reasoning) and basically-not-x-risky (e.g. bad at ARA).

Given the potential scalability of automated interp, I'd be excited to see plans to use large amo...

Recent long-context LLMs seem to exhibit scaling laws from longer contexts - e.g. fig. 6 at page 8 in Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context, fig. 1 at page 1 in Effective Long-Context Scaling of Foundation Models.

The long contexts also seem very helpful for in-context learning, e.g. Many-Shot In-Context Learning.

This seems differentially good for safety (e.g. vs. models with larger forward passes but shorter context windows to achieve the same perplexity), since longer context and in-context learning are differ...
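One common way such context-length scaling is summarized is by fitting a power law to loss as a function of token position. A minimal sketch, assuming the simplest form loss ≈ b · position^(-c) with no irreducible-loss offset (a simplifying assumption; real fits often include one), fitted by least squares in log-log space. The data are synthetic and noise-free, purely to show the method.

```python
import numpy as np


def fit_power_law(positions, losses):
    """Fit loss ~= b * position**(-c) via linear least squares in log-log space."""
    # np.polyfit returns coefficients highest degree first: [slope, intercept].
    slope, intercept = np.polyfit(np.log(positions), np.log(losses), deg=1)
    return np.exp(intercept), -slope  # (b, c)


# Synthetic, noise-free data (NOT taken from any paper).
positions = np.logspace(1, 6, num=20)  # token positions from 10 to 1e6
losses = 3.0 * positions ** -0.08
b, c = fit_power_law(positions, losses)
print(f"b = {b:.3f}, c = {c:.3f}")
```

A straight line in log-log space is the signature of a power law, which is why this simple regression is a reasonable first check before fitting richer forms.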

I'm not aware of anybody currently working on coming up with concrete automated AI safety R&D evals, while there seems to be so much work going into e.g. DC evals or even (more recently) scheminess evals. This seems very suboptimal in terms of portfolio allocation.

In light of recent works on automating alignment and AI task horizons, I'm (re)linking this brief presentation of mine from last year, which I think stands up pretty well and might have gotten fewer views than ideal:

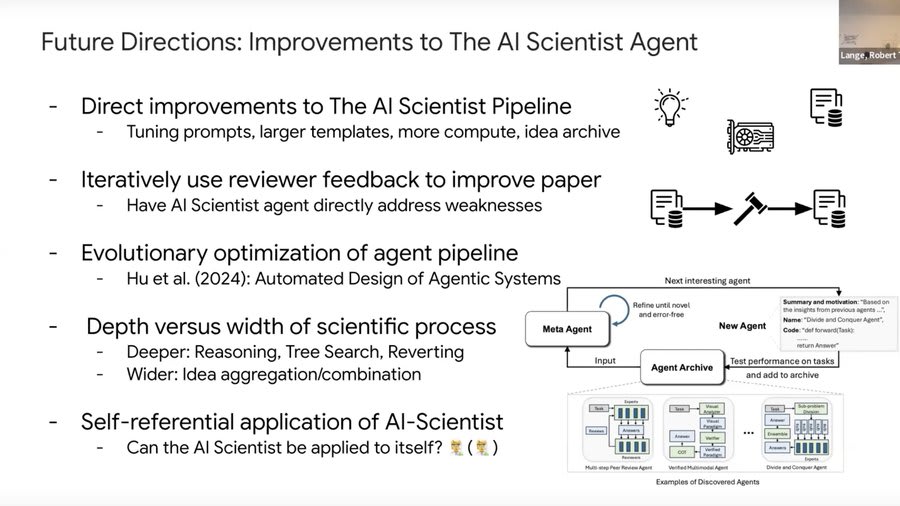

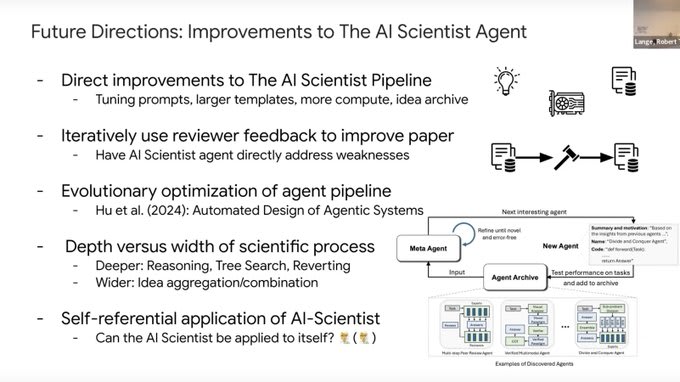

Spicy take: good evals for automated ML R&D should (also) cover for what's in the attached picture (and try hard at elicitation in this rough shape). AFAIK, last time I looked at the main (public) proposals, they didn't seem to. Picture from https://x.com/RobertTLange/status/1829104918214447216.

From a chat with Claude on the example of applying a multilevel interpretability framework to deception from https://arxiv.org/abs/2408.12664:

'The paper uses the example of studying deception in language models (LLMs) to illustrate how Marr's levels of analysis can be applied to AI interpretability research. Here's a detailed breakdown of how the authors suggest approaching this topic at each level:

1. Computational Level:

- Define criteria for classifying LLM behavior as deception

- Develop comprehensive benchmarks to measure deceptive behaviors across vari

An intuition for safety cases for automated safety research over time

Safety cases - we want to be able to make a (conservative) argument for why a certain AI system won’t e.g. pose x-risk with probability > p / year. Rely on composing safety arguments / techniques into a ‘holistic case’.

Safety arguments are rated on three measures:

Practicality: ‘Could the argument be made soon or does it require substantial research progress?’

Maximum strength: ‘How much confidence could the argument give evaluators that the AI systems are safe?’

S...

These might be some of the most neglected and most strategically-relevant ideas about AGI futures: Pareto-topian goal alignment and 'Pareto-preferred futures, meaning futures that would be strongly approximately preferred by more or less everyone': https://www.youtube.com/watch?v=1lqBra8r468. These futures could be achievable because automation could bring massive economic gains, which, if allocated (reasonably, not-even-necessarily-perfectly) equitably, could make ~everyone much better off (hence the 'strongly approximately preferred by more or less every...

I'd be interested in seeing the strongest arguments (e.g. safety-washing?) for why, at this point, one shouldn't collaborate with OpenAI (e.g. not even part-time, for AI safety [evaluations] purposes).

Claude-3 Opus on using advance market commitments to incentivize automated AI safety R&D:

'Advance Market Commitments (AMCs) could be a powerful tool to incentivize AI labs to invest in and scale up automated AI safety R&D. Here's a concrete proposal for how AMCs could be structured in this context:

- Government Commitment: The US government, likely through an agency like DARPA or NSF, would commit to purchasing a certain volume of AI safety tools and technologies that meet pre-specified criteria, at a guaranteed price, if and when they are developed.

Conversation with Claude Opus on A Causal Explainable Guardrails for Large Language Models, Discussion: Challenges with Unsupervised LLM Knowledge Discovery and A Multimodal Automated Interpretability Agent (MAIA). To me it seems surprisingly good at something like coming up with plausible alignment research follow-ups, which e.g. were highlighted here as an important part of the superalignment agenda.

Prompts bolded:

'Summarize 'Causal Explainable Guardrails for Large Language Models'. In particular, could this be useful to deal with some of the challenges m...

I wonder how much near-term interpretability [V]LM agents (e.g. MAIA, AIA) might help with finding better probes and better steering vectors (e.g. by iteratively testing counterfactual hypotheses against potentially spurious features, a major challenge for Contrast-consistent search (CCS)).

This seems plausible since MAIA can already find spurious features, and feature interpretability [V]LM agents could have much longer hypothesis-iteration cycles (compared to current [V]LM agents and perhaps even to human researchers).
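To make the spurious-feature problem concrete, here is a minimal sketch using one simple, commonly used recipe: a difference-of-means direction, which can serve both as a linear probe and as a steering vector. All data are synthetic and the setup is a hypothetical stand-in for real model activations. When a spurious feature is perfectly correlated with the concept during training, the direction picks up both; a counterfactual test set in which the spurious feature is flipped exposes this, which is the kind of hypothesis test an interpretability agent could iterate on.

```python
import numpy as np


def diff_of_means_direction(acts_pos, acts_neg):
    """Unit vector from the mean 'negative' activation to the mean 'positive' one."""
    v = acts_pos.mean(axis=0) - acts_neg.mean(axis=0)
    return v / np.linalg.norm(v)


def probe_accuracy(acts, labels, direction):
    """Use the direction as a linear probe: does the projection's sign match the label (+1/-1)?"""
    return np.mean(np.sign(acts @ direction) == labels)


def steer(acts, direction, alpha):
    """Activation addition: shift activations by alpha along the direction."""
    return acts + alpha * direction


rng = np.random.default_rng(0)
d, n = 64, 200
concept_dir = np.eye(d)[0]   # synthetic "true" concept direction
spurious_dir = np.eye(d)[1]  # synthetic spurious feature direction


def make_acts(concept, spurious):
    # Activations = concept signal + spurious signal + isotropic noise.
    return (2.0 * np.outer(concept, concept_dir)
            + 2.0 * np.outer(spurious, spurious_dir)
            + rng.normal(size=(len(concept), d)))


labels = rng.choice([-1.0, 1.0], size=n)
train = make_acts(labels, labels)  # spurious feature perfectly correlated with concept
direction = diff_of_means_direction(train[labels > 0], train[labels < 0])

counterfactual = make_acts(labels, -labels)  # same concept, spurious feature flipped
print("train accuracy:", probe_accuracy(train, labels, direction))
print("counterfactual accuracy:", probe_accuracy(counterfactual, labels, direction))
```

The probe looks near-perfect on the training distribution but drops to roughly chance on the counterfactual set, revealing that the learned direction is half spurious.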

I might have updated at least a bit against the weakness of single forward passes, based on intuitions about the amount of compute that huge context windows (e.g. Gemini 1.5's 1 million tokens) might provide to a single forward pass, even if limited serially.

I've been / am on the lookout for related theoretical results of why grounding a la Grounded language acquisition through the eyes and ears of a single child works (e.g. with contrastive learning methods) - e.g. some recent works: Understanding Transferable Representation Learning and Zero-shot Transfer in CLIP, Contrastive Learning is Spectral Clustering on Similarity Graph, Optimal Sample Complexity of Contrastive Learning; (more speculatively) also how it might intersect with alignment, e.g. if alignment-relevant concepts might be 'groundable' in fMRI d...

This seems pretty good for safety (as RAG is comparatively at least a bit more transparent than fine-tuning): https://twitter.com/cwolferesearch/status/1752369105221333061

Larger LMs seem to benefit differentially more from tools: 'Absolute performance and improvement-per-turn (e.g., slope) scale with model size.' https://xingyaoww.github.io/mint-bench/. This seems pretty good for safety, to the degree tool usage is often more transparent than model internals.

In my book, this would probably be the most impactful model internals / interpretability project that I can think of: https://www.lesswrong.com/posts/FbSAuJfCxizZGpcHc/interpreting-the-learning-of-deceit?commentId=qByLyr6RSgv3GBqfB

Large-scale cyber-attacks resulting from AI misalignment seem hard; I'm at >90% probability that they happen much later (at least years later) than automated alignment research, as long as we *actually try hard* to make automated alignment research work: https://forum.effectivealtruism.org/posts/bhrKwJE7Ggv7AFM7C/modelling-large-scale-cyber-attacks-from-advanced-ai-systems.

I had speculated previously about links between task arithmetic and activation engineering. I think given all the recent results on in context learning, task/function vectors and activation engineering / their compositionality (In-Context Learning Creates Task Vectors, In-context Vectors: Making In Context Learning More Effective and Controllable Through Latent Space Steering, Function Vectors in Large Language Models), this link is confirmed to a large degree. This might also suggest trying to import improvements to task arithmetic (e.g. Task Arithmetic i...
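For readers less familiar with task arithmetic, a minimal sketch of the basic operation: a task vector is the per-parameter difference between fine-tuned and pretrained weights, and new models are formed as θ_pre + Σ λ_i τ_i (negative λ to "subtract" a task). The activation-engineering analogue adds a vector to hidden activations instead of to weights. The toy parameters below are hypothetical and for illustration only.

```python
import numpy as np


def task_vector(pretrained, finetuned):
    """tau = theta_finetuned - theta_pretrained, computed per parameter tensor."""
    return {name: finetuned[name] - pretrained[name] for name in pretrained}


def apply_task_vectors(pretrained, task_vectors, coefficients):
    """theta_new = theta_pre + sum_i lambda_i * tau_i.

    Positive lambda adds a task's behaviour; negative lambda subtracts it.
    """
    # np.array(..., dtype=float) copies, so the pretrained weights are untouched.
    new_params = {name: np.array(value, dtype=float) for name, value in pretrained.items()}
    for tau, lam in zip(task_vectors, coefficients):
        for name in new_params:
            new_params[name] += lam * tau[name]
    return new_params


# Toy "models" with a single 3-dim parameter (hypothetical values).
pre = {"w": np.zeros(3)}
ft_a = {"w": np.array([1.0, 0.0, 0.0])}
ft_b = {"w": np.array([0.0, 2.0, 0.0])}
tau_a, tau_b = task_vector(pre, ft_a), task_vector(pre, ft_b)

combined = apply_task_vectors(pre, [tau_a, tau_b], [1.0, 1.0])  # add both tasks
negated = apply_task_vectors(pre, [tau_a], [-1.0])              # negate task A
print(combined["w"], negated["w"])
```

The compositionality that makes this work in weight space is the same property the in-context/function-vector results suggest for activation space.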

(As reply to Zvi's 'If someone was founding a new AI notkilleveryoneism research organization, what is the best research agenda they should look into pursuing right now?')

LLMs seem to represent meaning in a pretty human-like way and this seems likely to keep getting better as they get scaled up, e.g. https://arxiv.org/abs/2305.11863. This could make getting them to follow the commonsense meaning of instructions much easier. Also, similar methodologies to https://arxiv.org/abs/2305.11863 could be applied to other alignment-adjacent domains/tasks, e.g. moral...

There have been numerous scandals within the EA community about how working for top AGI labs might be harmful. So, when are we going to have this conversation: contributing in any way to the current US admin getting (especially exclusive) access to AGI might be (very) harmful?

[cross-posted from X]

Claude Sonnet-3.5 New, commenting on the limited scalability of RNNs, when prompted with 'comment on what this would imply for the scalability of RNNs, refering (parts of) the post' and fed https://epoch.ai/blog/data-movement-bottlenecks-scaling-past-1e28-flop (relevant to opaque reasoning, out-of-context reasoning, scheming):

'Based on the article's discussion of data movement bottlenecks, RNNs (Recurrent Neural Networks) would likely face even more severe scaling challenges than Transformers for several reasons:

- Sequential Nature: The article mentions pipe

Sam Altman says AGI is coming in 2025 (and he is also expecting a child next year) https://x.com/tsarnick/status/1854988648745517297

Lukewarm take: the risk of the US sliding into autocracy seems high enough at this point that I think it's probably more impactful now for EU citizens to work on pushing sovereign EU AGI capabilities, than on safety for US AGI labs.

Hot take: for now, I think it's likelier than not that even fully uncontrolled proliferation of automated ML scientists like https://sakana.ai/ai-scientist/ would still be a net differential win for AI safety research progress, for pretty much the same reasons as in https://beren.io/2023-11-05-Open-source-AI-has-been-vital-for-alignment/….

I'd say the best argument against it is a combination of two things: precedent-setting concerns (once they start open-sourcing, it'd be hard to stop even if it becomes dangerous), and misuse risk currently seeming harder to solve than misalignment risk, since for open-sourcing to be good, you need to prevent both misalignment and people misusing the models.

I agree Sakana AI is safe to open-source, but I'm quite sure that sometime in the next 10-15 years, the AIs that do get developed will likely be very dangerous to open-source, at least for several years.