A month has fewer than 31 days if and only if its Roman numeral has exactly two characters. No exceptions.

Save your knuckles!
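For anyone who wants to check the rule rather than take it on faith, here is a minimal sketch. The helper `to_roman` and the choice of year are my own assumptions for illustration; the day counts come from Python's standard `calendar` module.

```python
import calendar

def to_roman(n: int) -> str:
    """Convert a month number (1..12) to its Roman numeral (hypothetical helper)."""
    numerals = [(10, "X"), (9, "IX"), (5, "V"), (4, "IV"), (1, "I")]
    out = []
    for value, symbol in numerals:
        while n >= value:
            out.append(symbol)
            n -= value
    return "".join(out)

# Arbitrary year (assumption); February has fewer than 31 days in leap years too.
YEAR = 2023

# Months whose Roman numeral has exactly two characters.
two_char_months = [m for m in range(1, 13) if len(to_roman(m)) == 2]

# Months with fewer than 31 days, according to the calendar module.
short_months = [m for m in range(1, 13) if calendar.monthrange(YEAR, m)[1] < 31]

print(two_char_months == short_months)
```

The two lists come out identical (February, April, June, September, November), so the rule holds in both directions.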

There's a poem I know:

Thirty days has September, April, June, and November.

All the rest have thirty-one.

Except for February.

Agreement: Upvote

How much do you agree with this, separate from whether you think it's a good comment?

Come on, if you didn't click the button, you have some explaining to do!

Desired feature for LessWrong:

Ability to register bets that are linked to accounts. Leaderboards, offering and accepting bets, ability to assign arbiters, etc.

It would be cool to see and track various bets - LessWrong is the obvious platform

The issue with forecasting platforms is that for any one of them there is a huge chance it won't be around in 5 or 10 years, which defeats the point of long-term bets.

EDIT: another feature we desperately need: right now, if I'm logged in on the Alignment Forum, it doesn't show me the LessWrong comments. That's okay and by design. However, it would be good if it at least told me that there are LW comments on the Alignment Forum post in the first place, which I may then decide to read or not. As it stands, it can be very confusing: you find a post without any comments that you could have sworn had more engagement last time you checked.

In general, even in the rationality community, people's reactions, including my own, to the fact that doom seems imminent -- whether it's in 5 years or 50 years -- seem much too small. I wonder how much of this is because it feels science fiction-y.

If it was nuclear war, would that change things? An asteroid hitting? What about whether it is mainstream people vs non-mainstream people pulling the alarm? If a majority of mainstream academics were pulling the alarm on an asteroid hitting in the next 5-50 years, would reactions be different?

In the worlds where we have AI doom, it's likely because of large amounts of easy optimization slack that AGI exploits, leading to hard takeoff, or perhaps because of coordination failures and deceptive alignment in slower takeoff scenarios. Either way, there doesn't seem to be much one can do about that other than contribute to AI safety.

Contrast this with nuclear war, where more concrete conventional preparation like bomb shelters and disaster survival preparation has at least some non-epsilon expected payout.

Also, most of the current leaders/experts in AI don't put much probability on doom compared to LW folks.

Does anyone happen to know the maximum number of inputs to any element in a digital computer at the level of electronic gates? E.g., there are of course AND and OR gates, so the number is at least 2. But you can also set up an electronic circuit such that it implements, e.g., an AND with 3 inputs (though this is never required since you can make a 3-input AND out of two 2-input ANDs, and similarly for any other gate with 3+ inputs). Hence the question, what is the highest number of inputs that does physically exist in a typical CPU?
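To make the "3-input AND out of two 2-input ANDs" point concrete, here is a small logical sketch. The function names are hypothetical, and this models only the Boolean behavior; it says nothing about what fan-in is physically used in real CPUs, which is the actual question.

```python
from functools import reduce
from itertools import product

def and2(a: bool, b: bool) -> bool:
    """A 2-input AND gate, modeled as a Boolean function."""
    return a and b

def and3(a: bool, b: bool, c: bool) -> bool:
    """A 3-input AND built by chaining two 2-input ANDs."""
    return and2(and2(a, b), c)

def and_n(*inputs: bool) -> bool:
    """An n-input AND built from n - 1 chained 2-input ANDs (needs n >= 1)."""
    return reduce(and2, inputs)

# Exhaustively check all 8 input combinations against the built-in all().
for bits in product([False, True], repeat=3):
    assert and3(*bits) == all(bits)
    assert and_n(*bits) == all(bits)
```

The same chaining works for OR, so wider gates are a matter of convenience or circuit design rather than logical necessity.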

I'm pretty proud of this and will take the option to brag a little :-)

I've found an integer sequence that is both meaningfully generateable and doesn't occur in the OEIS (and superseeker also doesn't find any way to generate it).

The first six integers in the sequence are 1, 2, 8, 144, 10752, 3306240; any further ones are (for me at the moment) basically impossible to compute. They are divisible by the factorial numbers, which makes sense in context; the sequence divided by the factorial numbers is 1, 2, 4, 24, 448, 27552.

I'd be interested if anyone finds some interesting structure in the existing numbers; so far I've only noticed that they have surprisingly small prime factors.
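For anyone who wants to poke at the numbers, here is a minimal sketch that reproduces the factorial quotients and the prime factorizations of the six terms quoted above. It assumes the terms are indexed from 0 (so the n-th term is divided by n!), which is the indexing that reproduces the quoted quotients; `prime_factors` is a hypothetical trial-division helper.

```python
from math import factorial

# The six known terms, as quoted in the comment.
terms = [1, 2, 8, 144, 10752, 3306240]

def prime_factors(n: int) -> dict:
    """Return {prime: exponent} for n via trial division (fine for small n)."""
    factors = {}
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors[d] = factors.get(d, 0) + 1
            n //= d
        d += 1
    if n > 1:
        factors[n] = factors.get(n, 0) + 1
    return factors

# Assumption: 0-based indexing, so term i is divided by i!.
assert all(t % factorial(i) == 0 for i, t in enumerate(terms))
quotients = [t // factorial(i) for i, t in enumerate(terms)]

print(quotients)
for t in terms:
    print(t, prime_factors(t))
```

Under that assumption, no prime larger than 41 shows up in any of the six terms, which matches the observation about small prime factors.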

Has anyone here considered working on the following?:

https://www.super-linear.org/prize?recordId=recT1AQw4H7prmDE8

$500 prize pool for creating an accurate, comprehensive, and amusing visual map of the AGI Safety ecosystem, similar to XKCD’s map of online communities or Scott Alexander’s map of the rationalist community.

Payout will be $400 to the map which plex thinks is highest quality, $75 to second place, $25 to third. The competition will end one month after the first acceptable map is submitted, as judged by plex.

Resources, advice, conditions:

- This is a partial list of items which might make sense to include.

- You are advised to iterate, initially posting a low-effort sketch and getting feedback from others in your network, then plex.

- You may create sub-prizes on Bountied Rationality for the best improvements to your map (if you borrow ideas from other groups' public bounties and win this prize, you must cover the costs of their bounty payouts).

- You may use DALL-E 2 or other image generation AIs to generate visual elements.

- You may collaborate with others and split the prize, but agree internally on roles and financial division, and distribute it yourselves.

- You can use logos as fair-use, under editorial/educational provision.

- You can scale items based on approximate employee count (when findable), Alexa rank (when available), number of followers (when available) or wild guess (otherwise).

- You agree to release the map for public use.

I asked about FLI's map in this question and it received some traction. I might go ahead and try this, starting with FLI's map and expanding off of it.

Is there a keyboard shortcut for “go to next unread comment” (i.e. next comment marked with green line)? In large threads I currently scroll a while until I find the next green comment, but there must be a better way.

Hello! I've heard you can ask people about your content in the open thread. Sorry if I'm asking too soon.

Could you help me explain this idea ("Should AI learn human values, human norms or something else?") better? It's a 3 minute read.

I would also like to discuss those thought experiments with somebody, though not in a 100% formal way.

Is it just me or has the center part of the homepage moved slightly left? Something just feels off when I look at it.

My notes and comments on the book Noise:

https://digitalsauna.wordpress.com/2022/09/17/noise-by-kahneman-sibony-sunstein-2021/

Someone recently asked me if there was audio for https://www.lesswrong.com/highlights compiled into a playlist anywhere. I can't seem to find it; does this already exist?

As of last Friday, you can actually play the audio for each post directly from the post page. We may figure out a playlist in another format. I'm curious what your preferred format would be.

I'll pass this along! It wasn't for me, it was for someone who apparently likes to queue up LW posts in their podcast player and wanted to know if there was an automatic way to do so.

(It looks like you have Intercom hidden. I just un-hid it for you, so you can message us with it. Use the circle in the bottom-right corner.)

Notes/commentary on Bryan Caplan's "Selfish Reasons to Have More Kids":

Is there a way to get the book in a physical form? I want to share it with a family member who expressed interest, but they really need a physical book to read. I could just get the epub printed I'm sure, but I'd like to purchase it in a way that helps the organization if possible.

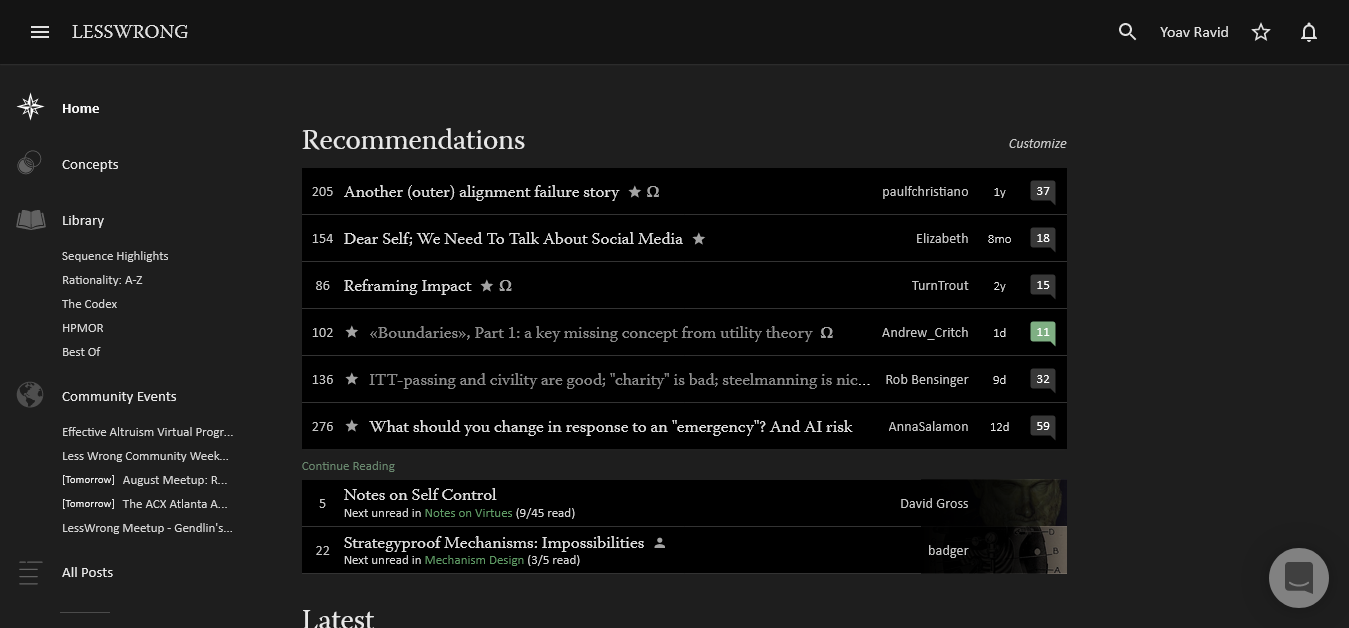

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library, checking recent Curated posts, seeing if there are any meetups in your area, and checking out the Getting Started section of the LessWrong FAQ. If you want to orient to the content on the site, you can also check out the new Concepts section.

The Open Thread tag is here. The Open Thread sequence is here.