Unofficial Followup to: Fake Selfishness, Post Your Utility Function

A perception-determined utility function is one that depends only on the perceptual signals your mind receives from the world; pleasure minus pain is an example. A non-example would be the number of living humans. There's an argument in favor of perception-determined utility functions that goes like this: clearly, the state of your mind screens off the state of the outside world from your decisions. Therefore, the argument to your utility function is not a world-state but a mind-state, and so, when choosing between outcomes, you can only judge between anticipated experiences, not external consequences. If one person says, "I would willingly die to save the lives of others," the other replies, "That is only because you anticipate great satisfaction in the moments before death, enough satisfaction to outweigh the rest of your life put together."

Let's call this dogma perceptually determined utility (PDU). PDU can be criticized on both descriptive and prescriptive grounds. On descriptive grounds, we may observe that it is psychologically unrealistic for a human to experience a lifetime's worth of satisfaction in a few moments. I don't have a good reference for this, but I suspect that our brains count pain and joy in something like unary rather than a place-value system, so they cannot count very high.

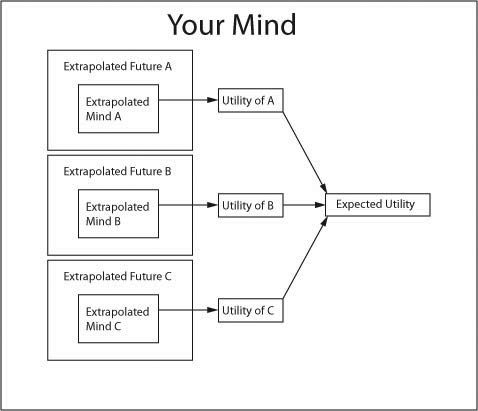

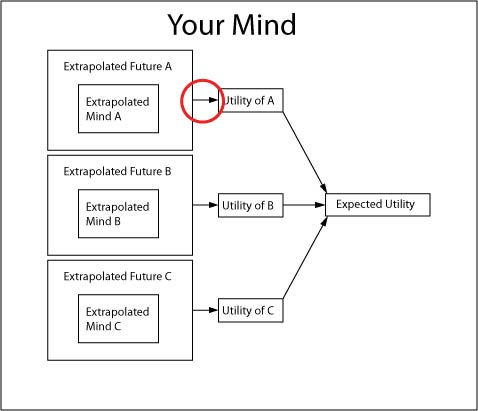

The argument I've outlined for PDU is prescriptive, however, so I'd like to refute it on such grounds. To see what's wrong with the argument, let's look at some diagrams. Here's a picture of you doing an expected utility calculation - using a perception-determined utility function such as pleasure minus pain.

Here's what's happening: you extrapolate several (preferably all) possible futures that can result from a given plan. In each possible future, you extrapolate what would happen to you personally, and calculate the pleasure minus pain you would experience. You call this the utility of that future. Then you take a weighted average of the utilities of each future — the weights are probabilities. In this way you calculate the expected utility of your plan.

But this isn't the most general possible way to calculate utilities.

Instead, we could calculate utilities based on any properties of the extrapolated futures: anything at all, such as how many people there are, how many of those people have ice cream cones, and so on. As long as we still rank plans by expected utility, our preferences over lotteries remain consistent with the von Neumann-Morgenstern axioms. The basic error of PDU is to confuse the big box (labeled "your mind") with the tiny boxes labeled "Extrapolated Mind A," and so on. The inputs to your utility calculation exist inside your mind, but that does not mean they have to come from your extrapolated future mind.
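To make the contrast concrete, here is a minimal Python sketch of the two calculations. Everything in it is a made-up illustration rather than anything from the diagrams: the `Future` fields, the two plans, and all the numbers are assumptions chosen only to show that the same expected-utility machinery accepts either kind of utility function.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class Future:
    """One extrapolated future. All fields are hypothetical illustrations."""
    my_pleasure: float   # what the extrapolated "you" experiences
    my_pain: float
    living_humans: int   # a property of the world, not of your experience


# A lottery is a list of (probability, future) pairs for one plan.
Lottery = List[Tuple[float, Future]]
Utility = Callable[[Future], float]


def perception_determined(future: Future) -> float:
    """Looks only at the extrapolated mind: pleasure minus pain."""
    return future.my_pleasure - future.my_pain


def world_determined(future: Future) -> float:
    """Looks at a property of the extrapolated world instead."""
    return float(future.living_humans)


def expected_utility(lottery: Lottery, utility: Utility) -> float:
    """Probability-weighted average of the utility of each future."""
    return sum(p * utility(future) for p, future in lottery)


def best_plan(plans: Dict[str, Lottery], utility: Utility) -> str:
    """Name of the plan with the highest expected utility."""
    return max(plans, key=lambda name: expected_utility(plans[name], utility))


# Assumed numbers: the sacrifice is unpleasant for you but probably saves lives.
sacrifice: Lottery = [
    (0.8, Future(my_pleasure=1.0, my_pain=10.0, living_humans=109)),
    (0.2, Future(my_pleasure=1.0, my_pain=10.0, living_humans=99)),
]
stay_safe: Lottery = [
    (1.0, Future(my_pleasure=50.0, my_pain=5.0, living_humans=100)),
]
plans = {"sacrifice": sacrifice, "stay_safe": stay_safe}
```

With these assumed numbers, `best_plan(plans, perception_determined)` picks `"stay_safe"` while `best_plan(plans, world_determined)` picks `"sacrifice"`. Both calls run entirely inside the one decision procedure (the big box); the only difference is which part of each extrapolated future the utility function reads.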

So that's it! You're free to care about family, friends, humanity, fluffy animals, and all the wonderful things in the universe, and decision theory won't try to stop you — in fact, it will help.

Edit: Changed "PD" to "PDU."

Utility maximisation is not really a theory about how humans work. AFAIK, nobody thinks that humans have an internal representation of utility which they strive to maximise. Those who entertain this idea are usually busy constructing a straw-man critique.

It is like how you can model catching a ball with PDEs. You can build a pretty good model like that - even though it bears little relationship to the actual internal operation.

[2011 edit: hmm - the mind actually works a lot more like that than I previously thought!]

It's kind of ironic that you mention PDEs, since PCT actually proposes that we do use something very like an evolutionary algorithm to satisfice our multi-goal controller setups. IOW, I don't think it's quite accurate to say that PDEs "bear little relationship to the actual internal operation."