Announcing Apollo Research

TL;DR

1. We are a new AI evals research organization called Apollo Research based in London.
2. We think that strategic AI deception – where a model outwardly seems aligned but is in fact misaligned – is a crucial step in many major catastrophic AI risk scenarios and that detecting deception in real-world models is the most important and tractable step to addressing this problem.
3. Our agenda is split into interpretability and behavioral evals:
   1. On the interpretability side, we are currently working on two main research bets toward characterizing neural network cognition. We are also interested in benchmarking interpretability, e.g. testing whether given interpretability tools can meet specific requirements or solve specific challenges.
   2. On the behavioral evals side, we are conceptually breaking down ‘deception’ into measurable components in order to build a detailed evaluation suite using prompt- and finetuning-based tests.
4. As an evals research org, we intend to use our research insights and tools directly on frontier models by serving as an external auditor of AGI labs, thus reducing the chance that deceptively misaligned AIs are developed and deployed.
5. We also intend to engage with AI governance efforts, e.g. by working with policymakers and providing technical expertise to aid the drafting of auditing regulations.
6. We have starter funding but estimate a $1.4M funding gap in our first year. We estimate that the maximal amount we could effectively use is $4-6M $7-10M* in addition to current funding levels (reach out if you are interested in donating). We are currently fiscally sponsored by Rethink Priorities.
7. Our starting team consists of 8 researchers and engineers with strong backgrounds in technical alignment research.
8. We are interested in collaborating with both technical and governance researchers. Feel free to reach out at info@apolloresearch.ai.
9. We intend to hire once our funding gap is closed. If you’d like to s

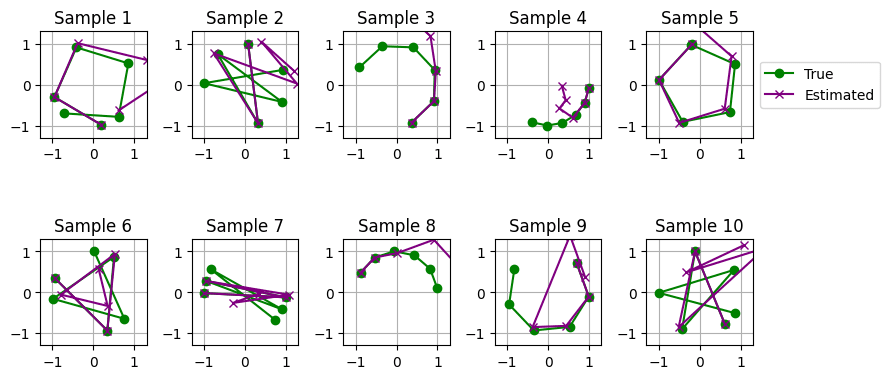

We've been seeing similar things when pruning graphs of language model computations generated with parameter decomposition. I have a suspicion that something like this might be going on in the recent neuron interpretability work as well, though I haven't verified that. If you just zero-ablate or mean-ablate lots of nodes in a very big causal graph, you can get basically any end result you want with very few nodes, because you can select sets of nodes to ablate that are computationally important but cancel each other out in exactly the way you need to get the right answer.[1]

I think the trick is to not do complete ablations, but instead ablate stochastically...
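
To make the cancellation concern concrete, here is a toy sketch in Python. Everything in it is an assumption made for illustration: the six per-node contributions are invented, the "model" is just a sum of those contributions, and the stochastic scheme (scaling each non-kept node by an independent Uniform(0, 1) mask) is one simple stand-in, not necessarily the scheme the comment has in mind.

```python
import numpy as np

# Hypothetical per-node contributions to one output on a fixed input.
# Nodes 0-3 are individually large but cancel in pairs; nodes 4-5 are small.
contribs = np.array([3.0, -3.0, 2.0, -2.0, 0.5, 0.5])
full_output = contribs.sum()  # 1.0


def output_with_mask(mask):
    """Output when node i's contribution is scaled by mask[i] (0 = ablated, 1 = intact)."""
    return float(np.dot(mask, contribs))


# Complete (zero) ablation of nodes 0-3 leaves the output unchanged,
# so the two-node graph {4, 5} looks perfectly faithful.
keep = np.array([0, 0, 0, 0, 1, 1], dtype=float)
full_ablation_error = abs(output_with_mask(keep) - full_output)

# Yet each ablated node matters on its own: removing only node 0 shifts the output by 3.
only_node0_removed = np.array([0, 1, 1, 1, 1, 1], dtype=float)
single_ablation_error = abs(output_with_mask(only_node0_removed) - full_output)


def stochastic_ablation_error(keep, n_samples=2000, seed=0):
    """Mean absolute error when each non-kept node is scaled by an independent
    Uniform(0, 1) mask instead of being set to exactly zero (an assumed scheme)."""
    rng = np.random.default_rng(seed)
    masks = rng.uniform(0.0, 1.0, size=(n_samples, keep.size))
    masks[:, keep == 1] = 1.0  # kept nodes stay intact
    outputs = masks @ contribs
    return float(np.mean(np.abs(outputs - full_output)))


stochastic_error = stochastic_ablation_error(keep)
print(full_ablation_error, single_ablation_error, stochastic_error)
```

In this toy, the complete ablation reports zero error only because the removed contributions cancel exactly. Under random partial ablation the cancellation no longer holds on most samples, so the same pruned graph shows a clearly nonzero error.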