Inference-Only Debate Experiments Using Math Problems

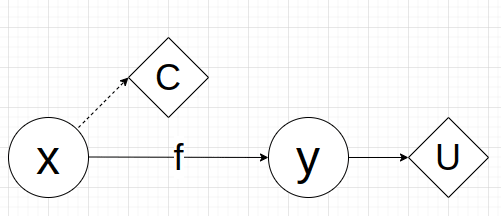

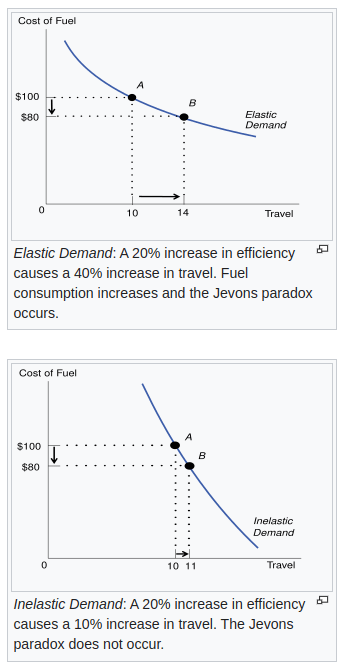

Work supported by MATS and SPAR. Code at https://github.com/ArjunPanickssery/math_problems_debate/. Three measures for evaluating debate are 1. whether the debate judge outperforms a naive-judge baseline where the naive judge answers questions without hearing any debate arguments. 2. whether the debate judge outperforms a consultancy baseline where the judge hears argument(s) from a single "consultant" assigned to argue for a random answer. 3. whether the judge can continue to supervise the debaters as the debaters are optimized for persuasiveness. We can measure whether judge accuracy increases as the debaters vary in persuasiveness (measured with Elo ratings). This variation in persuasiveness can come from choosing different models, choosing the best of N sampled arguments for different values of N, or training debaters for persuasiveness (i.e. for winning debates) using RL. Radhakrishan (Nov 2023), Khan et al. (Feb 2024), and Kenton et al. (July 2024) study an information-gap setting where judges answer multiple-choice questions about science-fiction stories whose text they can't see, both with and without a debate/consultancy transcript that includes verified quotes from the debaters/consultant. Past results from the QuALITY information-gap setting are seen above. Radhakrishnan (top row) finds no improvement to judge accuracy as debater Elo increases, while Khan et al. (middle row) and Kenton et al. (bottom row) do find a positive trend. Radhakrishnan varied models using RL while Khan et al. used best-of-N and critique-and-refinement optimizations. Kenton et al. vary the persuasiveness of debaters by using models with different capability levels. Both Khan et al. and Kenton et al. find that in terms of judge accuracy, debate > consultancy > naive judge for this setting. In addition to the information-gap setting, consider a reasoning-gap setting where the debaters are distinguished from the judge not by their extra information but by their stronger abil

Here are some of my claims about AI welfare (and sentience in general):

-

-

... (read 575 more words →)"Utility functions" are basically internal models of reward that are learned by agents as part of modeling the environment. Reward attaches values to every instrumental thing and action in the world, which may be understood as gradients dU/dx of an abstract utility over each thing---these are various pressures, inclinations, and fears (or "shadow prices") while U is the actual experienced pleasure/pain that must exist in order to justify belief in these gradients.

If the agent always acts to maximize its utility---what then are pleasure/pain? Pleasure must be the default, and pain only a fear, a shadow price of what would happen