Yes! I was wondering how to make the object feel personal and unique. Adding pictures is a great idea! You could use a pocket printer like this one to print pictures regularly and update them.

I also agree with having one device per group to keep the affordance clear.

A friend and I tried to build a prototype with a Raspberry Pi nano, but the audio was pretty hard to deal with: only larger devices would support it, through a micro jack plug. Any ideas on how to make an MVP (actually 2!) in a weekend?

That looks great! Thanks for the prototype :) I like how far you (or the LLM?) went with the river aesthetics and the wiggly cards.

Great question!

I can see how AI will turn the businessman's playdough world into something liquid, maybe even superfluid. The only walls you see are latencies, physical laws, or strong infrastructure bottlenecks. There are no humans to give orders to, only actuators. Your fingers act at a distance instead of hitting the keyboard like the businessman's.

Personally, I am interested in archetypes that take pieces of the tree and the businessman, like how the ant is a chimera of the fly and the dog.

I agree that the speed of change makes it harder to develop deep practice, but I don't think it's such a big blocker.

There are many instances of practices that need to generalize and evolve as the underlying tech changes. In cyber sec, every new tech is an opportunity for breaches, so the practice of the hacker/safety mindset needs to translate fast to every new development. In programming, it is common for professionals to learn new languages or frameworks every 2-4 years.

Even if the models change every few months, I think it's fair to say we could imagine deep practices that don't depend on the specific details of a model but can translate to new models (more like the hacker mindset of cyber sec than the muscle memory of violin).

I also think it is possible to develop interfaces (like ComfyUI) that create a layer of practice independent of model changes. For instance, you can learn workflow design patterns for combining LoRAs and other adapters that generalize across models. Though this is probably limited for now, as more advanced image generation models might make the whole LoRA ecosystem obsolete.

Here is a choice: you could buy an alarm clock (I personally like this one) and make your bedroom phone-free.

The Balkan house analogy has been almost literally applied to the architecture of the seat of the European Parliament in Strasbourg. It is an unfinished amphitheater symbolizing the ongoing construction of the Union.

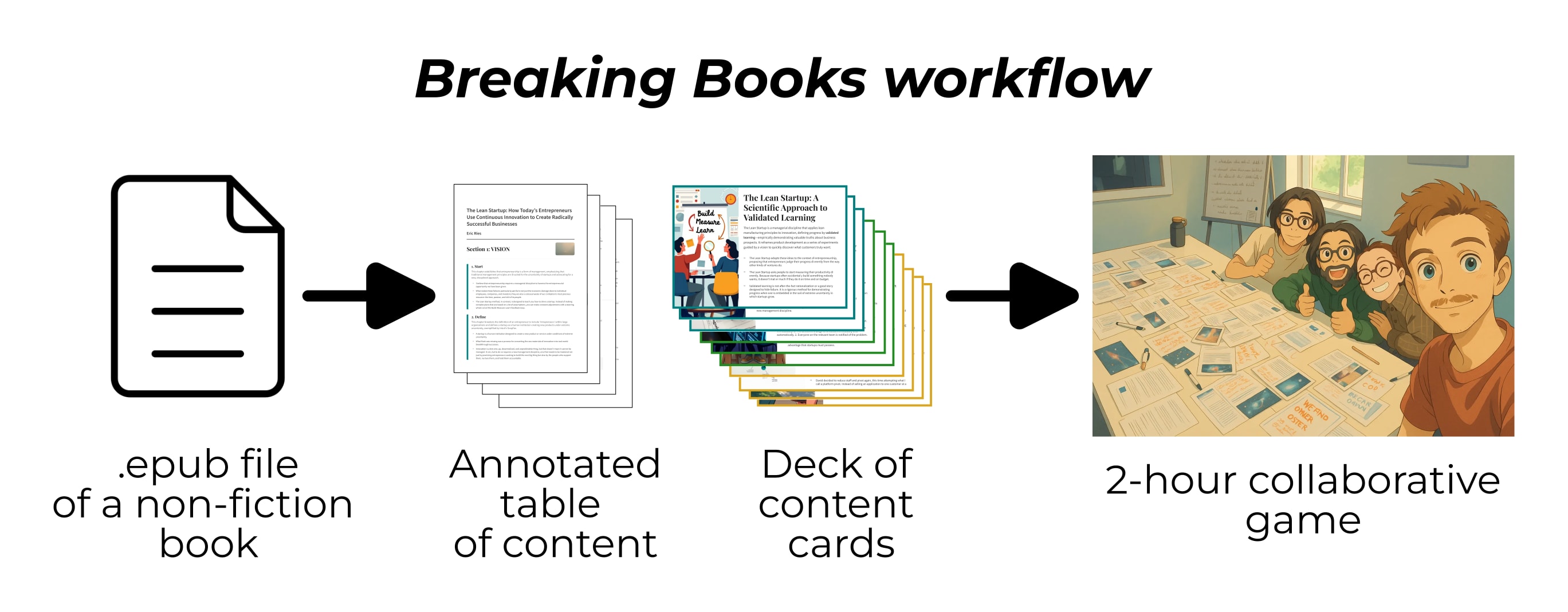

I started out using this book club format and wondered if we could push it further: can we design an event where you read nothing from the book beforehand?

For this, I built a tool for collective reading, where the participants piece the story of the book back together by organizing a mind map using cards designed by LLMs and diffusion models.

I ran ~10 workshops, and it works well enough that I keep organizing them!

If you'd like to learn more: https://www.lesswrong.com/posts/BnaKSQk6XvMYxHETS/breaking-books-a-tool-to-bring-books-to-the-social-sphere