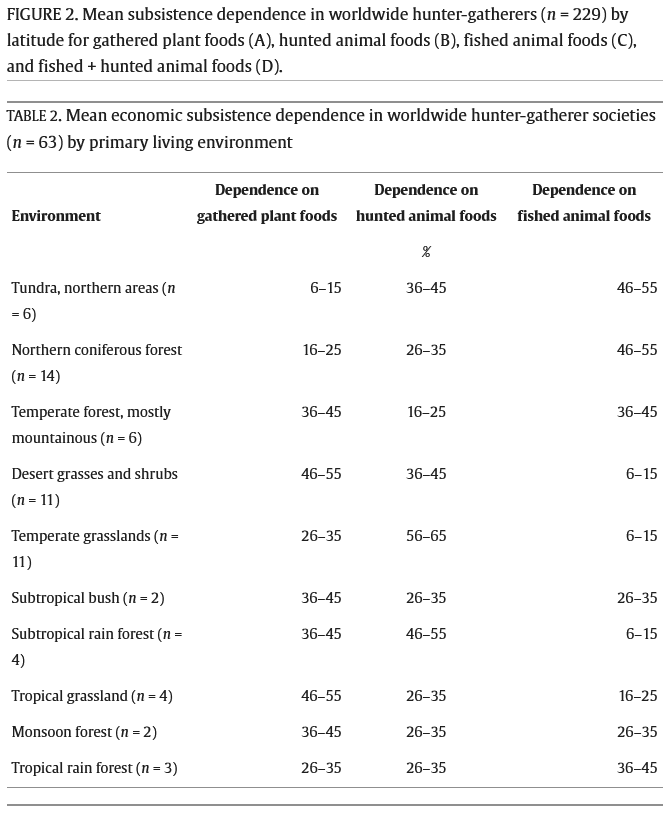

My point was not specifically that people up north eat an animal-based diet, but that ALL people everywhere do. We have been hunter-gatherers for a couple of hundred thousand years, and farmers for only a couple of thousand. To me it makes sense to look at what hunter-gatherers eat today (I know it's not a perfect proxy, but it's better than observing what people eat at McDonald's). As you can see, ALL present-day hunter-gatherers eat animal foods, and they eat a lot of them. We need fat and protein; they are essential for us. Carbs are not.

"An alternate explanation could imply that people who live in the tundra do end up aging faster as a result of actual deficiencies."

Do people age faster up north due to deficiencies? What is your source for that?

I am amazed at how "they" managed to dupe almost everyone with the idea that humans are "made" to eat diets based almost solely on grains and tubers (high glycemic index) and that animal foods (glycemic index = 0) were never meant for us. Some food for thought:

(Source: https://www.sciencedirect.com/science/article/pii/S0002916523070582)

Your "Intrigue -> Denial -> Appreciation -> Denial 2 -> Fear" cycle really hits the spot. I will filter future comments from AI pundits through this lens.

Thank you for an excellent post.

The results and studies discussed in the post further validate a feeling I have had about longevity for some time: there is not much a person living a "normal" life with decent eating, exercise, social, and sleeping habits can do to significantly extend their lifespan. There is no silver bullet that, starting from a reasonably "normal" health baseline, can routinely give you 1 or 2 extra years, let alone 5 or 10.

In this regard, current science has failed, and I think the whole longevity research community needs to reassess the way forward. No matter how much pill-swallowing, cold-bath-taking, HIIT-training, and sleep-optimizing they (we) do, it does not really work.

In the aftermath of the US bombing of Iran's nuclear enrichment facilities, I wonder how this will affect future possibilities of performing a 'Yudkowskyan strike' on datacenters. Will this effectively drive advanced AI development underground, into nuclear-proof and bunker-buster-proof locations?

The book will probably be a treat to read, but since "someone" (OpenAI, Anthropic, Google, META et al.) apparently WILL build "it", everyone WILL die. Oh well, we had a good run, I guess.

Design of the frontpage: No. I am a fan of the clean white design with the occasional AI-generated image. The current iteration reduces readability a lot.

Since a lot of people disagree with this, please tell me: what does a score of 100% mean, or say 50% or 37%? I am not writing this to provoke; I am genuinely interested to know.

Of course hunter-gatherers eat/ate carbs, but they did not base their diets on grains and beans. Fruits and berries "want" to get eaten. But... how long is the fruit and berry season? A few months if you are lucky. The rest of the time, animal foods are pretty much the only thing available. Sure, you can chew on the occasional root, but how many calories will that give you?

My stance is that more animal foods in people's diets would reverse some of the damage from the high-carb ultraprocessed foods in people's diets. I live in Sweden. The recommended maximum weekly red meat consumption is 350 grams. That is like one or two meals. To me that recommendation is madness when you look at insulin resistance and diabetes numbers. People need to get a bigger share of their energy from protein and fat, not less.