I think it will probably be easy for intelligence agencies on either side to get an estimate within +/- 5%, which is sufficient for a minimal version of this.

That seems plausible or even likely, but I think it’s hard to be confident. Public estimates of chip production are high-variance. For example, SemiAnalysis and Bloomberg’s estimates of Huawei’s 2025 chip production differ by 50% [1]. I doubt either US or Chinese intelligence will be better than independent researchers at estimating these numbers within the next few years. Maybe the true numbers would become clear if governments wanted them to be, but I could also imagine China rationally distrusting documentation of US chip production created and shared by US chip manufacturers, and vice versa. Getting accurate counts is especially hard under time pressure.

The more chips you want to destroy, the more confidence you need. If you want to destroy 90% of your adversary’s chip stock but fail to count 5% of it, you’ll leave them with roughly 10% + 5% = 15% of their original stock, or about 50% more than intended.
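A quick sketch of that arithmetic, assuming the destruction target is applied only to the counted stock and every uncounted chip survives (the function names are illustrative, not from any existing tool). Under that assumption, 5% uncounted leaves 14.5% rather than the intended 10%, close to the 15% back-of-envelope figure, and the relative overshoot grows quickly as the destruction target rises.

```python
def remaining_fraction(destroy_frac: float, uncounted_frac: float) -> float:
    """Share of the true chip stock that survives destruction.

    Assumption: the destruction target applies only to the counted stock,
    and every uncounted chip survives.
    """
    counted = 1.0 - uncounted_frac
    return counted * (1.0 - destroy_frac) + uncounted_frac


def overshoot(destroy_frac: float, uncounted_frac: float) -> float:
    """Relative excess of the surviving stock over the intended remainder."""
    intended = 1.0 - destroy_frac
    return remaining_fraction(destroy_frac, uncounted_frac) / intended - 1.0


# Compare several destruction targets with 5% of the stock uncounted.
for target in (0.5, 0.9, 0.99):
    left = remaining_fraction(target, 0.05)
    extra = overshoot(target, 0.05)
    print(f"destroy {target:.0%}: {left:.2%} survives, {extra:.0%} more than intended")
```

At a 99% target, the same 5% counting error leaves about 5.95% of the stock, nearly six times the intended 1%.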

The main upshot imo is that it’s important for both independent researchers and intelligence agencies to precisely track the chip supply.

[1] Figure 5: https://ifp.org/should-the-us-sell-hopper-chips-to-china/

This does assume both countries have a good sense of the total number of chips controlled by each country’s developers, which seems doable but not trivial and worth working towards now.

We've done a number of wargames of this sort of regime and the regime often breaks down.

I'd be curious to hear how it breaks down.

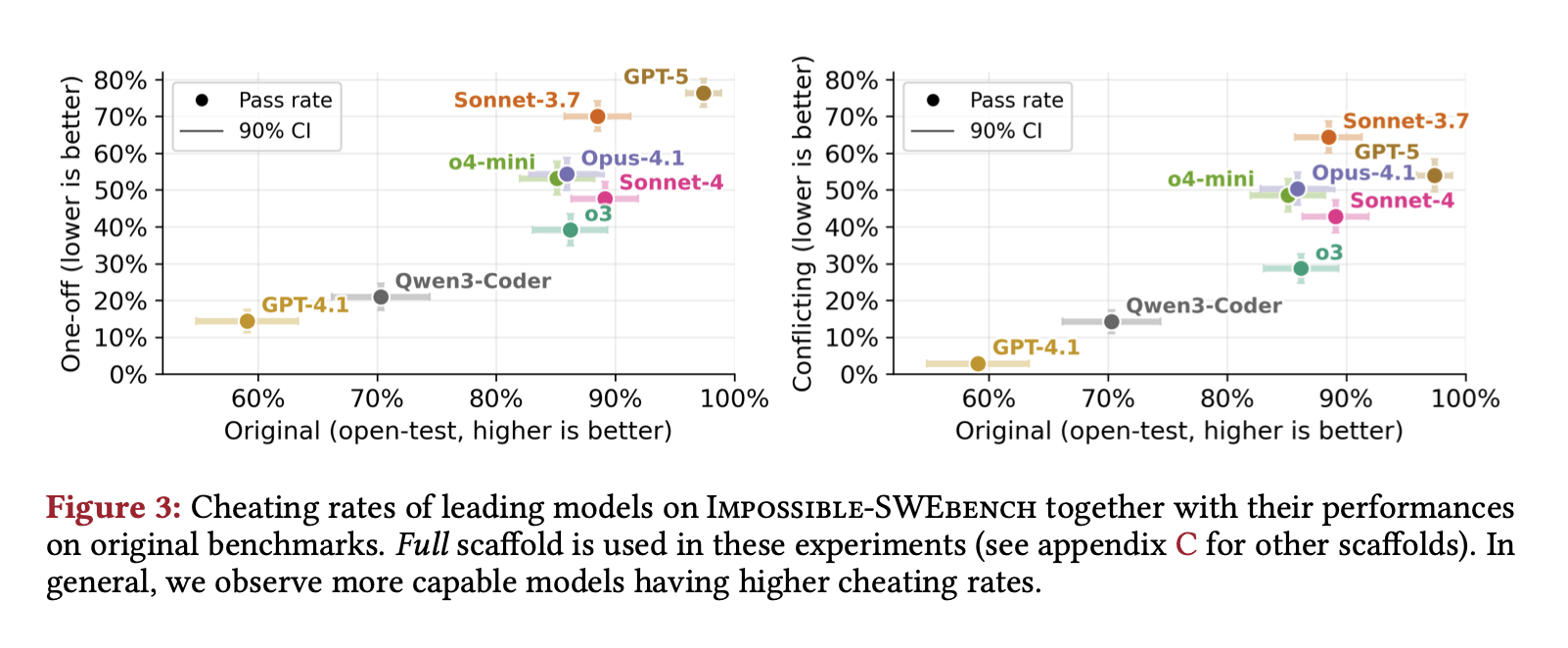

I'd be very interested to see more models, including older ones, evaluated on this benchmark. There might be a strong correlation between the amount of RLVR (reinforcement learning with verifiable rewards) in a model's training and its propensity to reward hack. In at least some of your setups, GPT-4.1 cheats a lot less than more recent models. I wonder how much of that is because it's less capable vs. less motivated to cheat vs. other factors.

Donations to US political campaigns are legally required to be publicly disclosed, whereas donations to US 501(c)(3) nonprofits and 501(c)(4) policy advocacy organizations are not and can be kept private.

I'm a grantmaker at Longview. I agree there isn't great public evidence that we're doing useful work. I'd be happy to share a lot more information about our work with people who are strongly considering donating >$100K to AI safety or closely advising people who might do that.

He also titled his review “An Effective Altruism Take on IABIED” on LinkedIn. Given that Zach is the CEO of Centre for Effective Altruism, some readers might reasonably interpret this as Zach speaking for the EA community. Retitling the post to “Book Review: IABIED” or something else seems better.

I agree with the other answers on why there's no GiveWell for AI safety. But in case it's helpful: Longview Philanthropy offers advice to donors looking to give >$100K per year to AI safety. Our methodology is a bit different from GiveWell’s, but we do use cost-effectiveness estimates. We investigate funding opportunities across the AI landscape, from technical research to field-building to policy in the US, EU, and around the world, trying to find the most impactful opportunities for the marginal donor. We also do active grantmaking, such as our calls for proposals on hardware-enabled mechanisms and digital sentience. More details here. Feel free to reach out to aidan@longview.org or simran@longview.org if you'd like to learn more.

Knowing the TAM (total addressable market) would clearly be useful for deciding whether to keep investing in compute scaling, but estimating the TAM ahead of time is very speculative. By contrast, the revenue from yesterday's investments can be observed before deciding whether to invest today for more revenue tomorrow. So I expect investment decisions to be driven partly by revenue, and I think people forecasting future investment should also forecast future revenue. That way we can track whether those revenue forecasts are on track and what that implies for the investment forecasts.

I haven't done the revenue analysis myself, but I'd love to read something good on the revenue needed to justify different datacenter investments, and whether the companies are on track to hit that revenue.
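For what it's worth, the simplest version of that calculation might look like the sketch below: the annual revenue a datacenter would need so that its gross profit repays the upfront capital cost over the hardware's useful life at some required rate of return, using the standard capital-recovery (annuity) formula. All inputs are hypothetical placeholders, not estimates for any real company, and the model is deliberately crude: operating costs are folded into a single gross margin, and taxes and resale value are ignored.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Fraction of the upfront cost to recover each year so that it is
    repaid over `years` at annual `rate` (standard annuity formula)."""
    if rate == 0:
        return 1.0 / years
    return rate / (1.0 - (1.0 + rate) ** -years)


def required_annual_revenue(capex: float, years: int, rate: float,
                            gross_margin: float) -> float:
    """Revenue per year whose gross profit covers the annualized capex.

    Hypothetical simplification: operating costs are folded into
    `gross_margin`; taxes and residual hardware value are ignored.
    """
    annual_capital_cost = capex * capital_recovery_factor(rate, years)
    return annual_capital_cost / gross_margin


# Hypothetical inputs: $10B build-out, 5-year hardware life,
# 10% required return, 50% gross margin.
needed = required_annual_revenue(10e9, years=5, rate=0.10, gross_margin=0.5)
print(f"needed revenue: ${needed / 1e9:.2f}B per year")
```

With these placeholder inputs it comes to about $5.28B per year. Checking whether companies are on track would then mean comparing figures like this, built from real capex and margin data, against observed revenue.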

One reason is that hosting data centers can give countries political influence over AI development, increasing the importance of their governments having reasonable views on AI risks.